Mirror of https://github.com/outbackdingo/cozystack.git (synced 2026-01-27 10:18:39 +00:00)

Preapare release v0.0.1

Signed-off-by: Andrei Kvapil <kvapss@gmail.com>

.dockerignore (Normal file, +1)

@@ -0,0 +1 @@

_out

.gitignore (vendored, Normal file, +1)

@@ -0,0 +1 @@

_out

LICENSE (Normal file, +201)

@@ -0,0 +1,201 @@

Apache License
Version 2.0, January 2004
http://www.apache.org/licenses/

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

1. Definitions.

"License" shall mean the terms and conditions for use, reproduction,
and distribution as defined by Sections 1 through 9 of this document.

"Licensor" shall mean the copyright owner or entity authorized by
the copyright owner that is granting the License.

"Legal Entity" shall mean the union of the acting entity and all
other entities that control, are controlled by, or are under common
control with that entity. For the purposes of this definition,
"control" means (i) the power, direct or indirect, to cause the
direction or management of such entity, whether by contract or
otherwise, or (ii) ownership of fifty percent (50%) or more of the
outstanding shares, or (iii) beneficial ownership of such entity.

"You" (or "Your") shall mean an individual or Legal Entity
exercising permissions granted by this License.

"Source" form shall mean the preferred form for making modifications,
including but not limited to software source code, documentation
source, and configuration files.

"Object" form shall mean any form resulting from mechanical
transformation or translation of a Source form, including but
not limited to compiled object code, generated documentation,
and conversions to other media types.

"Work" shall mean the work of authorship, whether in Source or
Object form, made available under the License, as indicated by a
copyright notice that is included in or attached to the work
(an example is provided in the Appendix below).

"Derivative Works" shall mean any work, whether in Source or Object
form, that is based on (or derived from) the Work and for which the
editorial revisions, annotations, elaborations, or other modifications
represent, as a whole, an original work of authorship. For the purposes
of this License, Derivative Works shall not include works that remain
separable from, or merely link (or bind by name) to the interfaces of,
the Work and Derivative Works thereof.

"Contribution" shall mean any work of authorship, including
the original version of the Work and any modifications or additions
to that Work or Derivative Works thereof, that is intentionally
submitted to Licensor for inclusion in the Work by the copyright owner
or by an individual or Legal Entity authorized to submit on behalf of
the copyright owner. For the purposes of this definition, "submitted"
means any form of electronic, verbal, or written communication sent
to the Licensor or its representatives, including but not limited to
communication on electronic mailing lists, source code control systems,
and issue tracking systems that are managed by, or on behalf of, the
Licensor for the purpose of discussing and improving the Work, but
excluding communication that is conspicuously marked or otherwise
designated in writing by the copyright owner as "Not a Contribution."

"Contributor" shall mean Licensor and any individual or Legal Entity
on behalf of whom a Contribution has been received by Licensor and
subsequently incorporated within the Work.

2. Grant of Copyright License. Subject to the terms and conditions of
this License, each Contributor hereby grants to You a perpetual,
worldwide, non-exclusive, no-charge, royalty-free, irrevocable
copyright license to reproduce, prepare Derivative Works of,
publicly display, publicly perform, sublicense, and distribute the
Work and such Derivative Works in Source or Object form.

3. Grant of Patent License. Subject to the terms and conditions of
this License, each Contributor hereby grants to You a perpetual,
worldwide, non-exclusive, no-charge, royalty-free, irrevocable
(except as stated in this section) patent license to make, have made,
use, offer to sell, sell, import, and otherwise transfer the Work,
where such license applies only to those patent claims licensable
by such Contributor that are necessarily infringed by their
Contribution(s) alone or by combination of their Contribution(s)
with the Work to which such Contribution(s) was submitted. If You
institute patent litigation against any entity (including a
cross-claim or counterclaim in a lawsuit) alleging that the Work
or a Contribution incorporated within the Work constitutes direct
or contributory patent infringement, then any patent licenses
granted to You under this License for that Work shall terminate
as of the date such litigation is filed.

4. Redistribution. You may reproduce and distribute copies of the
Work or Derivative Works thereof in any medium, with or without
modifications, and in Source or Object form, provided that You
meet the following conditions:

(a) You must give any other recipients of the Work or
Derivative Works a copy of this License; and

(b) You must cause any modified files to carry prominent notices
stating that You changed the files; and

(c) You must retain, in the Source form of any Derivative Works
that You distribute, all copyright, patent, trademark, and
attribution notices from the Source form of the Work,
excluding those notices that do not pertain to any part of
the Derivative Works; and

(d) If the Work includes a "NOTICE" text file as part of its
distribution, then any Derivative Works that You distribute must
include a readable copy of the attribution notices contained
within such NOTICE file, excluding those notices that do not
pertain to any part of the Derivative Works, in at least one
of the following places: within a NOTICE text file distributed
as part of the Derivative Works; within the Source form or
documentation, if provided along with the Derivative Works; or,
within a display generated by the Derivative Works, if and
wherever such third-party notices normally appear. The contents
of the NOTICE file are for informational purposes only and
do not modify the License. You may add Your own attribution
notices within Derivative Works that You distribute, alongside
or as an addendum to the NOTICE text from the Work, provided
that such additional attribution notices cannot be construed
as modifying the License.

You may add Your own copyright statement to Your modifications and
may provide additional or different license terms and conditions
for use, reproduction, or distribution of Your modifications, or
for any such Derivative Works as a whole, provided Your use,
reproduction, and distribution of the Work otherwise complies with
the conditions stated in this License.

5. Submission of Contributions. Unless You explicitly state otherwise,
any Contribution intentionally submitted for inclusion in the Work
by You to the Licensor shall be under the terms and conditions of
this License, without any additional terms or conditions.
Notwithstanding the above, nothing herein shall supersede or modify
the terms of any separate license agreement you may have executed
with Licensor regarding such Contributions.

6. Trademarks. This License does not grant permission to use the trade
names, trademarks, service marks, or product names of the Licensor,
except as required for reasonable and customary use in describing the
origin of the Work and reproducing the content of the NOTICE file.

7. Disclaimer of Warranty. Unless required by applicable law or
agreed to in writing, Licensor provides the Work (and each
Contributor provides its Contributions) on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
implied, including, without limitation, any warranties or conditions
of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
PARTICULAR PURPOSE. You are solely responsible for determining the
appropriateness of using or redistributing the Work and assume any
risks associated with Your exercise of permissions under this License.

8. Limitation of Liability. In no event and under no legal theory,
whether in tort (including negligence), contract, or otherwise,
unless required by applicable law (such as deliberate and grossly
negligent acts) or agreed to in writing, shall any Contributor be
liable to You for damages, including any direct, indirect, special,
incidental, or consequential damages of any character arising as a
result of this License or out of the use or inability to use the
Work (including but not limited to damages for loss of goodwill,
work stoppage, computer failure or malfunction, or any and all
other commercial damages or losses), even if such Contributor
has been advised of the possibility of such damages.

9. Accepting Warranty or Additional Liability. While redistributing
the Work or Derivative Works thereof, You may choose to offer,
and charge a fee for, acceptance of support, warranty, indemnity,
or other liability obligations and/or rights consistent with this
License. However, in accepting such obligations, You may act only
on Your own behalf and on Your sole responsibility, not on behalf
of any other Contributor, and only if You agree to indemnify,
defend, and hold each Contributor harmless for any liability
incurred by, or claims asserted against, such Contributor by reason
of your accepting any such warranty or additional liability.

END OF TERMS AND CONDITIONS

APPENDIX: How to apply the Apache License to your work.

To apply the Apache License to your work, attach the following
boilerplate notice, with the fields enclosed by brackets "[]"
replaced with your own identifying information. (Don't include
the brackets!) The text should be enclosed in the appropriate
comment syntax for the file format. We also recommend that a
file or class name and description of purpose be included on the
same "printed page" as the copyright notice for easier
identification within third-party archives.

Copyright [yyyy] [name of copyright owner]

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.

Makefile (Normal file, +15)

@@ -0,0 +1,15 @@

.PHONY: manifests repos assets

manifests:
	(cd packages/core/installer/; helm template -n cozy-installer installer .) > manifests/cozystack-installer.yaml

repos:
	rm -rf _out
	make -C packages/apps check-version-map
	make -C packages/extra check-version-map
	make -C packages/system repo
	make -C packages/apps repo
	make -C packages/extra repo

assets:
	make -C packages/core/talos/ assets

560

README.md

Normal file

560

README.md

Normal file

@@ -0,0 +1,560 @@

|

||||

|

||||

|

||||

[](https://opensource.org/)

|

||||

[](https://opensource.org/licenses/)

|

||||

[](https://aenix.io/contact-us/#meet)

|

||||

[](https://aenix.io/cozystack/)

|

||||

[](https://github.com/aenix-io/cozystack)

|

||||

[](https://github.com/aenix-io/cozystack)

|

||||

|

||||

# Cozystack

|

||||

|

||||

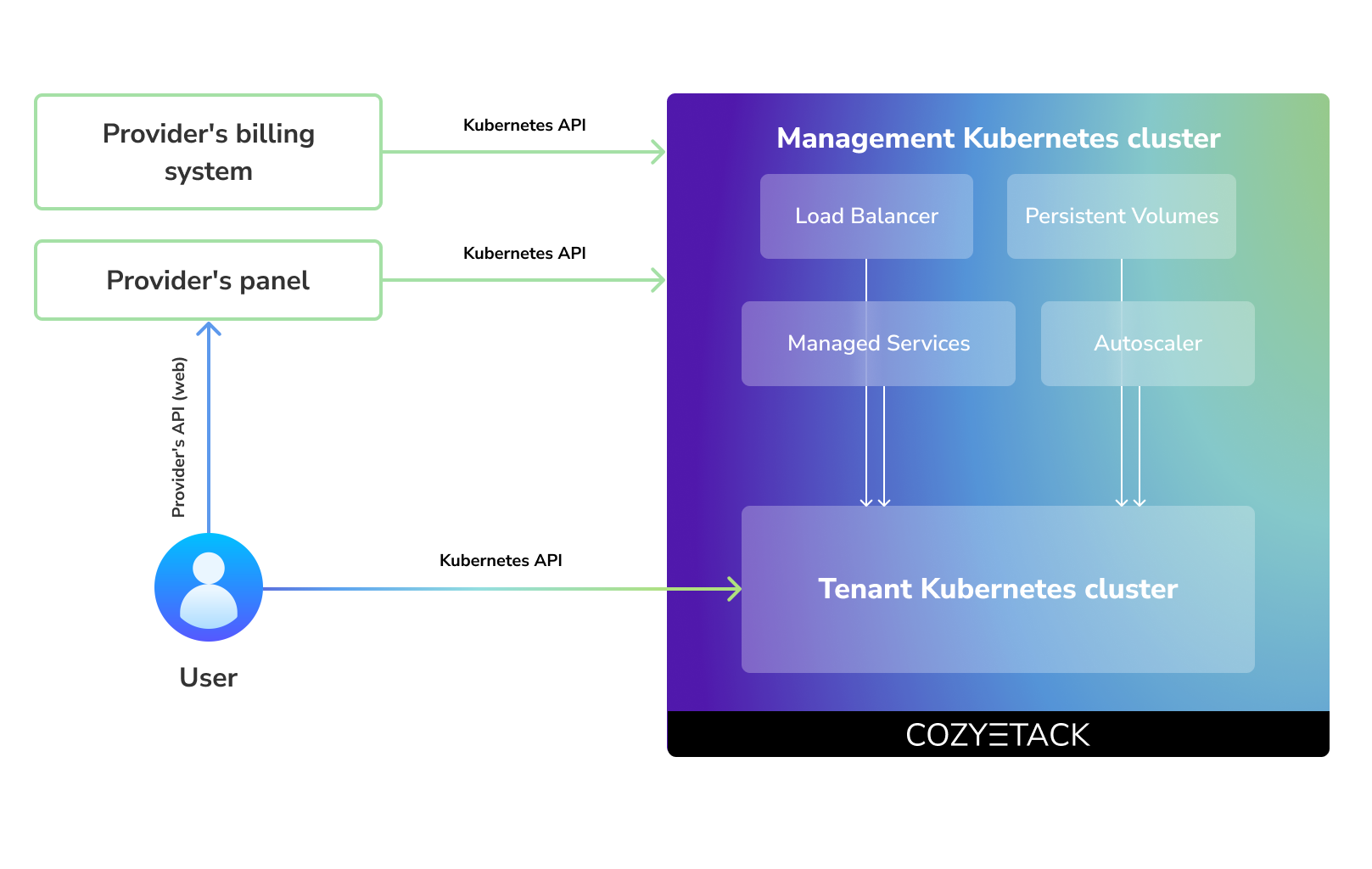

**Cozystack** is an open-source **PaaS platform** for cloud providers.

|

||||

|

||||

With Cozystack, you can transform your bunch of servers into an intelligent system with a simple REST API for spawning Kubernetes clusters, Database-as-a-Service, virtual machines, load balancers, HTTP caching services, and other services with ease.

|

||||

|

||||

You can use Cozystack to build your own cloud or to provide a cost-effective development environments.

|

||||

|

||||

## Use-Cases

|

||||

|

||||

### As a backend for a public cloud

|

||||

|

||||

Cozystack positions itself as a kind of framework for building public clouds. The key word here is framework. In this case, it's important to understand that Cozystack is made for cloud providers, not for end users.

|

||||

|

||||

Despite having a graphical interface, the current security model does not imply public user access to your management cluster.

|

||||

|

||||

Instead, end users get access to their own Kubernetes clusters, can order LoadBalancers and additional services from it, but they have no access and know nothing about your management cluster powered by Cozystack.

|

||||

|

||||

Thus, to integrate with your billing system, it's enough to teach your system to go to the management Kubernetes and place a YAML file signifying the service you're interested in. Cozystack will do the rest of the work for you.
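
A minimal sketch of such an integration follows. It assumes a service is requested as a Flux `HelmRelease` in the tenant's namespace, pointing at a HelmRepository named `cozystack-apps` in `cozy-public`. These names and the `render_service_request` helper are hypothetical illustrations, not a documented Cozystack API, so check the resources your installation actually uses before relying on them.

```bash
#!/usr/bin/env bash
# Hypothetical helper: render a service request for the management cluster.
# ASSUMPTION: services are requested as Flux HelmReleases that reference a
# HelmRepository "cozystack-apps" in namespace "cozy-public" (names not verified).
render_service_request() {
  local chart="$1" name="$2" namespace="$3"
  cat <<EOF
apiVersion: helm.toolkit.fluxcd.io/v2beta1
kind: HelmRelease
metadata:
  name: ${chart}-${name}
  namespace: ${namespace}
spec:
  interval: 1m
  chart:
    spec:
      chart: ${chart}
      sourceRef:
        kind: HelmRepository
        name: cozystack-apps
        namespace: cozy-public
EOF
}
```

A billing system would then pipe the output to the management cluster, for example `render_service_request postgres billing-db tenant-root | kubectl apply -f -`. Generating plain manifests keeps the integration a thin layer over the Kubernetes API.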

|

||||

|

||||

|

||||

|

||||

### As a private cloud for Infrastructure-as-Code

|

||||

|

||||

One of the use cases is a self-portal for users within your company, where they can order the service they're interested in or a managed database.

|

||||

|

||||

You can implement best GitOps practices, where users will launch their own Kubernetes clusters and databases for their needs with a simple commit of configuration into your infrastructure Git repository.

|

||||

|

||||

Thanks to the standardization of the approach to deploying applications, you can expand the platform's capabilities using the functionality of standard Helm charts.

|

||||

|

||||

### As a Kubernetes distribution for Bare Metal

|

||||

|

||||

We created Cozystack primarily for our own needs, having vast experience in building reliable systems on bare metal infrastructure. This experience led to the formation of a separate boxed product, which is aimed at standardizing and providing a ready-to-use tool for managing your infrastructure.

|

||||

|

||||

Currently, Cozystack already solves a huge scope of infrastructure tasks: starting from provisioning bare metal servers, having a ready monitoring system, fast and reliable storage, a network fabric with the possibility of interconnect with your infrastructure, the ability to run virtual machines, databases, and much more right out of the box.

|

||||

|

||||

All this makes Cozystack a convenient platform for delivering and launching your application on Bare Metal.

|

||||

|

||||

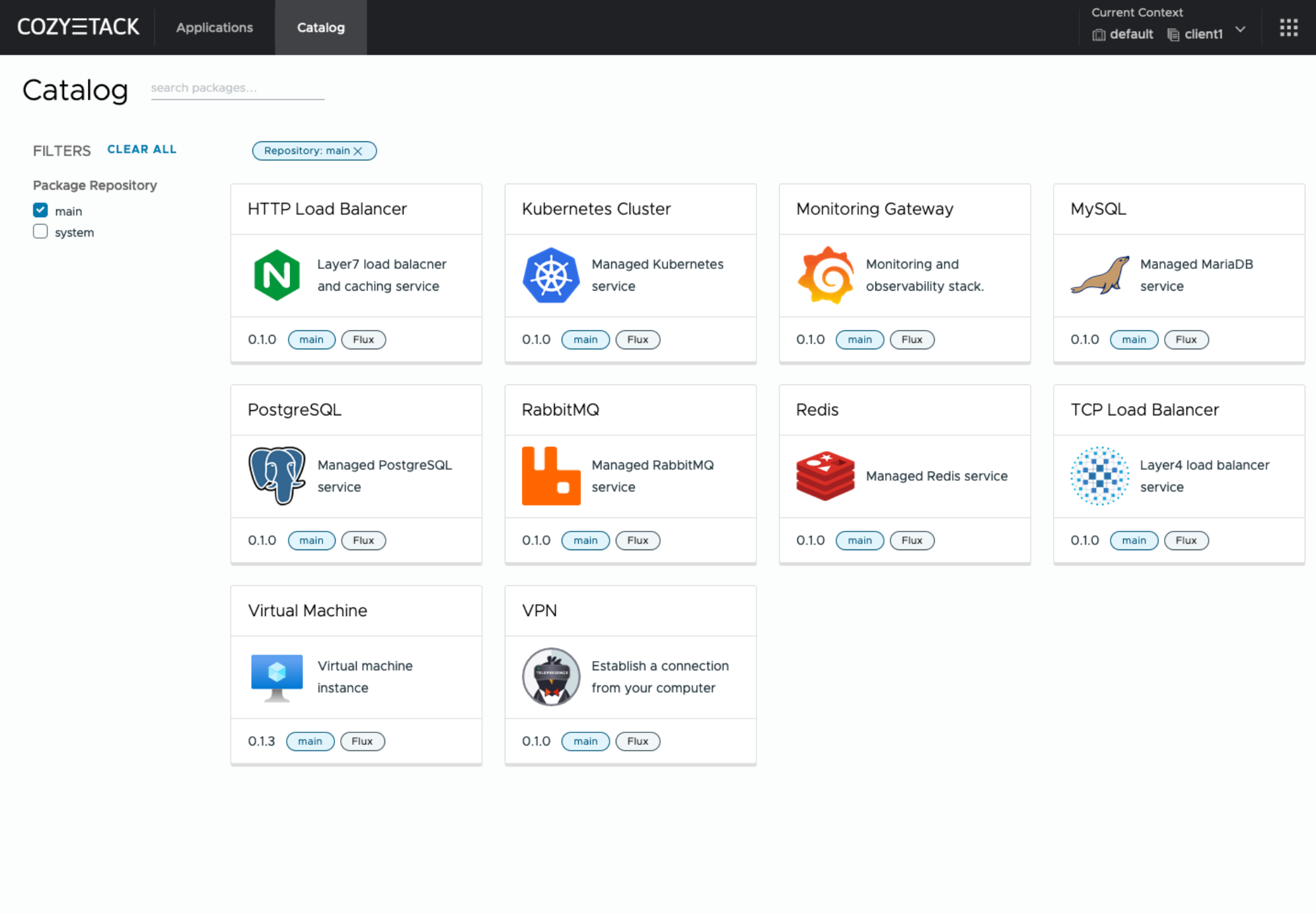

## Screenshot

|

||||

|

||||

|

||||

|

||||

## Core values

|

||||

|

||||

### Standardization and unification

|

||||

All components of the platform are based on open source tools and technologies which are widely known in the industry.

|

||||

|

||||

### Collaborate, not compete

|

||||

If a feature being developed for the platform could be useful to a upstream project, it should be contributed to upstream project, rather than being implemented within the platform.

|

||||

|

||||

### API-first

|

||||

Cozystack is based on Kubernetes and involves close interaction with its API. We don't aim to completely hide the all elements behind a pretty UI or any sort of customizations; instead, we provide a standard interface and teach users how to work with basic primitives. The web interface is used solely for deploying applications and quickly diving into basic concepts of platform.

|

||||

|

||||

## Quick Start

|

||||

|

||||

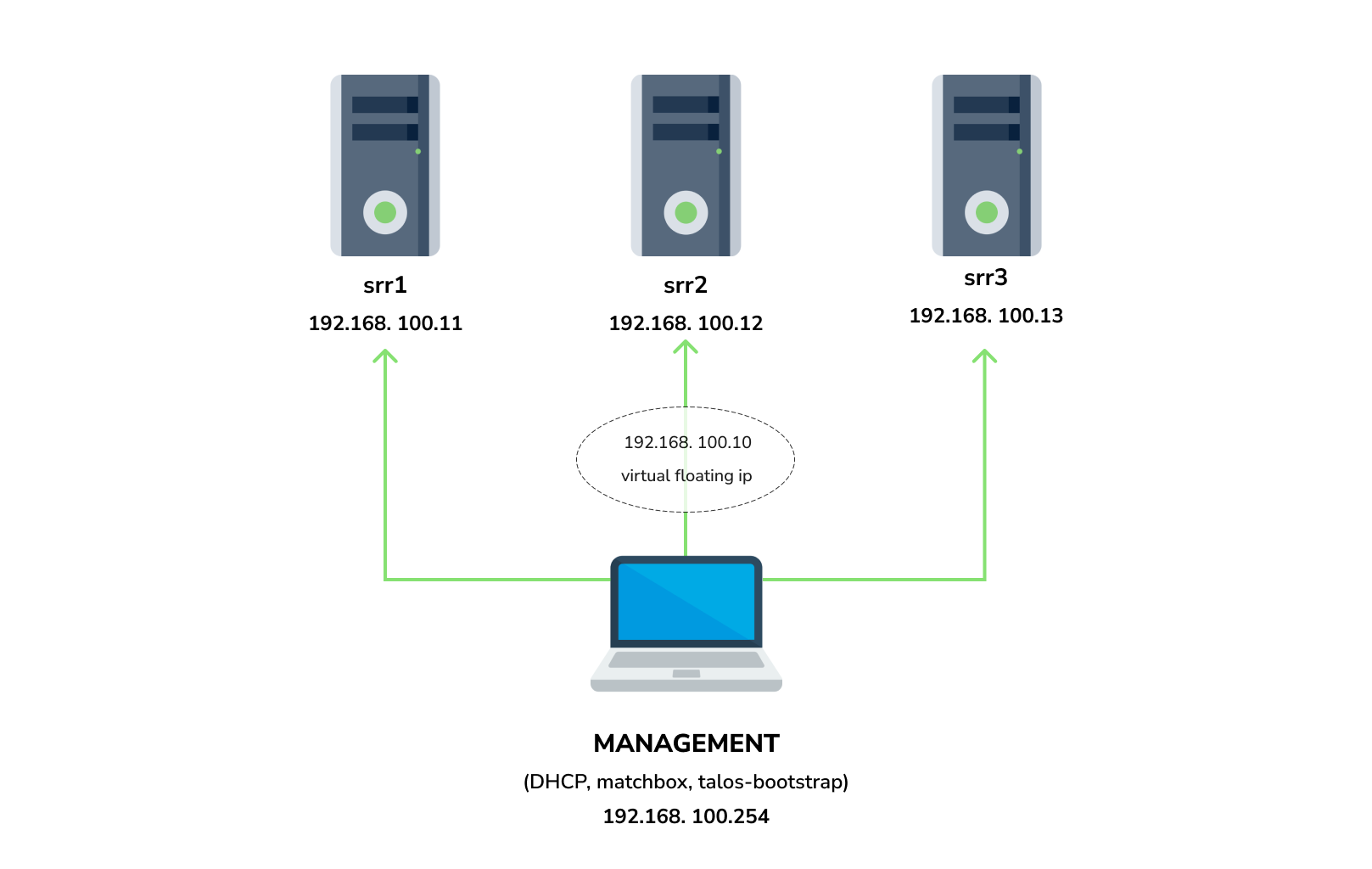

### Preapre infrastructure

|

||||

|

||||

|

||||

|

||||

|

||||

You need 3 physical servers or VMs with nested virtualisation:

|

||||

|

||||

```

|

||||

CPU: 4 cores

|

||||

CPU model: host

|

||||

RAM: 8-16 GB

|

||||

HDD1: 32 GB

|

||||

HDD2: 100GB (raw)

|

||||

```

|

||||

|

||||

And one management VM or physical server connected to the same network.

|

||||

Any Linux system installed on it (eg. Ubuntu should be enough)

|

||||

|

||||

**Note:** The VM should support `x86-64-v2` architecture, the most probably you can achieve this by setting cpu model to `host`
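
To check whether a machine meets the `x86-64-v2` requirement, you can compare the CPU flags Linux reports with the features that level requires: CMPXCHG16B, LAHF/SAHF, POPCNT, SSE3, SSSE3, SSE4.1 and SSE4.2. In `/proc/cpuinfo` these appear as `cx16`, `lahf_lm`, `popcnt`, `pni`, `ssse3`, `sse4_1` and `sse4_2`. The helpers below are an illustrative sketch, not part of Cozystack. Run them from any Linux system booted on the VM.

```bash
#!/usr/bin/env bash
# /proc/cpuinfo flag names for the x86-64-v2 feature level.
X86_64_V2_FLAGS=(cx16 lahf_lm popcnt pni ssse3 sse4_1 sse4_2)

# Print the x86-64-v2 flags missing from a space-separated flag list.
# Returns 0 if nothing is missing, 1 otherwise.
missing_v2_flags() {
  local flags=" $1 " missing=() f
  for f in "${X86_64_V2_FLAGS[@]}"; do
    [[ "$flags" == *" $f "* ]] || missing+=("$f")
  done
  if (( ${#missing[@]} )); then
    echo "${missing[*]}"
    return 1
  fi
  return 0
}

# Flags of the first CPU listed in /proc/cpuinfo (Linux only).
cpu_flags() {
  awk -F': ' '/^flags/ { print $2; exit }' /proc/cpuinfo
}
```

Usage: `missing_v2_flags "$(cpu_flags)" && echo "x86-64-v2 OK"`. If flags are reported missing on a VM, changing the CPU model to `host` is the first thing to try.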

#### Install dependencies:

- `docker`
- `talosctl`
- `dialog`
- `nmap`
- `make`
- `yq`
- `kubectl`
- `helm`
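
Before continuing, you can confirm that every tool in the list above is on the management host's `PATH`. This is a small sketch using the shell builtin `command -v`. The `missing_tools` helper is our own, not part of Cozystack.

```bash
#!/usr/bin/env bash
# Tools listed in the dependency list above.
REQUIRED_TOOLS=(docker talosctl dialog nmap make yq kubectl helm)

# Print every tool given as an argument that is not found in PATH.
# Returns 1 if at least one tool is missing.
missing_tools() {
  local tool status=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
      status=1
    fi
  done
  return "$status"
}
```

Usage: `missing_tools "${REQUIRED_TOOLS[@]}" || echo "install the tools listed above first"`.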

### Netboot server

Start matchbox with the prebuilt Talos image for Cozystack:

```bash
sudo docker run --name=matchbox -d --net=host ghcr.io/aenix-io/cozystack/matchbox:v0.0.1 \
  -address=:8080 \
  -log-level=debug
```

Start the DHCP server:

```bash
sudo docker run --name=dnsmasq -d --cap-add=NET_ADMIN --net=host quay.io/poseidon/dnsmasq \
  -d -q -p0 \
  --dhcp-range=192.168.100.3,192.168.100.254 \
  --dhcp-option=option:router,192.168.100.1 \
  --enable-tftp \
  --tftp-root=/var/lib/tftpboot \
  --dhcp-match=set:bios,option:client-arch,0 \
  --dhcp-boot=tag:bios,undionly.kpxe \
  --dhcp-match=set:efi32,option:client-arch,6 \
  --dhcp-boot=tag:efi32,ipxe.efi \
  --dhcp-match=set:efibc,option:client-arch,7 \
  --dhcp-boot=tag:efibc,ipxe.efi \
  --dhcp-match=set:efi64,option:client-arch,9 \
  --dhcp-boot=tag:efi64,ipxe.efi \
  --dhcp-userclass=set:ipxe,iPXE \
  --dhcp-boot=tag:ipxe,http://192.168.100.254:8080/boot.ipxe \
  --log-queries \
  --log-dhcp
```

Where:

- `192.168.100.3,192.168.100.254` is the range to allocate IPs from
- `192.168.100.1` is your gateway
- `192.168.100.254` is the address of your management server
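
When you adapt these values to your network, it can help to check how the chosen addresses relate to the DHCP range. In this example the management server address `192.168.100.254` lies inside the range `192.168.100.3`-`192.168.100.254`. dnsmasq pings an address before leasing it by default, but ending the range below the server's address avoids relying on that. The helpers below are an illustrative sketch for IPv4 only.

```bash
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip2int() {
  local IFS=. a b c d
  read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if address $1 lies in the inclusive range [$2, $3].
ip_in_range() {
  local ip lo hi
  ip=$(ip2int "$1"); lo=$(ip2int "$2"); hi=$(ip2int "$3")
  (( ip >= lo && ip <= hi ))
}
```

For example, `ip_in_range 192.168.100.254 192.168.100.3 192.168.100.254 && echo "management address is inside the DHCP range"` prints the warning. The gateway `192.168.100.1` is outside the range.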

Check the status of the containers:

```bash
docker ps
```

Example output:

```console
CONTAINER ID   IMAGE                                        COMMAND                  CREATED          STATUS          PORTS     NAMES
22044f26f74d   quay.io/poseidon/dnsmasq                     "/usr/sbin/dnsmasq -…"   6 seconds ago    Up 5 seconds              dnsmasq
231ad81ff9e0   ghcr.io/aenix-io/cozystack/matchbox:v0.0.1   "/matchbox -address=…"   58 seconds ago   Up 57 seconds             matchbox
```

### Bootstrap cluster

Write the Talos configuration patches for Cozystack:

```yaml
cat > patch.yaml <<\EOT
machine:
  kubelet:
    nodeIP:
      validSubnets:
      - 192.168.100.0/24
  kernel:
    modules:
    - name: openvswitch
    - name: drbd
      parameters:
        - usermode_helper=disabled
    - name: zfs
  install:
    image: ghcr.io/aenix-io/cozystack/talos:v1.6.4
  files:
  - content: |
      [plugins]
        [plugins."io.containerd.grpc.v1.cri"]
          device_ownership_from_security_context = true
    path: /etc/cri/conf.d/20-customization.part
    op: create

cluster:
  network:
    cni:
      name: none
    podSubnets:
    - 10.244.0.0/16
    serviceSubnets:
    - 10.96.0.0/16
EOT

cat > patch-controlplane.yaml <<\EOT
cluster:
  allowSchedulingOnControlPlanes: true
  controllerManager:
    extraArgs:
      bind-address: 0.0.0.0
  scheduler:
    extraArgs:
      bind-address: 0.0.0.0
  apiServer:
    certSANs:
    - 127.0.0.1
  proxy:
    disabled: true
  discovery:
    enabled: false
  etcd:
    advertisedSubnets:
    - 192.168.100.0/24
EOT
```

Run [talos-bootstrap](https://github.com/aenix-io/talos-bootstrap/) to deploy the cluster:

```bash
talos-bootstrap install
```

Save the admin kubeconfig to access your Kubernetes cluster:

```bash
mkdir -p ~/.kube
cp -i kubeconfig ~/.kube/config
```

Check the connection:

```bash
kubectl get ns
```

Example output:

```console
NAME              STATUS   AGE
default           Active   7m56s
kube-node-lease   Active   7m56s
kube-public       Active   7m56s
kube-system       Active   7m56s
```

### Install Cozystack

Write the config for Cozystack:

**Note:** Make sure you use the same settings as specified in the `patch.yaml` and `patch-controlplane.yaml` files.

```yaml
cat > cozystack-config.yaml <<\EOT
apiVersion: v1
kind: ConfigMap
metadata:
  name: cozystack
  namespace: cozy-system
data:
  cluster-name: "cozystack"
  ipv4-pod-cidr: "10.244.0.0/16"
  ipv4-pod-gateway: "10.244.0.1"
  ipv4-svc-cidr: "10.96.0.0/16"
  ipv4-join-cidr: "100.64.0.0/16"
EOT
```
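
These values must agree with the Talos patches. `ipv4-pod-cidr` and `ipv4-svc-cidr` correspond to `podSubnets` and `serviceSubnets` in `patch.yaml`. In this example, `ipv4-pod-gateway` is the first host address of the pod CIDR. The pod, service, join and node (`192.168.100.0/24`) networks also do not overlap. The sketch below checks both properties. The helpers are our own, IPv4 only.

```bash
#!/usr/bin/env bash
ip2int() {
  local IFS=. a b c d
  read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

int2ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

# Print "<network address as integer> <number of addresses>" for a CIDR.
cidr_bounds() {
  local addr=${1%/*} bits=${1#*/} size
  size=$(( 1 << (32 - bits) ))
  echo "$(( $(ip2int "$addr") & ~(size - 1) & 0xFFFFFFFF )) $size"
}

# First host address of a CIDR (network address + 1).
first_host() {
  local net size
  read -r net size <<<"$(cidr_bounds "$1")"
  int2ip $(( net + 1 ))
}

# Succeed if two CIDRs share at least one address.
cidrs_overlap() {
  local n1 s1 n2 s2
  read -r n1 s1 <<<"$(cidr_bounds "$1")"
  read -r n2 s2 <<<"$(cidr_bounds "$2")"
  (( n1 < n2 + s2 && n2 < n1 + s1 ))
}
```

For example, `first_host 10.244.0.0/16` prints `10.244.0.1`, matching `ipv4-pod-gateway`. `cidrs_overlap 10.244.0.0/16 10.96.0.0/16` fails, which means the pod and service networks are disjoint.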

Create the namespace and install the Cozystack system components:

```bash
kubectl create ns cozy-system
kubectl apply -f cozystack-config.yaml
kubectl apply -f manifests/cozystack-installer.yaml
```

(optional) You can follow the installer logs:

```bash
kubectl logs -n cozy-system deploy/cozystack -f
```

Wait for a while, then check the status of the installation:

```bash
kubectl get hr -A
```

Wait until all releases reach the `Ready` state:

```console
NAMESPACE                        NAME                        AGE    READY   STATUS
cozy-cert-manager                cert-manager                4m1s   True    Release reconciliation succeeded
cozy-cert-manager                cert-manager-issuers        4m1s   True    Release reconciliation succeeded
cozy-cilium                      cilium                      4m1s   True    Release reconciliation succeeded
cozy-cluster-api                 capi-operator               4m1s   True    Release reconciliation succeeded
cozy-cluster-api                 capi-providers              4m1s   True    Release reconciliation succeeded
cozy-dashboard                   dashboard                   4m1s   True    Release reconciliation succeeded
cozy-fluxcd                      cozy-fluxcd                 4m1s   True    Release reconciliation succeeded
cozy-grafana-operator            grafana-operator            4m1s   True    Release reconciliation succeeded
cozy-kamaji                      kamaji                      4m1s   True    Release reconciliation succeeded
cozy-kubeovn                     kubeovn                     4m1s   True    Release reconciliation succeeded
cozy-kubevirt-cdi                kubevirt-cdi                4m1s   True    Release reconciliation succeeded
cozy-kubevirt-cdi                kubevirt-cdi-operator       4m1s   True    Release reconciliation succeeded
cozy-kubevirt                    kubevirt                    4m1s   True    Release reconciliation succeeded
cozy-kubevirt                    kubevirt-operator           4m1s   True    Release reconciliation succeeded
cozy-linstor                     linstor                     4m1s   True    Release reconciliation succeeded
cozy-linstor                     piraeus-operator            4m1s   True    Release reconciliation succeeded
cozy-mariadb-operator            mariadb-operator            4m1s   True    Release reconciliation succeeded
cozy-metallb                     metallb                     4m1s   True    Release reconciliation succeeded
cozy-monitoring                  monitoring                  4m1s   True    Release reconciliation succeeded
cozy-postgres-operator           postgres-operator           4m1s   True    Release reconciliation succeeded
cozy-rabbitmq-operator           rabbitmq-operator           4m1s   True    Release reconciliation succeeded
cozy-redis-operator              redis-operator              4m1s   True    Release reconciliation succeeded
cozy-telepresence                telepresence                4m1s   True    Release reconciliation succeeded
cozy-victoria-metrics-operator   victoria-metrics-operator   4m1s   True    Release reconciliation succeeded
tenant-root                      tenant-root                 4m1s   True    Release reconciliation succeeded
```
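
Instead of re-running `kubectl get hr -A` by hand, you could poll until every release reports `True` in the `READY` column. This sketch assumes the column layout shown above (NAMESPACE, NAME, AGE, READY, STATUS) and uses `--no-headers`. The helper names are our own.

```bash
#!/usr/bin/env bash
# Read `kubectl get hr -A --no-headers` output on stdin and print
# "namespace/name" for every release whose READY column is not "True".
not_ready_releases() {
  awk '$4 != "True" { print $1 "/" $2 }'
}

# Poll every $1 seconds (default 10) until all HelmReleases are Ready.
wait_for_releases() {
  local interval=${1:-10} out pending
  while :; do
    # Skip this round if kubectl fails or lists nothing yet.
    if out=$(kubectl get hr -A --no-headers 2>/dev/null) && [ -n "$out" ]; then
      pending=$(printf '%s\n' "$out" | not_ready_releases)
      if [ -z "$pending" ]; then
        echo "all HelmReleases are Ready"
        return 0
      fi
      echo "still waiting for: ${pending//$'\n'/ }"
    fi
    sleep "$interval"
  done
}
```

`wait_for_releases 15` polls every 15 seconds. It has no timeout, so interrupt it with Ctrl-C if a release seems stuck, then inspect that release with `kubectl describe hr -n <namespace> <name>`.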

#### Configure Storage

Set up an alias to access LINSTOR:

```bash
alias linstor='kubectl exec -n cozy-linstor deploy/linstor-controller -- linstor'
```

List your nodes:

```bash
linstor node list
```

Example output:

```console
+-------------------------------------------------------+
| Node | NodeType  | Addresses                 | State  |
|=======================================================|
| srv1 | SATELLITE | 192.168.100.11:3367 (SSL) | Online |
| srv2 | SATELLITE | 192.168.100.12:3367 (SSL) | Online |
| srv3 | SATELLITE | 192.168.100.13:3367 (SSL) | Online |
+-------------------------------------------------------+
```

List empty devices:

```bash
linstor physical-storage list
```

Example output:

```console
+--------------------------------------------+
| Size         | Rotational | Nodes          |
|============================================|
| 107374182400 | True       | srv3[/dev/sdb] |
|              |            | srv1[/dev/sdb] |
|              |            | srv2[/dev/sdb] |
+--------------------------------------------+
```
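
The `Size` column is reported in bytes. Converting it shows that the device found on each node is the 100 GB raw `HDD2` disk from the hardware requirements: 107374182400 / 1024³ = 100 GiB. This is the capacity the `data` pools will report. A small conversion helper, for illustration only:

```bash
#!/usr/bin/env bash
# Convert a byte count (as printed by `linstor physical-storage list`)
# to GiB with two decimals, using integer arithmetic only.
bytes_to_gib() {
  local bytes=$1 gib=$((1024 * 1024 * 1024))
  local hundredths=$(( bytes * 100 / gib ))
  printf '%d.%02d GiB\n' $(( hundredths / 100 )) $(( hundredths % 100 ))
}
```

For example, `bytes_to_gib 107374182400` prints `100.00 GiB`.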

Create storage pools:

```bash
linstor ps cdp lvm srv1 /dev/sdb --pool-name data --storage-pool data
linstor ps cdp lvm srv2 /dev/sdb --pool-name data --storage-pool data
linstor ps cdp lvm srv3 /dev/sdb --pool-name data --storage-pool data
```
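
Bash does not expand aliases in non-interactive scripts by default. If you want to script these steps, a function wrapper is more reliable than the `linstor` alias. The sketch below creates the same `data` pool on every node given to it. It assumes each node exposes its empty disk at the same path, `/dev/sdb` in the output above. `create_data_pools` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Function equivalent of the `linstor` alias; also works inside scripts.
linstor() {
  kubectl exec -n cozy-linstor deploy/linstor-controller -- linstor "$@"
}

# Create an LVM-backed storage pool named "data" on the given device of each node.
# ASSUMPTION: every node uses the same device path.
create_data_pools() {
  local device=$1 node
  shift
  for node in "$@"; do
    linstor ps cdp lvm "$node" "$device" --pool-name data --storage-pool data || return 1
  done
}
```

Usage: `create_data_pools /dev/sdb srv1 srv2 srv3`. If you already defined the alias in an interactive shell, run `unalias linstor` before defining the function. Otherwise the alias is expanded inside the function definition and bash reports a syntax error.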

List storage pools:

```bash
linstor sp l
```

Example output:

```console
+-------------------------------------------------------------------------------------------------------------------------------------+
| StoragePool          | Node | Driver   | PoolName | FreeCapacity | TotalCapacity | CanSnapshots | State | SharedName                |
|=====================================================================================================================================|
| DfltDisklessStorPool | srv1 | DISKLESS |          |              |               | False        | Ok    | srv1;DfltDisklessStorPool |
| DfltDisklessStorPool | srv2 | DISKLESS |          |              |               | False        | Ok    | srv2;DfltDisklessStorPool |
| DfltDisklessStorPool | srv3 | DISKLESS |          |              |               | False        | Ok    | srv3;DfltDisklessStorPool |
| data                 | srv1 | LVM      | data     |   100.00 GiB |    100.00 GiB | False        | Ok    | srv1;data                 |
| data                 | srv2 | LVM      | data     |   100.00 GiB |    100.00 GiB | False        | Ok    | srv2;data                 |
| data                 | srv3 | LVM      | data     |   100.00 GiB |    100.00 GiB | False        | Ok    | srv3;data                 |
+-------------------------------------------------------------------------------------------------------------------------------------+
```

Create default storage classes:

```yaml
kubectl create -f- <<EOT
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: linstor.csi.linbit.com
parameters:
  linstor.csi.linbit.com/storagePool: "data"
  linstor.csi.linbit.com/layerList: "storage"
  linstor.csi.linbit.com/allowRemoteVolumeAccess: "false"
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: replicated
provisioner: linstor.csi.linbit.com
parameters:
  linstor.csi.linbit.com/storagePool: "data"
  linstor.csi.linbit.com/autoPlace: "3"
  linstor.csi.linbit.com/layerList: "drbd storage"
  linstor.csi.linbit.com/allowRemoteVolumeAccess: "true"
  property.linstor.csi.linbit.com/DrbdOptions/auto-quorum: suspend-io
  property.linstor.csi.linbit.com/DrbdOptions/Resource/on-no-data-accessible: suspend-io
  property.linstor.csi.linbit.com/DrbdOptions/Resource/on-suspended-primary-outdated: force-secondary
  property.linstor.csi.linbit.com/DrbdOptions/Net/rr-conflict: retry-connect
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
EOT
```

List storage classes:

```bash
kubectl get storageclasses
```

Example output:

```console
NAME              PROVISIONER              RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
local (default)   linstor.csi.linbit.com   Delete          WaitForFirstConsumer   true                   11m
replicated        linstor.csi.linbit.com   Delete          WaitForFirstConsumer   true                   11m
```
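
Both classes use `volumeBindingMode: WaitForFirstConsumer`. A new claim therefore stays `Pending` until a pod that uses it is scheduled, and only then is the volume provisioned. To smoke-test a class, you can create a claim and attach it to a pod. The sketch below only renders the claim. The claim name and default size are arbitrary examples.

```bash
#!/usr/bin/env bash
# Print a PersistentVolumeClaim manifest for the given storage class.
# Name, class and size (default 1Gi) are supplied by the caller.
pvc_manifest() {
  local name=$1 class=$2 size=${3:-1Gi}
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: ${class}
  resources:
    requests:
      storage: ${size}
EOF
}
```

Usage: `pvc_manifest test-replicated replicated | kubectl apply -f -`. `kubectl get pvc test-replicated` should then show `Pending` until a pod mounts the claim.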

#### Configure Networking interconnection

To access your services, select a range of unused IPs, e.g. `192.168.100.200-192.168.100.250`.

**Note:** These IPs should be in the same network as the nodes, or all the necessary routes to them must be in place.

Configure MetalLB to use and announce this range:

```yaml
kubectl create -f- <<EOT
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: cozystack
  namespace: cozy-metallb
spec:
  ipAddressPools:
  - cozystack
---
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: cozystack
  namespace: cozy-metallb
spec:
  addresses:
  - 192.168.100.200-192.168.100.250
  autoAssign: true
  avoidBuggyIPs: false
EOT
```

#### Set up basic applications

Get the token for `tenant-root`:

```bash
kubectl get secret -n tenant-root tenant-root -o go-template='{{ printf "%s\n" (index .data "token" | base64decode) }}'
```
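
The go-template above decodes the secret inside kubectl. The sketch below is an equivalent approach: it reads the raw value with JSONPath and decodes it locally. It assumes a `base64` that accepts `-d`, such as GNU coreutils. The function name is hypothetical.

```bash
# Print the tenant-root token: fetch the base64-encoded "token" key from the
# tenant-root secret with JSONPath, then decode it locally.
tenant_root_token() {
  kubectl get secret -n tenant-root tenant-root -o jsonpath='{.data.token}' | base64 -d
  echo   # trailing newline, like the printf "%s\n" in the go-template
}
```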

Enable port forwarding to the dashboard:

```bash
kubectl port-forward -n cozy-dashboard svc/dashboard 8080:80
```

Open http://localhost:8080/ in your browser, then:

- Select `tenant-root`
- Click the `Upgrade` button
- In `host`, enter the domain you want to use as the parent domain for all deployed applications.

  **Note:**
  - If you don't have a domain yet, you can use `192.168.100.200.nip.io`, where `192.168.100.200` is the first IP address of your address range.
  - Alternatively, you can leave the default value. However, you will then need to edit your `/etc/hosts` every time you want to access a specific application.
- Set `etcd`, `monitoring` and `ingress` to the enabled position
- Click `Deploy`
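
You can derive the nip.io value for `host` from the MetalLB range configured earlier. nip.io resolves `<ip>.nip.io`, and any name under it such as `grafana.<ip>.nip.io`, to `<ip>`. For the `/etc/hosts` route, a single line per IP can list every application hostname you need. This is a minimal sketch: the helper names are hypothetical, and `grafana` is only an example application name, not a complete list.

```bash
# Derive a nip.io parent domain from a MetalLB range such as
# "192.168.100.200-192.168.100.250": take the first address and append .nip.io.
nipio_domain() {
  local range="$1"
  echo "${range%%-*}.nip.io"
}

# hosts_line IP DOMAIN APP...
# Print one /etc/hosts line that maps APP.DOMAIN, for every APP, to IP.
hosts_line() {
  local ip="$1" domain="$2" app
  shift 2
  local names=()
  for app in "$@"; do
    names+=("${app}.${domain}")
  done
  echo "${ip} ${names[*]}"
}

nipio_domain 192.168.100.200-192.168.100.250    # prints 192.168.100.200.nip.io
hosts_line 192.168.100.200 example.org grafana   # prints 192.168.100.200 grafana.example.org
```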

Check that the persistent volumes have been provisioned:

```bash
kubectl get pvc -n tenant-root
```

Example output:

```console
NAME                                     STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   VOLUMEATTRIBUTESCLASS   AGE
data-etcd-0                              Bound    pvc-4cbd29cc-a29f-453d-b412-451647cd04bf   10Gi       RWO            local          <unset>                 2m10s
data-etcd-1                              Bound    pvc-1579f95a-a69d-4a26-bcc2-b15ccdbede0d   10Gi       RWO            local          <unset>                 115s
data-etcd-2                              Bound    pvc-907009e5-88bf-4d18-91e7-b56b0dbfb97e   10Gi       RWO            local          <unset>                 91s
grafana-db-1                             Bound    pvc-7b3f4e23-228a-46fd-b820-d033ef4679af   10Gi       RWO            local          <unset>                 2m41s
grafana-db-2                             Bound    pvc-ac9b72a4-f40e-47e8-ad24-f50d843b55e4   10Gi       RWO            local          <unset>                 113s
vmselect-cachedir-vmselect-longterm-0    Bound    pvc-622fa398-2104-459f-8744-565eee0a13f1   2Gi        RWO            local          <unset>                 2m21s
vmselect-cachedir-vmselect-longterm-1    Bound    pvc-fc9349f5-02b2-4e25-8bef-6cbc5cc6d690   2Gi        RWO            local          <unset>                 2m21s
vmselect-cachedir-vmselect-shortterm-0   Bound    pvc-7acc7ff6-6b9b-4676-bd1f-6867ea7165e2   2Gi        RWO            local          <unset>                 2m41s
vmselect-cachedir-vmselect-shortterm-1   Bound    pvc-e514f12b-f1f6-40ff-9838-a6bda3580eb7   2Gi        RWO            local          <unset>                 2m40s
vmstorage-db-vmstorage-longterm-0        Bound    pvc-e8ac7fc3-df0d-4692-aebf-9f66f72f9fef   10Gi       RWO            local          <unset>                 2m21s
vmstorage-db-vmstorage-longterm-1        Bound    pvc-68b5ceaf-3ed1-4e5a-9568-6b95911c7c3a   10Gi       RWO            local          <unset>                 2m21s
vmstorage-db-vmstorage-shortterm-0       Bound    pvc-cee3a2a4-5680-4880-bc2a-85c14dba9380   10Gi       RWO            local          <unset>                 2m41s
vmstorage-db-vmstorage-shortterm-1       Bound    pvc-d55c235d-cada-4c4a-8299-e5fc3f161789   10Gi       RWO            local          <unset>                 2m41s
```
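
Checking the list by eye works for a dozen claims. For scripting or polling, a small filter is easier: it prints only the claims whose `STATUS` is not `Bound`. The sketch parses the default table layout shown above, and the function name is hypothetical. Empty output means every claim is bound.

```bash
# Print the names of PVCs in a namespace (default: tenant-root) whose STATUS
# column is anything other than "Bound", e.g. "Pending".
unbound_pvcs() {
  kubectl get pvc -n "${1:-tenant-root}" --no-headers |
    awk '$2 != "Bound" { print $1 }'
}
```

Note that empty output also results if `kubectl` itself fails, so check its error output before relying on this in a wait loop.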

Check that all pods are running:

```bash
kubectl get pod -n tenant-root
```

Example output:

```console
NAME                                           READY   STATUS    RESTARTS       AGE
etcd-0                                         1/1     Running   0              2m1s
etcd-1                                         1/1     Running   0              106s
etcd-2                                         1/1     Running   0              82s
grafana-db-1                                   1/1     Running   0              119s
grafana-db-2                                   1/1     Running   0              13s
grafana-deployment-74b5656d6-5dcvn             1/1     Running   0              90s
grafana-deployment-74b5656d6-q5589             1/1     Running   1 (105s ago)   111s
root-ingress-controller-6ccf55bc6d-pg79l       2/2     Running   0              2m27s
root-ingress-controller-6ccf55bc6d-xbs6x       2/2     Running   0              2m29s
root-ingress-defaultbackend-686bcbbd6c-5zbvp   1/1     Running   0              2m29s
vmalert-vmalert-644986d5c-7hvwk                2/2     Running   0              2m30s
vmalertmanager-alertmanager-0                  2/2     Running   0              2m32s
vmalertmanager-alertmanager-1                  2/2     Running   0              2m31s
vminsert-longterm-75789465f-hc6cz              1/1     Running   0              2m10s
vminsert-longterm-75789465f-m2v4t              1/1     Running   0              2m12s
vminsert-shortterm-78456f8fd9-wlwww            1/1     Running   0              2m29s
vminsert-shortterm-78456f8fd9-xg7cw            1/1     Running   0              2m28s
vmselect-longterm-0                            1/1     Running   0              2m12s
vmselect-longterm-1                            1/1     Running   0              2m12s
vmselect-shortterm-0                           1/1     Running   0              2m31s
vmselect-shortterm-1                           1/1     Running   0              2m30s
vmstorage-longterm-0                           1/1     Running   0              2m12s
vmstorage-longterm-1                           1/1     Running   0              2m12s
vmstorage-shortterm-0                          1/1     Running   0              2m32s
vmstorage-shortterm-1                          1/1     Running   0              2m31s
```
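
A similar filter can flag pods that are not fully ready. This sketch treats a pod as healthy only when both numbers in `READY` match and `STATUS` is `Running`. It would therefore also list pods of finished Jobs (`Completed`). The function name is hypothetical.

```bash
# Print pods in a namespace (default: tenant-root) that are not fully ready:
# READY is "x/y" with x != y, or STATUS is not "Running".
not_ready_pods() {
  kubectl get pod -n "${1:-tenant-root}" --no-headers |
    awk '{ split($2, r, "/"); if (r[1] != r[2] || $3 != "Running") print $1 }'
}
```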

Now you can get the public IP of the ingress controller:

```bash
kubectl get svc -n tenant-root root-ingress-controller
```

Example output:

```console
NAME                      TYPE           CLUSTER-IP     EXTERNAL-IP       PORT(S)                      AGE
root-ingress-controller   LoadBalancer   10.96.16.141   192.168.100.200   80:31632/TCP,443:30113/TCP   3m33s
```
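
To reuse the address in scripts, for example in an `/etc/hosts` entry, you can read only the `EXTERNAL-IP` field. This sketch fails while MetalLB has not yet assigned an address, which the table shows as `<pending>`. The function name is hypothetical.

```bash
# Print the external IP that MetalLB assigned to the tenant-root ingress
# controller; return non-zero if no address has been assigned yet.
ingress_ip() {
  local ip
  ip=$(kubectl get svc -n tenant-root root-ingress-controller \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
  if [ -z "$ip" ]; then
    echo "root-ingress-controller has no external IP yet" >&2
    return 1
  fi
  echo "$ip"
}
```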

Use `grafana.example.org` (pointing to 192.168.100.200) to access system monitoring, where `example.org` is the domain you specified for `tenant-root`.

- login: `admin`
- password:

```bash
kubectl get secret -n tenant-root grafana-admin-password -o go-template='{{ printf "%s\n" (index .data "password" | base64decode) }}'
```

1

dashboards/.gitignore

vendored

Normal file

@@ -0,0 +1 @@

*.tmp

3038

dashboards/cache/nginx-vts-stats.json

vendored

Normal file

File diff suppressed because it is too large

2571

dashboards/control-plane/control-plane-status.json

Normal file

File diff suppressed because it is too large

611

dashboards/control-plane/deprecated-resources.json

Normal file

@@ -0,0 +1,611 @@

{
  "annotations": {
    "list": [
      {
        "builtIn": 1,
        "datasource": {
          "type": "grafana",
          "uid": "-- Grafana --"
        },
        "enable": true,
        "hide": true,
        "iconColor": "rgba(0, 211, 255, 1)",
        "name": "Annotations & Alerts",
        "target": {
          "limit": 100,
          "matchAny": false,
          "tags": [],
          "type": "dashboard"
        },
        "type": "dashboard"
      }
    ]
  },
  "description": "Track whether the cluster can be upgraded to the newer Kubernetes versions",
  "editable": false,
  "fiscalYearStartMonth": 0,
  "graphTooltip": 1,
  "id": 30,
  "iteration": 1656060742701,
  "links": [],
  "liveNow": false,
  "panels": [

    {
      "datasource": {
        "type": "prometheus",
        "uid": "$ds_prometheus"
      },
      "fieldConfig": {
        "defaults": {
          "color": {
            "fixedColor": "blue",
            "mode": "fixed"
          },
          "mappings": [],
          "thresholds": {
            "mode": "absolute",
            "steps": [
              {
                "color": "green",
                "value": null
              },
              {
                "color": "red",
                "value": 80
              }
            ]
          }
        },
        "overrides": []
      },
      "gridPos": {
        "h": 5,
        "w": 5,
        "x": 0,
        "y": 0
      },
      "id": 9,
      "options": {
        "colorMode": "value",
        "graphMode": "none",
        "justifyMode": "auto",
        "orientation": "auto",
        "reduceOptions": {
          "calcs": [
            "lastNotNull"
          ],
          "fields": "",
          "values": false
        },
        "text": {},
        "textMode": "name"
      },
      "pluginVersion": "8.5.2",
      "targets": [
        {
          "datasource": {
            "type": "prometheus",
            "uid": "$ds_prometheus"
          },
          "editorMode": "code",
          "expr": "topk(1, sum by (git_version) (kubernetes_build_info{job=\"kube-apiserver\"}))",
          "legendFormat": "{{ git_version }}",
          "range": true,
          "refId": "A"
        }
      ],
      "title": "Current K8s version",
      "transformations": [
        {
          "id": "reduce",
          "options": {
            "labelsToFields": false,
            "reducers": []
          }
        }
      ],
      "type": "stat"
    },

    {
      "datasource": {
        "type": "prometheus",
        "uid": "$ds_prometheus"
      },
      "fieldConfig": {
        "defaults": {
          "color": {
            "mode": "thresholds"
          },
          "mappings": [
            {
              "options": {
                "from": 1,
                "result": {
                  "index": 0,
                  "text": "Cannot be upgraded"
                },
                "to": 1e+18
              },
              "type": "range"
            },
            {
              "options": {
                "match": "null+nan",
                "result": {
                  "index": 1,
                  "text": "Can be upgraded"
                }
              },
              "type": "special"
            }
          ],
          "thresholds": {
            "mode": "absolute",
            "steps": [
              {
                "color": "green",
                "value": null
              },
              {
                "color": "red",
                "value": 80
              }
            ]
          }
        },
        "overrides": []
      },
      "gridPos": {
        "h": 5,
        "w": 7,
        "x": 5,
        "y": 0
      },
      "id": 4,
      "options": {
        "colorMode": "value",
        "graphMode": "none",
        "justifyMode": "center",
        "orientation": "auto",
        "reduceOptions": {
          "calcs": [
            "lastNotNull"
          ],
          "fields": "",
          "values": false
        },
        "textMode": "auto"
      },
      "pluginVersion": "8.5.2",
      "targets": [
        {
          "datasource": {
            "type": "prometheus",
            "uid": "$ds_prometheus"
          },
          "editorMode": "code",
          "exemplar": false,
          "expr": "sum(\n ceil(\n sum by(removed_release, resource, group, version) (\n sum by(removed_release, resource, group, version) \n (apiserver_requested_deprecated_apis{removed_release=\"$k8s\"}) \n *\n on(group,version,resource,subresource)\n group_right() (increase(apiserver_request_total[1h]))\n )\n )\n) or vector(0)\n+ \nsum(\n sum by (api_version, kind, helm_release_name, helm_release_namespace)\n (resource_versions_compatibility{k8s_version=~\"$k8s\"})\n) or vector(0)\n> 0",
          "instant": true,
          "range": false,
          "refId": "A"
        }
      ],
      "title": "Upgrade to desired version status",
      "type": "stat"
    },

    {
      "datasource": {
        "type": "prometheus",
        "uid": "$ds_prometheus"
      },
      "gridPos": {
        "h": 5,
        "w": 12,
        "x": 12,
        "y": 0
      },
      "id": 7,
      "options": {
        "content": "<br>\n\n#### Follow instructions to migrate from using **deprecated APIs**\n\nhttps://kubernetes.io/docs/reference/using-api/deprecation-guide/",
        "mode": "markdown"
      },
      "pluginVersion": "8.5.2",
      "type": "text"
    },

    {
      "datasource": {
        "type": "prometheus",
        "uid": "$ds_prometheus"
      },
      "fieldConfig": {
        "defaults": {
          "color": {
            "mode": "thresholds"
          },
          "custom": {
            "align": "auto",
            "displayMode": "color-background",
            "filterable": false,
            "inspect": false,
            "minWidth": 100
          },
          "mappings": [],
          "thresholds": {
            "mode": "absolute",
            "steps": [
              {
                "color": "super-light-orange",
                "value": null
              },
              {
                "color": "light-orange",
                "value": 50
              },
              {
                "color": "orange",
                "value": 200
              },
              {
                "color": "semi-dark-orange",
                "value": 500
              },
              {
                "color": "dark-orange",
                "value": 1000
              }
            ]
          }
        },
        "overrides": [
          {
            "matcher": {
              "id": "byName",
              "options": "Group"
            },
            "properties": [
              {
                "id": "custom.displayMode",
                "value": "auto"
              }
            ]
          },
          {
            "matcher": {
              "id": "byName",
              "options": "Version"
            },
            "properties": [
              {
                "id": "custom.displayMode",
                "value": "auto"
              }
            ]
          },
          {
            "matcher": {
              "id": "byName",
              "options": "Resource"
            },
            "properties": [
              {
                "id": "custom.displayMode",
                "value": "auto"
              }
            ]
          }
        ]
      },
      "gridPos": {
        "h": 13,
        "w": 12,
        "x": 0,
        "y": 5
      },
      "id": 2,
      "options": {
        "footer": {
          "enablePagination": false,
          "fields": "",
          "reducer": [
            "sum"
          ],
          "show": false
        },
        "showHeader": true,
        "sortBy": []
      },
      "pluginVersion": "8.5.2",
      "targets": [
        {
          "datasource": {
            "type": "prometheus",
            "uid": "$ds_prometheus"
          },
          "editorMode": "code",
          "exemplar": false,
          "expr": "ceil(\n sum by(removed_release, resource, group, version)(\n sum by(removed_release, resource, group, version) \n (apiserver_requested_deprecated_apis{removed_release=~\"$k8s\"}) \n * \n on(group,version,resource,subresource)\n group_right() (increase(apiserver_request_total[3h]))\n )\n) > 0",
          "format": "table",
          "instant": true,
          "range": false,
          "refId": "A"
        }
      ],
      "title": "Requests to kube-apiserver (last 3 hours)",
      "transformations": [
        {
          "id": "filterFieldsByName",
          "options": {
            "include": {
              "names": [
                "group",
                "removed_release",
                "resource",
                "version",
                "Value"
              ]
            }
          }
        },
        {
          "id": "organize",
          "options": {
            "excludeByName": {},
            "indexByName": {
              "Value": 4,
              "group": 0,
              "removed_release": 3,
              "resource": 2,
              "version": 1
            },
            "renameByName": {
              "Value": "",
              "group": "Group",
              "removed_release": "Removed Release",
              "resource": "Resource",
              "version": "Version"
            }
          }
        },
        {
          "id": "sortBy",
          "options": {
            "fields": {},
            "sort": [
              {
                "desc": true,
                "field": "Value"
              }
            ]
          }
        }
      ],
      "type": "table"
    },

    {
      "datasource": {
        "type": "prometheus",
        "uid": "$ds_prometheus"
      },
      "fieldConfig": {
        "defaults": {
          "color": {
            "mode": "thresholds"
          },
          "custom": {
            "align": "auto",
            "displayMode": "color-background",
            "filterable": false,
            "inspect": false,
            "minWidth": 100
          },
          "mappings": [],
          "thresholds": {
            "mode": "absolute",
            "steps": [
              {
                "color": "super-light-orange",
                "value": null
              },
              {
                "color": "#EAB839",
                "value": 5
              },
              {
                "color": "orange",
                "value": 15
              },
              {
                "color": "dark-orange",
                "value": 50
              }
            ]
          }
        },
        "overrides": [
          {
            "matcher": {
              "id": "byName",
              "options": "API version"
            },
            "properties": [
              {
                "id": "custom.displayMode",
                "value": "auto"
              }
            ]
          },
          {
            "matcher": {
              "id": "byName",
              "options": "Helm release"
            },
            "properties": [
              {
                "id": "custom.displayMode",
                "value": "auto"
              }
            ]
          },
          {
            "matcher": {
              "id": "byName",
              "options": "Helm release namespace"
            },
            "properties": [
              {
                "id": "custom.displayMode",
                "value": "auto"
              }
            ]
          },
          {
            "matcher": {
              "id": "byName",
              "options": "Kind"
            },
            "properties": [
              {
                "id": "custom.displayMode",
                "value": "auto"
              }
            ]
          }
        ]
      },
      "gridPos": {
        "h": 13,
        "w": 12,
        "x": 12,
        "y": 5
      },
      "id": 5,
      "options": {
        "footer": {
          "enablePagination": false,
          "fields": "",
          "reducer": [
            "sum"
          ],
          "show": false
        },
        "showHeader": true,
        "sortBy": []
      },
      "pluginVersion": "8.5.2",
      "targets": [
        {
          "datasource": {
            "type": "prometheus",
            "uid": "$ds_prometheus"
          },
          "editorMode": "code",
          "exemplar": false,
          "expr": "sum by (api_version, kind, helm_release_name, helm_release_namespace) (resource_versions_compatibility{k8s_version=~\"$k8s\"})",
          "format": "table",
          "instant": true,
          "legendFormat": "__auto",
          "range": false,
          "refId": "A"
        }
      ],
      "title": "Helm releases",
      "transformations": [
        {
          "id": "organize",
          "options": {
            "excludeByName": {
              "Time": true
            },
            "indexByName": {},
            "renameByName": {
              "Time": "",
              "Value": "Quantity",
              "api_version": "API version",
              "helm_release_name": "Helm release",
              "helm_release_namespace": "Helm release namespace",
              "kind": "Kind"
            }
          }
        },
        {
          "id": "sortBy",
          "options": {
            "fields": {},
            "sort": [
              {
                "desc": true,
                "field": "Quantity"
              }
            ]
          }
        }
      ],
      "type": "table"
    }

  ],
  "schemaVersion": 36,
  "style": "dark",
  "tags": [],
  "templating": {
    "list": [
      {
        "current": {
          "selected": false,
          "text": "default",
          "value": "default"
        },
        "description": null,
        "error": null,
        "hide": 0,
        "includeAll": false,
        "label": "datasource",
        "multi": false,
        "name": "ds_prometheus",
        "options": [],
        "query": "prometheus",
        "refresh": 1,
        "regex": "",
        "skipUrlSync": false,
        "type": "datasource"
      },
      {
        "datasource": {
          "type": "prometheus",
          "uid": "$ds_prometheus"
        },
        "definition": "label_values(apiserver_requested_deprecated_apis, removed_release)",
        "hide": 0,
        "includeAll": false,
        "label": "Desired K8s version",
        "multi": false,
        "name": "k8s",
        "options": [],
        "query": {
          "query": "label_values(apiserver_requested_deprecated_apis, removed_release)",
          "refId": "StandardVariableQuery"
        },
        "refresh": 1,
        "regex": "",
        "skipUrlSync": false,
        "sort": 0,
        "type": "query"
      }
    ]
  },
  "time": {
    "from": "now-3h",
    "to": "now"
  },
  "timepicker": {
    "hidden": true,
    "refresh_intervals": [
      "30s"
    ]
  },
  "timezone": "",
  "title": "Deprecated APIs",
  "uid": "B0d1Wt3nk",
  "version": 2,
  "weekStart": ""
}

5638

dashboards/control-plane/dns-coredns.json

Normal file

File diff suppressed because it is too large

1602

dashboards/control-plane/kube-etcd3.json

Normal file

File diff suppressed because it is too large

6320

dashboards/db/cloudnativepg.json

Normal file

File diff suppressed because it is too large

4436

dashboards/db/maria-db.json

Normal file

File diff suppressed because it is too large

1659

dashboards/db/redis.json

Normal file

File diff suppressed because it is too large

1558

dashboards/dotdc/k8s-system-coredns.json

Normal file

File diff suppressed because it is too large

2943

dashboards/dotdc/k8s-views-global.json

Normal file

File diff suppressed because it is too large

2260

dashboards/dotdc/k8s-views-namespaces.json

Normal file

File diff suppressed because it is too large

2594

dashboards/dotdc/k8s-views-pods.json

Normal file

File diff suppressed because it is too large

10221

dashboards/ingress/controller-detail.json

Normal file

File diff suppressed because it is too large

9524

dashboards/ingress/controllers.json

Normal file

File diff suppressed because it is too large

10382

dashboards/ingress/namespace-detail.json

Normal file

File diff suppressed because it is too large

8270

dashboards/ingress/namespaces.json

Normal file

File diff suppressed because it is too large

10247

dashboards/ingress/vhost-detail.json

Normal file

File diff suppressed because it is too large

8311

dashboards/ingress/vhosts.json

Normal file

File diff suppressed because it is too large

2474

dashboards/main/capacity-planning.json

Normal file
