mirror of

https://github.com/outbackdingo/cozystack.git

synced 2026-01-28 10:18:42 +00:00

156 README.md

@@ -1,20 +1,72 @@

# Cozystack

|

||||

|

||||

**Cozystack** is an open-source **PaaS platform** for cloud providers.

|

||||

|

||||

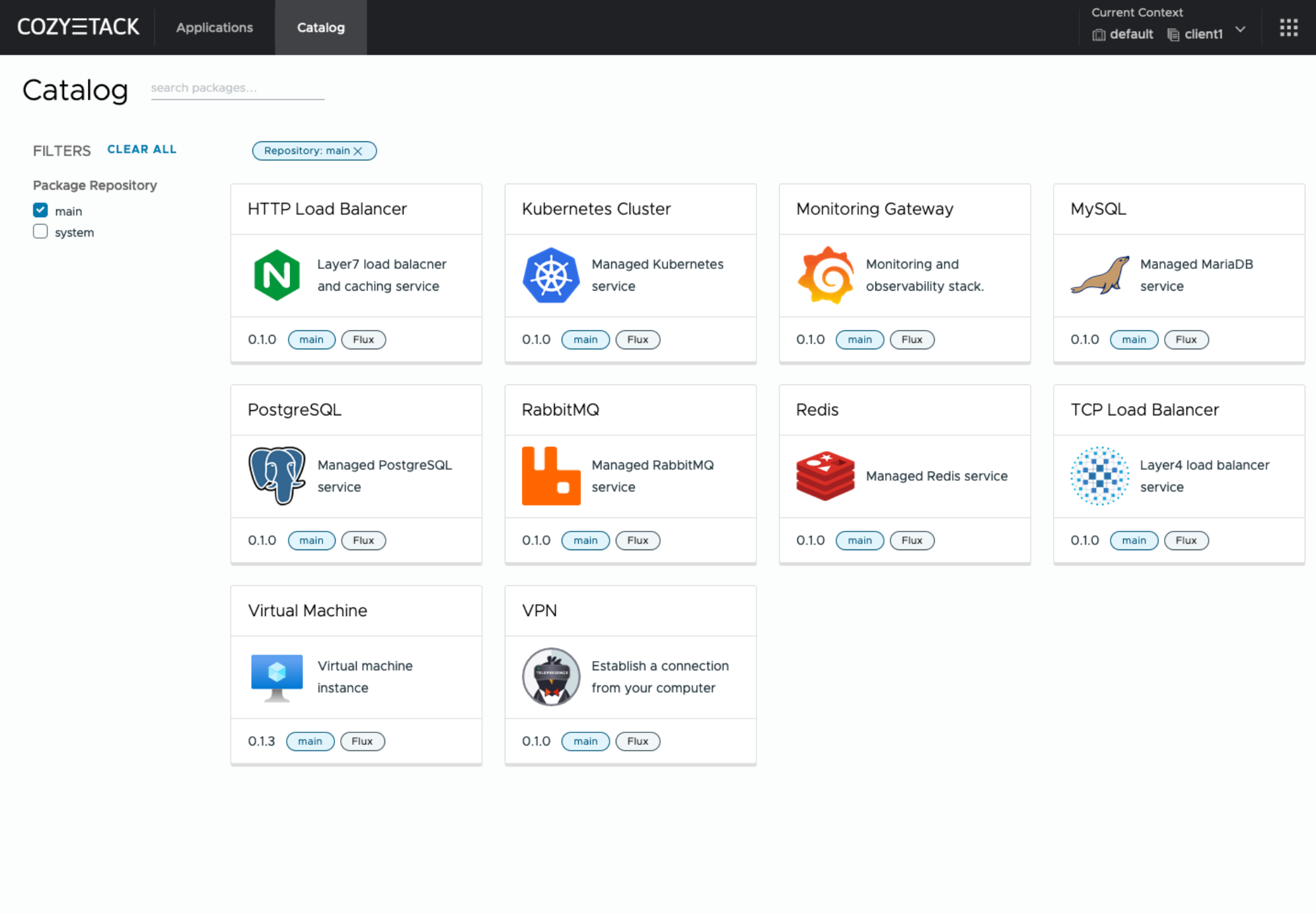

With Cozystack, you can transform your bunch of servers into an intelligent system with a simple REST API for spawning Kubernetes clusters, Database-as-a-Service, virtual machines, load balancers, HTTP caching services, and other services with ease.

|

||||

|

||||

You can use Cozystack to build your own cloud or to provide a cost-effective development environments.

|

||||

|

||||

## Use-Cases

|

||||

|

||||

### As a backend for a public cloud

|

||||

|

||||

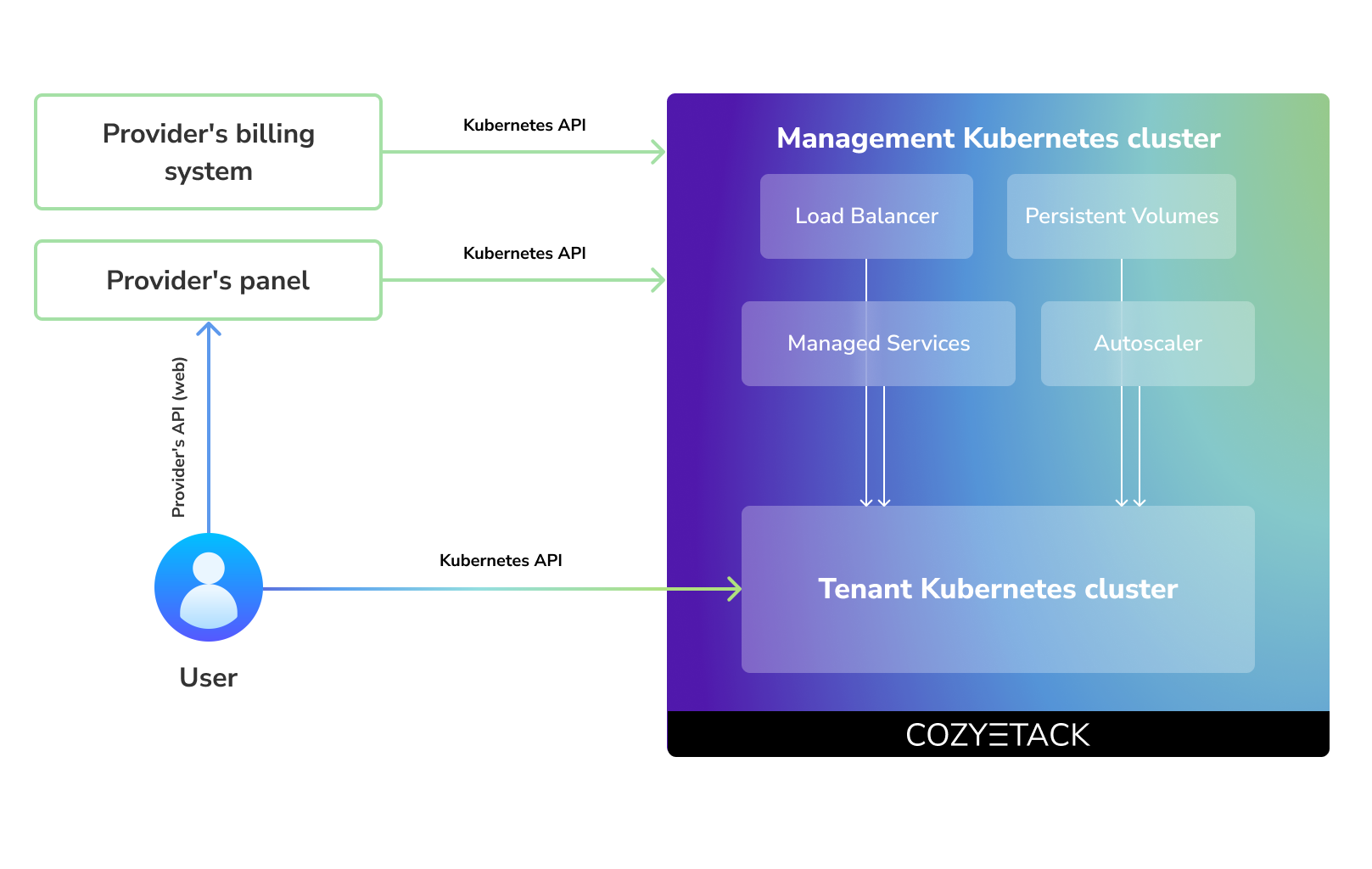

Cozystack positions itself as a kind of framework for building public clouds. The key word here is framework. In this case, it's important to understand that Cozystack is made for cloud providers, not for end users.

|

||||

|

||||

Despite having a graphical interface, the current security model does not imply public user access to your management cluster.

|

||||

|

||||

Instead, end users get access to their own Kubernetes clusters, can order LoadBalancers and additional services from it, but they have no access and know nothing about your management cluster powered by Cozystack.

|

||||

|

||||

Thus, to integrate with your billing system, it's enough to teach your system to go to the management Kubernetes and place a YAML file signifying the service you're interested in. Cozystack will do the rest of the work for you.

|

||||

|

||||

|

||||

|

||||

### As a private cloud for Infrastructure-as-Code

One of the use cases is a self-service portal for users within your company, where they can order the services they need, for example a managed database.

You can follow GitOps best practices: users launch their own Kubernetes clusters and databases for their needs simply by committing configuration to your infrastructure Git repository.

Because applications are deployed in a standardized way, you can extend the platform's capabilities using standard Helm charts.

### As a Kubernetes distribution for Bare Metal

We created Cozystack primarily for our own needs, drawing on our extensive experience in building reliable systems on bare metal infrastructure. This experience led to a separate boxed product aimed at standardizing infrastructure management and providing a ready-to-use tool for it.

Cozystack already solves a wide range of infrastructure tasks out of the box: provisioning bare metal servers, a ready-made monitoring system, fast and reliable storage, a network fabric that can interconnect with your existing infrastructure, the ability to run virtual machines and databases, and much more.

All this makes Cozystack a convenient platform for delivering and running your applications on bare metal.

## Screenshot

## Core values

### Standardization and unification

All components of the platform are based on open-source tools and technologies that are widely known in the industry.

### Collaborate, not compete

If a feature being developed for the platform could be useful to an upstream project, it should be contributed to that upstream project rather than implemented within the platform.

### API-first

Cozystack is based on Kubernetes and involves close interaction with its API. We don't aim to hide all the elements behind a pretty UI or any sort of customization layer; instead, we provide a standard interface and teach users how to work with the basic primitives. The web interface is used solely for deploying applications and for quickly getting familiar with the basic concepts of the platform.

## Quick Start

|

||||

|

||||

Install dependicies:

|

||||

|

||||

- `docker`

|

||||

- `talosctl`

|

||||

- `dialog`

|

||||

- `nmap`

|

||||

- `make`

|

||||

- `yq`

|

||||

- `kubectl`

|

||||

- `helm`

|

||||

|

||||

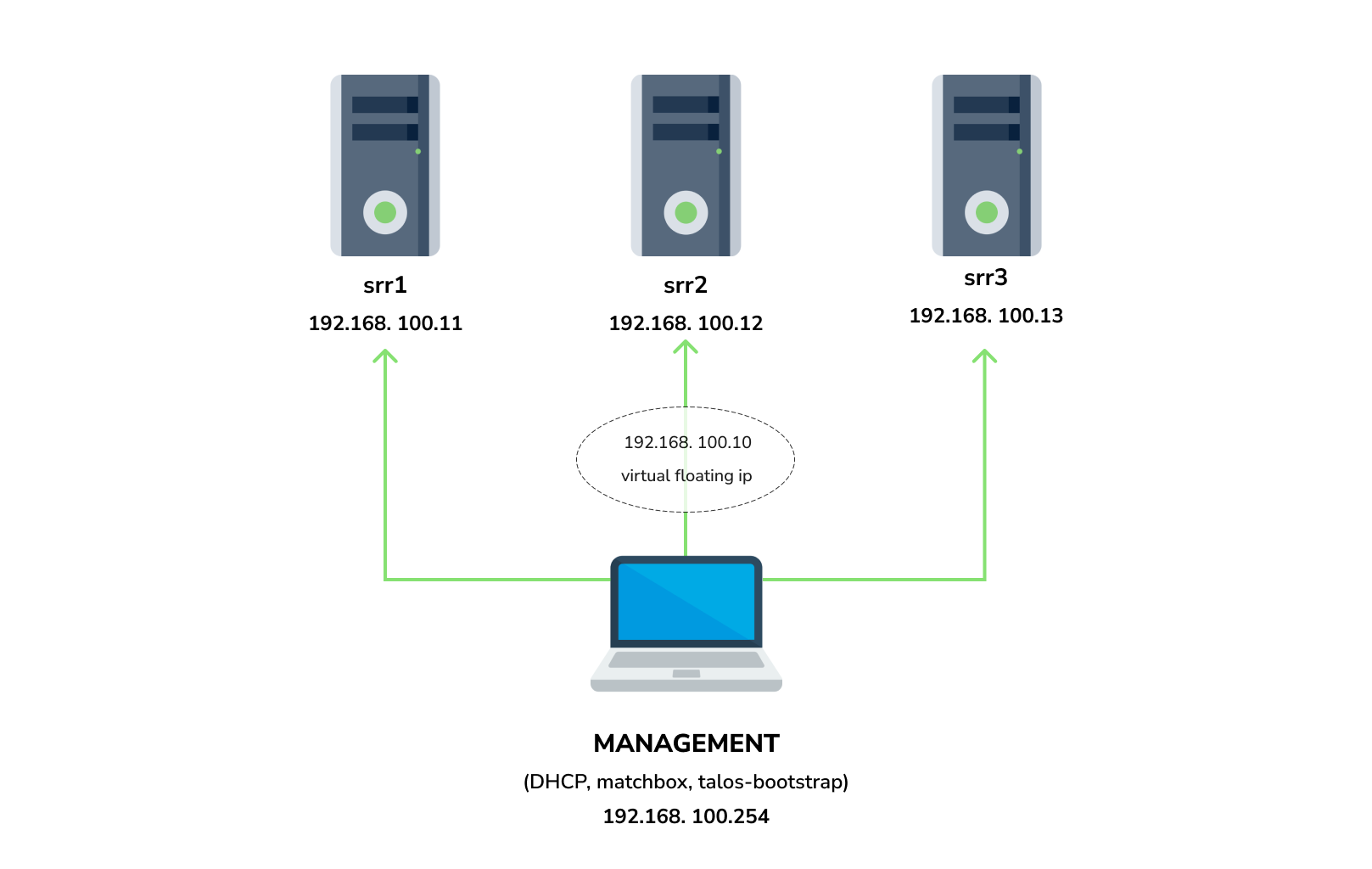

### Preapre infrastructure

|

||||

|

||||

|

||||

|

||||

|

||||

You need 3 physical servers or VMs with nested virtualisation:

|

||||

|

||||

```

@@ -30,18 +82,29 @@ Any Linux system installed on it (eg. Ubuntu should be enough)

**Note:** The VM should support the `x86-64-v2` architecture. Most likely you can achieve this by setting the CPU model to `host`.
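
If you are unsure whether a machine (or a VM with a given CPU model) meets this requirement, one way to check on Linux is to look for the CPU flags that make up the x86-64-v2 level in `/proc/cpuinfo`. This is a minimal sketch that assumes the Linux flag names `cx16`, `lahf_lm`, `popcnt`, `pni` (SSE3), `ssse3`, `sse4_1` and `sse4_2`; the helper name `missing_v2_flags` is hypothetical.

```bash
# Hypothetical helper: print the x86-64-v2 CPU flags that are missing from the
# space-separated flag list given as $1 (Linux /proc/cpuinfo naming).
# Prints nothing when all required flags are present.
missing_v2_flags() {
  flags=" $1 "
  missing=""
  for f in cx16 lahf_lm popcnt pni ssse3 sse4_1 sse4_2; do
    case "$flags" in
      *" $f "*) ;;                  # flag present
      *) missing="$missing $f" ;;   # flag absent, remember it
    esac
  done
  echo "${missing# }"
}
```

Usage inside the VM: `missing_v2_flags "$(grep -m1 '^flags' /proc/cpuinfo | cut -d: -f2)"`. Empty output suggests the CPU model exposes every flag this level needs.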

#### Install dependencies:

- `docker`
- `talosctl`
- `dialog`
- `nmap`
- `make`
- `yq`
- `kubectl`
- `helm`
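
Before moving on, you may want to confirm that every tool in this list is available on your management machine. A minimal sketch, assuming a POSIX shell; the helper name `missing_tools` is hypothetical.

```bash
# Hypothetical helper: print the names of the given commands that are not
# found on PATH (empty output means everything is installed).
missing_tools() {
  missing=""
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || missing="$missing $tool"
  done
  echo "${missing# }"
}
```

Usage: `missing_tools docker talosctl dialog nmap make yq kubectl helm`.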

### Netboot server

Start matchbox with the prebuilt Talos image for Cozystack:

```bash
sudo docker run --name=matchbox -d --net=host ghcr.io/aenix-io/cozystack/matchbox:v0.0.1 \
-address=:8080 \
-log-level=debug
```

Start the DHCP server:

```bash
sudo docker run --name=dnsmasq -d --cap-add=NET_ADMIN --net=host quay.io/poseidon/dnsmasq \
-d -q -p0 \
--dhcp-range=192.168.100.3,192.168.100.254 \

@@ -57,7 +120,7 @@ sudo docker run --name=dnsmasq -d --cap-add=NET_ADMIN --net=host quay.io/poseido

--dhcp-match=set:efi64,option:client-arch,9 \
--dhcp-boot=tag:efi64,ipxe.efi \
--dhcp-userclass=set:ipxe,iPXE \
--dhcp-boot=tag:ipxe,http://192.168.100.254:8080/boot.ipxe \
--log-queries \
--log-dhcp
```

@@ -65,7 +128,7 @@ sudo docker run --name=dnsmasq -d --cap-add=NET_ADMIN --net=host quay.io/poseido

Where:

- `192.168.100.3,192.168.100.254` is the range to allocate IPs from
- `192.168.100.1` is your gateway
- `192.168.100.254` is the address of your management server
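
Because the DHCP range and the static addresses above live in the same network, it can help to check whether a particular address falls inside the range handed out by dnsmasq. A minimal IPv4-only sketch in POSIX shell arithmetic; the helpers `ip_to_int` and `ip_in_range` are hypothetical.

```bash
# Hypothetical helper: convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  set -- $(echo "$1" | tr . ' ')   # split "a.b.c.d" into $1..$4
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if address $1 lies within the inclusive range $2..$3.
ip_in_range() {
  ip=$(ip_to_int "$1")
  [ "$ip" -ge "$(ip_to_int "$2")" ] && [ "$ip" -le "$(ip_to_int "$3")" ]
}
```

Usage: `ip_in_range 192.168.100.254 192.168.100.3 192.168.100.254 && echo "inside the DHCP range"`. With the example values this succeeds, because the management server address is the upper bound of the range. You may prefer a range that excludes it.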

Check the status of the containers:

@@ -73,9 +136,9 @@ Check status of containers:

docker ps
```

example output:

```console
CONTAINER ID   IMAGE                                        COMMAND                  CREATED          STATUS          PORTS   NAMES
22044f26f74d   quay.io/poseidon/dnsmasq                     "/usr/sbin/dnsmasq -…"   6 seconds ago    Up 5 seconds            dnsmasq
231ad81ff9e0   ghcr.io/aenix-io/cozystack/matchbox:v0.0.1   "/matchbox -address=…"   58 seconds ago   Up 57 seconds           matchbox

@@ -143,22 +206,22 @@ EOT

Run [talos-bootstrap](https://github.com/aenix-io/talos-bootstrap/) to deploy the cluster:

```bash
talos-bootstrap install
```

Save the admin kubeconfig to access your Kubernetes cluster:

```bash
cp -i kubeconfig ~/.kube/config
```

Check the connection:

```bash
kubectl get ns
```

example output:

```console
NAME              STATUS   AGE
default           Active   7m56s
kube-node-lease   Active   7m56s

@@ -191,25 +254,24 @@ EOT

Create the namespace and install the Cozystack system components:

```bash
kubectl create ns cozy-system
kubectl apply -f cozystack-config.yaml
kubectl apply -f manifests/cozystack-installer.yaml
```

(optional) You can check the installer logs:

```bash
kubectl logs -n cozy-system deploy/cozystack
```

Wait for a while, then check the status of the installation:

```bash
kubectl get hr -A
```
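
To spot the releases that are still pending in that output, you can filter on the `READY` column. A minimal sketch, assuming the column layout shown in the example output (`NAMESPACE NAME AGE READY STATUS`); the helper name `not_ready_releases` is hypothetical.

```bash
# Hypothetical helper: read `kubectl get hr -A` output on stdin and print
# NAMESPACE/NAME for every HelmRelease whose READY column (4th field) is
# not "True". The header line is skipped.
not_ready_releases() {
  awk 'NR > 1 && $4 != "True" { print $1 "/" $2 }'
}
```

Usage: `kubectl get hr -A | not_ready_releases`. Empty output means every listed release reports `True`. It does not cover releases that have not been created yet.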

Wait until all releases reach the `Ready` state:

```console
NAMESPACE           NAME                   AGE     READY   STATUS
cozy-cert-manager   cert-manager           2m54s   True    Release reconciliation succeeded
cozy-cert-manager   cert-manager-issuers   2m54s   True    Release reconciliation succeeded

@@ -241,18 +303,18 @@ tenant-root tenant-root 2m54s True Rel

#### Configure Storage

Set up an alias to access LINSTOR:

```bash
alias linstor='kubectl exec -n cozy-linstor deploy/linstor-controller -- linstor'
```
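
By default, shells expand aliases only in interactive sessions. Bash, for instance, ignores them in scripts unless `expand_aliases` is set. To use the same shortcut from a script, a shell function is a drop-in alternative. This is a minimal sketch that wraps the same `kubectl exec` command as the alias.

```bash
# Function equivalent of the alias above: forwards all arguments to the
# linstor client inside the linstor-controller deployment.
linstor() {
  kubectl exec -n cozy-linstor deploy/linstor-controller -- linstor "$@"
}
```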

list your nodes:

```bash
linstor node list
```

example output:

```console
+-------------------------------------------------------+
| Node | NodeType | Addresses | State |
|=======================================================|

@@ -264,13 +326,12 @@ example output:

list empty devices:

```bash
linstor physical-storage list
```

example output:

```console
+-------------------------------------------+
| Size | Rotational | Nodes |
|===========================================|

@@ -283,7 +344,7 @@ example output:

create storage pools:

```bash
linstor ps cdp lvm srv1 /dev/sdb --pool-name data --storage-pool data
linstor ps cdp lvm srv2 /dev/sdb --pool-name data --storage-pool data
linstor ps cdp lvm srv3 /dev/sdb --pool-name data --storage-pool data

@@ -291,13 +352,13 @@ linstor ps cdp lvm srv3 /dev/sdb --pool-name data --storage-pool data

list storage pools:

```bash
linstor sp l
```

example output:

```console
+-------------------------------------------------------------------------------------------------------------------------------------+
| StoragePool | Node | Driver | PoolName | FreeCapacity | TotalCapacity | CanSnapshots | State | SharedName |
|=====================================================================================================================================|

@@ -350,13 +411,12 @@ EOT

list storageclasses:

```bash
kubectl get storageclasses
```

example output:

```console
NAME              PROVISIONER              RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
local (default)   linstor.csi.linbit.com   Delete          WaitForFirstConsumer   true                   11m
replicated        linstor.csi.linbit.com   Delete          WaitForFirstConsumer   true                   11m

@@ -369,7 +429,7 @@ To access your services select the range of unused IPs, eg. `192.168.100.200-192

**Note:** These IPs should be in the same network as the nodes, or all the necessary routes to them must be in place.
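
One way to sanity-check that a candidate address is in the same network as the nodes is a prefix comparison. This is a minimal IPv4 sketch in POSIX shell arithmetic. `ip_in_cidr` is a hypothetical helper, and `192.168.100.0/24` is only an assumed node network for illustration.

```bash
# Hypothetical helper: convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  set -- $(echo "$1" | tr . ' ')
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if IPv4 address $1 belongs to network $2, given as a.b.c.d/prefix.
ip_in_cidr() {
  net=${2%/*}
  prefix=${2#*/}
  # Build the netmask from the prefix length and compare the network parts.
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}
```

Usage: `ip_in_cidr 192.168.100.200 192.168.100.0/24 && echo ok`. You can run it for both ends of the range you picked.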

Configure MetalLB to use and announce this range:

```yaml
kubectl create -f- <<EOT
---
apiVersion: metallb.io/v1beta1

@@ -397,12 +457,12 @@ EOT

#### Set up basic applications

Get the token from `tenant-root`:

```bash
kubectl get secret -n tenant-root tenant-root -o go-template='{{ printf "%s\n" (index .data "token" | base64decode) }}'
```
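
If you prefer not to use a Go template, you can read the same value with a JSONPath expression and decode it with `base64`. This is a sketch that assumes a `base64` accepting `--decode` (GNU coreutils does). The helper name `secret_field` is hypothetical. It works the same way for the Grafana password: `secret_field tenant-root grafana-admin-password password`.

```bash
# Hypothetical helper: print one decoded key from a Secret's .data map.
# Usage: secret_field <namespace> <secret-name> <key>
secret_field() {
  kubectl get secret -n "$1" "$2" -o jsonpath="{.data.$3}" | base64 --decode
  echo   # end the output with a newline, like the go-template version does
}
```

Usage: `secret_field tenant-root tenant-root token`.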

Enable port forwarding to cozy-dashboard:

```bash
kubectl port-forward -n cozy-dashboard svc/dashboard 8080:80
```

@@ -420,12 +480,12 @@ Open: http://localhost:8080/

Check the provisioned persistent volumes:

```bash
kubectl get pvc -n tenant-root
```

example output:

```console
NAME          STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   VOLUMEATTRIBUTESCLASS   AGE
data-etcd-0   Bound    pvc-bfc844a6-4253-411c-a2cd-94fb5a98b1ce   10Gi       RWO            local          <unset>                 28m
data-etcd-1   Bound    pvc-b198f493-fb47-431c-a7aa-3befcf38a7d2   10Gi       RWO            local          <unset>                 28m

@@ -445,13 +505,12 @@ vmstorage-db-vmstorage-shortterm-1 Bound pvc-d8d9da02-523e-4ec7-809a-bf

Check that all pods are running:

```bash
kubectl get pod -n tenant-root
```

example output:

```console
NAME     READY   STATUS    RESTARTS   AGE
etcd-0   1/1     Running   0          90s
etcd-1   1/1     Running   0          90s

@@ -484,8 +543,7 @@ kubectl get svc -n tenant-root root-ingress-controller

```

example output:

```console
NAME                      TYPE           CLUSTER-IP      EXTERNAL-IP       PORT(S)                      AGE
root-ingress-controller   LoadBalancer   10.96.101.234   192.168.100.200   80:31879/TCP,443:31262/TCP   49s
```
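
Until a DNS record points `grafana.example.org` at the ingress address, a local `/etc/hosts` entry is one option for a quick test. This is a minimal sketch that builds that line from the service output above, where `EXTERNAL-IP` is the fourth column. `hosts_entry` is a hypothetical helper. If you only need the address, `kubectl get svc -n tenant-root root-ingress-controller -o jsonpath='{.status.loadBalancer.ingress[0].ip}'` returns it on its own.

```bash
# Hypothetical helper: read `kubectl get svc` output for a single service on
# stdin and print "<EXTERNAL-IP> <names...>", suitable for /etc/hosts.
hosts_entry() {
  awk -v names="$*" 'NR == 2 { print $4, names }'
}
```

Usage: `kubectl get svc -n tenant-root root-ingress-controller | hosts_entry grafana.example.org | sudo tee -a /etc/hosts`.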

@@ -495,6 +553,6 @@ Use `grafana.example.org` (under 192.168.100.200) to access system monitoring, w

- login: `admin`
- password:

```bash
kubectl get secret -n tenant-root grafana-admin-password -o go-template='{{ printf "%s\n" (index .data "password" | base64decode) }}'
```

30 TODO

@@ -1,30 +0,0 @@

main installation script and upload secrets to kubernetes
kubectl exec -ti -n cozy-linstor srv3 -- vgcreate data /dev/sdb
grafana admin password
grafana redis password
autoconfigure ONCALL_API_URL
oidc
talos setup via tcp-proxy
migrate kubeapps redis to operator
kubeapps patch helm chart to use custom images
flux policies
talos linux firmware
replace reconcile.sh
metallb-configuration
docs: each chart should be self sufficient
docs: core charts must be accessible via helm template | kubectl apply -f
docs: system charts must be accessible via helm install & flux
docs: how to get first token to access cluster
docs: where to store talosconfig
how to version helm charts
autobump chart versions for system charts
move icons to repo
reconcile system helm releases
remove cluster and other namespace resources from apps charts, eg extension-apiserver-authentication-reader
nginx-ingress has no values
update all applications to be managed by operators
fullnameOverride kamaji-etcd
automatically delete provisioned services with the cluster
README Who is the platform intended for?
README how to use platform
README logo and screenshots

51 img/cozystack-logo.svg

Normal file

@@ -0,0 +1,51 @@

<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<svg
   width="521"
   height="185"
   fill="none"
   version="1.1"
   id="svg830"
   sodipodi:docname="cozyl.svg"
   inkscape:version="1.1.1 (c3084ef, 2021-09-22)"
   xmlns:inkscape="http://www.inkscape.org/namespaces/inkscape"
   xmlns:sodipodi="http://sodipodi.sourceforge.net/DTD/sodipodi-0.dtd"
   xmlns="http://www.w3.org/2000/svg"
   xmlns:svg="http://www.w3.org/2000/svg">
  <defs
     id="defs834" />
  <sodipodi:namedview
     id="namedview832"
     pagecolor="#ffffff"
     bordercolor="#666666"
     borderopacity="1.0"
     inkscape:pageshadow="2"
     inkscape:pageopacity="0.0"
     inkscape:pagecheckerboard="0"
     showgrid="false"
     inkscape:zoom="2.193858"
     inkscape:cx="266.42563"
     inkscape:cy="81.363517"
     inkscape:window-width="1312"
     inkscape:window-height="969"
     inkscape:window-x="0"
     inkscape:window-y="25"
     inkscape:window-maximized="0"
     inkscape:current-layer="svg830" />
  <path
     d="M472.993 120.863V57.41h7.38V86.21h.18l30.782-28.802h9.451L485.503 90.26l.09-3.96 37.173 34.562h-9.72l-32.493-29.882h-.18v29.882zM439.884 121.673c-6.24 0-11.58-1.32-16.02-3.96-4.442-2.64-7.862-6.39-10.262-11.25-2.34-4.861-3.51-10.652-3.51-17.372 0-6.72 1.17-12.48 3.51-17.281 2.4-4.86 5.82-8.61 10.261-11.251 4.44-2.64 9.78-3.96 16.021-3.960 4.38 0 8.43.69 12.151 2.07 3.72 1.38 6.84 3.39 9.36 6.03l-2.88 6.03c-2.76-2.58-5.64-4.44-8.64-5.58-2.94-1.2-6.21-1.8-9.81-1.8-7.142 0-12.602 2.25-16.382 6.75-3.78 4.5-5.67 10.831-5.67 18.992 0 8.16 1.89 14.52 5.67 19.081 3.78 4.5 9.24 6.75 16.381 6.75 3.6 0 6.87-.57 9.81-1.71 3.001-1.2 5.881-3.09 8.641-5.67l2.88 6.03c-2.52 2.58-5.64 4.591-9.36 6.031-3.72 1.38-7.770 2.07-12.15 2.07zM341.795 120.863l27.992-63.454h6.3l27.992 63.454h-7.65l-7.83-18.091 3.6 1.89h-38.703l3.69-1.89-7.74 18.091zm31.052-54.814-14.49 34.113-2.16-1.71h33.301l-1.98 1.71-14.49-34.113zM316.2 120.863V63.8h-23.043v-6.39h53.554v6.39H323.67v57.064z"
     fill="#fff"
     id="path824"
     style="fill:#000000" />
  <path
     fill-rule="evenodd"
     clip-rule="evenodd"
     d="M236.645 57.33h50.827v6.353h-50.827zm0 57.18h50.827v6.353h-50.827zm50.827-28.59h-50.827v6.353h50.827z"
     fill="#fff"
     id="path826"
     style="fill:#000000" />
  <path
     d="M199.863 120.863V88.011l1.62 5.13-25.922-35.732h8.641l20.431 28.262h-1.89l20.432-28.262h8.37L205.713 93.14l1.531-5.13v32.852zM126.991 120.863v-5.49l38.523-54.454v2.88h-38.523v-6.39h45.453v5.49l-38.522 54.364v-2.79h39.783v6.39zM89.464 121.673c-4.38 0-8.371-.75-11.971-2.25-3.6-1.56-6.66-3.75-9.18-6.57-2.521-2.82-4.471-6.24-5.851-10.261-1.32-4.02-1.98-8.52-1.98-13.501 0-5.04.66-9.54 1.98-13.501 1.38-4.02 3.33-7.41 5.85-10.17 2.52-2.82 5.55-4.981 9.090-6.481 3.601-1.56 7.621-2.34 12.062-2.34 4.5 0 8.52.75 12.06 2.25 3.6 1.5 6.66 3.66 9.181 6.48 2.58 2.82 4.53 6.24 5.85 10.261 1.38 3.96 2.07 8.43 2.07 13.41 0 5.041-.69 9.572-2.07 13.592-1.38 4.02-3.33 7.44-5.85 10.26-2.52 2.82-5.58 5.01-9.18 6.571-3.54 1.5-7.561 2.25-12.061 2.25zm0-6.57c4.56 0 8.4-1.02 11.52-3.06 3.18-2.04 5.61-5.01 7.29-8.911 1.681-3.9 2.521-8.58 2.521-14.041 0-5.52-.84-10.2-2.52-14.041-1.68-3.84-4.11-6.78-7.29-8.82-3.12-2.04-6.961-3.06-11.521-3.06-4.44 0-8.251 1.02-11.431 3.06-3.12 2.04-5.52 5.01-7.2 8.91-1.68 3.84-2.52 8.49-2.52 13.95 0 5.461.84 10.142 2.52 14.042 1.68 3.84 4.08 6.81 7.2 8.91 3.18 2.04 6.99 3.06 11.43 3.06zM31.559 121.673c-6.24 0-11.581-1.32-16.022-3.96-4.44-2.64-7.86-6.39-10.26-11.25-2.34-4.861-3.51-10.652-3.51-17.372 0-6.72 1.17-12.48 3.51-17.281 2.4-4.86 5.82-8.61 10.26-11.251 4.44-2.64 9.781-3.96 16.022-3.96 4.38 0 8.43.69 12.15 2.07 3.72 1.38 6.84 3.39 9.361 6.03l-2.88 6.03c-2.76-2.58-5.64-4.44-8.64-5.58-2.941-1.2-6.211-1.8-9.811-1.8-7.14 0-12.601 2.25-16.382 6.75-3.78 4.5-5.67 10.831-5.67 18.992 0 8.16 1.89 14.52 5.67 19.081 3.78 4.5 9.241 6.75 16.382 6.75 3.6 0 6.87-.57 9.81-1.71 3-1.2 5.88-3.09 8.64-5.67l2.881 6.03c-2.52 2.58-5.64 4.591-9.36 6.031-3.72 1.38-7.771 2.07-12.151 2.07z"
     fill="#fff"
     id="path828"
     style="fill:#000000" />
</svg>

@@ -72,14 +72,14 @@ spec:

serviceAccountName: cozystack
containers:
- name: cozystack
  image: "ghcr.io/aenix-io/cozystack/installer:v0.0.1@sha256:e88dd9fa65136863ab8daffdd2deee76cdf5b4d7be4b135115ef0002e97d185a"
  env:
  - name: KUBERNETES_SERVICE_HOST
    value: localhost
  - name: KUBERNETES_SERVICE_PORT
    value: "7445"
- name: darkhttpd
  image: "ghcr.io/aenix-io/cozystack/installer:v0.0.1@sha256:e88dd9fa65136863ab8daffdd2deee76cdf5b4d7be4b135115ef0002e97d185a"
  command:
  - /usr/bin/darkhttpd
  - /cozystack/assets

47 packages/apps/tenant/README.md

Normal file

@@ -0,0 +1,47 @@

# Tenant

A tenant is the main unit of security on the platform. The closest analogy would be Linux kernel namespaces.

Tenants can be created recursively and are subject to the following rules:

### Higher-level tenants can access lower-level ones

Higher-level tenants can view and manage the applications of all their children.

### Each tenant has its own domain

By default (unless otherwise specified), a tenant inherits the domain of its parent, prefixed with its own name without the `tenant-` prefix. For example, if the parent has the domain `example.org`, then `tenant-foo` gets the domain `foo.example.org` by default.

Kubernetes clusters created in this tenant namespace would get domains like `kubernetes-cluster.foo.example.org`.

Example:

```
tenant-root (example.org)
└── tenant-foo (foo.example.org)
    └── kubernetes-cluster1 (kubernetes-cluster1.foo.example.org)
```
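
The default naming rule above amounts to plain string manipulation. This sketch assumes that tenant names always start with `tenant-`, and it ignores explicitly configured domains. `tenant_domain` and `app_domain` are hypothetical helpers, not part of the chart.

```bash
# Hypothetical helper: default domain of a child tenant. Strips the "tenant-"
# prefix from the tenant name ($1) and prepends it to the parent domain ($2).
tenant_domain() {
  echo "${1#tenant-}.$2"
}

# Hypothetical helper: domain of an application ($1) inside a tenant domain ($2).
app_domain() {
  echo "$1.$2"
}
```

Usage: `app_domain kubernetes-cluster1 "$(tenant_domain tenant-foo example.org)"` prints `kubernetes-cluster1.foo.example.org`, matching the tree above.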

### Lower-level tenants can access the cluster services of their parent (provided they do not run their own)

For example, you can create `tenant-u1` with a set of services such as `etcd`, `ingress`, and `monitoring`, and then create another tenant namespace, `tenant-u2`, inside `tenant-u1`.

Let's see what happens when you run Kubernetes and Postgres in the `tenant-u2` namespace.

Since `tenant-u2` does not have its own cluster services like `etcd`, `ingress`, and `monitoring`, the applications will use the cluster services of the parent tenant.

This in turn means:

- The Kubernetes cluster data will be stored in the etcd of `tenant-u1`.
- Access to the cluster will go through the common ingress of `tenant-u1`.
- All metrics will be collected by the monitoring of `tenant-u1`, and only `tenant-u1` will have access to them.

Example:

```
tenant-u1
├── etcd
├── ingress
├── monitoring
└── tenant-u2
    ├── kubernetes-cluster1
    └── postgres-db1
```
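
This lookup rule can be modelled as a walk up the tenant tree that stops at the first tenant running the requested service. This minimal sketch hard-codes the example tree above. `parent_of`, `services_of` and `provider_of` are hypothetical helpers for illustration only. They do not show how the platform implements the rule.

```bash
# Hypothetical model of the example tree: the parent of each tenant.
parent_of() {
  case "$1" in
    tenant-u2) echo tenant-u1 ;;
    *) echo "" ;;                 # tenant-u1 is the top of this example
  esac
}

# Cluster services each tenant runs itself.
services_of() {
  case "$1" in
    tenant-u1) echo "etcd ingress monitoring" ;;
    *) echo "" ;;
  esac
}

# Print the nearest tenant (starting with $1 itself) that runs service $2.
# Returns non-zero if no tenant up the chain provides it.
provider_of() {
  t=$1
  while [ -n "$t" ]; do
    case " $(services_of "$t") " in
      *" $2 "*) echo "$t"; return 0 ;;
    esac
    t=$(parent_of "$t")
  done
  return 1
}
```

Usage: `provider_of tenant-u2 etcd` prints `tenant-u1`, which matches the first consequence listed above.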

@@ -1,14 +1,14 @@

{
  "containerimage.config.digest": "sha256:b49eb4e818bbedb37ba0447d8c42f17f59746e93c7ed854029abf4f5e7840706",
  "containerimage.descriptor": {
    "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
    "digest": "sha256:e88dd9fa65136863ab8daffdd2deee76cdf5b4d7be4b135115ef0002e97d185a",
    "size": 2074,
    "platform": {
      "architecture": "amd64",
      "os": "linux"
    }
  },
  "containerimage.digest": "sha256:e88dd9fa65136863ab8daffdd2deee76cdf5b4d7be4b135115ef0002e97d185a",
  "image.name": "ghcr.io/aenix-io/cozystack/installer:v0.0.1"
}
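
The installer manifest pins its image as `name:tag@sha256:digest`, and that digest should match the `containerimage.digest` recorded in this build metadata. This minimal sketch splits such a reference using shell parameter expansion. `image_digest` and `image_name_tag` are hypothetical helpers.

```bash
# Hypothetical helpers for references of the form name:tag@sha256:digest.
image_digest()   { echo "${1##*@}"; }   # part after the last "@"
image_name_tag() { echo "${1%@*}"; }    # part before the last "@"
```

Usage: compare `image_digest` of the manifest's image reference with the `containerimage.digest` value above.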

@@ -1,37 +0,0 @@

defaults
    mode tcp
    option dontlognull
    timeout http-request 10s
    timeout queue 20s
    timeout connect 5s
    timeout client 5m
    timeout server 5m
    timeout tunnel 5m
    timeout http-keep-alive 10s
    timeout check 10s

frontend kubernetes
    bind :::6443 v4v6
    mode tcp
    default_backend kubernetes

frontend talos
    bind :::50000 v4v6
    mode tcp
    default_backend talos

backend kubernetes
    mode tcp
    balance leastconn
    default-server observe layer4 error-limit 10 on-error mark-down check
    server srv0 192.168.100.11:6443 check
    server srv1 192.168.100.12:6443 check
    server srv2 192.168.100.13:6443 check

backend talos
    mode tcp
    balance leastconn
    default-server observe layer4 error-limit 10 on-error mark-down check
    server srv0 192.168.100.11:50000 check
    server srv1 192.168.100.12:50000 check
    server srv2 192.168.100.13:50000 check

@@ -1,46 +0,0 @@

#!/usr/sbin/nft -f

flush ruleset

table inet filter {
    chain input {
        type filter hook input priority 0; policy drop;

        ct state invalid counter drop comment "early drop of invalid packets"

        ct state {established, related} accept comment "accept all connections established, related"

        iif lo accept comment "accept loopback"

        ip saddr 0.0.0.0/0 tcp dport 22 accept comment "accept ssh"
        ip saddr 0.0.0.0/0 tcp dport 8006 accept comment "accept proxmox"
        ip saddr 0.0.0.0/0 tcp dport 6443 accept comment "accept kubernetes"
        ip saddr 0.0.0.0/0 tcp dport 50000 accept comment "accept talos"

        ip saddr 10.0.0.0/8 accept comment "accept from private networks"
        ip saddr 192.168.0.0/16 accept comment "accept from private networks"

        include "/tmp/nftables-*.conf"

        ip protocol icmp accept comment "accept all ICMP types"

        #log prefix "Dropped: " flags all drop comment "dropped packets logger"
        #log prefix "Rejected: " flags all reject comment "rejected packets logger"

        counter comment "count dropped packets"
    }
    chain forward {
        type filter hook forward priority 0; policy accept;
    }
    chain output {
        type filter hook output priority 0; policy accept;
    }
}

table ip nat {
    chain postrouting {
        type nat hook postrouting priority srcnat; policy accept;
        oifname "enp41s0" ip saddr 10.0.0.0/8 masquerade comment "masquerade lan"
        oifname "enp41s0" ip saddr 192.168.0.0/16 masquerade comment "masquerade lan"
    }
}
