mirror of

https://github.com/outbackdingo/cozystack.git

synced 2026-01-29 02:18:47 +00:00

Compare commits

2 Commits

71-configu

...

fix-redis

| Author | SHA1 | Date |
|---|---|---|
| | 042cc98c34 | |
| | 9180896f54 | |

48

.github/workflows/ci.yml

vendored

@@ -1,48 +0,0 @@
---
name: CI/CD Workflow

on:
  push:
    branches:
      - main
    paths:
      - '**.yaml'
      - '**/Dockerfile'
      - '**/charts/**'
    tags:
      - 'v*'

env:
  IMAGE_NGINX_CACHE: nginx-cache
  REGISTRY: ghcr.io/${{ github.repository_owner }}
  PUSH: 1
  LOAD: 1
  NGINX_CACHE_TAG: v0.1.0
  TAG: v0.3.1
  PLATFORM_ARCH: linux/amd64

jobs:
  build-and-push:
    name: Build Cozystack
    runs-on: ubuntu-latest
    services:
      registry:
        image: registry:2
        ports:
          - 5000:5000
    steps:
      - name: Set up Docker Registry
        run: |
          if [ "$GITHUB_ACTIONS" = "true" ]; then
            echo "REGISTRY=ghcr.io/${{ github.repository_owner }}" >> $GITHUB_ENV
          else
            echo "REGISTRY=localhost:5000/cozystack_local" >> $GITHUB_ENV
          fi

      - uses: actions/checkout@v3
      - name: Build usig make
        run: |
          make

      - name: Set up QEMU
        uses: docker/setup-qemu-action@v3

22

.github/workflows/e2e.yaml

vendored

@@ -1,22 +0,0 @@
name: Run E2E Tests

on:
  pull_request:
    branches:
      - main

jobs:
  e2e-tests:
    runs-on: ubuntu-latest

    steps:
      - name: Checkout code
        uses: actions/checkout@v2

      - name: Set up SSH
        uses: webfactory/ssh-agent@v0.5.3
        with:
          ssh-private-key: ${{ secrets.SSH_PRIVATE_KEY }}

      - name: Run E2E Tests on Remote Server
        run: ssh -p 2222 root@mgr.cp.if.ua 'bash -s' < /home/cozystack/hack/e2e.sh

48

.github/workflows/lint.yml

vendored

@@ -1,48 +0,0 @@
name: Lint

on:
  push:
    branches: [ main ] # Lint only on pushes to the main branch
  pull_request:
    branches: [ main ] # Lint on PRs targeting the main branch

permissions:
  contents: read

jobs:
  lint:
    name: Super-Linter
    runs-on: ubuntu-latest

    steps:
      - name: Checkout code
        uses: actions/checkout@v3
        with:
          fetch-depth: 0

      - name: Run Super-Linter
        uses: github/super-linter@v4
        env:
          # To report GitHub Actions status checks
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
          VALIDATE_ALL_CODEBASE: false # Lint only changed files
          VALIDATE_TERRAFORM: false # Disable Terraform linting (remove if you need it)
          DEFAULT_BRANCH: main # Set your default branch
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
          # Enable only the linters you need for your project
          VALIDATE_JAVASCRIPT_ES: true
          VALIDATE_PYTHON_BLACK: true
          VALIDATE_HTML: false
          VALIDATE_GO: false
          VALIDATE_XML: false
          VALIDATE_JAVA: false
          VALIDATE_DOCKERFILE: false
          # turn off JSCPD copy/paste detection, which results in lots of results for examples and devops repos
          VALIDATE_JSCPD: false
          # turn off shfmt shell formatter as we already have shellcheck
          VALIDATE_SHELL_SHFMT: false
          VALIDATE_EDITORCONFIG: false
          # prevent Kubernetes CRD API's from causing kubeval to fail
          # also change schema location to an up-to-date list
          # https://github.com/yannh/kubernetes-json-schema/#kubeval
          KUBERNETES_KUBEVAL_OPTIONS: --ignore-missing-schemas --schema-location https://raw.githubusercontent.com/yannh/kubernetes-json-schema/master/

7

.github/workflows/linters/.markdown-lint.yml

vendored

@@ -1,7 +0,0 @@
---
# MD013/line-length - Line length
MD013:
  # Number of characters, default is 80
  line_length: 9999
  # check code blocks?
  code_blocks: false

55

.github/workflows/linters/.yaml-lint.yml

vendored

@@ -1,55 +0,0 @@

yaml-files:
  - '*.yaml'
  - '*.yml'
  - '.yamllint'

rules:
  braces:
    level: warning
    min-spaces-inside: 0
    max-spaces-inside: 0
    min-spaces-inside-empty: 1
    max-spaces-inside-empty: 5
  brackets:
    level: warning
    min-spaces-inside: 0
    max-spaces-inside: 0
    min-spaces-inside-empty: 1
    max-spaces-inside-empty: 5
  colons:
    level: warning
    max-spaces-before: 0
    max-spaces-after: 1
  commas:
    level: warning
    max-spaces-before: 0
    min-spaces-after: 1
    max-spaces-after: 1
  comments: disable
  comments-indentation: disable
  document-end: disable
  document-start: disable
  empty-lines:
    level: warning
    max: 2
    max-start: 0
    max-end: 0
  hyphens:
    level: warning
    max-spaces-after: 1
  indentation:
    level: warning
    spaces: consistent
    indent-sequences: true
    check-multi-line-strings: false
  key-duplicates: enable
  line-length: disable
  new-line-at-end-of-file: disable
  new-lines:
    type: unix
  trailing-spaces: disable
  line-length:
    max: 130
    allow-non-breakable-words: true
    allow-non-breakable-inline-mappings: false

73

.github/workflows/pr.yml

vendored

@@ -1,73 +0,0 @@
name: Pull Request Workflow

on:
  pull_request:
    types: [opened, synchronize, reopened]

env:
  REGISTRY: ghcr.io
  IMAGE_NAME: ${{ github.repository }}

jobs:
  build-and-test:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout repository
        uses: actions/checkout@v4

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3

      - name: Login to GitHub Container Registry
        uses: docker/login-action@v3
        with:
          registry: ${{ env.REGISTRY }}
          username: ${{ github.actor }}
          password: ${{ secrets.GITHUB_TOKEN }}

      - name: Build images
        run: make build
        env:
          DOCKER_BUILDKIT: 1

      - name: Tag and push images
        run: |
          PR_NUMBER=${{ github.event.pull_request.number }}
          BRANCH_NAME="test-pr${PR_NUMBER}"
          git checkout -b ${BRANCH_NAME}
          git push origin ${BRANCH_NAME}

          # Tag images with PR number
          for image in $(docker images --format "{{.Repository}}:{{.Tag}}" | grep ${IMAGE_NAME}); do
            docker tag ${image} ${image}-pr${PR_NUMBER}
            docker push ${image}-pr${PR_NUMBER}
          done

      - name: Run tests
        run: make test

  cleanup:
    needs: build-and-test
    if: github.event.action == 'closed'
    runs-on: ubuntu-latest
    steps:
      - name: Checkout repository
        uses: actions/checkout@v4

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3

      - name: Login to GitHub Container Registry
        uses: docker/login-action@v3
        with:
          registry: ${{ env.REGISTRY }}
          username: ${{ github.actor }}
          password: ${{ secrets.GITHUB_TOKEN }}

      - name: Delete PR-tagged images
        run: |
          PR_NUMBER=${{ github.event.pull_request.number }}
          for image in $(docker images --format "{{.Repository}}:{{.Tag}}" | grep ${IMAGE_NAME} | grep "pr${PR_NUMBER}"); do
            docker rmi ${image}
            docker push ${image} --delete
          done

51

.github/workflows/release.yml

vendored

@@ -1,51 +0,0 @@
name: Release Workflow

on:
  release:
    types: [published]

env:
  REGISTRY: ghcr.io
  IMAGE_NAME: ${{ github.repository }}

jobs:
  test-and-release:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout repository
        uses: actions/checkout@v4

      - name: Set up Docker Buildx
        uses: docker/setup-buildx-action@v3

      - name: Login to GitHub Container Registry
        uses: docker/login-action@v3
        with:
          registry: ${{ env.REGISTRY }}
          username: ${{ github.actor }}
          password: ${{ secrets.GITHUB_TOKEN }}

      - name: Run tests
        run: make test

      - name: Build images
        run: make build
        env:
          DOCKER_BUILDKIT: 1

      - name: Tag and push release images
        run: |
          VERSION=${{ github.event.release.tag_name }}
          for image in $(docker images --format "{{.Repository}}:{{.Tag}}" | grep ${IMAGE_NAME}); do
            docker tag ${image} ${image}:${VERSION}
            docker push ${image}:${VERSION}
          done

      - name: Create release notes
        uses: softprops/action-gh-release@v1
        with:
          files: |
            README.md
            CHANGELOG.md
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

2

.gitignore

vendored

@@ -1,3 +1 @@
_out
.git
.idea

6

Makefile

@@ -3,8 +3,6 @@
build:
	make -C packages/apps/http-cache image
	make -C packages/apps/kubernetes image
	make -C packages/system/cilium image
	make -C packages/system/kubeovn image
	make -C packages/system/dashboard image
	make -C packages/core/installer image
	make manifests
@@ -20,8 +18,6 @@ repos:
	make -C packages/system repo
	make -C packages/apps repo
	make -C packages/extra repo
	mkdir -p _out/logos
	cp ./packages/apps/*/logos/*.svg ./packages/extra/*/logos/*.svg _out/logos/

assets:
	make -C packages/core/installer/ assets
	make -C packages/core/talos/ assets

553

README.md

@@ -10,7 +10,7 @@

|

||||

|

||||

# Cozystack

|

||||

|

||||

**Cozystack** is a free PaaS platform and framework for building clouds.

|

||||

**Cozystack** is an open-source **PaaS platform** for cloud providers.

|

||||

|

||||

With Cozystack, you can transform your bunch of servers into an intelligent system with a simple REST API for spawning Kubernetes clusters, Database-as-a-Service, virtual machines, load balancers, HTTP caching services, and other services with ease.

|

||||

|

||||

@@ -18,55 +18,548 @@ You can use Cozystack to build your own cloud or to provide a cost-effective dev

|

||||

|

||||

## Use-Cases

|

||||

|

||||

* [**Using Cozystack to build public cloud**](https://cozystack.io/docs/use-cases/public-cloud/)

|

||||

You can use Cozystack as backend for a public cloud

|

||||

### As a backend for a public cloud

|

||||

|

||||

* [**Using Cozystack to build private cloud**](https://cozystack.io/docs/use-cases/private-cloud/)

|

||||

You can use Cozystack as platform to build a private cloud powered by Infrastructure-as-Code approach

|

||||

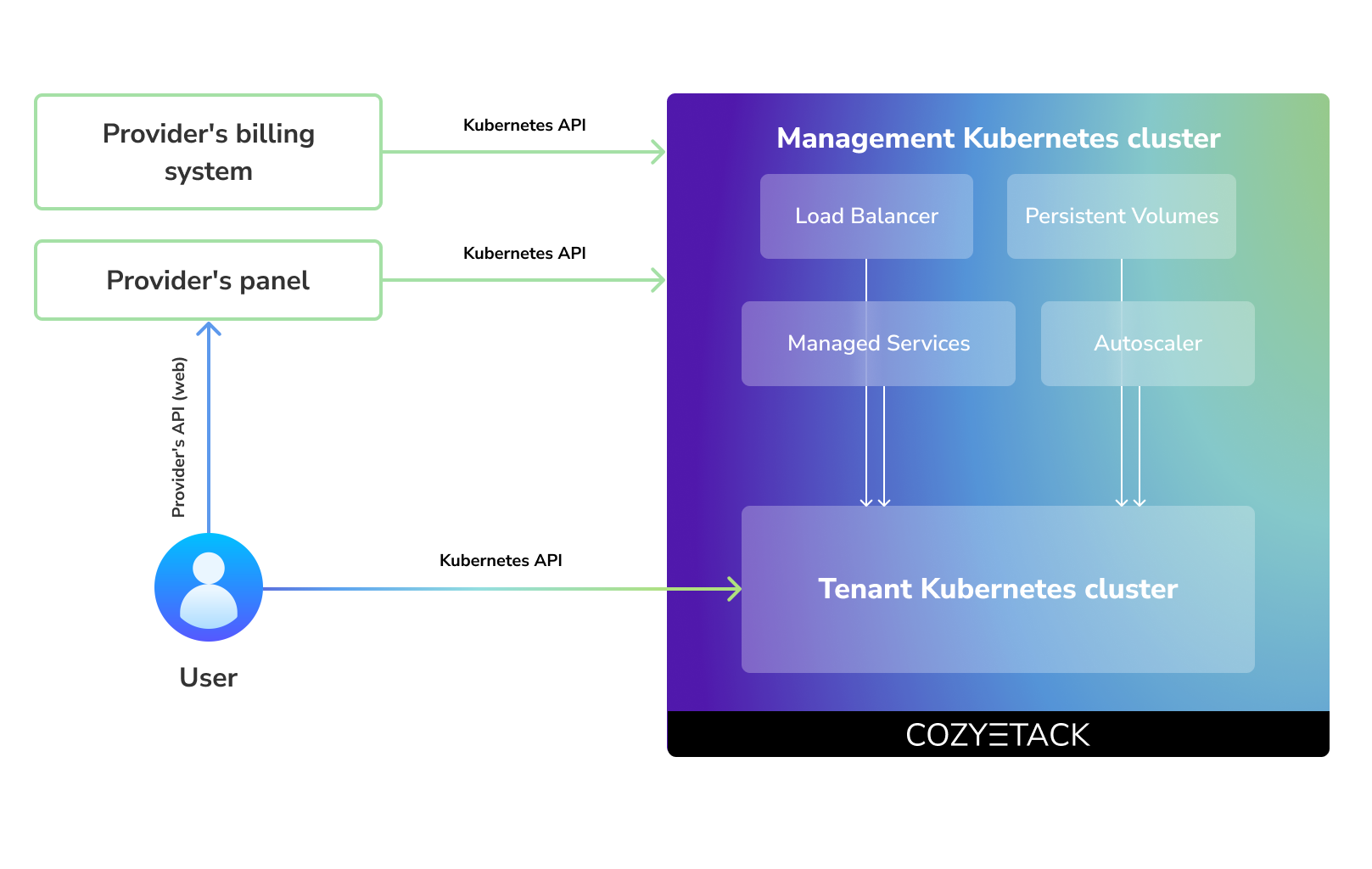

Cozystack positions itself as a kind of framework for building public clouds. The key word here is framework. In this case, it's important to understand that Cozystack is made for cloud providers, not for end users.

|

||||

|

||||

* [**Using Cozystack as Kubernetes distribution**](https://cozystack.io/docs/use-cases/kubernetes-distribution/)

|

||||

You can use Cozystack as Kubernetes distribution for Bare Metal

|

||||

Despite having a graphical interface, the current security model does not imply public user access to your management cluster.

|

||||

|

||||

Instead, end users get access to their own Kubernetes clusters, can order LoadBalancers and additional services from it, but they have no access and know nothing about your management cluster powered by Cozystack.

|

||||

|

||||

Thus, to integrate with your billing system, it's enough to teach your system to go to the management Kubernetes and place a YAML file signifying the service you're interested in. Cozystack will do the rest of the work for you.

|

||||

|

||||

|

||||

|

||||

### As a private cloud for Infrastructure-as-Code

|

||||

|

||||

One of the use cases is a self-portal for users within your company, where they can order the service they're interested in or a managed database.

|

||||

|

||||

You can implement best GitOps practices, where users will launch their own Kubernetes clusters and databases for their needs with a simple commit of configuration into your infrastructure Git repository.

|

||||

|

||||

Thanks to the standardization of the approach to deploying applications, you can expand the platform's capabilities using the functionality of standard Helm charts.

|

||||

|

||||

### As a Kubernetes distribution for Bare Metal

|

||||

|

||||

We created Cozystack primarily for our own needs, having vast experience in building reliable systems on bare metal infrastructure. This experience led to the formation of a separate boxed product, which is aimed at standardizing and providing a ready-to-use tool for managing your infrastructure.

|

||||

|

||||

Currently, Cozystack already covers a wide range of infrastructure tasks out of the box: provisioning bare metal servers, a ready-made monitoring system, fast and reliable storage, a network fabric that can interconnect with your existing infrastructure, and the ability to run virtual machines, databases, and much more.

|

||||

|

||||

All this makes Cozystack a convenient platform for delivering and launching your application on Bare Metal.

|

||||

|

||||

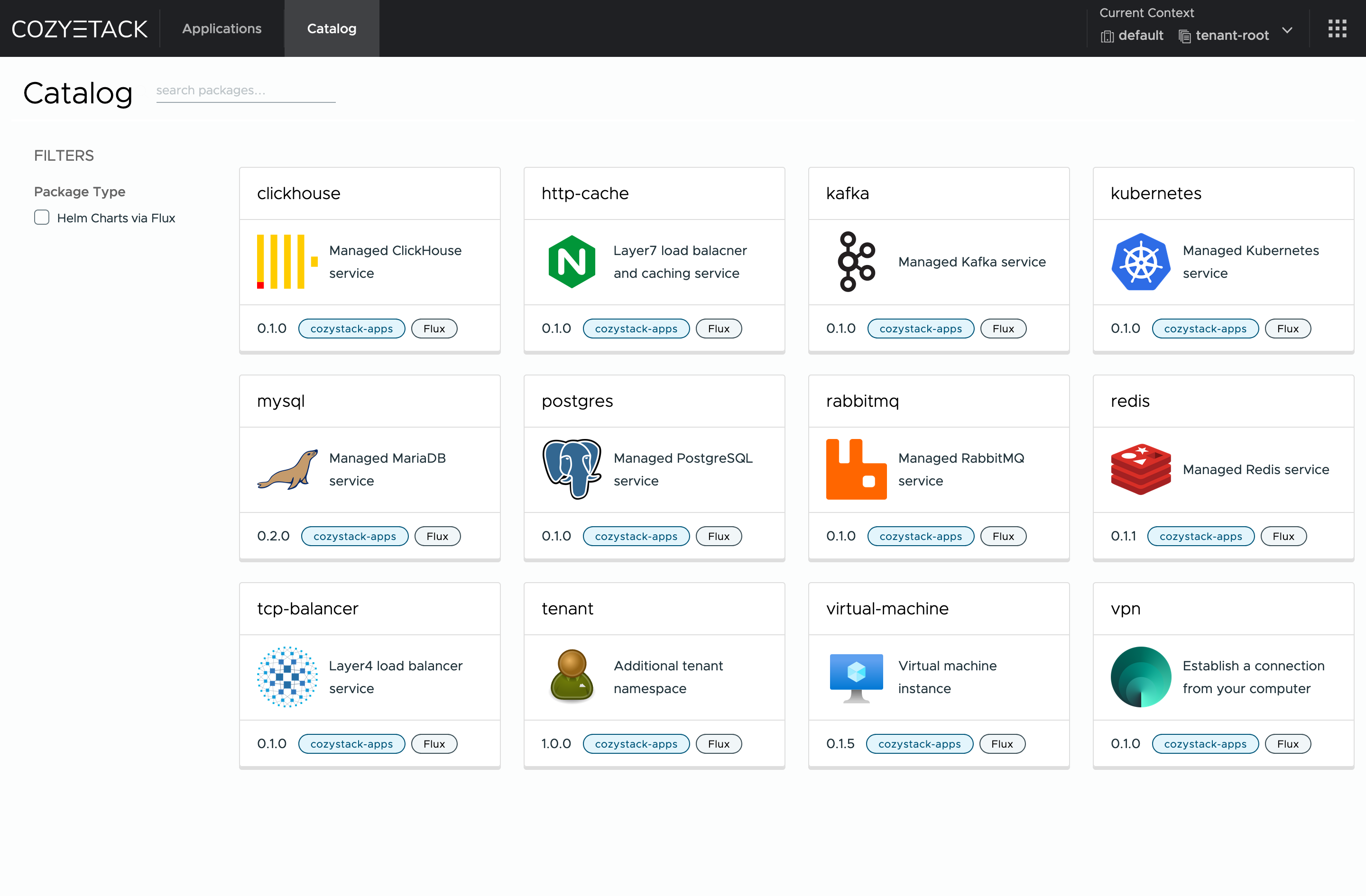

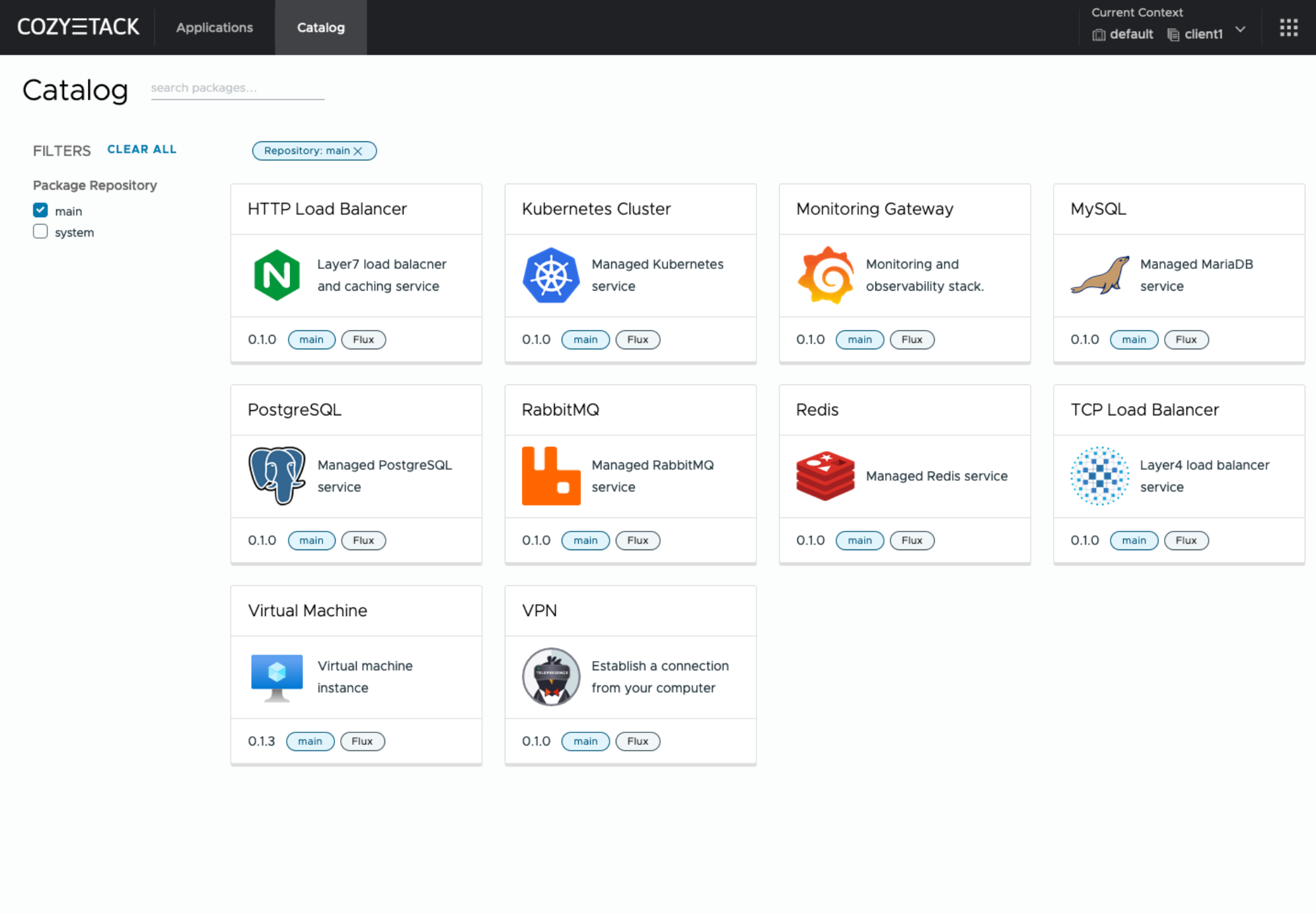

## Screenshot

|

||||

|

||||

|

||||

|

||||

|

||||

## Documentation

|

||||

## Core values

|

||||

|

||||

The documentation is located on the official [cozystack.io](https://cozystack.io) website.

|

||||

### Standardization and unification

|

||||

All components of the platform are based on open source tools and technologies which are widely known in the industry.

|

||||

|

||||

Read the [Get Started](https://cozystack.io/docs/get-started/) section for a quick start.

|

||||

### Collaborate, not compete

|

||||

If a feature being developed for the platform could be useful to an upstream project, it should be contributed to that upstream project rather than implemented within the platform.

|

||||

|

||||

If you encounter any difficulties, start with the [troubleshooting guide](https://cozystack.io/docs/troubleshooting/), and work your way through the process that we've outlined.

|

||||

### API-first

|

||||

Cozystack is based on Kubernetes and involves close interaction with its API. We don't aim to completely hide all the elements behind a pretty UI or any sort of customizations; instead, we provide a standard interface and teach users how to work with basic primitives. The web interface is used solely for deploying applications and quickly diving into the basic concepts of the platform.

|

||||

|

||||

## Versioning

|

||||

## Quick Start

|

||||

|

||||

Versioning adheres to the [Semantic Versioning](http://semver.org/) principles.

|

||||

A full list of the available releases is available in the GitHub repository's [Release](https://github.com/aenix-io/cozystack/releases) section.

|

||||

### Prepare infrastructure

|

||||

|

||||

- [Roadmap](https://github.com/orgs/aenix-io/projects/2)

|

||||

|

||||

## Contributions

|

||||

|

||||

|

||||

Contributions are highly appreciated and very welcome!

|

||||

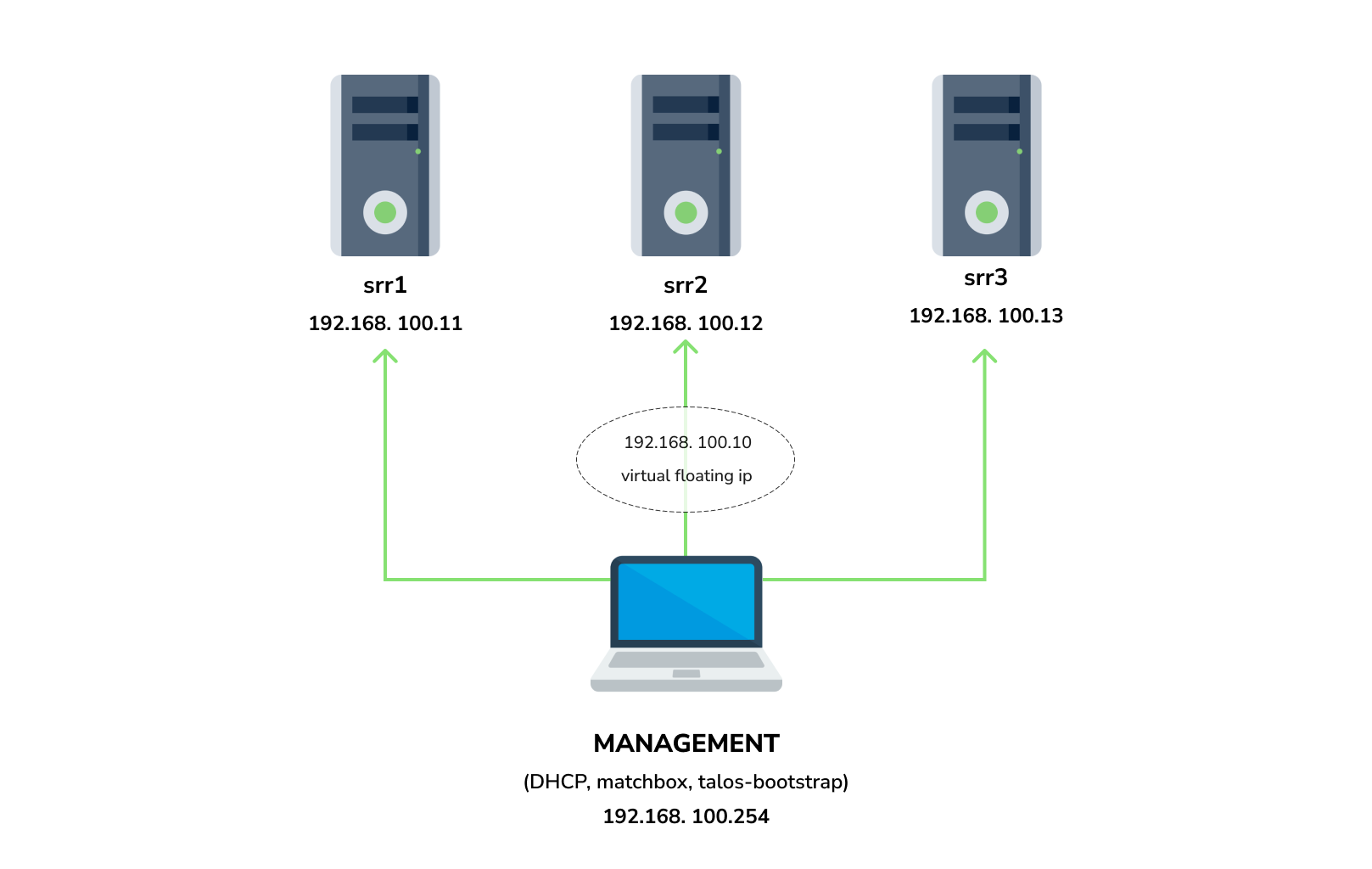

You need 3 physical servers or VMs with nested virtualisation:

|

||||

|

||||

In case of bugs, please check whether the issue has already been opened in the [GitHub Issues](https://github.com/aenix-io/cozystack/issues) section.

|

||||

In case it isn't, you can open a new one: a detailed report will help us to replicate it, assess it, and work on a fix.

|

||||

```

|

||||

CPU: 4 cores

|

||||

CPU model: host

|

||||

RAM: 8-16 GB

|

||||

HDD1: 32 GB

|

||||

HDD2: 100GB (raw)

|

||||

```

|

||||

|

||||

You can express your intention to work on the fix yourself.

|

||||

Commits are used to generate the changelog, and their author will be referenced in it.

|

||||

And one management VM or physical server connected to the same network.

|

||||

Any Linux system can be installed on it (e.g. Ubuntu is sufficient).

|

||||

|

||||

In case of **Feature Requests** please use the [Discussion's Feature Request section](https://github.com/aenix-io/cozystack/discussions/categories/feature-requests).

|

||||

**Note:** The VM must support the `x86-64-v2` architecture; most likely you can achieve this by setting the CPU model to `host`.

|

||||

|

||||

## License

|

||||

#### Install dependencies:

|

||||

|

||||

Cozystack is licensed under Apache 2.0.

|

||||

The code is provided as-is with no warranties.

|

||||

- `docker`

|

||||

- `talosctl`

|

||||

- `dialog`

|

||||

- `nmap`

|

||||

- `make`

|

||||

- `yq`

|

||||

- `kubectl`

|

||||

- `helm`

|

||||

|

||||

## Commercial Support

|

||||

### Netboot server

|

||||

|

||||

[**Ænix**](https://aenix.io) offers enterprise-grade support, available 24/7.

|

||||

Start matchbox with prebuilt Talos image for Cozystack:

|

||||

|

||||

We provide all types of assistance, including consultations, development of missing features, design, assistance with installation, and integration.

|

||||

```bash

|

||||

sudo docker run --name=matchbox -d --net=host ghcr.io/aenix-io/cozystack/matchbox:v1.6.4 \

|

||||

-address=:8080 \

|

||||

-log-level=debug

|

||||

```

|

||||

|

||||

[Contact us](https://aenix.io/contact/)

|

||||

Start DHCP-Server:

|

||||

```bash

|

||||

sudo docker run --name=dnsmasq -d --cap-add=NET_ADMIN --net=host quay.io/poseidon/dnsmasq \

|

||||

-d -q -p0 \

|

||||

--dhcp-range=192.168.100.3,192.168.100.254 \

|

||||

--dhcp-option=option:router,192.168.100.1 \

|

||||

--enable-tftp \

|

||||

--tftp-root=/var/lib/tftpboot \

|

||||

--dhcp-match=set:bios,option:client-arch,0 \

|

||||

--dhcp-boot=tag:bios,undionly.kpxe \

|

||||

--dhcp-match=set:efi32,option:client-arch,6 \

|

||||

--dhcp-boot=tag:efi32,ipxe.efi \

|

||||

--dhcp-match=set:efibc,option:client-arch,7 \

|

||||

--dhcp-boot=tag:efibc,ipxe.efi \

|

||||

--dhcp-match=set:efi64,option:client-arch,9 \

|

||||

--dhcp-boot=tag:efi64,ipxe.efi \

|

||||

--dhcp-userclass=set:ipxe,iPXE \

|

||||

--dhcp-boot=tag:ipxe,http://192.168.100.254:8080/boot.ipxe \

|

||||

--log-queries \

|

||||

--log-dhcp

|

||||

```

|

||||

|

||||

Where:

|

||||

- `192.168.100.3,192.168.100.254` is the range to allocate IPs from
- `192.168.100.1` is your gateway
- `192.168.100.254` is the address of your management server

|

||||

|

||||

Check status of containers:

|

||||

|

||||

```

|

||||

docker ps

|

||||

```

|

||||

|

||||

example output:

|

||||

|

||||

```console

|

||||

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

|

||||

22044f26f74d quay.io/poseidon/dnsmasq "/usr/sbin/dnsmasq -…" 6 seconds ago Up 5 seconds dnsmasq

|

||||

231ad81ff9e0 ghcr.io/aenix-io/cozystack/matchbox:v0.0.2 "/matchbox -address=…" 58 seconds ago Up 57 seconds matchbox

|

||||

```

|

||||

|

||||

### Bootstrap cluster

|

||||

|

||||

Write configuration for Cozystack:

|

||||

|

||||

```bash

|

||||

cat > patch.yaml <<\EOT

|

||||

machine:

|

||||

kubelet:

|

||||

nodeIP:

|

||||

validSubnets:

|

||||

- 192.168.100.0/24

|

||||

kernel:

|

||||

modules:

|

||||

- name: openvswitch

|

||||

- name: drbd

|

||||

parameters:

|

||||

- usermode_helper=disabled

|

||||

- name: zfs

|

||||

install:

|

||||

image: ghcr.io/aenix-io/cozystack/talos:v1.6.4

|

||||

files:

|

||||

- content: |

|

||||

[plugins]

|

||||

[plugins."io.containerd.grpc.v1.cri"]

|

||||

device_ownership_from_security_context = true

|

||||

path: /etc/cri/conf.d/20-customization.part

|

||||

op: create

|

||||

|

||||

cluster:

|

||||

network:

|

||||

cni:

|

||||

name: none

|

||||

podSubnets:

|

||||

- 10.244.0.0/16

|

||||

serviceSubnets:

|

||||

- 10.96.0.0/16

|

||||

EOT

|

||||

|

||||

cat > patch-controlplane.yaml <<\EOT

|

||||

cluster:

|

||||

allowSchedulingOnControlPlanes: true

|

||||

controllerManager:

|

||||

extraArgs:

|

||||

bind-address: 0.0.0.0

|

||||

scheduler:

|

||||

extraArgs:

|

||||

bind-address: 0.0.0.0

|

||||

apiServer:

|

||||

certSANs:

|

||||

- 127.0.0.1

|

||||

proxy:

|

||||

disabled: true

|

||||

discovery:

|

||||

enabled: false

|

||||

etcd:

|

||||

advertisedSubnets:

|

||||

- 192.168.100.0/24

|

||||

EOT

|

||||

```

|

||||

|

||||

Run [talos-bootstrap](https://github.com/aenix-io/talos-bootstrap/) to deploy cluster:

|

||||

|

||||

```bash

|

||||

talos-bootstrap install

|

||||

```

|

||||

|

||||

Save admin kubeconfig to access your Kubernetes cluster:

|

||||

```bash

|

||||

cp -i kubeconfig ~/.kube/config

|

||||

```

|

||||

|

||||

Check connection:

|

||||

```bash

|

||||

kubectl get ns

|

||||

```

|

||||

|

||||

example output:

|

||||

```console

|

||||

NAME STATUS AGE

|

||||

default Active 7m56s

|

||||

kube-node-lease Active 7m56s

|

||||

kube-public Active 7m56s

|

||||

kube-system Active 7m56s

|

||||

```

|

||||

|

||||

|

||||

**Note:** All nodes should currently show as "Not Ready". Don't worry, this is because you disabled the default CNI plugin in the previous step. Cozystack will install its own CNI plugin in the next step.

|

||||

|

||||

|

||||

### Install Cozystack

|

||||

|

||||

|

||||

Write the configuration for Cozystack:

|

||||

|

||||

**Note:** Please make sure that you specify the same settings as in the `patch.yaml` and `patch-controlplane.yaml` files.

|

||||

|

||||

```yaml

|

||||

cat > cozystack-config.yaml <<\EOT

|

||||

apiVersion: v1

|

||||

kind: ConfigMap

|

||||

metadata:

|

||||

name: cozystack

|

||||

namespace: cozy-system

|

||||

data:

|

||||

cluster-name: "cozystack"

|

||||

ipv4-pod-cidr: "10.244.0.0/16"

|

||||

ipv4-pod-gateway: "10.244.0.1"

|

||||

ipv4-svc-cidr: "10.96.0.0/16"

|

||||

ipv4-join-cidr: "100.64.0.0/16"

|

||||

EOT

|

||||

```
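The note above asks that the subnets match across files. A minimal sketch of how that could be checked, assuming the files were written exactly as shown; `has_cidr_everywhere` is a hypothetical helper, not part of Cozystack, and it only performs a plain substring match:

```bash
#!/usr/bin/env bash
# Sketch: succeed only if the given CIDR string appears in every listed file.
# has_cidr_everywhere is a hypothetical helper name used for illustration.
has_cidr_everywhere() {
  local cidr=$1; shift
  local f
  for f in "$@"; do
    # -F: match as a fixed string, -q: no output, only the exit status
    grep -qF -- "$cidr" "$f" || { echo "missing $cidr in $f" >&2; return 1; }
  done
}

# Usage, from the directory that holds the files written above:
#   has_cidr_everywhere 10.244.0.0/16 patch.yaml cozystack-config.yaml
#   has_cidr_everywhere 10.96.0.0/16  patch.yaml cozystack-config.yaml
```

A substring match is deliberately simple; it will not catch a correct CIDR placed under the wrong key.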

|

||||

|

||||

Create the namespace and install the Cozystack system components:

|

||||

|

||||

```bash

|

||||

kubectl create ns cozy-system

|

||||

kubectl apply -f cozystack-config.yaml

|

||||

kubectl apply -f manifests/cozystack-installer.yaml

|

||||

```

|

||||

|

||||

(optional) You can track the logs of installer:

|

||||

```bash

|

||||

kubectl logs -n cozy-system deploy/cozystack -f

|

||||

```

|

||||

|

||||

Wait for a while, then check the status of installation:

|

||||

```bash

|

||||

kubectl get hr -A

|

||||

```

|

||||

|

||||

Wait until all releases reach the `Ready` state:

|

||||

```console

|

||||

NAMESPACE NAME AGE READY STATUS

|

||||

cozy-cert-manager cert-manager 4m1s True Release reconciliation succeeded

|

||||

cozy-cert-manager cert-manager-issuers 4m1s True Release reconciliation succeeded

|

||||

cozy-cilium cilium 4m1s True Release reconciliation succeeded

|

||||

cozy-cluster-api capi-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-cluster-api capi-providers 4m1s True Release reconciliation succeeded

|

||||

cozy-dashboard dashboard 4m1s True Release reconciliation succeeded

|

||||

cozy-fluxcd cozy-fluxcd 4m1s True Release reconciliation succeeded

|

||||

cozy-grafana-operator grafana-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-kamaji kamaji 4m1s True Release reconciliation succeeded

|

||||

cozy-kubeovn kubeovn 4m1s True Release reconciliation succeeded

|

||||

cozy-kubevirt-cdi kubevirt-cdi 4m1s True Release reconciliation succeeded

|

||||

cozy-kubevirt-cdi kubevirt-cdi-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-kubevirt kubevirt 4m1s True Release reconciliation succeeded

|

||||

cozy-kubevirt kubevirt-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-linstor linstor 4m1s True Release reconciliation succeeded

|

||||

cozy-linstor piraeus-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-mariadb-operator mariadb-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-metallb metallb 4m1s True Release reconciliation succeeded

|

||||

cozy-monitoring monitoring 4m1s True Release reconciliation succeeded

|

||||

cozy-postgres-operator postgres-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-rabbitmq-operator rabbitmq-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-redis-operator redis-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-telepresence telepresence 4m1s True Release reconciliation succeeded

|

||||

cozy-victoria-metrics-operator victoria-metrics-operator 4m1s True Release reconciliation succeeded

|

||||

tenant-root tenant-root 4m1s True Release reconciliation succeeded

|

||||

```
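Scanning that table by eye gets tedious with this many releases. A small sketch that counts the rows whose `READY` column is not `True`, assuming the five-column layout shown above; `count_not_ready` is a hypothetical helper name:

```bash
#!/usr/bin/env bash
# Sketch: count HelmReleases that are not yet Ready.
# Reads `kubectl get hr -A` output on stdin; count_not_ready is a hypothetical helper.
count_not_ready() {
  # Skip the header row; column 4 is READY in the
  # NAMESPACE NAME AGE READY STATUS layout shown above.
  awk 'NR > 1 && $4 != "True" { n++ } END { print n + 0 }'
}

# Usage against a live cluster:
#   kubectl get hr -A | count_not_ready
```

A result of `0` means every listed release reports `True`.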

|

||||

|

||||

#### Configure Storage

|

||||

|

||||

Set up an alias to access LINSTOR:

|

||||

```bash

|

||||

alias linstor='kubectl exec -n cozy-linstor deploy/linstor-controller -- linstor'

|

||||

```

|

||||

|

||||

List your nodes:

|

||||

```bash

|

||||

linstor node list

|

||||

```

|

||||

|

||||

example output:

|

||||

|

||||

```console

|

||||

+-------------------------------------------------------+

|

||||

| Node | NodeType | Addresses | State |

|

||||

|=======================================================|

|

||||

| srv1 | SATELLITE | 192.168.100.11:3367 (SSL) | Online |

|

||||

| srv2 | SATELLITE | 192.168.100.12:3367 (SSL) | Online |

|

||||

| srv3 | SATELLITE | 192.168.100.13:3367 (SSL) | Online |

|

||||

+-------------------------------------------------------+

|

||||

```

|

||||

|

||||

List empty devices:

|

||||

|

||||

```bash

|

||||

linstor physical-storage list

|

||||

```

|

||||

|

||||

example output:

|

||||

```console

|

||||

+--------------------------------------------+

|

||||

| Size | Rotational | Nodes |

|

||||

|============================================|

|

||||

| 107374182400 | True | srv3[/dev/sdb] |

|

||||

| | | srv1[/dev/sdb] |

|

||||

| | | srv2[/dev/sdb] |

|

||||

+--------------------------------------------+

|

||||

```

|

||||

|

||||

|

||||

Create storage pools:

|

||||

|

||||

```bash

|

||||

linstor ps cdp lvm srv1 /dev/sdb --pool-name data --storage-pool data

|

||||

linstor ps cdp lvm srv2 /dev/sdb --pool-name data --storage-pool data

|

||||

linstor ps cdp lvm srv3 /dev/sdb --pool-name data --storage-pool data

|

||||

```
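With more than a few nodes, the three commands above can be generated rather than typed. A dry-run sketch that only prints the commands, assuming every node exposes the same empty device; `pool_cmds` is a hypothetical helper, and the node names and device are the ones from the example:

```bash
#!/usr/bin/env bash
# Sketch: print one `linstor ps cdp` command per node (dry run, nothing is executed).
# pool_cmds is a hypothetical helper; pool names match the commands above.
pool_cmds() {
  local device=$1; shift
  local node
  for node in "$@"; do
    echo "linstor ps cdp lvm $node $device --pool-name data --storage-pool data"
  done
}

# Usage: review the printed commands before running them.
#   pool_cmds /dev/sdb srv1 srv2 srv3
```

Printing first gives you a chance to confirm the device path against the `linstor physical-storage list` output.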

|

||||

|

||||

List storage pools:

|

||||

|

||||

```bash

|

||||

linstor sp l

|

||||

```

|

||||

|

||||

example output:

|

||||

|

||||

```console

|

||||

+-------------------------------------------------------------------------------------------------------------------------------------+

|

||||

| StoragePool | Node | Driver | PoolName | FreeCapacity | TotalCapacity | CanSnapshots | State | SharedName |

|

||||

|=====================================================================================================================================|

|

||||

| DfltDisklessStorPool | srv1 | DISKLESS | | | | False | Ok | srv1;DfltDisklessStorPool |

|

||||

| DfltDisklessStorPool | srv2 | DISKLESS | | | | False | Ok | srv2;DfltDisklessStorPool |

|

||||

| DfltDisklessStorPool | srv3 | DISKLESS | | | | False | Ok | srv3;DfltDisklessStorPool |

|

||||

| data | srv1 | LVM | data | 100.00 GiB | 100.00 GiB | False | Ok | srv1;data |

|

||||

| data | srv2 | LVM | data | 100.00 GiB | 100.00 GiB | False | Ok | srv2;data |

|

||||

| data | srv3 | LVM | data | 100.00 GiB | 100.00 GiB | False | Ok | srv3;data |

|

||||

+-------------------------------------------------------------------------------------------------------------------------------------+

|

||||

```

|

||||

|

||||

|

||||

Create default storage classes:

|

||||

```yaml

|

||||

kubectl create -f- <<EOT

|

||||

---

|

||||

apiVersion: storage.k8s.io/v1

|

||||

kind: StorageClass

|

||||

metadata:

|

||||

name: local

|

||||

annotations:

|

||||

storageclass.kubernetes.io/is-default-class: "true"

|

||||

provisioner: linstor.csi.linbit.com

|

||||

parameters:

|

||||

linstor.csi.linbit.com/storagePool: "data"

|

||||

linstor.csi.linbit.com/layerList: "storage"

|

||||

linstor.csi.linbit.com/allowRemoteVolumeAccess: "false"

|

||||

volumeBindingMode: WaitForFirstConsumer

|

||||

allowVolumeExpansion: true

|

||||

---

|

||||

apiVersion: storage.k8s.io/v1

|

||||

kind: StorageClass

|

||||

metadata:

|

||||

name: replicated

|

||||

provisioner: linstor.csi.linbit.com

|

||||

parameters:

|

||||

linstor.csi.linbit.com/storagePool: "data"

|

||||

linstor.csi.linbit.com/autoPlace: "3"

|

||||

linstor.csi.linbit.com/layerList: "drbd storage"

|

||||

linstor.csi.linbit.com/allowRemoteVolumeAccess: "true"

|

||||

property.linstor.csi.linbit.com/DrbdOptions/auto-quorum: suspend-io

|

||||

property.linstor.csi.linbit.com/DrbdOptions/Resource/on-no-data-accessible: suspend-io

|

||||

property.linstor.csi.linbit.com/DrbdOptions/Resource/on-suspended-primary-outdated: force-secondary

|

||||

property.linstor.csi.linbit.com/DrbdOptions/Net/rr-conflict: retry-connect

|

||||

volumeBindingMode: WaitForFirstConsumer

|

||||

allowVolumeExpansion: true

|

||||

EOT

|

||||

```

|

||||

|

||||

List storage classes:

|

||||

|

||||

```bash

|

||||

kubectl get storageclasses

|

||||

```

|

||||

|

||||

example output:

|

||||

```console

|

||||

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

|

||||

local (default) linstor.csi.linbit.com Delete WaitForFirstConsumer true 11m

|

||||

replicated linstor.csi.linbit.com Delete WaitForFirstConsumer true 11m

|

||||

```

|

||||

|

||||

#### Configure Networking interconnection

|

||||

|

||||

To access your services, select a range of unused IPs, e.g. `192.168.100.200-192.168.100.250`

|

||||

|

||||

**Note:** These IPs should be in the same network as the nodes, or all the necessary routes to them should be in place.

|

||||

|

||||

Configure MetalLB to use and announce this range:

|

||||

```yaml

|

||||

kubectl create -f- <<EOT
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: cozystack
  namespace: cozy-metallb
spec:
  ipAddressPools:
  - cozystack
---
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: cozystack
  namespace: cozy-metallb
spec:
  addresses:
  - 192.168.100.200-192.168.100.250
  autoAssign: true
  avoidBuggyIPs: false
EOT

|

||||

```

|

||||

|

||||

#### Set up basic applications

|

||||

|

||||

Get the token from `tenant-root`:

|

||||

```bash

|

||||

kubectl get secret -n tenant-root tenant-root -o go-template='{{ printf "%s\n" (index .data "token" | base64decode) }}'

|

||||

```

|

||||

|

||||

Enable port forwarding to cozy-dashboard:

|

||||

```bash

|

||||

kubectl port-forward -n cozy-dashboard svc/dashboard 8080:80

|

||||

```

|

||||

|

||||

Open: http://localhost:8080/

|

||||

|

||||

- Select `tenant-root`
- Click the `Upgrade` button
- In `host`, enter the domain you want to use as the parent domain for all deployed applications

  **Note:**
  - If you have no domain yet, you can use `192.168.100.200.nip.io`, where `192.168.100.200` is the first IP address in your network address range.
  - Alternatively, you can leave the default value; however, you will need to modify your `/etc/hosts` every time you want to access a specific application.
- Set `etcd`, `monitoring` and `ingress` to the enabled position

|

||||

- Click Deploy
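
The nip.io suggestion in the steps above can be sketched as a one-line helper that derives the host value from the address range chosen for MetalLB. The function name `nip_host_from_range` is hypothetical; nip.io itself is a public wildcard DNS service that resolves `<ip>.nip.io` to `<ip>`.

```bash
#!/bin/sh
# Hypothetical helper (not part of Cozystack): print "<first-ip>.nip.io"
# for an address range written as "first-last".
nip_host_from_range() {
  # Strip everything from the first "-" onwards, leaving the first address.
  printf '%s.nip.io\n' "${1%%-*}"
}

# Usage:
#   nip_host_from_range 192.168.100.200-192.168.100.250
```

The result is the value to enter in the `host` field; application names are then used as subdomains of it.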

|

||||

|

||||

|

||||

Check that the persistent volumes have been provisioned:

|

||||

|

||||

```bash

|

||||

kubectl get pvc -n tenant-root

|

||||

```

|

||||

|

||||

example output:

|

||||

```console

|

||||

NAME                                     STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   VOLUMEATTRIBUTESCLASS   AGE
data-etcd-0                              Bound    pvc-4cbd29cc-a29f-453d-b412-451647cd04bf   10Gi       RWO            local          <unset>                 2m10s
data-etcd-1                              Bound    pvc-1579f95a-a69d-4a26-bcc2-b15ccdbede0d   10Gi       RWO            local          <unset>                 115s
data-etcd-2                              Bound    pvc-907009e5-88bf-4d18-91e7-b56b0dbfb97e   10Gi       RWO            local          <unset>                 91s
grafana-db-1                             Bound    pvc-7b3f4e23-228a-46fd-b820-d033ef4679af   10Gi       RWO            local          <unset>                 2m41s
grafana-db-2                             Bound    pvc-ac9b72a4-f40e-47e8-ad24-f50d843b55e4   10Gi       RWO            local          <unset>                 113s
vmselect-cachedir-vmselect-longterm-0    Bound    pvc-622fa398-2104-459f-8744-565eee0a13f1   2Gi        RWO            local          <unset>                 2m21s
vmselect-cachedir-vmselect-longterm-1    Bound    pvc-fc9349f5-02b2-4e25-8bef-6cbc5cc6d690   2Gi        RWO            local          <unset>                 2m21s
vmselect-cachedir-vmselect-shortterm-0   Bound    pvc-7acc7ff6-6b9b-4676-bd1f-6867ea7165e2   2Gi        RWO            local          <unset>                 2m41s
vmselect-cachedir-vmselect-shortterm-1   Bound    pvc-e514f12b-f1f6-40ff-9838-a6bda3580eb7   2Gi        RWO            local          <unset>                 2m40s
vmstorage-db-vmstorage-longterm-0        Bound    pvc-e8ac7fc3-df0d-4692-aebf-9f66f72f9fef   10Gi       RWO            local          <unset>                 2m21s
vmstorage-db-vmstorage-longterm-1        Bound    pvc-68b5ceaf-3ed1-4e5a-9568-6b95911c7c3a   10Gi       RWO            local          <unset>                 2m21s
vmstorage-db-vmstorage-shortterm-0       Bound    pvc-cee3a2a4-5680-4880-bc2a-85c14dba9380   10Gi       RWO            local          <unset>                 2m41s
vmstorage-db-vmstorage-shortterm-1       Bound    pvc-d55c235d-cada-4c4a-8299-e5fc3f161789   10Gi       RWO            local          <unset>                 2m41s

|

||||

```
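
With this many claims, scanning the `STATUS` column by eye is error-prone. As a hedged sketch, a small filter can list only the claims that are not yet `Bound`; the helper name `unbound_pvcs` is hypothetical and relies only on the column order shown above.

```bash
#!/bin/sh
# Hypothetical helper (not part of Cozystack): read the output of
# `kubectl get pvc` on stdin and print the names of claims whose
# STATUS column is anything other than "Bound".
unbound_pvcs() {
  awk 'NR > 1 && $2 != "Bound" { print $1 }'
}

# Usage (requires a configured kubectl):
#   kubectl get pvc -n tenant-root | unbound_pvcs
```

No output means every claim in the namespace is bound.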

|

||||

|

||||

Check that all pods are running:

|

||||

|

||||

|

||||

```bash

|

||||

kubectl get pod -n tenant-root

|

||||

```

|

||||

|

||||

example output:

|

||||

```console

|

||||

NAME                                           READY   STATUS    RESTARTS       AGE
etcd-0                                         1/1     Running   0              2m1s
etcd-1                                         1/1     Running   0              106s
etcd-2                                         1/1     Running   0              82s
grafana-db-1                                   1/1     Running   0              119s
grafana-db-2                                   1/1     Running   0              13s
grafana-deployment-74b5656d6-5dcvn             1/1     Running   0              90s
grafana-deployment-74b5656d6-q5589             1/1     Running   1 (105s ago)   111s
root-ingress-controller-6ccf55bc6d-pg79l       2/2     Running   0              2m27s
root-ingress-controller-6ccf55bc6d-xbs6x       2/2     Running   0              2m29s
root-ingress-defaultbackend-686bcbbd6c-5zbvp   1/1     Running   0              2m29s
vmalert-vmalert-644986d5c-7hvwk                2/2     Running   0              2m30s
vmalertmanager-alertmanager-0                  2/2     Running   0              2m32s
vmalertmanager-alertmanager-1                  2/2     Running   0              2m31s
vminsert-longterm-75789465f-hc6cz              1/1     Running   0              2m10s
vminsert-longterm-75789465f-m2v4t              1/1     Running   0              2m12s
vminsert-shortterm-78456f8fd9-wlwww            1/1     Running   0              2m29s
vminsert-shortterm-78456f8fd9-xg7cw            1/1     Running   0              2m28s
vmselect-longterm-0                            1/1     Running   0              2m12s
vmselect-longterm-1                            1/1     Running   0              2m12s
vmselect-shortterm-0                           1/1     Running   0              2m31s
vmselect-shortterm-1                           1/1     Running   0              2m30s
vmstorage-longterm-0                           1/1     Running   0              2m12s
vmstorage-longterm-1                           1/1     Running   0              2m12s
vmstorage-shortterm-0                          1/1     Running   0              2m32s
vmstorage-shortterm-1                          1/1     Running   0              2m31s

|

||||

```
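
A pod can be in the `Running` state while some of its containers are not yet ready, so both the `READY` and `STATUS` columns matter. The sketch below filters the listing down to pods that need attention; `not_ready_pods` is a hypothetical name, and the filter would also flag pods in the `Completed` state, which may be acceptable for jobs.

```bash
#!/bin/sh
# Hypothetical helper (not part of Cozystack): read the output of
# `kubectl get pod` on stdin and print the names of pods that are not
# in the Running state or whose READY count is below the total.
not_ready_pods() {
  awk 'NR > 1 {
    split($2, ready, "/")          # READY column looks like "1/2"
    if ($3 != "Running" || ready[1] != ready[2]) print $1
  }'
}

# Usage (requires a configured kubectl):
#   kubectl get pod -n tenant-root | not_ready_pods
```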

|

||||

|

||||

Now you can get the public IP of the ingress controller:

|

||||

```bash

|

||||

kubectl get svc -n tenant-root root-ingress-controller

|

||||

```

|

||||

|

||||

example output:

|

||||

```console

|

||||

NAME                      TYPE           CLUSTER-IP     EXTERNAL-IP       PORT(S)                      AGE
root-ingress-controller   LoadBalancer   10.96.16.141   192.168.100.200   80:31632/TCP,443:30113/TCP   3m33s

|

||||

```

|

||||

|

||||

Use `grafana.example.org` (served at 192.168.100.200) to access system monitoring, where `example.org` is the domain you specified for `tenant-root`.

|

||||

|

||||

- login: `admin`

|

||||

- password:

|

||||

|

||||

```bash

|

||||

kubectl get secret -n tenant-root grafana-admin-password -o go-template='{{ printf "%s\n" (index .data "password" | base64decode) }}'

|

||||

```
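
If the domain does not resolve yet, Grafana can still be reached through the ingress IP by sending the expected `Host` header, which is the same approach `hack/e2e.sh` uses for its final check. The following is a minimal sketch; the function names are hypothetical, and `-k` is used on the assumption that the ingress certificate is not trusted by the client.

```bash
#!/bin/sh
# Hypothetical helpers (not part of Cozystack).

# Build the Grafana host name for a given parent domain.
grafana_host() {
  printf 'grafana.%s\n' "$1"
}

# Request Grafana through the ingress IP with the expected Host header.
# -k skips TLS verification; -sS hides progress but still shows errors.
check_grafana() {
  ip=$1
  domain=$2
  curl -sS -k "https://$ip" -H "Host: $(grafana_host "$domain")"
}

# Usage (requires network access to the ingress IP):
#   check_grafana 192.168.100.200 example.org
```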

|

||||

|

||||

318

hack/e2e.sh

@@ -1,318 +0,0 @@

|

||||

#!/bin/bash

|

||||

if [ "$COZYSTACK_INSTALLER_YAML" = "" ]; then

|

||||

echo 'COZYSTACK_INSTALLER_YAML variable is not set!' >&2

|

||||

echo 'please set it with following command:' >&2

|

||||

echo >&2

|

||||

echo 'export COZYSTACK_INSTALLER_YAML=$(helm template -n cozy-system installer packages/core/installer)' >&2

|

||||

echo >&2

|

||||

exit 1

|

||||

fi

|

||||

|

||||

if [ "$(cat /proc/sys/net/ipv4/ip_forward)" != 1 ]; then

|

||||

echo "IPv4 forwarding is not enabled!" >&2

|

||||

echo 'please enable forwarding with the following command:' >&2

|

||||

echo >&2

|

||||

echo 'echo 1 > /proc/sys/net/ipv4/ip_forward' >&2

|

||||

echo >&2

|

||||

exit 1

|

||||

fi

|

||||

|

||||

set -x

|

||||

set -e

|

||||

|

||||

kill `cat srv1/qemu.pid srv2/qemu.pid srv3/qemu.pid` || true

|

||||

|

||||

ip link del cozy-br0 || true

|

||||

ip link add cozy-br0 type bridge

|

||||

ip link set cozy-br0 up

|

||||

ip addr add 192.168.123.1/24 dev cozy-br0

|

||||

|

||||

# Enable forward & masquerading

|

||||

echo 1 > /proc/sys/net/ipv4/ip_forward

|

||||

iptables -t nat -A POSTROUTING -s 192.168.123.0/24 -j MASQUERADE

|

||||

|

||||

rm -rf srv1 srv2 srv3

|

||||

mkdir -p srv1 srv2 srv3

|

||||

|

||||

# Prepare cloud-init

|

||||

for i in 1 2 3; do

|

||||

echo "local-hostname: srv$i" > "srv$i/meta-data"

|

||||

echo '#cloud-config' > "srv$i/user-data"

|

||||

cat > "srv$i/network-config" <<EOT

|

||||

version: 2

|

||||

ethernets:

|

||||

eth0:

|

||||

dhcp4: false

|

||||

addresses:

|

||||

- "192.168.123.1$i/26"

|

||||

gateway4: "192.168.123.1"

|

||||

nameservers:

|

||||

search: [cluster.local]

|

||||

addresses: [8.8.8.8]

|

||||

EOT

|

||||

|

||||

( cd srv$i && genisoimage \

|

||||

-output seed.img \

|

||||

-volid cidata -rational-rock -joliet \

|

||||

user-data meta-data network-config

|

||||

)

|

||||

done

|

||||

|

||||

# Prepare system drive

|

||||

if [ ! -f nocloud-amd64.raw ]; then

|

||||

wget https://github.com/aenix-io/cozystack/releases/latest/download/nocloud-amd64.raw.xz -O nocloud-amd64.raw.xz

|

||||

rm -f nocloud-amd64.raw

|

||||

xz --decompress nocloud-amd64.raw.xz

|

||||

fi

|

||||

for i in 1 2 3; do

|

||||

cp nocloud-amd64.raw srv$i/system.img

|

||||

qemu-img resize srv$i/system.img 20G

|

||||

done

|

||||

|

||||

# Prepare data drives

|

||||

for i in 1 2 3; do

|

||||

qemu-img create srv$i/data.img 100G

|

||||

done

|

||||

|

||||

# Prepare networking

|

||||

for i in 1 2 3; do

|

||||

ip link del cozy-srv$i || true

|

||||

ip tuntap add dev cozy-srv$i mode tap

|

||||

ip link set cozy-srv$i up

|

||||

ip link set cozy-srv$i master cozy-br0

|

||||

done

|

||||

|

||||

# Start VMs

|

||||

for i in 1 2 3; do

|

||||

qemu-system-x86_64 -machine type=pc,accel=kvm -cpu host -smp 4 -m 8192 \

|

||||

-device virtio-net,netdev=net0,mac=52:54:00:12:34:5$i -netdev tap,id=net0,ifname=cozy-srv$i,script=no,downscript=no \

|

||||

-drive file=srv$i/system.img,if=virtio,format=raw \

|

||||

-drive file=srv$i/seed.img,if=virtio,format=raw \

|

||||

-drive file=srv$i/data.img,if=virtio,format=raw \

|

||||

-display none -daemonize -pidfile srv$i/qemu.pid

|

||||

done

|

||||

|

||||

sleep 5

|

||||

|

||||

# Wait for VM to start up

|

||||

timeout 60 sh -c 'until nc -nzv 192.168.123.11 50000 && nc -nzv 192.168.123.12 50000 && nc -nzv 192.168.123.13 50000; do sleep 1; done'

|

||||

|

||||

cat > patch.yaml <<\EOT

|

||||

machine:

|

||||

kubelet:

|

||||

nodeIP:

|

||||

validSubnets:

|

||||

- 192.168.123.0/24

|

||||

extraConfig:

|

||||

maxPods: 512

|

||||

kernel:

|

||||

modules:

|

||||

- name: openvswitch

|

||||

- name: drbd

|

||||

parameters:

|

||||

- usermode_helper=disabled

|

||||

- name: zfs

|

||||

- name: spl

|

||||

install:

|

||||

image: ghcr.io/aenix-io/cozystack/talos:v1.7.1

|

||||

files:

|

||||

- content: |

|

||||

[plugins]

|

||||

[plugins."io.containerd.grpc.v1.cri"]

|

||||

device_ownership_from_security_context = true

|

||||

path: /etc/cri/conf.d/20-customization.part

|

||||

op: create

|

||||

|

||||

cluster:

|

||||

network:

|

||||

cni:

|

||||

name: none

|

||||

dnsDomain: cozy.local

|

||||

podSubnets:

|

||||

- 10.244.0.0/16

|

||||

serviceSubnets:

|

||||

- 10.96.0.0/16

|

||||

EOT

|

||||

|

||||

cat > patch-controlplane.yaml <<\EOT

|

||||

machine:

|

||||

network:

|

||||

interfaces:

|

||||

- interface: eth0

|

||||

vip:

|

||||

ip: 192.168.123.10

|

||||

cluster:

|

||||

allowSchedulingOnControlPlanes: true

|

||||

controllerManager:

|

||||

extraArgs:

|

||||

bind-address: 0.0.0.0

|

||||

scheduler:

|

||||

extraArgs:

|

||||

bind-address: 0.0.0.0

|

||||

apiServer:

|

||||

certSANs:

|

||||

- 127.0.0.1

|

||||

proxy:

|

||||

disabled: true

|

||||

discovery:

|

||||

enabled: false

|

||||

etcd:

|

||||

advertisedSubnets:

|

||||

- 192.168.123.0/24

|

||||

EOT

|

||||

|

||||

# Gen configuration

|

||||

if [ ! -f secrets.yaml ]; then

|

||||

talosctl gen secrets

|

||||

fi

|

||||

|

||||

rm -f controlplane.yaml worker.yaml talosconfig kubeconfig

|

||||

talosctl gen config --with-secrets secrets.yaml cozystack https://192.168.123.10:6443 --config-patch=@patch.yaml --config-patch-control-plane @patch-controlplane.yaml

|

||||

export TALOSCONFIG=$PWD/talosconfig

|

||||

|

||||

# Apply configuration

|

||||

talosctl apply -f controlplane.yaml -n 192.168.123.11 -e 192.168.123.11 -i

|

||||

talosctl apply -f controlplane.yaml -n 192.168.123.12 -e 192.168.123.12 -i

|

||||

talosctl apply -f controlplane.yaml -n 192.168.123.13 -e 192.168.123.13 -i

|

||||

|

||||

# Wait for VM to be configured

|

||||

timeout 60 sh -c 'until nc -nzv 192.168.123.11 50000 && nc -nzv 192.168.123.12 50000 && nc -nzv 192.168.123.13 50000; do sleep 1; done'

|

||||

|

||||

# Bootstrap

|

||||

talosctl bootstrap -n 192.168.123.11 -e 192.168.123.11

|

||||

|

||||

# Wait for etcd

|

||||

timeout 120 sh -c 'while talosctl etcd members -n 192.168.123.11,192.168.123.12,192.168.123.13 -e 192.168.123.10 2>&1 | grep "rpc error"; do sleep 1; done'

|

||||

|

||||

rm -f kubeconfig

|

||||

talosctl kubeconfig kubeconfig -e 192.168.123.10 -n 192.168.123.10

|

||||

export KUBECONFIG=$PWD/kubeconfig

|

||||

|

||||

# Wait for Kubernetes nodes to appear

|

||||

timeout 60 sh -c 'until [ $(kubectl get node -o name | wc -l) = 3 ]; do sleep 1; done'

|

||||

kubectl create ns cozy-system

|

||||

kubectl create -f - <<\EOT

|

||||

apiVersion: v1

|

||||

kind: ConfigMap

|

||||

metadata:

|

||||

name: cozystack

|

||||

namespace: cozy-system

|

||||

data:

|

||||

bundle-name: "paas-full"

|

||||

ipv4-pod-cidr: "10.244.0.0/16"

|

||||

ipv4-pod-gateway: "10.244.0.1"

|

||||

ipv4-svc-cidr: "10.96.0.0/16"

|

||||

ipv4-join-cidr: "100.64.0.0/16"

|

||||

EOT

|

||||

|

||||

#

|

||||

echo "$COZYSTACK_INSTALLER_YAML" | kubectl apply -f -

|

||||

|

||||

# wait for cozystack pod to start

|

||||

kubectl wait deploy --timeout=1m --for=condition=available -n cozy-system cozystack

|

||||

|

||||

# wait for helmreleases to appear

|

||||

timeout 60 sh -c 'until kubectl get hr -A | grep cozy; do sleep 1; done'

|

||||

|

||||

sleep 5

|

||||

|

||||

kubectl get hr -A | awk 'NR>1 {print "kubectl wait --timeout=15m --for=condition=ready -n " $1 " hr/" $2 " &"} END{print "wait"}' | sh -x

|

||||

# Wait for linstor controller

|

||||

kubectl wait deploy --timeout=5m --for=condition=available -n cozy-linstor linstor-controller

|

||||

|

||||

# Wait for all linstor nodes to become Online

|

||||

timeout 60 sh -c 'until [ $(kubectl exec -n cozy-linstor deploy/linstor-controller -- linstor node list | grep -c Online) = 3 ]; do sleep 1; done'

|

||||

|

||||

kubectl exec -n cozy-linstor deploy/linstor-controller -- linstor ps cdp zfs srv1 /dev/vdc --pool-name data --storage-pool data

|

||||

kubectl exec -n cozy-linstor deploy/linstor-controller -- linstor ps cdp zfs srv2 /dev/vdc --pool-name data --storage-pool data

|

||||

kubectl exec -n cozy-linstor deploy/linstor-controller -- linstor ps cdp zfs srv3 /dev/vdc --pool-name data --storage-pool data

|

||||

|

||||

kubectl create -f- <<EOT

|

||||

---

|

||||

apiVersion: storage.k8s.io/v1

|

||||

kind: StorageClass

|

||||

metadata:

|

||||

name: local

|

||||

annotations:

|

||||

storageclass.kubernetes.io/is-default-class: "true"

|

||||

provisioner: linstor.csi.linbit.com

|

||||

parameters:

|

||||

linstor.csi.linbit.com/storagePool: "data"

|

||||

linstor.csi.linbit.com/layerList: "storage"

|

||||

linstor.csi.linbit.com/allowRemoteVolumeAccess: "false"

|

||||

volumeBindingMode: WaitForFirstConsumer

|

||||

allowVolumeExpansion: true

|

||||

---

|

||||

apiVersion: storage.k8s.io/v1

|

||||

kind: StorageClass

|

||||

metadata:

|

||||

name: replicated

|

||||

provisioner: linstor.csi.linbit.com

|

||||

parameters:

|

||||

linstor.csi.linbit.com/storagePool: "data"

|

||||

linstor.csi.linbit.com/autoPlace: "3"

|

||||

linstor.csi.linbit.com/layerList: "drbd storage"

|

||||

linstor.csi.linbit.com/allowRemoteVolumeAccess: "true"

|

||||

property.linstor.csi.linbit.com/DrbdOptions/auto-quorum: suspend-io

|

||||

property.linstor.csi.linbit.com/DrbdOptions/Resource/on-no-data-accessible: suspend-io

|

||||

property.linstor.csi.linbit.com/DrbdOptions/Resource/on-suspended-primary-outdated: force-secondary

|

||||

property.linstor.csi.linbit.com/DrbdOptions/Net/rr-conflict: retry-connect

|

||||

volumeBindingMode: WaitForFirstConsumer

|

||||

allowVolumeExpansion: true

|

||||

EOT

|

||||

kubectl create -f- <<EOT

|

||||

---

|

||||

apiVersion: metallb.io/v1beta1

|

||||

kind: L2Advertisement

|

||||

metadata:

|

||||

name: cozystack

|

||||

namespace: cozy-metallb

|

||||

spec:

|

||||

ipAddressPools:

|

||||

- cozystack

|

||||

---

|

||||

apiVersion: metallb.io/v1beta1

|

||||

kind: IPAddressPool

|

||||

metadata:

|

||||

name: cozystack

|

||||

namespace: cozy-metallb

|

||||

spec:

|

||||

addresses:

|

||||

- 192.168.123.200-192.168.123.250

|

||||

autoAssign: true

|

||||

avoidBuggyIPs: false

|

||||

EOT

|

||||

|

||||

kubectl patch -n tenant-root hr/tenant-root --type=merge -p '{"spec":{ "values":{

|

||||

"host": "example.org",

|

||||

"ingress": true,

|

||||

"monitoring": true,

|

||||

"etcd": true

|

||||

}}}'

|

||||

|

||||

# Wait for HelmReleases to be created

|

||||

timeout 60 sh -c 'until kubectl get hr -n tenant-root etcd ingress monitoring tenant-root; do sleep 1; done'

|

||||

|

||||

# Wait for HelmReleases to be installed

|

||||

kubectl wait --timeout=2m --for=condition=ready -n tenant-root hr etcd ingress monitoring tenant-root

|

||||

|

||||

# Wait for nginx-ingress-controller

|

||||

timeout 60 sh -c 'until kubectl get deploy -n tenant-root root-ingress-controller; do sleep 1; done'

|

||||

kubectl wait --timeout=5m --for=condition=available -n tenant-root deploy root-ingress-controller

|

||||

|

||||

# Wait for etcd

|

||||

kubectl wait --timeout=5m --for=jsonpath=.status.readyReplicas=3 -n tenant-root sts etcd

|

||||

|

||||

# Wait for Victoria metrics

|

||||

kubectl wait --timeout=5m --for=condition=available deploy -n tenant-root vmalert-vmalert vminsert-longterm vminsert-shortterm

|

||||

kubectl wait --timeout=5m --for=jsonpath=.status.readyReplicas=2 -n tenant-root sts vmalertmanager-alertmanager vmselect-longterm vmselect-shortterm vmstorage-longterm vmstorage-shortterm

|

||||

|

||||

# Wait for grafana

|

||||

kubectl wait --timeout=5m --for=condition=ready -n tenant-root clusters.postgresql.cnpg.io grafana-db

|

||||

kubectl wait --timeout=5m --for=condition=available -n tenant-root deploy grafana-deployment

|

||||

|

||||

# Get IP of nginx-ingress

|

||||

ip=$(kubectl get svc -n tenant-root root-ingress-controller -o jsonpath='{.status.loadBalancer.ingress..ip}')

|

||||

|

||||

# Check Grafana

|

||||

curl -sS -k "https://$ip" -H 'Host: grafana.example.org' | grep Found

|

||||

@@ -20,28 +20,9 @@ miss_map=$(echo "$new_map" | awk 'NR==FNR { new_map[$1 " " $2] = $3; next } { if

|

||||

resolved_miss_map=$(

|

||||

echo "$miss_map" | while read chart version commit; do

|

||||

if [ "$commit" = HEAD ]; then

|

||||

line=$(awk '/^version:/ {print NR; exit}' "./$chart/Chart.yaml")

|

||||

change_commit=$(git --no-pager blame -L"$line",+1 -- "$chart/Chart.yaml" | awk '{print $1}')

|

||||

|

||||

if [ "$change_commit" = "00000000" ]; then

|

||||

# Not committed yet, use previous commit

|

||||

line=$(git show HEAD:"./$chart/Chart.yaml" | awk '/^version:/ {print NR; exit}')

|

||||

commit=$(git --no-pager blame -L"$line",+1 HEAD -- "$chart/Chart.yaml" | awk '{print $1}')

|

||||

if [ $(echo $commit | cut -c1) = "^" ]; then

|

||||

# Previous commit does not exist

|

||||

commit=$(echo $commit | cut -c2-)

|

||||

fi

|

||||

else

|

||||

# Committed, but versions_map wasn't updated

|

||||

line=$(git show HEAD:"./$chart/Chart.yaml" | awk '/^version:/ {print NR; exit}')

|

||||

change_commit=$(git --no-pager blame -L"$line",+1 HEAD -- "$chart/Chart.yaml" | awk '{print $1}')

|

||||

if [ $(echo $change_commit | cut -c1) = "^" ]; then

|

||||

# Previous commit does not exist

|

||||

commit=$(echo $change_commit | cut -c2-)

|

||||

else

|

||||

commit=$(git describe --always "$change_commit~1")

|

||||

fi

|

||||

fi

|

||||

line=$(git show HEAD:"./$chart/Chart.yaml" | awk '/^version:/ {print NR; exit}')

|

||||

change_commit=$(git --no-pager blame -L"$line",+1 HEAD -- "$chart/Chart.yaml" | awk '{print $1}')

|

||||

commit=$(git describe --always "$change_commit~1")

|

||||

fi

|

||||

echo "$chart $version $commit"

|

||||

done

|

||||

|

||||

22

hack/prepare_release.sh

Executable file

@@ -0,0 +1,22 @@

|

||||

#!/bin/sh

|

||||

set -e

|

||||

|

||||

if [ -z "$1" ]; then

|

||||

echo "Please pass version in the first argument"

|

||||

echo "Example: $0 v0.0.2"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

version=$1

|

||||

talos_version=$(awk '/^version:/ {print $2}' packages/core/installer/images/talos/profiles/installer.yaml)

|

||||

|

||||

set -x

|

||||

|

||||

sed -i "s|\(ghcr.io/aenix-io/cozystack/matchbox:\)v[^ ]\+|\1${talos_version}|g" README.md

|

||||

sed -i "s|\(ghcr.io/aenix-io/cozystack/talos:\)v[^ ]\+|\1${talos_version}|g" README.md

|

||||

|

||||

sed -i "/^TAG / s|=.*|= ${version}|" \

|

||||

packages/apps/http-cache/Makefile \

|

||||

packages/apps/kubernetes/Makefile \

|

||||

packages/core/installer/Makefile \

|

||||

packages/system/dashboard/Makefile

|

||||

@@ -15,6 +15,13 @@ metadata:

|

||||

namespace: cozy-system

|

||||

---

|

||||

# Source: cozy-installer/templates/cozystack.yaml

|

||||

apiVersion: v1

|

||||

kind: ServiceAccount

|

||||

metadata:

|

||||

name: cozystack

|

||||

namespace: cozy-system

|

||||

---

|

||||

# Source: cozy-installer/templates/cozystack.yaml

|

||||

apiVersion: rbac.authorization.k8s.io/v1

|

||||

kind: ClusterRoleBinding

|

||||

metadata:

|

||||

@@ -55,10 +62,7 @@ spec:

|

||||

matchLabels:

|

||||

app: cozystack

|

||||

strategy:

|

||||

type: RollingUpdate

|

||||

rollingUpdate:

|

||||

maxSurge: 0

|

||||

maxUnavailable: 1

|

||||

type: Recreate

|

||||

template:

|

||||

metadata:

|

||||

labels:

|

||||

@@ -68,26 +72,14 @@ spec:

|

||||

serviceAccountName: cozystack

|

||||

containers:

|

||||

- name: cozystack

|

||||

image: "ghcr.io/aenix-io/cozystack/cozystack:v0.9.0"

|

||||

image: "ghcr.io/aenix-io/cozystack/installer:v0.0.2"

|

||||

env:

|

||||

- name: KUBERNETES_SERVICE_HOST

|

||||

value: localhost

|

||||

- name: KUBERNETES_SERVICE_PORT

|

||||

value: "7445"

|

||||

- name: K8S_AWAIT_ELECTION_ENABLED

|

||||

value: "1"

|

||||

- name: K8S_AWAIT_ELECTION_NAME

|

||||

value: cozystack

|

||||

- name: K8S_AWAIT_ELECTION_LOCK_NAME

|

||||

value: cozystack

|

||||

- name: K8S_AWAIT_ELECTION_LOCK_NAMESPACE

|

||||

value: cozy-system

|

||||

- name: K8S_AWAIT_ELECTION_IDENTITY

|

||||

valueFrom:

|

||||

fieldRef:

|

||||

fieldPath: metadata.name

|

||||

- name: darkhttpd

|

||||

image: "ghcr.io/aenix-io/cozystack/cozystack:v0.9.0"

|

||||

image: "ghcr.io/aenix-io/cozystack/installer:v0.0.2"

|

||||

command:

|

||||

- /usr/bin/darkhttpd

|

||||

- /cozystack/assets

|

||||

@@ -100,6 +92,3 @@ spec:

|

||||

- key: "node.kubernetes.io/not-ready"

|

||||

operator: "Exists"

|

||||

effect: "NoSchedule"

|

||||

- key: "node.cilium.io/agent-not-ready"

|

||||

operator: "Exists"

|

||||

effect: "NoSchedule"

|

||||

|

||||

@@ -7,11 +7,11 @@ repo:

|

||||

awk '$$3 != "HEAD" {print "mkdir -p $(TMP)/" $$1 "-" $$2}' versions_map | sh -ex

|

||||

awk '$$3 != "HEAD" {print "git archive " $$3 " " $$1 " | tar -xf- --strip-components=1 -C $(TMP)/" $$1 "-" $$2 }' versions_map | sh -ex

|

||||

helm package -d "$(OUT)" $$(find . $(TMP) -mindepth 2 -maxdepth 2 -name Chart.yaml | awk 'sub("/Chart.yaml", "")' | sort -V)

|

||||

cd "$(OUT)" && helm repo index . --url http://cozystack.cozy-system.svc/repos/apps

|

||||

cd "$(OUT)" && helm repo index .

|

||||

rm -rf "$(TMP)"

|

||||

|

||||

fix-chartnames:

|

||||

find . -maxdepth 2 -name Chart.yaml | awk -F/ '{print $$2}' | while read i; do sed -i "s/^name: .*/name: $$i/" "$$i/Chart.yaml"; done

|

||||

find . -name Chart.yaml -maxdepth 2 | awk -F/ '{print $$2}' | while read i; do sed -i "s/^name: .*/name: $$i/" "$$i/Chart.yaml"; done

|

||||

|

||||

gen-versions-map: fix-chartnames

|

||||

../../hack/gen_versions_map.sh

|

||||

|

||||

@@ -1,3 +0,0 @@

|

||||

.helmignore

|

||||

/logos

|

||||

/Makefile

|

||||

@@ -1,25 +0,0 @@

|

||||

apiVersion: v2

|

||||

name: clickhouse

|

||||

description: Managed ClickHouse service

|

||||

icon: /logos/clickhouse.svg

|

||||

|

||||

# A chart can be either an 'application' or a 'library' chart.

|

||||

#

|

||||

# Application charts are a collection of templates that can be packaged into versioned archives

|

||||

# to be deployed.

|

||||

#

|

||||

# Library charts provide useful utilities or functions for the chart developer. They're included as

|

||||

# a dependency of application charts to inject those utilities and functions into the rendering

|

||||