mirror of

https://github.com/outbackdingo/cozystack.git

synced 2026-01-29 10:18:54 +00:00

Compare commits

13 Commits

| Author | SHA1 | Date | |
|---|---|---|---|
| | 5310bd3591 | | |
| | 88d2dcc3e1 | | |
| | b53e264c5e | | |
| | 0c92d25669 | | |
| | e17dcaa65e | | |
| | 85d4ed251d | | |
| | f1c01a0fe8 | | |
| | 2cff181279 | | |
| | 2e3555600d | | |
| | 98f488fcac | | |
| | 1c6de1ccf5 | | |
| | 235a2fcf47 | | |
| | 24151b09f3 | | |

2

.gitignore

vendored

@@ -1 +1,3 @@

|

||||

_out

|

||||

.git

|

||||

.idea

|

||||

553

README.md

@@ -10,7 +10,7 @@

|

||||

|

||||

# Cozystack

|

||||

|

||||

**Cozystack** is an open-source **PaaS platform** for cloud providers.

|

||||

**Cozystack** is a free PaaS platform and framework for building clouds.

|

||||

|

||||

With Cozystack, you can transform your bunch of servers into an intelligent system with a simple REST API for spawning Kubernetes clusters, Database-as-a-Service, virtual machines, load balancers, HTTP caching services, and other services with ease.

|

||||

|

||||

@@ -18,548 +18,55 @@ You can use Cozystack to build your own cloud or to provide a cost-effective dev

|

||||

|

||||

## Use-Cases

|

||||

|

||||

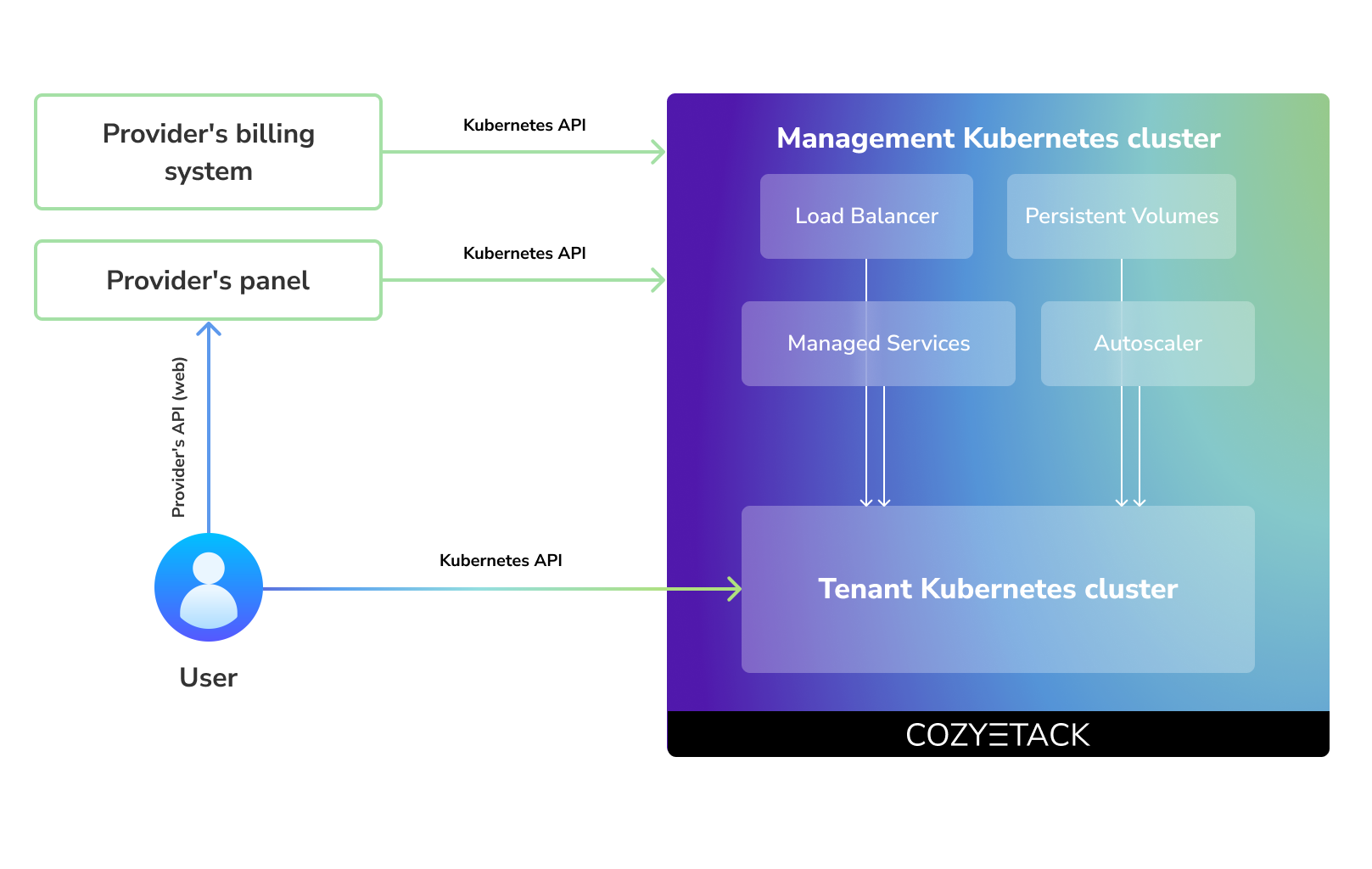

### As a backend for a public cloud

|

||||

* [**Using Cozystack to build public cloud**](https://cozystack.io/docs/use-cases/public-cloud/)

|

||||

You can use Cozystack as a backend for a public cloud

|

||||

|

||||

Cozystack positions itself as a kind of framework for building public clouds. The key word here is framework. In this case, it's important to understand that Cozystack is made for cloud providers, not for end users.

|

||||

* [**Using Cozystack to build private cloud**](https://cozystack.io/docs/use-cases/private-cloud/)

|

||||

You can use Cozystack as a platform to build a private cloud powered by an Infrastructure-as-Code approach

|

||||

|

||||

Despite having a graphical interface, the current security model does not imply public user access to your management cluster.

|

||||

|

||||

Instead, end users get access to their own Kubernetes clusters, can order LoadBalancers and additional services from it, but they have no access and know nothing about your management cluster powered by Cozystack.

|

||||

|

||||

Thus, to integrate with your billing system, it's enough to teach your system to go to the management Kubernetes and place a YAML file signifying the service you're interested in. Cozystack will do the rest of the work for you.

|

||||

|

||||

|

||||

|

||||

### As a private cloud for Infrastructure-as-Code

|

||||

|

||||

One of the use cases is a self-portal for users within your company, where they can order the service they're interested in or a managed database.

|

||||

|

||||

You can implement best GitOps practices, where users will launch their own Kubernetes clusters and databases for their needs with a simple commit of configuration into your infrastructure Git repository.

|

||||

|

||||

Thanks to the standardization of the approach to deploying applications, you can expand the platform's capabilities using the functionality of standard Helm charts.

|

||||

|

||||

### As a Kubernetes distribution for Bare Metal

|

||||

|

||||

We created Cozystack primarily for our own needs, having vast experience in building reliable systems on bare metal infrastructure. This experience led to the formation of a separate boxed product, which is aimed at standardizing and providing a ready-to-use tool for managing your infrastructure.

|

||||

|

||||

Currently, Cozystack already solves a huge scope of infrastructure tasks: starting from provisioning bare metal servers, having a ready monitoring system, fast and reliable storage, a network fabric with the possibility of interconnect with your infrastructure, the ability to run virtual machines, databases, and much more right out of the box.

|

||||

|

||||

All this makes Cozystack a convenient platform for delivering and launching your application on Bare Metal.

|

||||

* [**Using Cozystack as Kubernetes distribution**](https://cozystack.io/docs/use-cases/kubernetes-distribution/)

|

||||

You can use Cozystack as a Kubernetes distribution for Bare Metal

|

||||

|

||||

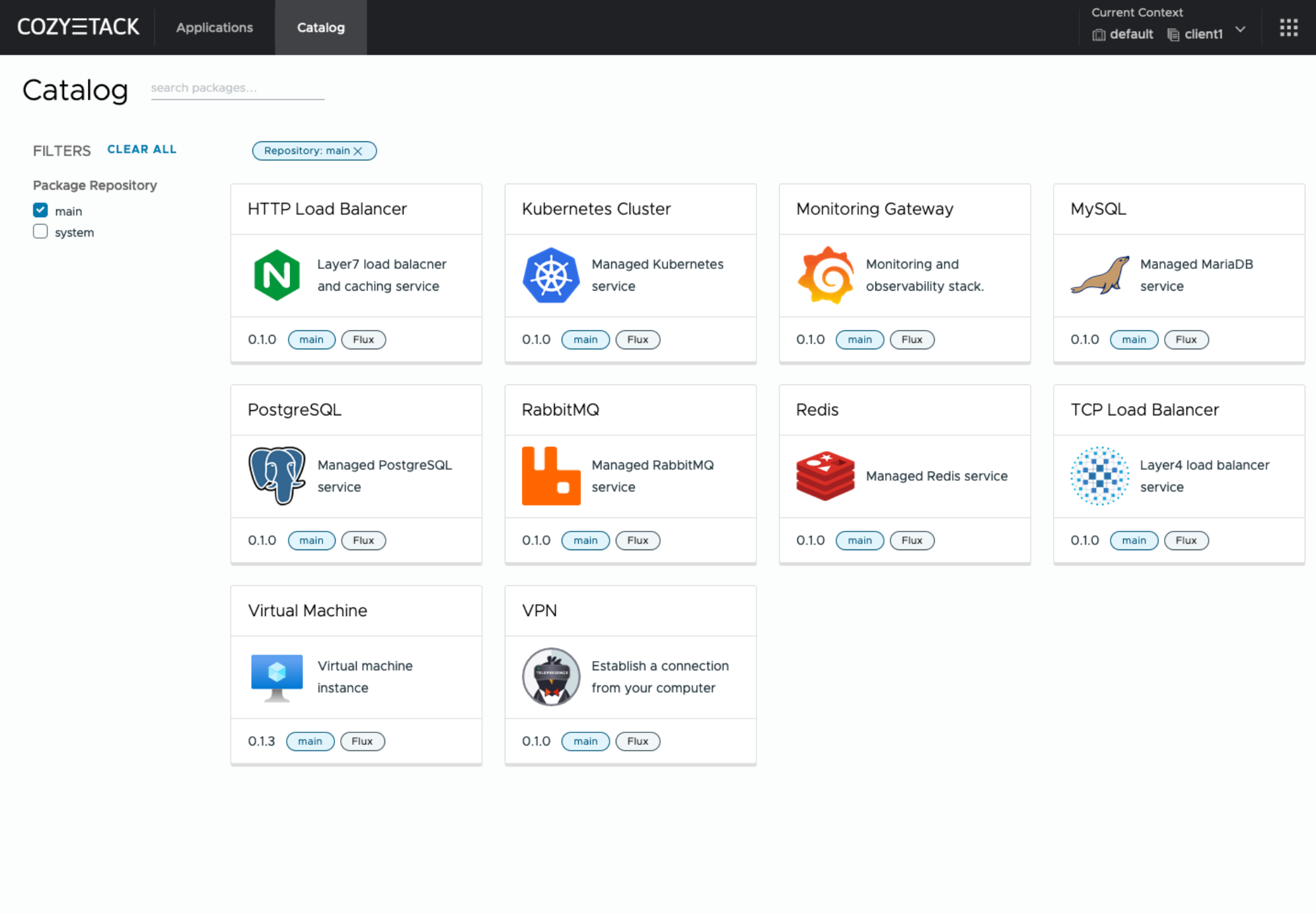

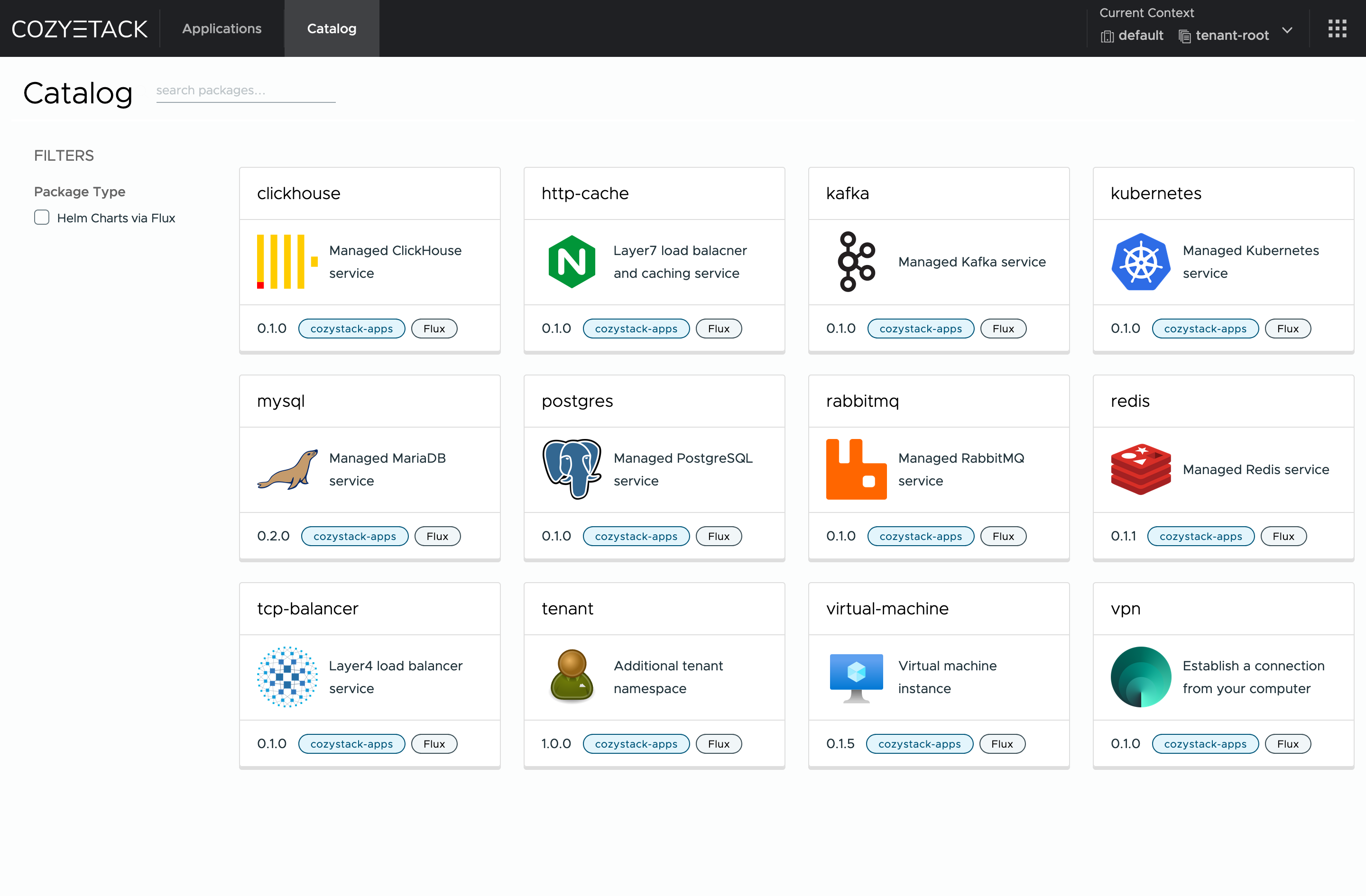

## Screenshot

|

||||

|

||||

|

||||

|

||||

|

||||

## Core values

|

||||

## Documentation

|

||||

|

||||

### Standardization and unification

|

||||

All components of the platform are based on open source tools and technologies which are widely known in the industry.

|

||||

The documentation is available on the official [cozystack.io](https://cozystack.io) website.

|

||||

|

||||

### Collaborate, not compete

|

||||

If a feature being developed for the platform could be useful to an upstream project, it should be contributed to that upstream project rather than implemented within the platform.

|

||||

Read [Get Started](https://cozystack.io/docs/get-started/) section for a quick start.

|

||||

|

||||

### API-first

|

||||

Cozystack is based on Kubernetes and involves close interaction with its API. We don't aim to completely hide all the elements behind a pretty UI or any sort of customization; instead, we provide a standard interface and teach users how to work with basic primitives. The web interface is used solely for deploying applications and quickly diving into the basic concepts of the platform.

|

||||

If you encounter any difficulties, start with the [troubleshooting guide](https://cozystack.io/docs/troubleshooting/), and work your way through the process that we've outlined.

|

||||

|

||||

## Quick Start

|

||||

## Versioning

|

||||

|

||||

### Prepare infrastructure

|

||||

Versioning adheres to the [Semantic Versioning](http://semver.org/) principles.

|

||||

A full list of releases is available in the GitHub repository's [Release](https://github.com/aenix-io/cozystack/releases) section.

|

||||

|

||||

- [Roadmap](https://github.com/orgs/aenix-io/projects/2)

|

||||

|

||||

|

||||

## Contributions

|

||||

|

||||

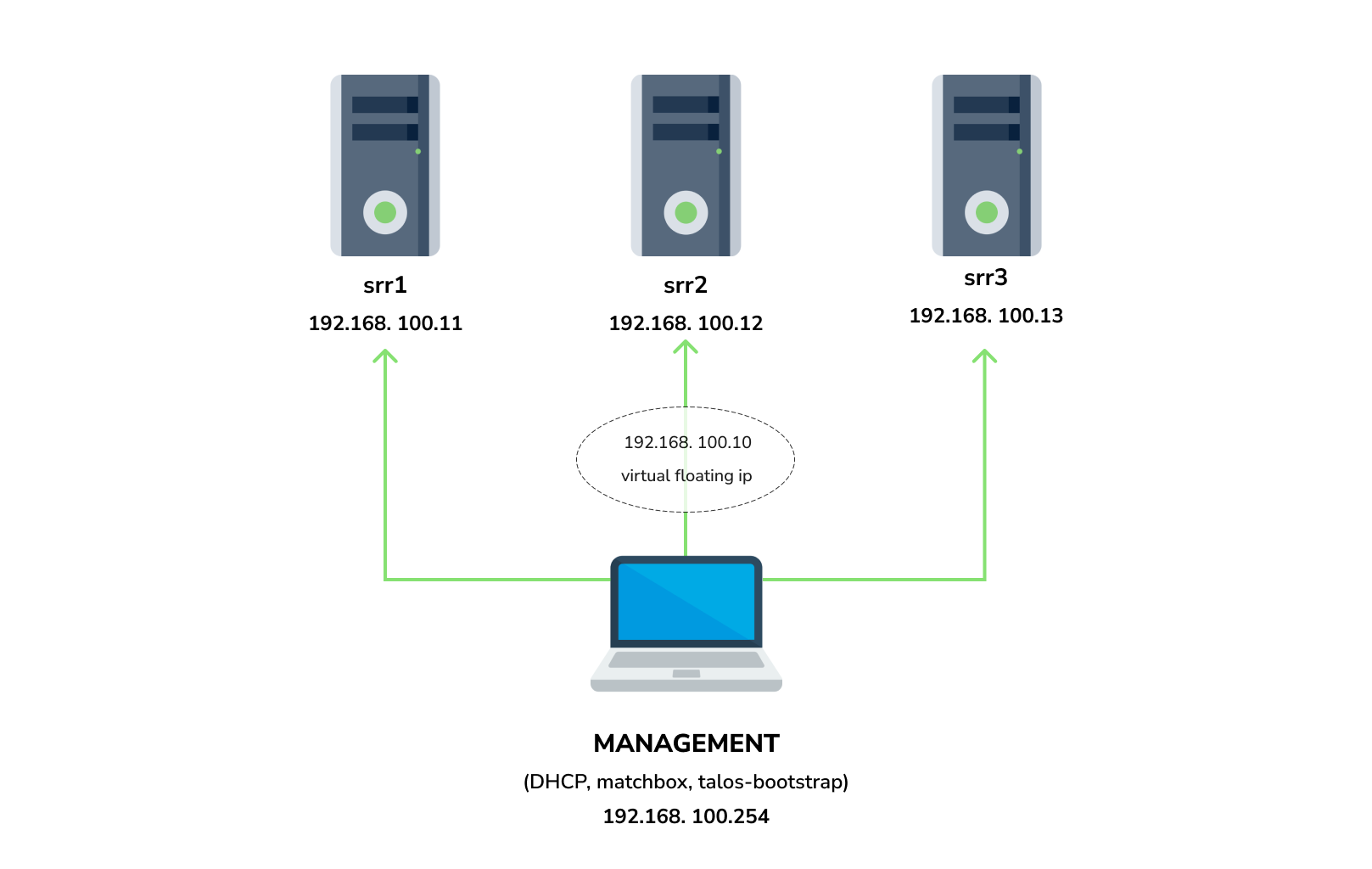

You need 3 physical servers or VMs with nested virtualisation:

|

||||

Contributions are highly appreciated and very welcome!

|

||||

|

||||

```
CPU: 4 cores
CPU model: host
RAM: 8-16 GB
HDD1: 32 GB
HDD2: 100GB (raw)
```

|

||||

If you find a bug, please check the [GitHub Issues](https://github.com/aenix-io/cozystack/issues) section to see whether it has already been reported.

|

||||

If it hasn't, you can open a new issue: a detailed report will help us to replicate it, assess it, and work on a fix.

|

||||

|

||||

And one management VM or physical server connected to the same network.

|

||||

Any Linux system can be installed on it (e.g. Ubuntu should be enough).

|

||||

You can also state your intention to work on the fix yourself.

|

||||

Commits are used to generate the changelog, and their author will be referenced in it.

|

||||

|

||||

**Note:** The VM should support the `x86-64-v2` architecture; you can most likely achieve this by setting the CPU model to `host`.
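One way to confirm the requirement from inside a VM is to look at the CPU flags the guest actually sees. The sketch below is an assumption, not part of the Cozystack tooling: the helper name `has_x86_64_v2` is hypothetical, and the flag names are the `/proc/cpuinfo` spellings of the x86-64-v2 feature set (`pni` is how Linux reports SSE3).

```bash
# Return 0 if the given space-separated CPU flag list contains every
# feature of the x86-64-v2 level (names as printed in /proc/cpuinfo).
has_x86_64_v2() {
  flags=" $1 "
  for f in cx16 lahf_lm popcnt pni sse4_1 sse4_2 ssse3; do
    case "$flags" in
      *" $f "*) ;;        # flag present, keep checking
      *) return 1 ;;      # a required flag is missing
    esac
  done
  return 0
}

# Usage on a Linux guest (not executed here):
# has_x86_64_v2 "$(grep -m1 '^flags' /proc/cpuinfo | cut -d: -f2)" && echo "x86-64-v2 OK"
```

If the check fails, the guest is probably using a generic emulated CPU model rather than `host`.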

|

||||

For **Feature Requests**, please use the [Discussion's Feature Request section](https://github.com/aenix-io/cozystack/discussions/categories/feature-requests).

|

||||

|

||||

#### Install dependencies:

|

||||

## License

|

||||

|

||||

- `docker`

|

||||

- `talosctl`

|

||||

- `dialog`

|

||||

- `nmap`

|

||||

- `make`

|

||||

- `yq`

|

||||

- `kubectl`

|

||||

- `helm`
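Before continuing, it may help to confirm that every tool from the list is on `PATH`. This is a minimal sketch; the function name `missing_tools` is hypothetical and only relies on the shell builtin `command -v`.

```bash
# Print the name of every given tool that cannot be found on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

# Usage with the dependency list above (not executed here):
# missing_tools docker talosctl dialog nmap make yq kubectl helm
```

An empty result means all listed dependencies were found.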

|

||||

Cozystack is licensed under Apache 2.0.

|

||||

The code is provided as-is with no warranties.

|

||||

|

||||

### Netboot server

|

||||

## Commercial Support

|

||||

|

||||

Start matchbox with prebuilt Talos image for Cozystack:

|

||||

[**Ænix**](https://aenix.io) offers enterprise-grade support, available 24/7.

|

||||

|

||||

```bash
sudo docker run --name=matchbox -d --net=host ghcr.io/aenix-io/cozystack/matchbox:v1.6.4 \
  -address=:8080 \
  -log-level=debug
```

|

||||

We provide all types of assistance, including consultations, development of missing features, design, assistance with installation, and integration.

|

||||

|

||||

Start the DHCP server:

|

||||

```bash
sudo docker run --name=dnsmasq -d --cap-add=NET_ADMIN --net=host quay.io/poseidon/dnsmasq \
  -d -q -p0 \
  --dhcp-range=192.168.100.3,192.168.100.254 \
  --dhcp-option=option:router,192.168.100.1 \
  --enable-tftp \
  --tftp-root=/var/lib/tftpboot \
  --dhcp-match=set:bios,option:client-arch,0 \
  --dhcp-boot=tag:bios,undionly.kpxe \
  --dhcp-match=set:efi32,option:client-arch,6 \
  --dhcp-boot=tag:efi32,ipxe.efi \
  --dhcp-match=set:efibc,option:client-arch,7 \
  --dhcp-boot=tag:efibc,ipxe.efi \
  --dhcp-match=set:efi64,option:client-arch,9 \
  --dhcp-boot=tag:efi64,ipxe.efi \
  --dhcp-userclass=set:ipxe,iPXE \
  --dhcp-boot=tag:ipxe,http://192.168.100.254:8080/boot.ipxe \
  --log-queries \
  --log-dhcp
```

|

||||

|

||||

Where:

- `192.168.100.3,192.168.100.254` is the range to allocate IPs from
- `192.168.100.1` is your gateway
- `192.168.100.254` is the address of your management server
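When adapting these values, it can be worth checking whether a statically assigned address falls inside the dynamic range. The helpers below (`ip_to_int`, `ip_in_range`) are hypothetical names for a small sketch in plain shell arithmetic. Note that with the example values the management server address `192.168.100.254` is the last address of the range, so you may prefer to end the range one address earlier.

```bash
# Convert a dotted IPv4 address to its 32-bit integer value.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1            # split the address into four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Return 0 if address $1 lies within the inclusive range $2..$3.
ip_in_range() {
  ip=$(ip_to_int "$1")
  lo=$(ip_to_int "$2")
  hi=$(ip_to_int "$3")
  [ "$ip" -ge "$lo" ] && [ "$ip" -le "$hi" ]
}

# Usage: warn if the management server address is part of the DHCP range.
if ip_in_range 192.168.100.254 192.168.100.3 192.168.100.254; then
  echo "192.168.100.254 is inside the DHCP range"
fi
```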

|

||||

|

||||

Check status of containers:

|

||||

|

||||

```

|

||||

docker ps

|

||||

```

|

||||

|

||||

example output:

|

||||

|

||||

```console

|

||||

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

|

||||

22044f26f74d quay.io/poseidon/dnsmasq "/usr/sbin/dnsmasq -…" 6 seconds ago Up 5 seconds dnsmasq

|

||||

231ad81ff9e0 ghcr.io/aenix-io/cozystack/matchbox:v0.0.2 "/matchbox -address=…" 58 seconds ago Up 57 seconds matchbox

|

||||

```

|

||||

|

||||

### Bootstrap cluster

|

||||

|

||||

Write configuration for Cozystack:

|

||||

|

||||

```yaml
cat > patch.yaml <<\EOT
machine:
  kubelet:
    nodeIP:
      validSubnets:
      - 192.168.100.0/24
  kernel:
    modules:
    - name: openvswitch
    - name: drbd
      parameters:
        - usermode_helper=disabled
    - name: zfs
  install:
    image: ghcr.io/aenix-io/cozystack/talos:v1.6.4
  files:
  - content: |
      [plugins]
        [plugins."io.containerd.grpc.v1.cri"]
          device_ownership_from_security_context = true
    path: /etc/cri/conf.d/20-customization.part
    op: create

cluster:
  network:
    cni:
      name: none
    podSubnets:
    - 10.244.0.0/16
    serviceSubnets:
    - 10.96.0.0/16
EOT

cat > patch-controlplane.yaml <<\EOT
cluster:
  allowSchedulingOnControlPlanes: true
  controllerManager:
    extraArgs:
      bind-address: 0.0.0.0
  scheduler:
    extraArgs:
      bind-address: 0.0.0.0
  apiServer:
    certSANs:
    - 127.0.0.1
  proxy:
    disabled: true
  discovery:
    enabled: false
  etcd:
    advertisedSubnets:
    - 192.168.100.0/24
EOT
```

|

||||

|

||||

Run [talos-bootstrap](https://github.com/aenix-io/talos-bootstrap/) to deploy cluster:

|

||||

|

||||

```bash

|

||||

talos-bootstrap install

|

||||

```

|

||||

|

||||

Save admin kubeconfig to access your Kubernetes cluster:

|

||||

```bash

|

||||

cp -i kubeconfig ~/.kube/config

|

||||

```

|

||||

|

||||

Check connection:

|

||||

```bash

|

||||

kubectl get ns

|

||||

```

|

||||

|

||||

example output:

|

||||

```console

|

||||

NAME STATUS AGE

|

||||

default Active 7m56s

|

||||

kube-node-lease Active 7m56s

|

||||

kube-public Active 7m56s

|

||||

kube-system Active 7m56s

|

||||

```

|

||||

|

||||

|

||||

**Note:** All nodes should currently show as "Not Ready". Don't worry about that: it is because you disabled the default CNI plugin in the previous step. Cozystack will install its own CNI plugin in the next step.

|

||||

|

||||

|

||||

### Install Cozystack

|

||||

|

||||

|

||||

Write the config for Cozystack:

|

||||

|

||||

**Note:** Please make sure that you use the same settings as specified in the `patch.yaml` and `patch-controlplane.yaml` files.

|

||||

|

||||

```yaml
cat > cozystack-config.yaml <<\EOT
apiVersion: v1
kind: ConfigMap
metadata:
  name: cozystack
  namespace: cozy-system
data:
  cluster-name: "cozystack"
  ipv4-pod-cidr: "10.244.0.0/16"
  ipv4-pod-gateway: "10.244.0.1"
  ipv4-svc-cidr: "10.96.0.0/16"
  ipv4-join-cidr: "100.64.0.0/16"
EOT
```
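Since the pod and service CIDRs have to agree between the Talos patch and the Cozystack ConfigMap, a quick textual check can catch a mismatch before installing. This is only a sketch: `cidr_in_all` is a hypothetical helper that does a literal `grep`, not a YAML-aware comparison.

```bash
# Return 0 only if the literal CIDR string $1 appears in every listed file.
cidr_in_all() {
  cidr=$1
  shift
  for file in "$@"; do
    grep -qF -- "$cidr" "$file" || return 1
  done
  return 0
}

# Usage with the files written above (not executed here):
# cidr_in_all 10.244.0.0/16 patch.yaml cozystack-config.yaml || echo "pod CIDR mismatch"
# cidr_in_all 10.96.0.0/16  patch.yaml cozystack-config.yaml || echo "service CIDR mismatch"
```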

|

||||

|

||||

Create the namespace and install the Cozystack system components:

|

||||

|

||||

```bash
kubectl create ns cozy-system
kubectl apply -f cozystack-config.yaml
kubectl apply -f manifests/cozystack-installer.yaml
```

|

||||

|

||||

(Optional) You can follow the installer logs:

|

||||

```bash

|

||||

kubectl logs -n cozy-system deploy/cozystack -f

|

||||

```

|

||||

|

||||

Wait for a while, then check the status of installation:

|

||||

```bash

|

||||

kubectl get hr -A

|

||||

```

|

||||

|

||||

Wait until all releases reach the `Ready` state:

|

||||

```console

|

||||

NAMESPACE NAME AGE READY STATUS

|

||||

cozy-cert-manager cert-manager 4m1s True Release reconciliation succeeded

|

||||

cozy-cert-manager cert-manager-issuers 4m1s True Release reconciliation succeeded

|

||||

cozy-cilium cilium 4m1s True Release reconciliation succeeded

|

||||

cozy-cluster-api capi-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-cluster-api capi-providers 4m1s True Release reconciliation succeeded

|

||||

cozy-dashboard dashboard 4m1s True Release reconciliation succeeded

|

||||

cozy-fluxcd cozy-fluxcd 4m1s True Release reconciliation succeeded

|

||||

cozy-grafana-operator grafana-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-kamaji kamaji 4m1s True Release reconciliation succeeded

|

||||

cozy-kubeovn kubeovn 4m1s True Release reconciliation succeeded

|

||||

cozy-kubevirt-cdi kubevirt-cdi 4m1s True Release reconciliation succeeded

|

||||

cozy-kubevirt-cdi kubevirt-cdi-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-kubevirt kubevirt 4m1s True Release reconciliation succeeded

|

||||

cozy-kubevirt kubevirt-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-linstor linstor 4m1s True Release reconciliation succeeded

|

||||

cozy-linstor piraeus-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-mariadb-operator mariadb-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-metallb metallb 4m1s True Release reconciliation succeeded

|

||||

cozy-monitoring monitoring 4m1s True Release reconciliation succeeded

|

||||

cozy-postgres-operator postgres-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-rabbitmq-operator rabbitmq-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-redis-operator redis-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-telepresence telepresence 4m1s True Release reconciliation succeeded

|

||||

cozy-victoria-metrics-operator victoria-metrics-operator 4m1s True Release reconciliation succeeded

|

||||

tenant-root tenant-root 4m1s True Release reconciliation succeeded

|

||||

```
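Rather than re-reading the table by eye, the wait can be scripted by counting rows whose `READY` column is not `True`. The sketch below assumes the column order shown in the example output (`READY` is the fourth field); `count_not_ready` is a hypothetical helper name.

```bash
# Read `kubectl get hr -A` output on stdin and print how many
# releases do not yet report READY=True (header line is skipped).
count_not_ready() {
  awk 'NR > 1 && $4 != "True" { n++ } END { print n + 0 }'
}

# Usage: poll until every release is ready (not executed here).
# while [ "$(kubectl get hr -A | count_not_ready)" -gt 0 ]; do sleep 10; done
```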

|

||||

|

||||

#### Configure Storage

|

||||

|

||||

Set up an alias to access LINSTOR:

|

||||

```bash

|

||||

alias linstor='kubectl exec -n cozy-linstor deploy/linstor-controller -- linstor'

|

||||

```

|

||||

|

||||

List your nodes:

|

||||

```bash

|

||||

linstor node list

|

||||

```

|

||||

|

||||

example output:

|

||||

|

||||

```console

|

||||

+-------------------------------------------------------+

|

||||

| Node | NodeType | Addresses | State |

|

||||

|=======================================================|

|

||||

| srv1 | SATELLITE | 192.168.100.11:3367 (SSL) | Online |

|

||||

| srv2 | SATELLITE | 192.168.100.12:3367 (SSL) | Online |

|

||||

| srv3 | SATELLITE | 192.168.100.13:3367 (SSL) | Online |

|

||||

+-------------------------------------------------------+

|

||||

```

|

||||

|

||||

List empty devices:

|

||||

|

||||

```bash

|

||||

linstor physical-storage list

|

||||

```

|

||||

|

||||

example output:

|

||||

```console

|

||||

+--------------------------------------------+

|

||||

| Size | Rotational | Nodes |

|

||||

|============================================|

|

||||

| 107374182400 | True | srv3[/dev/sdb] |

|

||||

| | | srv1[/dev/sdb] |

|

||||

| | | srv2[/dev/sdb] |

|

||||

+--------------------------------------------+

|

||||

```

|

||||

|

||||

|

||||

Create storage pools:

|

||||

|

||||

```bash
linstor ps cdp lvm srv1 /dev/sdb --pool-name data --storage-pool data
linstor ps cdp lvm srv2 /dev/sdb --pool-name data --storage-pool data
linstor ps cdp lvm srv3 /dev/sdb --pool-name data --storage-pool data
```

|

||||

|

||||

List storage pools:

|

||||

|

||||

```bash

|

||||

linstor sp l

|

||||

```

|

||||

|

||||

example output:

|

||||

|

||||

```console

|

||||

+-------------------------------------------------------------------------------------------------------------------------------------+

|

||||

| StoragePool | Node | Driver | PoolName | FreeCapacity | TotalCapacity | CanSnapshots | State | SharedName |

|

||||

|=====================================================================================================================================|

|

||||

| DfltDisklessStorPool | srv1 | DISKLESS | | | | False | Ok | srv1;DfltDisklessStorPool |

|

||||

| DfltDisklessStorPool | srv2 | DISKLESS | | | | False | Ok | srv2;DfltDisklessStorPool |

|

||||

| DfltDisklessStorPool | srv3 | DISKLESS | | | | False | Ok | srv3;DfltDisklessStorPool |

|

||||

| data | srv1 | LVM | data | 100.00 GiB | 100.00 GiB | False | Ok | srv1;data |

|

||||

| data | srv2 | LVM | data | 100.00 GiB | 100.00 GiB | False | Ok | srv2;data |

|

||||

| data | srv3 | LVM | data | 100.00 GiB | 100.00 GiB | False | Ok | srv3;data |

|

||||

+-------------------------------------------------------------------------------------------------------------------------------------+

|

||||

```

|

||||

|

||||

|

||||

Create default storage classes:

|

||||

```yaml
kubectl create -f- <<EOT
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: linstor.csi.linbit.com
parameters:
  linstor.csi.linbit.com/storagePool: "data"
  linstor.csi.linbit.com/layerList: "storage"
  linstor.csi.linbit.com/allowRemoteVolumeAccess: "false"
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: replicated
provisioner: linstor.csi.linbit.com
parameters:
  linstor.csi.linbit.com/storagePool: "data"
  linstor.csi.linbit.com/autoPlace: "3"
  linstor.csi.linbit.com/layerList: "drbd storage"
  linstor.csi.linbit.com/allowRemoteVolumeAccess: "true"
  property.linstor.csi.linbit.com/DrbdOptions/auto-quorum: suspend-io
  property.linstor.csi.linbit.com/DrbdOptions/Resource/on-no-data-accessible: suspend-io
  property.linstor.csi.linbit.com/DrbdOptions/Resource/on-suspended-primary-outdated: force-secondary
  property.linstor.csi.linbit.com/DrbdOptions/Net/rr-conflict: retry-connect
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
EOT
```

|

||||

|

||||

List storage classes:

|

||||

|

||||

```bash

|

||||

kubectl get storageclasses

|

||||

```

|

||||

|

||||

example output:

|

||||

```console

|

||||

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

|

||||

local (default) linstor.csi.linbit.com Delete WaitForFirstConsumer true 11m

|

||||

replicated linstor.csi.linbit.com Delete WaitForFirstConsumer true 11m

|

||||

```

|

||||

|

||||

#### Configure Networking interconnection

|

||||

|

||||

To access your services, select a range of unused IPs, e.g. `192.168.100.200-192.168.100.250`

|

||||

|

||||

**Note:** These IPs should be in the same network as the nodes, or all the necessary routes to them should be in place.

|

||||

|

||||

Configure MetalLB to use and announce this range:

|

||||

```yaml
kubectl create -f- <<EOT
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: cozystack
  namespace: cozy-metallb
spec:
  ipAddressPools:
  - cozystack
---
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: cozystack
  namespace: cozy-metallb
spec:
  addresses:
  - 192.168.100.200-192.168.100.250
  autoAssign: true
  avoidBuggyIPs: false
EOT
```

|

||||

|

||||

#### Setup basic applications

|

||||

|

||||

Get token from `tenant-root`:

|

||||

```bash

|

||||

kubectl get secret -n tenant-root tenant-root -o go-template='{{ printf "%s\n" (index .data "token" | base64decode) }}'

|

||||

```
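The go-template above simply base64-decodes the `token` field of the secret. For readers who prefer to do the decoding in the shell, the sketch below shows the same step; `decode_secret_value` is a hypothetical helper, and `-o jsonpath` is used instead of `-o go-template` in the usage line.

```bash
# Decode a base64-encoded secret value read from stdin.
decode_secret_value() {
  base64 -d
}

# Usage against the cluster (not executed here):
# kubectl get secret -n tenant-root tenant-root -o jsonpath='{.data.token}' | decode_secret_value
```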

|

||||

|

||||

Enable port forward to cozy-dashboard:

|

||||

```bash

|

||||

kubectl port-forward -n cozy-dashboard svc/dashboard 8080:80

|

||||

```

|

||||

|

||||

Open: http://localhost:8080/

|

||||

|

||||

- Select `tenant-root`
- Click the `Upgrade` button
- In `host`, enter the domain you wish to use as the parent domain for all deployed applications

  **Note:**
  - If you have no domain yet, you can use `192.168.100.200.nip.io`, where `192.168.100.200` is the first IP address in your network address range.
  - Alternatively, you can leave the default value; however, you'll need to modify your `/etc/hosts` every time you want to access a specific application.
- Set `etcd`, `monitoring` and `ingress` to the enabled position
- Click Deploy
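The nip.io convention mentioned in the note maps any name ending in `<ip>.nip.io` back to that IP, so application hostnames can be derived mechanically. A small sketch, with `nip_host` as a hypothetical helper name:

```bash
# Build a nip.io hostname: nip_host <prefix-or-empty> <ipv4>
nip_host() {
  if [ -n "$1" ]; then
    printf '%s.%s.nip.io\n' "$1" "$2"
  else
    printf '%s.nip.io\n' "$2"
  fi
}

# Usage: the parent domain for `host`, and a hostname under it.
nip_host "" 192.168.100.200
nip_host grafana 192.168.100.200
```

The first call prints the value to enter in `host`; the second shows what an application hostname under that domain would look like.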

|

||||

|

||||

|

||||

Check that the persistent volumes have been provisioned:

|

||||

|

||||

```bash

|

||||

kubectl get pvc -n tenant-root

|

||||

```

|

||||

|

||||

example output:

|

||||

```console

|

||||

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE

|

||||

data-etcd-0 Bound pvc-4cbd29cc-a29f-453d-b412-451647cd04bf 10Gi RWO local <unset> 2m10s

|

||||

data-etcd-1 Bound pvc-1579f95a-a69d-4a26-bcc2-b15ccdbede0d 10Gi RWO local <unset> 115s

|

||||

data-etcd-2 Bound pvc-907009e5-88bf-4d18-91e7-b56b0dbfb97e 10Gi RWO local <unset> 91s

|

||||

grafana-db-1 Bound pvc-7b3f4e23-228a-46fd-b820-d033ef4679af 10Gi RWO local <unset> 2m41s

|

||||

grafana-db-2 Bound pvc-ac9b72a4-f40e-47e8-ad24-f50d843b55e4 10Gi RWO local <unset> 113s

|

||||

vmselect-cachedir-vmselect-longterm-0 Bound pvc-622fa398-2104-459f-8744-565eee0a13f1 2Gi RWO local <unset> 2m21s

|

||||

vmselect-cachedir-vmselect-longterm-1 Bound pvc-fc9349f5-02b2-4e25-8bef-6cbc5cc6d690 2Gi RWO local <unset> 2m21s

|

||||

vmselect-cachedir-vmselect-shortterm-0 Bound pvc-7acc7ff6-6b9b-4676-bd1f-6867ea7165e2 2Gi RWO local <unset> 2m41s

|

||||

vmselect-cachedir-vmselect-shortterm-1 Bound pvc-e514f12b-f1f6-40ff-9838-a6bda3580eb7 2Gi RWO local <unset> 2m40s

|

||||

vmstorage-db-vmstorage-longterm-0 Bound pvc-e8ac7fc3-df0d-4692-aebf-9f66f72f9fef 10Gi RWO local <unset> 2m21s

|

||||

vmstorage-db-vmstorage-longterm-1 Bound pvc-68b5ceaf-3ed1-4e5a-9568-6b95911c7c3a 10Gi RWO local <unset> 2m21s

|

||||

vmstorage-db-vmstorage-shortterm-0 Bound pvc-cee3a2a4-5680-4880-bc2a-85c14dba9380 10Gi RWO local <unset> 2m41s

|

||||

vmstorage-db-vmstorage-shortterm-1 Bound pvc-d55c235d-cada-4c4a-8299-e5fc3f161789 10Gi RWO local <unset> 2m41s

|

||||

```

|

||||

|

||||

Check that all pods are running:

|

||||

|

||||

|

||||

```bash

|

||||

kubectl get pod -n tenant-root

|

||||

```

|

||||

|

||||

example output:

|

||||

```console

|

||||

NAME READY STATUS RESTARTS AGE

|

||||

etcd-0 1/1 Running 0 2m1s

|

||||

etcd-1 1/1 Running 0 106s

|

||||

etcd-2 1/1 Running 0 82s

|

||||

grafana-db-1 1/1 Running 0 119s

|

||||

grafana-db-2 1/1 Running 0 13s

|

||||

grafana-deployment-74b5656d6-5dcvn 1/1 Running 0 90s

|

||||

grafana-deployment-74b5656d6-q5589 1/1 Running 1 (105s ago) 111s

|

||||

root-ingress-controller-6ccf55bc6d-pg79l 2/2 Running 0 2m27s

|

||||

root-ingress-controller-6ccf55bc6d-xbs6x 2/2 Running 0 2m29s

|

||||

root-ingress-defaultbackend-686bcbbd6c-5zbvp 1/1 Running 0 2m29s

|

||||

vmalert-vmalert-644986d5c-7hvwk 2/2 Running 0 2m30s

|

||||

vmalertmanager-alertmanager-0 2/2 Running 0 2m32s

|

||||

vmalertmanager-alertmanager-1 2/2 Running 0 2m31s

|

||||

vminsert-longterm-75789465f-hc6cz 1/1 Running 0 2m10s

|

||||

vminsert-longterm-75789465f-m2v4t 1/1 Running 0 2m12s

|

||||

vminsert-shortterm-78456f8fd9-wlwww 1/1 Running 0 2m29s

|

||||

vminsert-shortterm-78456f8fd9-xg7cw 1/1 Running 0 2m28s

|

||||

vmselect-longterm-0 1/1 Running 0 2m12s

|

||||

vmselect-longterm-1 1/1 Running 0 2m12s

|

||||

vmselect-shortterm-0 1/1 Running 0 2m31s

|

||||

vmselect-shortterm-1 1/1 Running 0 2m30s

|

||||

vmstorage-longterm-0 1/1 Running 0 2m12s

|

||||

vmstorage-longterm-1 1/1 Running 0 2m12s

|

||||

vmstorage-shortterm-0 1/1 Running 0 2m32s

|

||||

vmstorage-shortterm-1 1/1 Running 0 2m31s

|

||||

```

|

||||

|

||||

Now you can get the public IP of the ingress controller:

|

||||

```

|

||||

kubectl get svc -n tenant-root root-ingress-controller

|

||||

```

|

||||

|

||||

example output:

|

||||

```console

|

||||

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

|

||||

root-ingress-controller LoadBalancer 10.96.16.141 192.168.100.200 80:31632/TCP,443:30113/TCP 3m33s

|

||||

```

|

||||

|

||||

Use `grafana.example.org` (under 192.168.100.200) to access system monitoring, where `example.org` is your domain specified for `tenant-root`

|

||||

|

||||

- login: `admin`

|

||||

- password:

|

||||

|

||||

```bash

|

||||

kubectl get secret -n tenant-root grafana-admin-password -o go-template='{{ printf "%s\n" (index .data "password" | base64decode) }}'

|

||||

```

|

||||

[Contact us](https://aenix.io/contact/)

|

||||

|

||||

@@ -12,9 +12,6 @@ talos_version=$(awk '/^version:/ {print $2}' packages/core/installer/images/talo

|

||||

|

||||

set -x

|

||||

|

||||

sed -i "s|\(ghcr.io/aenix-io/cozystack/matchbox:\)v[^ ]\+|\1${talos_version}|g" README.md

|

||||

sed -i "s|\(ghcr.io/aenix-io/cozystack/talos:\)v[^ ]\+|\1${talos_version}|g" README.md

|

||||

|

||||

sed -i "/^TAG / s|=.*|= ${version}|" \

|

||||

packages/apps/http-cache/Makefile \

|

||||

packages/apps/kubernetes/Makefile \

|

||||

|

||||

@@ -61,8 +61,6 @@ spec:

|

||||

selector:

|

||||

matchLabels:

|

||||

app: cozystack

|

||||

strategy:

|

||||

type: Recreate

|

||||

template:

|

||||

metadata:

|

||||

labels:

|

||||

@@ -72,14 +70,26 @@ spec:

|

||||

serviceAccountName: cozystack

|

||||

containers:

|

||||

- name: cozystack

|

||||

image: "ghcr.io/aenix-io/cozystack/installer:v0.0.2"

|

||||

image: "ghcr.io/aenix-io/cozystack/cozystack:v0.1.0"

|

||||

env:

|

||||

- name: KUBERNETES_SERVICE_HOST

|

||||

value: localhost

|

||||

- name: KUBERNETES_SERVICE_PORT

|

||||

value: "7445"

|

||||

- name: K8S_AWAIT_ELECTION_ENABLED

|

||||

value: "1"

|

||||

- name: K8S_AWAIT_ELECTION_NAME

|

||||

value: cozystack

|

||||

- name: K8S_AWAIT_ELECTION_LOCK_NAME

|

||||

value: cozystack

|

||||

- name: K8S_AWAIT_ELECTION_LOCK_NAMESPACE

|

||||

value: cozy-system

|

||||

- name: K8S_AWAIT_ELECTION_IDENTITY

|

||||

valueFrom:

|

||||

fieldRef:

|

||||

fieldPath: metadata.name

|

||||

- name: darkhttpd

|

||||

image: "ghcr.io/aenix-io/cozystack/installer:v0.0.2"

|

||||

image: "ghcr.io/aenix-io/cozystack/cozystack:v0.1.0"

|

||||

command:

|

||||

- /usr/bin/darkhttpd

|

||||

- /cozystack/assets

|

||||

|

||||

@@ -2,7 +2,7 @@ PUSH := 1

|

||||

LOAD := 0

|

||||

REGISTRY := ghcr.io/aenix-io/cozystack

|

||||

NGINX_CACHE_TAG = v0.1.0

|

||||

TAG := v0.0.2

|

||||

TAG := v0.2.0

|

||||

|

||||

image: image-nginx

|

||||

|

||||

|

||||

@@ -1,14 +1,4 @@

|

||||

{

|

||||

"containerimage.config.digest": "sha256:f4ad0559a74749de0d11b1835823bf9c95332962b0909450251d849113f22c19",

|

||||

"containerimage.descriptor": {

|

||||

"mediaType": "application/vnd.docker.distribution.manifest.v2+json",

|

||||

"digest": "sha256:3a0e8d791e0ccf681711766387ea9278e7d39f1956509cead2f72aa0001797ef",

|

||||

"size": 1093,

|

||||

"platform": {

|

||||

"architecture": "amd64",

|

||||

"os": "linux"

|

||||

}

|

||||

},

|

||||

"containerimage.digest": "sha256:3a0e8d791e0ccf681711766387ea9278e7d39f1956509cead2f72aa0001797ef",

|

||||

"image.name": "ghcr.io/aenix-io/cozystack/nginx-cache:v0.1.0,ghcr.io/aenix-io/cozystack/nginx-cache:v0.1.0-v0.0.2"

|

||||

"containerimage.config.digest": "sha256:318fd8d0d6f6127387042f6ad150e87023d1961c7c5059dd5324188a54b0ab4e",

|

||||

"containerimage.digest": "sha256:e3cf145238e6e45f7f13b9acaea445c94ff29f76a34ba9fa50828401a5a3cc68"

|

||||

}

|

||||

@@ -1,7 +1,7 @@

|

||||

PUSH := 1

|

||||

LOAD := 0

|

||||

REGISTRY := ghcr.io/aenix-io/cozystack

|

||||

TAG := v0.0.2

|

||||

TAG := v0.2.0

|

||||

UBUNTU_CONTAINER_DISK_TAG = v1.29.1

|

||||

|

||||

image: image-ubuntu-container-disk

|

||||

|

||||

@@ -1,4 +1,4 @@

|

||||

{

|

||||

"containerimage.config.digest": "sha256:e982cfa2320d3139ed311ae44bcc5ea18db7e4e76d2746e0af04c516288ff0f1",

|

||||

"containerimage.digest": "sha256:34f6aba5b5a2afbb46bbb891ef4ddc0855c2ffe4f9e5a99e8e553286ddd2c070"

|

||||

"containerimage.config.digest": "sha256:ee8968be63c7c45621ec45f3687211e0875acb24e8d9784e8d2ebcbf46a3538c",

|

||||

"containerimage.digest": "sha256:16c3c07e74212585786dc1f1ae31d3ab90a575014806193e8e37d1d7751cb084"

|

||||

}

|

||||

@@ -3,7 +3,7 @@ NAME=installer

|

||||

PUSH := 1

|

||||

LOAD := 0

|

||||

REGISTRY := ghcr.io/aenix-io/cozystack

|

||||

TAG := v0.0.2

|

||||

TAG := v0.2.0

|

||||

TALOS_VERSION=$(shell awk '/^version:/ {print $$2}' images/talos/profiles/installer.yaml)

|

||||

|

||||

show:

|

||||

@@ -18,19 +18,18 @@ diff:

|

||||

update:

|

||||

hack/gen-profiles.sh

|

||||

|

||||

image: image-installer image-talos image-matchbox

|

||||

image: image-cozystack image-talos image-matchbox

|

||||

|

||||

image-installer:

|

||||

docker buildx build -f images/installer/Dockerfile ../../.. \

|

||||

image-cozystack:

|

||||

docker buildx build -f images/cozystack/Dockerfile ../../.. \

|

||||

--provenance false \

|

||||

--tag $(REGISTRY)/installer:$(TAG) \

|

||||

--tag $(REGISTRY)/installer:$(TALOS_VERSION)-$(TAG) \

|

||||

--cache-from type=registry,ref=$(REGISTRY)/installer:$(TALOS_VERSION) \

|

||||

--tag $(REGISTRY)/cozystack:$(TAG) \

|

||||

--cache-from type=registry,ref=$(REGISTRY)/cozystack:$(TAG) \

|

||||

--cache-to type=inline \

|

||||

--metadata-file images/installer.json \

|

||||

--metadata-file images/cozystack.json \

|

||||

--push=$(PUSH) \

|

||||

--load=$(LOAD)

|

||||

echo "$(REGISTRY)/installer:$(TALOS_VERSION)" > images/installer.tag

|

||||

echo "$(REGISTRY)/cozystack:$(TAG)" > images/cozystack.tag

|

||||

|

||||

image-talos:

|

||||

test -f ../../../_out/assets/installer-amd64.tar || make talos-installer

|

||||

@@ -55,4 +54,7 @@ image-matchbox:

|

||||

assets: talos-iso

|

||||

|

||||

talos-initramfs talos-kernel talos-installer talos-iso:

|

||||

cat images/talos/profiles/$(subst talos-,,$@).yaml | docker run --rm -i -v $${PWD}/../../../_out/assets:/out -v /dev:/dev --privileged "ghcr.io/siderolabs/imager:$(TALOS_VERSION)" -

|

||||

mkdir -p ../../../_out/assets

|

||||

cat images/talos/profiles/$(subst talos-,,$@).yaml | \

|

||||

docker run --rm -i -v /dev:/dev --privileged "ghcr.io/siderolabs/imager:$(TALOS_VERSION)" --tar-to-stdout - | \

|

||||

tar -C ../../../_out/assets -xzf-

|

||||

|

||||

4

packages/core/installer/images/cozystack.json

Normal file

@@ -0,0 +1,4 @@

|

||||

{

|

||||

"containerimage.config.digest": "sha256:ec8a4983a663f06a1503507482667a206e83e0d8d3663dff60ced9221855d6b0",

|

||||

"containerimage.digest": "sha256:abb7b2fbc1f143c922f2a35afc4423a74b2b63c0bddfe620750613ed835aa861"

|

||||

}

|

||||

1

packages/core/installer/images/cozystack.tag

Normal file

@@ -0,0 +1 @@

|

||||

ghcr.io/aenix-io/cozystack/cozystack:v0.1.0

|

||||

@@ -1,14 +0,0 @@

|

||||

{

|

||||

"containerimage.config.digest": "sha256:5c7f51a9cbc945c13d52157035eba6ba4b6f3b68b76280f8e64b4f6ba239db1a",

|

||||

"containerimage.descriptor": {

|

||||

"mediaType": "application/vnd.docker.distribution.manifest.v2+json",

|

||||

"digest": "sha256:7cda3480faf0539ed4a3dd252aacc7a997645d3a390ece377c36cf55f9e57e11",

|

||||

"size": 2074,

|

||||

"platform": {

|

||||

"architecture": "amd64",

|

||||

"os": "linux"

|

||||

}

|

||||

},

|

||||

"containerimage.digest": "sha256:7cda3480faf0539ed4a3dd252aacc7a997645d3a390ece377c36cf55f9e57e11",

|

||||

"image.name": "ghcr.io/aenix-io/cozystack/installer:v0.0.2"

|

||||

}

|

||||

@@ -1 +0,0 @@

|

||||

ghcr.io/aenix-io/cozystack/installer:v0.0.2

|

||||

@@ -1,14 +1,4 @@

|

||||

{

|

||||

"containerimage.config.digest": "sha256:cb8cb211017e51f6eb55604287c45cbf6ed8add5df482aaebff3d493a11b5a76",

|

||||

"containerimage.descriptor": {

|

||||

"mediaType": "application/vnd.docker.distribution.manifest.v2+json",

|

||||

"digest": "sha256:3be72cdce2f4ab4886a70fb7b66e4518a1fe4ba0771319c96fa19a0d6f409602",

|

||||

"size": 1488,

|

||||

"platform": {

|

||||

"architecture": "amd64",

|

||||

"os": "linux"

|

||||

}

|

||||

},

|

||||

"containerimage.digest": "sha256:3be72cdce2f4ab4886a70fb7b66e4518a1fe4ba0771319c96fa19a0d6f409602",

|

||||

"image.name": "ghcr.io/aenix-io/cozystack/matchbox:v0.0.2"

|

||||

"containerimage.config.digest": "sha256:b869a6324f9c0e6d1dd48eee67cbe3842ee14efd59bdde477736ad2f90568ff7",

|

||||

"containerimage.digest": "sha256:c30b237c5fa4fbbe47e1aba56e8f99569fe865620aa1953f31fc373794123cd7"

|

||||

}

|

||||

@@ -1 +1 @@

|

||||

ghcr.io/aenix-io/cozystack/matchbox:v0.0.2

|

||||

ghcr.io/aenix-io/cozystack/matchbox:v1.6.4

|

||||

|

||||

@@ -50,7 +50,7 @@ spec:

|

||||

serviceAccountName: cozystack

|

||||

containers:

|

||||

- name: cozystack

|

||||

image: "{{ .Files.Get "images/installer.tag" | trim }}@{{ index (.Files.Get "images/installer.json" | fromJson) "containerimage.digest" }}"

|

||||

image: "{{ .Files.Get "images/cozystack.tag" | trim }}@{{ index (.Files.Get "images/cozystack.json" | fromJson) "containerimage.digest" }}"

|

||||

env:

|

||||

- name: KUBERNETES_SERVICE_HOST

|

||||

value: localhost

|

||||

@@ -69,7 +69,7 @@ spec:

|

||||

fieldRef:

|

||||

fieldPath: metadata.name

|

||||

- name: darkhttpd

|

||||

image: "{{ .Files.Get "images/installer.tag" | trim }}@{{ index (.Files.Get "images/installer.json" | fromJson) "containerimage.digest" }}"

|

||||

image: "{{ .Files.Get "images/cozystack.tag" | trim }}@{{ index (.Files.Get "images/cozystack.json" | fromJson) "containerimage.digest" }}"

|

||||

command:

|

||||

- /usr/bin/darkhttpd

|

||||

- /cozystack/assets

|

||||

|
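Note on the image reference in the hunk above: both containers now build their `image:` value from `images/cozystack.tag` plus the `containerimage.digest` field of `images/cozystack.json`, which yields a digest-pinned reference. The following is a minimal Python sketch of that composition, not the chart's implementation; the function name `pinned_image_ref` is hypothetical, and the sample values are copied from the two files added in this change set.

```python
import json


def pinned_image_ref(tag_file_text: str, metadata_json_text: str) -> str:
    """Join an image tag and its digest into a `repo:tag@sha256:...` reference."""
    # Mirrors the `trim` step applied to the .tag file in the template.
    tag = tag_file_text.strip()
    # Mirrors `fromJson` followed by `index ... "containerimage.digest"`.
    digest = json.loads(metadata_json_text)["containerimage.digest"]
    return f"{tag}@{digest}"


# Sample inputs copied from images/cozystack.tag and images/cozystack.json.
tag_text = "ghcr.io/aenix-io/cozystack/cozystack:v0.1.0\n"
metadata_text = json.dumps({
    "containerimage.config.digest": "sha256:ec8a4983a663f06a1503507482667a206e83e0d8d3663dff60ced9221855d6b0",
    "containerimage.digest": "sha256:abb7b2fbc1f143c922f2a35afc4423a74b2b63c0bddfe620750613ed835aa861",
})

print(pinned_image_ref(tag_text, metadata_text))
```

Because the digest is part of the reference, a container runtime resolves the image by content rather than by the mutable tag, so the tag mostly serves as a human-readable label.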

||||

@@ -16,4 +16,4 @@ namespaces-apply:

|

||||

helm template -n $(NAMESPACE) $(NAME) . --dry-run=server $(API_VERSIONS_FLAGS) -s templates/namespaces.yaml | kubectl apply -f-

|

||||

|

||||

diff:

|

||||

helm template -n $(NAMESPACE) $(NAME) . --dry-run=server $(API_VERSIONS_FLAGS) -s templates/namespaces.yaml | kubectl diff -f-

|

||||

helm template -n $(NAMESPACE) $(NAME) . --dry-run=server $(API_VERSIONS_FLAGS) | kubectl diff -f-

|

||||

|

||||

96

packages/core/platform/bundles/full-distro.yaml

Normal file

@@ -0,0 +1,96 @@

|

||||

{{- $cozyConfig := lookup "v1" "ConfigMap" "cozy-system" "cozystack" }}

|

||||

|

||||

releases:

|

||||

- name: cilium

|

||||

releaseName: cilium

|

||||

chart: cozy-cilium

|

||||

namespace: cozy-cilium

|

||||

privileged: true

|

||||

dependsOn: []

|

||||

|

||||

- name: fluxcd

|

||||

releaseName: fluxcd

|

||||

chart: cozy-fluxcd

|

||||

namespace: cozy-fluxcd

|

||||

dependsOn: [cilium]

|

||||

|

||||

- name: cert-manager

|

||||

releaseName: cert-manager

|

||||

chart: cozy-cert-manager

|

||||

namespace: cozy-cert-manager

|

||||

dependsOn: [cilium]

|

||||

|

||||

- name: cert-manager-issuers

|

||||

releaseName: cert-manager-issuers

|

||||

chart: cozy-cert-manager-issuers

|

||||

namespace: cozy-cert-manager

|

||||

dependsOn: [cilium,cert-manager]

|

||||

|

||||

- name: victoria-metrics-operator

|

||||

releaseName: victoria-metrics-operator

|

||||

chart: cozy-victoria-metrics-operator

|

||||

namespace: cozy-victoria-metrics-operator

|

||||

dependsOn: [cilium,cert-manager]

|

||||

|

||||

- name: monitoring

|

||||

releaseName: monitoring

|

||||

chart: cozy-monitoring

|

||||

namespace: cozy-monitoring

|

||||

privileged: true

|

||||

dependsOn: [cilium,victoria-metrics-operator]

|

||||

|

||||

- name: metallb

|

||||

releaseName: metallb

|

||||

chart: cozy-metallb

|

||||

namespace: cozy-metallb

|

||||

privileged: true

|

||||

dependsOn: [cilium]

|

||||

|

||||

- name: grafana-operator

|

||||

releaseName: grafana-operator

|

||||

chart: cozy-grafana-operator

|

||||

namespace: cozy-grafana-operator

|

||||

dependsOn: [cilium]

|

||||

|

||||

- name: mariadb-operator

|

||||

releaseName: mariadb-operator

|

||||

chart: cozy-mariadb-operator

|

||||

namespace: cozy-mariadb-operator

|

||||

dependsOn: [cilium,cert-manager,victoria-metrics-operator]

|

||||

|

||||

- name: postgres-operator

|

||||

releaseName: postgres-operator

|

||||

chart: cozy-postgres-operator

|

||||

namespace: cozy-postgres-operator

|

||||

dependsOn: [cilium,cert-manager]

|

||||

|

||||

- name: rabbitmq-operator

|

||||

releaseName: rabbitmq-operator

|

||||

chart: cozy-rabbitmq-operator

|

||||

namespace: cozy-rabbitmq-operator

|

||||

dependsOn: [cilium]

|

||||

|

||||

- name: redis-operator

|

||||

releaseName: redis-operator

|

||||

chart: cozy-redis-operator

|

||||

namespace: cozy-redis-operator

|

||||

dependsOn: [cilium]

|

||||

|

||||

- name: piraeus-operator

|

||||

releaseName: piraeus-operator

|

||||

chart: cozy-piraeus-operator

|

||||

namespace: cozy-linstor

|

||||

dependsOn: [cilium,cert-manager]

|

||||

|

||||

- name: linstor

|

||||

releaseName: linstor

|

||||

chart: cozy-linstor

|

||||

namespace: cozy-linstor

|

||||

privileged: true

|

||||

dependsOn: [piraeus-operator,cilium,cert-manager]

|

||||

|

||||

- name: telepresence

|

||||

releaseName: traffic-manager

|

||||

chart: cozy-telepresence

|

||||

namespace: cozy-telepresence

|

||||

dependsOn: [kubeovn]

|
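Note on `dependsOn` in the bundle above: the `telepresence` entry lists `kubeovn`, yet this bundle defines no release named `kubeovn`. Whether that is intentional cannot be told from the diff alone. A small consistency check along the following lines could flag such cases; this is a sketch under the assumption that the bundle has already been rendered and parsed into a list of dictionaries, and `find_unknown_dependencies` is a hypothetical helper, not part of Cozystack.

```python
def find_unknown_dependencies(releases):
    """Return {release name: [dependency names that match no release in the bundle]}."""
    known = {release["name"] for release in releases}
    problems = {}
    for release in releases:
        missing = [dep for dep in release.get("dependsOn", []) if dep not in known]
        if missing:
            problems[release["name"]] = missing
    return problems


# Three entries trimmed from the bundle above (chart and releaseName omitted).
releases = [
    {"name": "cilium", "namespace": "cozy-cilium", "dependsOn": []},
    {"name": "fluxcd", "namespace": "cozy-fluxcd", "dependsOn": ["cilium"]},
    {"name": "telepresence", "namespace": "cozy-telepresence", "dependsOn": ["kubeovn"]},
]

print(find_unknown_dependencies(releases))
```

An empty result means every `dependsOn` name resolves to a release in the same bundle.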

||||

177

packages/core/platform/bundles/full-paas.yaml

Normal file

@@ -0,0 +1,177 @@

|

||||

{{- $cozyConfig := lookup "v1" "ConfigMap" "cozy-system" "cozystack" }}

|

||||

|

||||

releases:

|

||||

- name: cilium

|

||||

releaseName: cilium

|

||||

chart: cozy-cilium

|

||||

namespace: cozy-cilium

|

||||

privileged: true

|

||||

dependsOn: []

|

||||

|

||||

- name: kubeovn

|

||||

releaseName: kubeovn

|

||||

chart: cozy-kubeovn

|

||||

namespace: cozy-kubeovn

|

||||

privileged: true

|

||||

dependsOn: [cilium]

|

||||

values:

|

||||

cozystack:

|

||||

nodesHash: {{ include "cozystack.master-node-ips" . | sha256sum }}

|

||||

kube-ovn:

|

||||

ipv4:

|

||||

POD_CIDR: "{{ index $cozyConfig.data "ipv4-pod-cidr" }}"

|

||||

POD_GATEWAY: "{{ index $cozyConfig.data "ipv4-pod-gateway" }}"

|

||||

SVC_CIDR: "{{ index $cozyConfig.data "ipv4-svc-cidr" }}"

|

||||

JOIN_CIDR: "{{ index $cozyConfig.data "ipv4-join-cidr" }}"

|

||||

|

||||

- name: fluxcd

|

||||

releaseName: fluxcd

|

||||

chart: cozy-fluxcd

|

||||

namespace: cozy-fluxcd

|

||||

dependsOn: [cilium,kubeovn]

|

||||

|

||||

- name: cert-manager

|

||||

releaseName: cert-manager

|

||||

chart: cozy-cert-manager

|

||||

namespace: cozy-cert-manager

|

||||

dependsOn: [cilium,kubeovn]

|

||||

|

||||

- name: cert-manager-issuers

|

||||

releaseName: cert-manager-issuers

|

||||

chart: cozy-cert-manager-issuers

|

||||

namespace: cozy-cert-manager

|

||||

dependsOn: [cilium,kubeovn,cert-manager]

|

||||

|

||||

- name: victoria-metrics-operator

|

||||

releaseName: victoria-metrics-operator

|

||||

chart: cozy-victoria-metrics-operator

|

||||

namespace: cozy-victoria-metrics-operator

|

||||

dependsOn: [cilium,kubeovn,cert-manager]

|

||||

|

||||

- name: monitoring

|

||||

releaseName: monitoring

|

||||

chart: cozy-monitoring

|

||||

namespace: cozy-monitoring

|

||||

privileged: true

|

||||

dependsOn: [cilium,kubeovn,victoria-metrics-operator]

|

||||

|

||||

- name: kubevirt-operator

|

||||

releaseName: kubevirt-operator

|

||||

chart: cozy-kubevirt-operator

|

||||

namespace: cozy-kubevirt

|

||||

dependsOn: [cilium,kubeovn]

|

||||

|

||||

- name: kubevirt

|

||||

releaseName: kubevirt

|

||||

chart: cozy-kubevirt

|

||||

namespace: cozy-kubevirt

|

||||

privileged: true

|

||||

dependsOn: [cilium,kubeovn,kubevirt-operator]

|

||||

|

||||

- name: kubevirt-cdi-operator

|

||||

releaseName: kubevirt-cdi-operator

|

||||

chart: cozy-kubevirt-cdi-operator

|

||||

namespace: cozy-kubevirt-cdi

|

||||

dependsOn: [cilium,kubeovn]

|

||||

|

||||

- name: kubevirt-cdi

|

||||

releaseName: kubevirt-cdi

|

||||

chart: cozy-kubevirt-cdi

|

||||

namespace: cozy-kubevirt-cdi

|

||||

dependsOn: [cilium,kubeovn,kubevirt-cdi-operator]

|

||||

|

||||

- name: metallb

|

||||

releaseName: metallb

|

||||

chart: cozy-metallb

|

||||

namespace: cozy-metallb

|

||||

privileged: true

|

||||

dependsOn: [cilium,kubeovn]

|

||||

|

||||

- name: grafana-operator

|

||||

releaseName: grafana-operator

|

||||

chart: cozy-grafana-operator

|

||||

namespace: cozy-grafana-operator

|

||||

dependsOn: [cilium,kubeovn]

|

||||

|

||||

- name: mariadb-operator

|

||||

releaseName: mariadb-operator

|

||||

chart: cozy-mariadb-operator

|

||||

namespace: cozy-mariadb-operator

|

||||

dependsOn: [cilium,kubeovn,cert-manager,victoria-metrics-operator]

|

||||

|

||||

- name: postgres-operator

|

||||

releaseName: postgres-operator

|

||||

chart: cozy-postgres-operator

|

||||

namespace: cozy-postgres-operator

|

||||

dependsOn: [cilium,kubeovn,cert-manager]

|

||||

|

||||

- name: rabbitmq-operator

|

||||

releaseName: rabbitmq-operator

|

||||

chart: cozy-rabbitmq-operator

|

||||

namespace: cozy-rabbitmq-operator

|

||||

dependsOn: [cilium,kubeovn]

|

||||

|

||||

- name: redis-operator

|

||||

releaseName: redis-operator

|

||||

chart: cozy-redis-operator

|

||||

namespace: cozy-redis-operator

|

||||

dependsOn: [cilium,kubeovn]

|

||||

|

||||

- name: piraeus-operator

|

||||

releaseName: piraeus-operator

|

||||

chart: cozy-piraeus-operator

|

||||

namespace: cozy-linstor

|

||||

dependsOn: [cilium,kubeovn,cert-manager]

|

||||

|

||||

- name: linstor

|

||||

releaseName: linstor

|

||||

chart: cozy-linstor

|

||||

namespace: cozy-linstor

|

||||

privileged: true

|

||||

dependsOn: [piraeus-operator,cilium,kubeovn,cert-manager]

|

||||

|

||||

- name: telepresence

|

||||

releaseName: traffic-manager

|

||||

chart: cozy-telepresence

|

||||

namespace: cozy-telepresence

|

||||

dependsOn: [cilium,kubeovn]

|

||||

|

||||

- name: dashboard

|

||||

releaseName: dashboard

|

||||

chart: cozy-dashboard

|

||||

namespace: cozy-dashboard

|

||||

dependsOn: [cilium,kubeovn]

|

||||

{{- if .Capabilities.APIVersions.Has "source.toolkit.fluxcd.io/v1beta2" }}

|

||||

{{- with (lookup "source.toolkit.fluxcd.io/v1beta2" "HelmRepository" "cozy-public" "").items }}

|

||||

values:

|

||||

kubeapps:

|

||||

redis:

|

||||

master:

|

||||

podAnnotations:

|

||||

{{- range $index, $repo := . }}

|

||||

{{- with (($repo.status).artifact).revision }}

|

||||

repository.cozystack.io/{{ $repo.metadata.name }}: {{ quote . }}

|

||||

{{- end }}

|

||||

{{- end }}

|

||||

{{- end }}

|

||||

{{- end }}

|

||||

|

||||

- name: kamaji

|

||||

releaseName: kamaji

|

||||

chart: cozy-kamaji

|

||||

namespace: cozy-kamaji

|

||||

dependsOn: [cilium,kubeovn,cert-manager]

|

||||

|

||||

- name: capi-operator

|

||||

releaseName: capi-operator

|

||||

chart: cozy-capi-operator

|

||||

namespace: cozy-cluster-api

|

||||

privileged: true

|

||||

dependsOn: [cilium,kubeovn,cert-manager]

|

||||

|

||||

- name: capi-providers

|

||||

releaseName: capi-providers

|

||||

chart: cozy-capi-providers

|

||||

namespace: cozy-cluster-api

|

||||

privileged: true

|

||||

dependsOn: [cilium,kubeovn,capi-operator]

|

||||

69

packages/core/platform/bundles/hosted-distro.yaml

Normal file

@@ -0,0 +1,69 @@

|

||||

{{- $cozyConfig := lookup "v1" "ConfigMap" "cozy-system" "cozystack" }}

|

||||

|

||||

releases:

|

||||

- name: fluxcd

|

||||

releaseName: fluxcd

|

||||

chart: cozy-fluxcd

|

||||

namespace: cozy-fluxcd

|

||||

dependsOn: []

|

||||

|

||||

- name: cert-manager

|

||||

releaseName: cert-manager

|

||||

chart: cozy-cert-manager

|

||||

namespace: cozy-cert-manager

|

||||

dependsOn: []

|

||||

|

||||

- name: cert-manager-issuers

|

||||

releaseName: cert-manager-issuers

|

||||

chart: cozy-cert-manager-issuers

|

||||

namespace: cozy-cert-manager

|

||||

dependsOn: [cert-manager]

|

||||

|

||||

- name: victoria-metrics-operator

|

||||

releaseName: victoria-metrics-operator

|

||||

chart: cozy-victoria-metrics-operator

|

||||

namespace: cozy-victoria-metrics-operator

|

||||

dependsOn: [cert-manager]

|

||||

|

||||

- name: monitoring

|

||||

releaseName: monitoring

|

||||

chart: cozy-monitoring

|

||||

namespace: cozy-monitoring

|

||||

privileged: true

|

||||

dependsOn: [victoria-metrics-operator]

|

||||

|

||||

- name: grafana-operator

|

||||

releaseName: grafana-operator

|

||||

chart: cozy-grafana-operator

|

||||

namespace: cozy-grafana-operator

|

||||

dependsOn: []

|

||||

|

||||

- name: mariadb-operator

|

||||

releaseName: mariadb-operator

|

||||

chart: cozy-mariadb-operator

|

||||

namespace: cozy-mariadb-operator

|

||||

dependsOn: [victoria-metrics-operator]

|

||||

|

||||

- name: postgres-operator

|

||||

releaseName: postgres-operator

|

||||

chart: cozy-postgres-operator

|

||||

namespace: cozy-postgres-operator

|

||||

dependsOn: [cert-manager]

|

||||

|

||||

- name: rabbitmq-operator

|

||||

releaseName: rabbitmq-operator

|

||||

chart: cozy-rabbitmq-operator

|

||||

namespace: cozy-rabbitmq-operator

|

||||

dependsOn: []

|

||||

|

||||

- name: redis-operator

|

||||

releaseName: redis-operator

|

||||

chart: cozy-redis-operator

|

||||

namespace: cozy-redis-operator

|

||||

dependsOn: []

|

||||

|

||||

- name: telepresence

|

||||

releaseName: traffic-manager

|

||||

chart: cozy-telepresence

|

||||

namespace: cozy-telepresence

|

||||

dependsOn: []

|

||||

95

packages/core/platform/bundles/hosted-paas.yaml

Normal file

@@ -0,0 +1,95 @@

|

||||

{{- $cozyConfig := lookup "v1" "ConfigMap" "cozy-system" "cozystack" }}

|

||||

|

||||

releases:

|

||||

- name: fluxcd

|

||||

releaseName: fluxcd

|

||||

chart: cozy-fluxcd

|

||||

namespace: cozy-fluxcd

|

||||

dependsOn: []

|

||||

|

||||

- name: cert-manager

|

||||

releaseName: cert-manager

|

||||

chart: cozy-cert-manager

|

||||

namespace: cozy-cert-manager

|

||||

dependsOn: []

|

||||

|

||||

- name: cert-manager-issuers

|

||||

releaseName: cert-manager-issuers

|

||||

chart: cozy-cert-manager-issuers

|

||||

namespace: cozy-cert-manager

|

||||

dependsOn: [cert-manager]

|

||||

|

||||

- name: victoria-metrics-operator

|

||||

releaseName: victoria-metrics-operator

|

||||

chart: cozy-victoria-metrics-operator

|

||||

namespace: cozy-victoria-metrics-operator

|

||||

dependsOn: [cert-manager]

|

||||

|

||||

- name: monitoring

|

||||

releaseName: monitoring

|

||||

chart: cozy-monitoring

|

||||

namespace: cozy-monitoring

|

||||

privileged: true

|

||||

dependsOn: [victoria-metrics-operator]

|

||||

|

||||

- name: grafana-operator

|

||||

releaseName: grafana-operator

|

||||

chart: cozy-grafana-operator

|

||||

namespace: cozy-grafana-operator

|

||||

dependsOn: []

|

||||

|

||||

- name: mariadb-operator

|

||||

releaseName: mariadb-operator

|

||||

chart: cozy-mariadb-operator

|

||||

namespace: cozy-mariadb-operator

|

||||

dependsOn: [cert-manager,victoria-metrics-operator]

|

||||

|

||||

- name: postgres-operator

|

||||

releaseName: postgres-operator

|

||||

chart: cozy-postgres-operator

|

||||

namespace: cozy-postgres-operator

|

||||

dependsOn: [cert-manager]

|

||||

|

||||

- name: rabbitmq-operator

|

||||

releaseName: rabbitmq-operator

|

||||

chart: cozy-rabbitmq-operator

|

||||

namespace: cozy-rabbitmq-operator

|

||||

dependsOn: []

|

||||

|

||||

- name: redis-operator

|

||||

releaseName: redis-operator

|

||||

chart: cozy-redis-operator

|

||||

namespace: cozy-redis-operator

|

||||

dependsOn: []

|

||||

|

||||

- name: piraeus-operator

|

||||

releaseName: piraeus-operator

|

||||

chart: cozy-piraeus-operator

|

||||

namespace: cozy-linstor

|

||||

dependsOn: [cert-manager]

|

||||

|

||||

- name: telepresence

|

||||

releaseName: traffic-manager

|

||||

chart: cozy-telepresence

|

||||

namespace: cozy-telepresence

|

||||

dependsOn: []

|

||||

|

||||

- name: dashboard

|

||||

releaseName: dashboard

|

||||

chart: cozy-dashboard

|

||||

namespace: cozy-dashboard

|

||||

dependsOn: []

|

||||

{{- if .Capabilities.APIVersions.Has "source.toolkit.fluxcd.io/v1beta2" }}

|

||||

{{- with (lookup "source.toolkit.fluxcd.io/v1beta2" "HelmRepository" "cozy-public" "").items }}

|

||||

values:

|

||||

kubeapps:

|

||||

redis:

|

||||

master:

|

||||

podAnnotations:

|

||||

{{- range $index, $repo := . }}

|

||||

{{- with (($repo.status).artifact).revision }}

|

||||

repository.cozystack.io/{{ $repo.metadata.name }}: {{ quote . }}

|

||||

{{- end }}

|

||||

{{- end }}

|

||||

{{- end }}

|

||||

{{- end }}

|
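Note on how bundle files like the four above are consumed: the platform template in this change set reads `bundle-name` and `bundle-disable` from the `cozystack` ConfigMap, builds a name-to-namespace map over all releases, skips releases listed in `bundle-disable`, and merges per-release values with an optional `values-<name>` ConfigMap entry. The Python sketch below models that flow under stated assumptions: `plan_releases` and `deep_merge` are hypothetical names, overrides are passed as already-parsed dictionaries rather than YAML text, and the precedence (ConfigMap values win over bundle values) follows the usual Sprig `merge` behavior where the first argument takes priority.

```python
import copy


def deep_merge(dst, src):
    """Recursively fill `dst` with keys from `src`; values already in `dst` win."""
    out = copy.deepcopy(dst)
    for key, value in src.items():
        if key in out and isinstance(out[key], dict) and isinstance(value, dict):
            out[key] = deep_merge(out[key], value)
        elif key not in out:
            out[key] = copy.deepcopy(value)
    return out


def plan_releases(bundle, disabled_csv="", overrides=None):
    """Return the releases that would be rendered, with resolved dependencies."""
    overrides = overrides or {}
    disabled = disabled_csv.split(",")
    # The namespace map covers every release, including disabled ones.
    namespaces = {r["name"]: r["namespace"] for r in bundle["releases"]}
    planned = []
    for r in bundle["releases"]:
        if r["name"] in disabled:
            continue
        planned.append({
            "name": r["name"],
            "namespace": r["namespace"],
            "releaseName": r.get("releaseName") or r["name"],
            "chart": r["chart"],
            # Override values take precedence over values from the bundle.
            "values": deep_merge(overrides.get(r["name"], {}), r.get("values", {})),
            "dependsOn": [
                {"name": dep, "namespace": namespaces.get(dep)}
                for dep in r.get("dependsOn", [])
            ],
        })
    return planned


# Entries trimmed from the hosted bundle; the "replicas" override is made up.
bundle = {"releases": [
    {"name": "fluxcd", "chart": "cozy-fluxcd", "namespace": "cozy-fluxcd", "dependsOn": []},
    {"name": "cert-manager", "chart": "cozy-cert-manager", "namespace": "cozy-cert-manager", "dependsOn": []},
    {"name": "cert-manager-issuers", "chart": "cozy-cert-manager-issuers",
     "namespace": "cozy-cert-manager", "dependsOn": ["cert-manager"]},
    {"name": "telepresence", "releaseName": "traffic-manager", "chart": "cozy-telepresence",
     "namespace": "cozy-telepresence", "dependsOn": []},
]}

planned = plan_releases(bundle, disabled_csv="telepresence", overrides={"fluxcd": {"replicas": 2}})
for release in planned:
    print(release["name"], release["dependsOn"])
```

Keeping disabled releases in the namespace map means a remaining release can still name a disabled one in `dependsOn` and get a namespace for it; whether the dependency can then ever become ready is a separate question that this sketch does not address.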

||||

@@ -1,7 +1,7 @@

|

||||

{{/*

|

||||

Get IP-addresses of master nodes

|

||||

*/}}

|

||||

{{- define "master.nodeIPs" -}}

|

||||

{{- define "cozystack.master-node-ips" -}}

|

||||

{{- $nodes := lookup "v1" "Node" "" "" -}}

|

||||

{{- $ips := list -}}

|

||||

{{- range $node := $nodes.items -}}

|

||||

|

||||

@@ -1,38 +1,27 @@

|

||||

apiVersion: helm.toolkit.fluxcd.io/v2beta1

|

||||

kind: HelmRelease

|

||||

metadata:

|

||||

name: cilium

|

||||

namespace: cozy-cilium

|

||||

labels:

|

||||

cozystack.io/repository: system

|

||||

spec:

|

||||

interval: 1m

|

||||

releaseName: cilium

|

||||

install:

|

||||

remediation:

|

||||

retries: -1

|

||||

upgrade:

|

||||

remediation:

|

||||

retries: -1

|

||||

chart:

|

||||

spec:

|

||||

chart: cozy-cilium

|

||||

reconcileStrategy: Revision

|

||||

sourceRef:

|

||||

kind: HelmRepository

|

||||

name: cozystack-system

|

||||

namespace: cozy-system

|

||||

{{- $cozyConfig := lookup "v1" "ConfigMap" "cozy-system" "cozystack" }}

|

||||

{{- $bundleName := index $cozyConfig.data "bundle-name" }}

|

||||

{{- $bundle := tpl (.Files.Get (printf "bundles/%s.yaml" $bundleName)) . | fromYaml }}

|

||||

{{- $dependencyNamespaces := dict }}

|

||||

{{- $disabledComponents := splitList "," ((index $cozyConfig.data "bundle-disable") | default "") }}

|

||||

|

||||

{{/* collect dependency namespaces from releases */}}

|

||||

{{- range $x := $bundle.releases }}

|

||||

{{- $_ := set $dependencyNamespaces $x.name $x.namespace }}

|

||||

{{- end }}

|

||||

|

||||

{{- range $x := $bundle.releases }}

|

||||

{{- if not (has $x.name $disabledComponents) }}

|

||||

---

|

||||

apiVersion: helm.toolkit.fluxcd.io/v2beta1

|

||||

apiVersion: helm.toolkit.fluxcd.io/v2beta2

|

||||

kind: HelmRelease

|

||||

metadata:

|

||||

name: kubeovn

|

||||

namespace: cozy-kubeovn

|

||||

name: {{ $x.name }}

|

||||

namespace: {{ $x.namespace }}

|

||||

labels:

|

||||

cozystack.io/repository: system

|

||||

spec:

|

||||

interval: 1m

|

||||

releaseName: kubeovn

|

||||

releaseName: {{ $x.releaseName | default $x.name }}

|

||||

install:

|

||||

remediation:

|

||||

retries: -1

|

||||

@@ -41,704 +30,31 @@ spec:

|

||||

retries: -1

|

||||

chart:

|

||||

spec:

|

||||

chart: cozy-kubeovn

|

||||

chart: {{ $x.chart }}

|

||||

reconcileStrategy: Revision

|

||||

sourceRef:

|

||||

kind: HelmRepository

|

||||

name: cozystack-system

|

||||

namespace: cozy-system

|

||||

{{- $values := dict }}

|

||||

{{- with $x.values }}

|

||||

{{- $values = merge . $values }}

|

||||

{{- end }}

|

||||

{{- with index $cozyConfig.data (printf "values-%s" $x.name) }}

|

||||

{{- $values = merge (fromYaml .) $values }}

|

||||

{{- end }}

|

||||

{{- with $values }}

|

||||

values:

|

||||

cozystack:

|

||||

configHash: {{ index (lookup "v1" "ConfigMap" "cozy-system" "cozystack") "data" | toJson | sha256sum }}

|

||||

nodesHash: {{ include "master.nodeIPs" . | sha256sum }}

|

||||

{{- toYaml . | nindent 4}}

|

||||

{{- end }}

|

||||

{{- with $x.dependsOn }}

|

||||

dependsOn:

|

||||

- name: cilium

|

||||

namespace: cozy-cilium

|

||||

---

|

||||

apiVersion: helm.toolkit.fluxcd.io/v2beta1

|

||||

kind: HelmRelease

|

||||

metadata:

|

||||

name: cozy-fluxcd

|

||||

namespace: cozy-fluxcd

|

||||

labels:

|

||||

cozystack.io/repository: system

|

||||

spec:

|

||||

interval: 1m

|

||||

releaseName: fluxcd

|

||||

install:

|

||||

remediation:

|

||||

retries: -1

|

||||

upgrade:

|

||||

remediation:

|

||||

retries: -1

|

||||

chart:

|

||||

spec:

|

||||

chart: cozy-fluxcd

|

||||

reconcileStrategy: Revision

|

||||

sourceRef:

|

||||

kind: HelmRepository

|

||||

name: cozystack-system

|

||||

namespace: cozy-system

|

||||

dependsOn:

|

||||

- name: cilium

|

||||

namespace: cozy-cilium

|

||||

- name: kubeovn

|

||||

namespace: cozy-kubeovn

|

||||

---

|

||||

apiVersion: helm.toolkit.fluxcd.io/v2beta1

|

||||

kind: HelmRelease

|

||||

metadata:

|

||||

name: cert-manager

|

||||

namespace: cozy-cert-manager

|

||||

labels:

|

||||

cozystack.io/repository: system

|

||||

spec:

|

||||

interval: 1m

|

||||

releaseName: cert-manager

|

||||

install:

|

||||

remediation:

|

||||

retries: -1

|

||||

upgrade:

|

||||

remediation:

|

||||

retries: -1

|

||||

chart:

|

||||

spec:

|

||||