mirror of

https://github.com/outbackdingo/cozystack.git

synced 2026-01-28 18:18:41 +00:00

Compare commits

16 Commits

main...release-0.

| Author | SHA1 | Date | |
|---|---|---|---|
| | e98fa9cd72 | | |
| | 01d90bb736 | | |
| | e04cfaaa58 | | |
| | 8c86905b22 | | |
| | 84955d13ac | | |
| | 46e5044851 | | |
| | 3a3f44a427 | | |
| | 0cc35a212c | | |
| | 0bb79adec0 | | |
| | 9e89a9d3ad | | |
| | ddfb1d65e3 | | |
| | efafe16d3b | | |
| | e1b4861c8a | | |
| | 4d0bf14fc3 | | |
| | 35069ff3e9 | | |
| | b9afd69df0 | | |

2

.github/CODEOWNERS

vendored

@@ -1 +1 @@

|

||||

* @kvaps @lllamnyp @nbykov0

|

||||

* @kvaps @lllamnyp @klinch0

|

||||

|

||||

50

.github/ISSUE_TEMPLATE/bug_report.md

vendored

@@ -1,50 +0,0 @@

|

||||

---

|

||||

name: Bug report

|

||||

about: Create a report to help us improve

|

||||

labels: 'bug'

|

||||

assignees: ''

|

||||

|

||||

---

|

||||

<!--

|

||||

Thank you for submitting a bug report!

|

||||

Please fill in the fields below to help us investigate the problem.

|

||||

-->

|

||||

|

||||

**Describe the bug**

|

||||

A clear and concise description of what the bug is.

|

||||

|

||||

**Environment**

|

||||

- Cozystack version

|

||||

- Provider: on-prem, Hetzner, and so on

|

||||

|

||||

**To Reproduce**

|

||||

Steps to reproduce the behavior:

|

||||

1. Go to '...'

|

||||

2. Click on '....'

|

||||

3. Scroll down to '....'

|

||||

4. See error

|

||||

|

||||

**Expected behaviour**

|

||||

When taking the steps to reproduce, what should have happened differently?

|

||||

|

||||

**Actual behaviour**

|

||||

A clear and concise description of what happens when the bug occurs. Explain how the system currently behaves, including error messages, unexpected results, or incorrect functionality observed during execution.

|

||||

|

||||

|

||||

**Logs**

|

||||

```

|

||||

Paste any relevant logs here. Please redact tokens, passwords, private keys.

|

||||

```

|

||||

|

||||

**Screenshots**

|

||||

If applicable, add screenshots to help explain the problem.

|

||||

|

||||

**Additional context**

|

||||

Add any other context about the problem here.

|

||||

|

||||

**Checklist**

|

||||

- [ ] I have checked the documentation

|

||||

- [ ] I have searched for similar issues

|

||||

- [ ] I have included all required information

|

||||

- [ ] I have provided clear steps to reproduce

|

||||

- [ ] I have included relevant logs

|

||||

24

.github/PULL_REQUEST_TEMPLATE.md

vendored

@@ -1,24 +0,0 @@

|

||||

<!-- Thank you for making a contribution! Here are some tips for you:

|

||||

- Start the PR title with the [label] of Cozystack component:

|

||||

- For system components: [platform], [system], [linstor], [cilium], [kube-ovn], [dashboard], [cluster-api], etc.

|

||||

- For managed apps: [apps], [tenant], [kubernetes], [postgres], [virtual-machine] etc.

|

||||

- For development and maintenance: [tests], [ci], [docs], [maintenance].

|

||||

- If it's a work in progress, consider creating this PR as a draft.

|

||||

- Don't hesitate to ask for opinion and review in the community chats, even if it's still a draft.

|

||||

- Add the label `backport` if it's a bugfix that needs to be backported to a previous version.

|

||||

-->

|

||||

|

||||

## What this PR does

|

||||

|

||||

|

||||

### Release note

|

||||

|

||||

<!-- Write a release note:

|

||||

- Explain what has changed internally and for users.

|

||||

- Start with the same [label] as in the PR title

|

||||

- Follow the guidelines at https://github.com/kubernetes/community/blob/master/contributors/guide/release-notes.md.

|

||||

-->

|

||||

|

||||

```release-note

|

||||

[]

|

||||

```

|

||||

12

.github/workflows/pre-commit.yml

vendored

@@ -2,7 +2,7 @@ name: Pre-Commit Checks

|

||||

|

||||

on:

|

||||

pull_request:

|

||||

types: [opened, synchronize, reopened]

|

||||

types: [labeled, opened, synchronize, reopened]

|

||||

|

||||

concurrency:

|

||||

group: pre-commit-${{ github.workflow }}-${{ github.event.pull_request.number }}

|

||||

@@ -28,7 +28,15 @@ jobs:

|

||||

|

||||

- name: Install generate

|

||||

run: |

|

||||

curl -sSL https://github.com/cozystack/cozyvalues-gen/releases/download/v1.0.5/cozyvalues-gen-linux-amd64.tar.gz | tar -xzvf- -C /usr/local/bin/ cozyvalues-gen

|

||||

sudo apt update

|

||||

sudo apt install curl -y

|

||||

curl -fsSL https://deb.nodesource.com/setup_16.x | sudo -E bash -

|

||||

sudo apt install nodejs -y

|

||||

git clone https://github.com/bitnami/readme-generator-for-helm

|

||||

cd ./readme-generator-for-helm

|

||||

npm install

|

||||

npm install -g pkg

|

||||

pkg . -o /usr/local/bin/readme-generator

|

||||

|

||||

- name: Run pre-commit hooks

|

||||

run: |

|

||||

|

||||

95

.github/workflows/pull-requests-release.yaml

vendored

@@ -1,17 +1,100 @@

|

||||

name: "Releasing PR"

|

||||

name: Releasing PR

|

||||

|

||||

on:

|

||||

pull_request:

|

||||

types: [closed]

|

||||

paths-ignore:

|

||||

- 'docs/**/*'

|

||||

types: [labeled, opened, synchronize, reopened, closed]

|

||||

|

||||

# Cancel in‑flight runs for the same PR when a new push arrives.

|

||||

concurrency:

|

||||

group: pr-${{ github.workflow }}-${{ github.event.pull_request.number }}

|

||||

group: pull-requests-release-${{ github.workflow }}-${{ github.event.pull_request.number }}

|

||||

cancel-in-progress: true

|

||||

|

||||

jobs:

|

||||

verify:

|

||||

name: Test Release

|

||||

runs-on: [self-hosted]

|

||||

permissions:

|

||||

contents: read

|

||||

packages: write

|

||||

|

||||

if: |

|

||||

contains(github.event.pull_request.labels.*.name, 'release') &&

|

||||

github.event.action != 'closed'

|

||||

|

||||

steps:

|

||||

- name: Checkout code

|

||||

uses: actions/checkout@v4

|

||||

with:

|

||||

fetch-depth: 0

|

||||

fetch-tags: true

|

||||

|

||||

- name: Login to GitHub Container Registry

|

||||

uses: docker/login-action@v3

|

||||

with:

|

||||

username: ${{ github.repository_owner }}

|

||||

password: ${{ secrets.GITHUB_TOKEN }}

|

||||

registry: ghcr.io

|

||||

|

||||

- name: Extract tag from PR branch

|

||||

id: get_tag

|

||||

uses: actions/github-script@v7

|

||||

with:

|

||||

script: |

|

||||

const branch = context.payload.pull_request.head.ref;

|

||||

const m = branch.match(/^release-(\d+\.\d+\.\d+(?:[-\w\.]+)?)$/);

|

||||

if (!m) {

|

||||

core.setFailed(`❌ Branch '${branch}' does not match 'release-X.Y.Z[-suffix]'`);

|

||||

return;

|

||||

}

|

||||

const tag = `v${m[1]}`;

|

||||

core.setOutput('tag', tag);

|

||||

|

||||

- name: Find draft release and get asset IDs

|

||||

id: fetch_assets

|

||||

uses: actions/github-script@v7

|

||||

with:

|

||||

github-token: ${{ secrets.GH_PAT }}

|

||||

script: |

|

||||

const tag = '${{ steps.get_tag.outputs.tag }}';

|

||||

const releases = await github.rest.repos.listReleases({

|

||||

owner: context.repo.owner,

|

||||

repo: context.repo.repo,

|

||||

per_page: 100

|

||||

});

|

||||

const draft = releases.data.find(r => r.tag_name === tag && r.draft);

|

||||

if (!draft) {

|

||||

core.setFailed(`Draft release '${tag}' not found`);

|

||||

return;

|

||||

}

|

||||

const findAssetId = (name) =>

|

||||

draft.assets.find(a => a.name === name)?.id;

|

||||

const installerId = findAssetId("cozystack-installer.yaml");

|

||||

const diskId = findAssetId("nocloud-amd64.raw.xz");

|

||||

if (!installerId || !diskId) {

|

||||

core.setFailed("Missing required assets");

|

||||

return;

|

||||

}

|

||||

core.setOutput("installer_id", installerId);

|

||||

core.setOutput("disk_id", diskId);

|

||||

|

||||

- name: Download assets from GitHub API

|

||||

run: |

|

||||

mkdir -p _out/assets

|

||||

curl -sSL \

|

||||

-H "Authorization: token ${GH_PAT}" \

|

||||

-H "Accept: application/octet-stream" \

|

||||

-o _out/assets/cozystack-installer.yaml \

|

||||

"https://api.github.com/repos/${GITHUB_REPOSITORY}/releases/assets/${{ steps.fetch_assets.outputs.installer_id }}"

|

||||

curl -sSL \

|

||||

-H "Authorization: token ${GH_PAT}" \

|

||||

-H "Accept: application/octet-stream" \

|

||||

-o _out/assets/nocloud-amd64.raw.xz \

|

||||

"https://api.github.com/repos/${GITHUB_REPOSITORY}/releases/assets/${{ steps.fetch_assets.outputs.disk_id }}"

|

||||

env:

|

||||

GH_PAT: ${{ secrets.GH_PAT }}

|

||||

|

||||

- name: Run tests

|

||||

run: make test

|

||||

|

||||

finalize:

|

||||

name: Finalize Release

|

||||

runs-on: [self-hosted]

|

||||

|

||||
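The `get_tag` step in the workflow above derives a release tag from the PR branch name with the JavaScript pattern `/^release-(\d+\.\d+\.\d+(?:[-\w\.]+)?)$/`. As a rough illustration only, the same mapping can be sketched in shell. The function name `release_tag` is hypothetical and does not exist in the repository, and the POSIX class `[-[:alnum:]_.]` is an approximation of the JavaScript class `[-\w\.]`.

```shell
# Hypothetical helper: map a branch such as "release-X.Y.Z[-suffix]" to the tag "vX.Y.Z[-suffix]".
# Prints the tag on success; prints an error to stderr and returns 1 when the branch does not match.
release_tag() {
  # sed -n with the "p" flag prints only when the substitution succeeds.
  tag=$(printf '%s\n' "$1" |
    sed -nE 's/^release-([0-9]+\.[0-9]+\.[0-9]+([-[:alnum:]_.]+)?)$/v\1/p')
  if [ -z "$tag" ]; then
    echo "Branch '$1' does not match 'release-X.Y.Z[-suffix]'" >&2
    return 1
  fi
  printf '%s\n' "$tag"
}

# Example call (the branch name is illustrative):
#   release_tag "release-1.2.3-rc.1"
```

A branch that lacks the third version component, or that has no `release-` prefix, yields no tag, which mirrors the `core.setFailed` path in the workflow step.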

341

.github/workflows/pull-requests.yaml

vendored

@@ -1,17 +1,11 @@

|

||||

name: Pull Request

|

||||

|

||||

env:

|

||||

# TODO: unhardcode this

|

||||

REGISTRY: iad.ocir.io/idyksih5sir9/cozystack

|

||||

on:

|

||||

pull_request:

|

||||

types: [opened, synchronize, reopened]

|

||||

paths-ignore:

|

||||

- 'docs/**/*'

|

||||

types: [labeled, opened, synchronize, reopened]

|

||||

|

||||

# Cancel in‑flight runs for the same PR when a new push arrives.

|

||||

concurrency:

|

||||

group: pr-${{ github.workflow }}-${{ github.event.pull_request.number }}

|

||||

group: pull-requests-${{ github.workflow }}-${{ github.event.pull_request.number }}

|

||||

cancel-in-progress: true

|

||||

|

||||

jobs:

|

||||

@@ -33,322 +27,35 @@ jobs:

|

||||

fetch-depth: 0

|

||||

fetch-tags: true

|

||||

|

||||

- name: Run unit tests

|

||||

run: make unit-tests

|

||||

|

||||

- name: Set up Docker config

|

||||

run: |

|

||||

if [ -d ~/.docker ]; then

|

||||

cp -r ~/.docker "${{ runner.temp }}/.docker"

|

||||

fi

|

||||

|

||||

- name: Login to GitHub Container Registry

|

||||

if: ${{ !github.event.pull_request.head.repo.fork }}

|

||||

uses: docker/login-action@v3

|

||||

with:

|

||||

username: ${{ secrets.OCIR_USER}}

|

||||

password: ${{ secrets.OCIR_TOKEN }}

|

||||

registry: iad.ocir.io

|

||||

env:

|

||||

DOCKER_CONFIG: ${{ runner.temp }}/.docker

|

||||

username: ${{ github.repository_owner }}

|

||||

password: ${{ secrets.GITHUB_TOKEN }}

|

||||

registry: ghcr.io

|

||||

|

||||

- name: Build

|

||||

run: make build

|

||||

env:

|

||||

DOCKER_CONFIG: ${{ runner.temp }}/.docker

|

||||

|

||||

- name: Build Talos image

|

||||

run: make -C packages/core/installer talos-nocloud

|

||||

|

||||

- name: Save git diff as patch

|

||||

if: "!contains(github.event.pull_request.labels.*.name, 'release')"

|

||||

run: git diff HEAD > _out/assets/pr.patch

|

||||

|

||||

- name: Upload git diff patch

|

||||

if: "!contains(github.event.pull_request.labels.*.name, 'release')"

|

||||

- name: Upload artifacts

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

name: pr-patch

|

||||

path: _out/assets/pr.patch

|

||||

|

||||

- name: Upload installer

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

name: cozystack-installer

|

||||

path: _out/assets/cozystack-installer.yaml

|

||||

name: cozystack-artefacts

|

||||

path: |

|

||||

_out/assets/nocloud-amd64.raw.xz

|

||||

_out/assets/cozystack-installer.yaml

|

||||

|

||||

- name: Upload Talos image

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

name: talos-image

|

||||

path: _out/assets/nocloud-amd64.raw.xz

|

||||

|

||||

resolve_assets:

|

||||

name: "Resolve assets"

|

||||

runs-on: ubuntu-latest

|

||||

if: contains(github.event.pull_request.labels.*.name, 'release')

|

||||

outputs:

|

||||

installer_id: ${{ steps.fetch_assets.outputs.installer_id }}

|

||||

disk_id: ${{ steps.fetch_assets.outputs.disk_id }}

|

||||

|

||||

steps:

|

||||

- name: Checkout code

|

||||

if: contains(github.event.pull_request.labels.*.name, 'release')

|

||||

uses: actions/checkout@v4

|

||||

with:

|

||||

fetch-depth: 0

|

||||

fetch-tags: true

|

||||

|

||||

- name: Extract tag from PR branch (release PR)

|

||||

if: contains(github.event.pull_request.labels.*.name, 'release')

|

||||

id: get_tag

|

||||

uses: actions/github-script@v7

|

||||

with:

|

||||

script: |

|

||||

const branch = context.payload.pull_request.head.ref;

|

||||

const m = branch.match(/^release-(\d+\.\d+\.\d+(?:[-\w\.]+)?)$/);

|

||||

if (!m) {

|

||||

core.setFailed(`❌ Branch '${branch}' does not match 'release-X.Y.Z[-suffix]'`);

|

||||

return;

|

||||

}

|

||||

core.setOutput('tag', `v${m[1]}`);

|

||||

|

||||

- name: Find draft release & asset IDs (release PR)

|

||||

if: contains(github.event.pull_request.labels.*.name, 'release')

|

||||

id: fetch_assets

|

||||

uses: actions/github-script@v7

|

||||

with:

|

||||

github-token: ${{ secrets.GH_PAT }}

|

||||

script: |

|

||||

const tag = '${{ steps.get_tag.outputs.tag }}';

|

||||

const releases = await github.rest.repos.listReleases({

|

||||

owner: context.repo.owner,

|

||||

repo: context.repo.repo,

|

||||

per_page: 100

|

||||

});

|

||||

const draft = releases.data.find(r => r.tag_name === tag && r.draft);

|

||||

if (!draft) {

|

||||

core.setFailed(`Draft release '${tag}' not found`);

|

||||

return;

|

||||

}

|

||||

const find = (n) => draft.assets.find(a => a.name === n)?.id;

|

||||

const installerId = find('cozystack-installer.yaml');

|

||||

const diskId = find('nocloud-amd64.raw.xz');

|

||||

if (!installerId || !diskId) {

|

||||

core.setFailed('Required assets missing in draft release');

|

||||

return;

|

||||

}

|

||||

core.setOutput('installer_id', installerId);

|

||||

core.setOutput('disk_id', diskId);

|

||||

|

||||

|

||||

prepare_env:

|

||||

name: "Prepare environment"

|

||||

test:

|

||||

name: Test

|

||||

runs-on: [self-hosted]

|

||||

permissions:

|

||||

contents: read

|

||||

packages: read

|

||||

needs: ["build", "resolve_assets"]

|

||||

if: ${{ always() && (needs.build.result == 'success' || needs.resolve_assets.result == 'success') }}

|

||||

needs: build

|

||||

|

||||

steps:

|

||||

# ▸ Checkout and prepare the codebase

|

||||

- name: Checkout code

|

||||

uses: actions/checkout@v4

|

||||

|

||||

# ▸ Regular PR path – download artefacts produced by the *build* job

|

||||

- name: "Download Talos image (regular PR)"

|

||||

if: "!contains(github.event.pull_request.labels.*.name, 'release')"

|

||||

uses: actions/download-artifact@v4

|

||||

with:

|

||||

name: talos-image

|

||||

path: _out/assets

|

||||

|

||||

- name: Download PR patch

|

||||

if: "!contains(github.event.pull_request.labels.*.name, 'release')"

|

||||

uses: actions/download-artifact@v4

|

||||

with:

|

||||

name: pr-patch

|

||||

path: _out/assets

|

||||

|

||||

- name: Apply patch

|

||||

if: "!contains(github.event.pull_request.labels.*.name, 'release')"

|

||||

run: |

|

||||

git apply _out/assets/pr.patch

|

||||

|

||||

# ▸ Release PR path – fetch artefacts from the corresponding draft release

|

||||

- name: Download assets from draft release (release PR)

|

||||

if: contains(github.event.pull_request.labels.*.name, 'release')

|

||||

run: |

|

||||

mkdir -p _out/assets

|

||||

curl -sSL -H "Authorization: token ${GH_PAT}" -H "Accept: application/octet-stream" \

|

||||

-o _out/assets/nocloud-amd64.raw.xz \

|

||||

"https://api.github.com/repos/${GITHUB_REPOSITORY}/releases/assets/${{ needs.resolve_assets.outputs.disk_id }}"

|

||||

env:

|

||||

GH_PAT: ${{ secrets.GH_PAT }}

|

||||

|

||||

- name: Set sandbox ID

|

||||

run: echo "SANDBOX_NAME=cozy-e2e-sandbox-$(echo "${GITHUB_REPOSITORY}:${GITHUB_WORKFLOW}:${GITHUB_REF}" | sha256sum | cut -c1-10)" >> $GITHUB_ENV

|

||||

|

||||

# ▸ Start actual job steps

|

||||

- name: Prepare workspace

|

||||

run: |

|

||||

rm -rf /tmp/$SANDBOX_NAME

|

||||

cp -r ${{ github.workspace }} /tmp/$SANDBOX_NAME

|

||||

|

||||

- name: Prepare environment

|

||||

run: |

|

||||

cd /tmp/$SANDBOX_NAME

|

||||

attempt=0

|

||||

until make SANDBOX_NAME=$SANDBOX_NAME prepare-env; do

|

||||

attempt=$((attempt + 1))

|

||||

if [ $attempt -ge 3 ]; then

|

||||

echo "❌ Attempt $attempt failed, exiting..."

|

||||

exit 1

|

||||

fi

|

||||

echo "❌ Attempt $attempt failed, retrying..."

|

||||

done

|

||||

echo "✅ The task completed successfully after $attempt attempts"

|

||||

|

||||

install_cozystack:

|

||||

name: "Install Cozystack"

|

||||

runs-on: [self-hosted]

|

||||

permissions:

|

||||

contents: read

|

||||

packages: read

|

||||

needs: ["prepare_env", "resolve_assets"]

|

||||

if: ${{ always() && needs.prepare_env.result == 'success' }}

|

||||

|

||||

steps:

|

||||

- name: Prepare _out/assets directory

|

||||

run: mkdir -p _out/assets

|

||||

|

||||

# ▸ Regular PR path – download artefacts produced by the *build* job

|

||||

- name: "Download installer (regular PR)"

|

||||

if: "!contains(github.event.pull_request.labels.*.name, 'release')"

|

||||

uses: actions/download-artifact@v4

|

||||

with:

|

||||

name: cozystack-installer

|

||||

path: _out/assets

|

||||

|

||||

# ▸ Release PR path – fetch artefacts from the corresponding draft release

|

||||

- name: Download assets from draft release (release PR)

|

||||

if: contains(github.event.pull_request.labels.*.name, 'release')

|

||||

run: |

|

||||

mkdir -p _out/assets

|

||||

curl -sSL -H "Authorization: token ${GH_PAT}" -H "Accept: application/octet-stream" \

|

||||

-o _out/assets/cozystack-installer.yaml \

|

||||

"https://api.github.com/repos/${GITHUB_REPOSITORY}/releases/assets/${{ needs.resolve_assets.outputs.installer_id }}"

|

||||

env:

|

||||

GH_PAT: ${{ secrets.GH_PAT }}

|

||||

|

||||

# ▸ Start actual job steps

|

||||

- name: Set sandbox ID

|

||||

run: echo "SANDBOX_NAME=cozy-e2e-sandbox-$(echo "${GITHUB_REPOSITORY}:${GITHUB_WORKFLOW}:${GITHUB_REF}" | sha256sum | cut -c1-10)" >> $GITHUB_ENV

|

||||

|

||||

- name: Sync _out/assets directory

|

||||

run: |

|

||||

mkdir -p /tmp/$SANDBOX_NAME/_out/assets

|

||||

mv _out/assets/* /tmp/$SANDBOX_NAME/_out/assets/

|

||||

|

||||

- name: Install Cozystack into sandbox

|

||||

run: |

|

||||

cd /tmp/$SANDBOX_NAME

|

||||

attempt=0

|

||||

until make -C packages/core/testing SANDBOX_NAME=$SANDBOX_NAME install-cozystack; do

|

||||

attempt=$((attempt + 1))

|

||||

if [ $attempt -ge 3 ]; then

|

||||

echo "❌ Attempt $attempt failed, exiting..."

|

||||

exit 1

|

||||

fi

|

||||

echo "❌ Attempt $attempt failed, retrying..."

|

||||

done

|

||||

echo "✅ The task completed successfully after $attempt attempts."

|

||||

|

||||

- name: Run OpenAPI tests

|

||||

run: |

|

||||

cd /tmp/$SANDBOX_NAME

|

||||

make -C packages/core/testing SANDBOX_NAME=$SANDBOX_NAME test-openapi

|

||||

|

||||

detect_test_matrix:

|

||||

name: "Detect e2e test matrix"

|

||||

runs-on: ubuntu-latest

|

||||

outputs:

|

||||

matrix: ${{ steps.set.outputs.matrix }}

|

||||

|

||||

steps:

|

||||

- uses: actions/checkout@v4

|

||||

- id: set

|

||||

run: |

|

||||

apps=$(ls hack/e2e-apps/*.bats | cut -f3 -d/ | cut -f1 -d. | jq -R | jq -cs)

|

||||

echo "matrix={\"app\":$apps}" >> "$GITHUB_OUTPUT"

|

||||

|

||||

test_apps:

|

||||

strategy:

|

||||

fail-fast: false

|

||||

matrix: ${{ fromJson(needs.detect_test_matrix.outputs.matrix) }}

|

||||

name: Test ${{ matrix.app }}

|

||||

runs-on: [self-hosted]

|

||||

needs: [install_cozystack,detect_test_matrix]

|

||||

if: ${{ always() && (needs.install_cozystack.result == 'success' && needs.detect_test_matrix.result == 'success') }}

|

||||

|

||||

steps:

|

||||

- name: Set sandbox ID

|

||||

run: echo "SANDBOX_NAME=cozy-e2e-sandbox-$(echo "${GITHUB_REPOSITORY}:${GITHUB_WORKFLOW}:${GITHUB_REF}" | sha256sum | cut -c1-10)" >> $GITHUB_ENV

|

||||

|

||||

- name: E2E Apps

|

||||

run: |

|

||||

cd /tmp/$SANDBOX_NAME

|

||||

attempt=0

|

||||

until make -C packages/core/testing SANDBOX_NAME=$SANDBOX_NAME test-apps-${{ matrix.app }}; do

|

||||

attempt=$((attempt + 1))

|

||||

if [ $attempt -ge 3 ]; then

|

||||

echo "❌ Attempt $attempt failed, exiting..."

|

||||

exit 1

|

||||

fi

|

||||

echo "❌ Attempt $attempt failed, retrying..."

|

||||

done

|

||||

echo "✅ The task completed successfully after $attempt attempts"

|

||||

|

||||

collect_debug_information:

|

||||

name: Collect debug information

|

||||

runs-on: [self-hosted]

|

||||

needs: [test_apps]

|

||||

if: ${{ always() }}

|

||||

steps:

|

||||

- name: Checkout code

|

||||

uses: actions/checkout@v4

|

||||

|

||||

- name: Set sandbox ID

|

||||

run: echo "SANDBOX_NAME=cozy-e2e-sandbox-$(echo "${GITHUB_REPOSITORY}:${GITHUB_WORKFLOW}:${GITHUB_REF}" | sha256sum | cut -c1-10)" >> $GITHUB_ENV

|

||||

|

||||

- name: Collect report

|

||||

run: |

|

||||

cd /tmp/$SANDBOX_NAME

|

||||

make -C packages/core/testing SANDBOX_NAME=$SANDBOX_NAME collect-report

|

||||

|

||||

- name: Upload cozyreport.tgz

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

name: cozyreport

|

||||

path: /tmp/${{ env.SANDBOX_NAME }}/_out/cozyreport.tgz

|

||||

|

||||

- name: Collect images list

|

||||

run: |

|

||||

cd /tmp/$SANDBOX_NAME

|

||||

make -C packages/core/testing SANDBOX_NAME=$SANDBOX_NAME collect-images

|

||||

|

||||

- name: Upload image list

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

name: image-list

|

||||

path: /tmp/${{ env.SANDBOX_NAME }}/_out/images.txt

|

||||

|

||||

cleanup:

|

||||

name: Tear down environment

|

||||

runs-on: [self-hosted]

|

||||

needs: [collect_debug_information]

|

||||

if: ${{ always() && needs.test_apps.result == 'success' }}

|

||||

# Never run when the PR carries the "release" label.

|

||||

if: |

|

||||

!contains(github.event.pull_request.labels.*.name, 'release')

|

||||

|

||||

steps:

|

||||

- name: Checkout code

|

||||

@@ -357,13 +64,11 @@ jobs:

|

||||

fetch-depth: 0

|

||||

fetch-tags: true

|

||||

|

||||

- name: Set sandbox ID

|

||||

run: echo "SANDBOX_NAME=cozy-e2e-sandbox-$(echo "${GITHUB_REPOSITORY}:${GITHUB_WORKFLOW}:${GITHUB_REF}" | sha256sum | cut -c1-10)" >> $GITHUB_ENV

|

||||

|

||||

- name: Tear down sandbox

|

||||

run: make -C packages/core/testing SANDBOX_NAME=$SANDBOX_NAME delete

|

||||

|

||||

- name: Remove workspace

|

||||

run: rm -rf /tmp/$SANDBOX_NAME

|

||||

|

||||

- name: Download artifacts

|

||||

uses: actions/download-artifact@v4

|

||||

with:

|

||||

name: cozystack-artefacts

|

||||

path: _out/assets/

|

||||

|

||||

- name: Test

|

||||

run: make test

|

||||

|

||||
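Two shell idioms recur across the jobs in this workflow: the sandbox name derived from a hash of repository, workflow, and ref, and the `until` loop that retries a `make` target up to three times. A minimal sketch of both as reusable functions follows. `sandbox_name` and `retry` are hypothetical names, not helpers that exist in the repository, and `sha256sum` is assumed to be available (GNU coreutils).

```shell
# Hypothetical helper: build the sandbox name from repository, workflow and ref,
# using the first 10 hex characters of the SHA-256 of "repo:workflow:ref".
sandbox_name() {
  printf 'cozy-e2e-sandbox-%s\n' "$(echo "$1:$2:$3" | sha256sum | cut -c1-10)"
}

# Hypothetical helper: run a command until it succeeds, giving up after
# max failed attempts. Usage: retry <max> <command> [args...]
retry() {
  max=$1
  shift
  attempt=0
  until "$@"; do
    attempt=$((attempt + 1))
    if [ "$attempt" -ge "$max" ]; then
      echo "Attempt $attempt failed, exiting..." >&2
      return 1
    fi
    echo "Attempt $attempt failed, retrying..." >&2
  done
  # attempt counts failures only, so a first-try success reports 0.
  echo "Succeeded after $attempt failed attempt(s)"
}

# Example calls (illustrative values only):
#   SANDBOX_NAME=$(sandbox_name "$GITHUB_REPOSITORY" "$GITHUB_WORKFLOW" "$GITHUB_REF")
#   retry 3 make SANDBOX_NAME="$SANDBOX_NAME" prepare-env
```

Because the name depends only on the three inputs, every job of the same workflow run on the same ref computes the same sandbox name, which is presumably why each job in the diff recomputes it rather than passing it as an output.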

41

.github/workflows/tags.yaml

vendored

@@ -99,26 +99,18 @@ jobs:

|

||||

username: ${{ github.repository_owner }}

|

||||

password: ${{ secrets.GITHUB_TOKEN }}

|

||||

registry: ghcr.io

|

||||

env:

|

||||

DOCKER_CONFIG: ${{ runner.temp }}/.docker

|

||||

|

||||

# Build project artifacts

|

||||

- name: Build

|

||||

if: steps.check_release.outputs.skip == 'false'

|

||||

run: make build

|

||||

env:

|

||||

DOCKER_CONFIG: ${{ runner.temp }}/.docker

|

||||

|

||||

# Commit built artifacts

|

||||

- name: Commit release artifacts

|

||||

if: steps.check_release.outputs.skip == 'false'

|

||||

env:

|

||||

GH_PAT: ${{ secrets.GH_PAT }}

|

||||

run: |

|

||||

git config user.name "cozystack-bot"

|

||||

git config user.email "217169706+cozystack-bot@users.noreply.github.com"

|

||||

git remote set-url origin https://cozystack-bot:${GH_PAT}@github.com/${GITHUB_REPOSITORY}

|

||||

git config --unset-all http.https://github.com/.extraheader || true

|

||||

git config user.name "github-actions"

|

||||

git config user.email "github-actions@github.com"

|

||||

git add .

|

||||

git commit -m "Prepare release ${GITHUB_REF#refs/tags/}" -s || echo "No changes to commit"

|

||||

git push origin HEAD || true

|

||||

@@ -149,35 +141,36 @@ jobs:

|

||||

version: ${{ steps.tag.outputs.tag }} # A

|

||||

compare-to: ${{ steps.latest_release.outputs.tag }} # B

|

||||

|

||||

# Create or reuse draft release

|

||||

# Create or reuse DRAFT GitHub Release

|

||||

- name: Create / reuse draft release

|

||||

if: steps.check_release.outputs.skip == 'false'

|

||||

id: release

|

||||

uses: actions/github-script@v7

|

||||

with:

|

||||

script: |

|

||||

const tag = '${{ steps.tag.outputs.tag }}';

|

||||

const isRc = ${{ steps.tag.outputs.is_rc }};

|

||||

const releases = await github.rest.repos.listReleases({

|

||||

const tag = '${{ steps.tag.outputs.tag }}';

|

||||

const isRc = ${{ steps.tag.outputs.is_rc }};

|

||||

const outdated = '${{ steps.semver.outputs.comparison-result }}' === '<';

|

||||

const makeLatest = outdated ? false : 'legacy';

|

||||

const releases = await github.rest.repos.listReleases({

|

||||

owner: context.repo.owner,

|

||||

repo: context.repo.repo

|

||||

});

|

||||

|

||||

let rel = releases.data.find(r => r.tag_name === tag);

|

||||

let rel = releases.data.find(r => r.tag_name === tag);

|

||||

if (!rel) {

|

||||

rel = await github.rest.repos.createRelease({

|

||||

owner: context.repo.owner,

|

||||

repo: context.repo.repo,

|

||||

tag_name: tag,

|

||||

name: tag,

|

||||

draft: true,

|

||||

prerelease: isRc // no make_latest for drafts

|

||||

tag_name: tag,

|

||||

name: tag,

|

||||

draft: true,

|

||||

prerelease: isRc,

|

||||

make_latest: makeLatest

|

||||

});

|

||||

console.log(`Draft release created for ${tag}`);

|

||||

} else {

|

||||

console.log(`Re-using existing release ${tag}`);

|

||||

}

|

||||

|

||||

core.setOutput('upload_url', rel.upload_url);

|

||||

|

||||

# Build + upload assets (optional)

|

||||

@@ -192,12 +185,7 @@ jobs:

|

||||

# Create release-X.Y.Z branch and push (force-update)

|

||||

- name: Create release branch

|

||||

if: steps.check_release.outputs.skip == 'false'

|

||||

env:

|

||||

GH_PAT: ${{ secrets.GH_PAT }}

|

||||

run: |

|

||||

git config user.name "cozystack-bot"

|

||||

git config user.email "217169706+cozystack-bot@users.noreply.github.com"

|

||||

git remote set-url origin https://cozystack-bot:${GH_PAT}@github.com/${GITHUB_REPOSITORY}

|

||||

BRANCH="release-${GITHUB_REF#refs/tags/v}"

|

||||

git branch -f "$BRANCH"

|

||||

git push -f origin "$BRANCH"

|

||||

@@ -207,7 +195,6 @@ jobs:

|

||||

if: steps.check_release.outputs.skip == 'false'

|

||||

uses: actions/github-script@v7

|

||||

with:

|

||||

github-token: ${{ secrets.GH_PAT }}

|

||||

script: |

|

||||

const version = context.ref.replace('refs/tags/v', '');

|

||||

const base = '${{ steps.get_base.outputs.branch }}';

|

||||

|

||||

3

.gitignore

vendored

@@ -1,5 +1,4 @@

|

||||

_out

|

||||

_repos

|

||||

.git

|

||||

.idea

|

||||

.vscode

|

||||

@@ -78,5 +77,3 @@ fabric.properties

|

||||

|

||||

.DS_Store

|

||||

**/.DS_Store

|

||||

|

||||

tmp/

|

||||

|

||||

@@ -1,17 +1,24 @@

|

||||

repos:

|

||||

- repo: local

|

||||

hooks:

|

||||

- id: gen-versions-map

|

||||

name: Generate versions map and check for changes

|

||||

entry: sh -c 'make -C packages/apps check-version-map && make -C packages/extra check-version-map'

|

||||

language: system

|

||||

types: [file]

|

||||

pass_filenames: false

|

||||

description: Run the script and fail if it generates changes

|

||||

- id: run-make-generate

|

||||

name: Run 'make generate' in all app directories

|

||||

entry: |

|

||||

flock -x .git/pre-commit.lock sh -c '

|

||||

for dir in ./packages/apps/*/ ./packages/extra/*/; do

|

||||

/bin/bash -c '

|

||||

for dir in ./packages/apps/*/; do

|

||||

if [ -d "$dir" ]; then

|

||||

echo "Running make generate in $dir"

|

||||

make generate -C "$dir" || exit $?

|

||||

(cd "$dir" && make generate)

|

||||

fi

|

||||

done

|

||||

git diff --color=always | cat

|

||||

'

|

||||

language: system

|

||||

language: script

|

||||

files: ^.*$

|

||||

|

||||

@@ -30,6 +30,3 @@ This list is sorted in chronological order, based on the submission date.

|

||||

| [Bootstack](https://bootstack.app/) | @mrkhachaturov | 2024-08-01| At Bootstack, we utilize a Kubernetes operator specifically designed to simplify and streamline cloud infrastructure creation.|

|

||||

| [gohost](https://gohost.kz/) | @karabass_off | 2024-02-01 | Our company has been working in the market of Kazakhstan for more than 15 years, providing clients with a standard set of services: VPS/VDC, IaaS, shared hosting, etc. Now we are expanding the lineup by introducing Bare Metal Kubernetes cluster under Cozystack management. |

|

||||

| [Urmanac](https://urmanac.com) | @kingdonb | 2024-12-04 | Urmanac is the future home of a hosting platform for the knowledge base of a community of personal server enthusiasts. We use Cozystack to provide support services for web sites hosted using both conventional deployments and on SpinKube, with WASM. |

|

||||

| [Hidora](https://hikube.cloud) | @matthieu-robin | 2025-09-17 | Hidora is a Swiss cloud provider delivering managed services and infrastructure solutions through datacenters located in Switzerland, ensuring data sovereignty and reliability. Its sovereign cloud platform, Hikube, is designed to run workloads with high availability across multiple datacenters, providing enterprises with a secure and scalable foundation for their applications based on Cozystack. |

|

||||

| [QOSI](https://qosi.kz) | @tabu-a | 2025-10-04 | QOSI is a non-profit organization driving open-source adoption and digital sovereignty across Kazakhstan and Central Asia. We use Cozystack as a platform for deploying sovereign, GPU-enabled clouds and educational environments under the National AI Program. Our goal is to accelerate the region’s transition toward open, self-hosted cloud-native technologies |

|

||||

|

|

||||

38

AGENTS.md

@@ -1,38 +0,0 @@

|

||||

# AI Agents Overview

|

||||

|

||||

This file provides structured guidance for AI coding assistants and agents

|

||||

working with the **Cozystack** project.

|

||||

|

||||

## Agent Documentation

|

||||

|

||||

| Agent | Purpose |

|

||||

|-------|---------|

|

||||

| [overview.md](./docs/agents/overview.md) | Project structure and conventions |

|

||||

| [contributing.md](./docs/agents/contributing.md) | Commits, pull requests, and git workflow |

|

||||

| [changelog.md](./docs/agents/changelog.md) | Changelog generation instructions |

|

||||

| [releasing.md](./docs/agents/releasing.md) | Release process and workflow |

|

||||

|

||||

## Project Overview

|

||||

|

||||

**Cozystack** is a Kubernetes-based platform for building cloud infrastructure with managed services (databases, VMs, K8s clusters), multi-tenancy, and GitOps delivery.

|

||||

|

||||

## Quick Reference

|

||||

|

||||

### Code Structure

|

||||

- `packages/core/` - Core platform charts (installer, platform)
- `packages/system/` - System components (CSI, CNI, operators)
- `packages/apps/` - User-facing applications in catalog
- `packages/extra/` - Tenant-specific modules
- `cmd/`, `internal/`, `pkg/` - Go code
- `api/` - Kubernetes CRDs

|

||||

|

||||

### Conventions

|

||||

- **Helm Charts**: Umbrella pattern, vendored upstream charts in `charts/`

|

||||

- **Go Code**: Controller-runtime patterns, kubebuilder style

|

||||

- **Git Commits**: `[component] Description` format with `--signoff`
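As a rough illustration of the commit convention above, the following Go sketch checks that a message starts with a `[component]` prefix and carries the `Signed-off-by:` trailer that `git commit --signoff` appends. The function name, the regular expression, and the sample messages are assumptions made for this example; they are not part of Cozystack's tooling.

```go
package main

import (
	"errors"
	"fmt"
	"regexp"
	"strings"
)

// subjectPattern is an assumed reading of "[component] Description":
// a non-empty bracketed component, one space, then a non-empty description.
var subjectPattern = regexp.MustCompile(`^\[[^\[\]]+\] \S.*$`)

// checkCommitMessage is a hypothetical helper, not part of the Cozystack repo.
// It returns an error describing the first convention the message violates.
func checkCommitMessage(msg string) error {
	lines := strings.Split(strings.TrimSpace(msg), "\n")
	if !subjectPattern.MatchString(lines[0]) {
		return fmt.Errorf("subject %q does not match \"[component] Description\"", lines[0])
	}
	// `git commit --signoff` appends a "Signed-off-by: Name <email>" trailer.
	for _, line := range lines[1:] {
		if strings.HasPrefix(line, "Signed-off-by: ") {
			return nil
		}
	}
	return errors.New("missing Signed-off-by trailer (commit with --signoff)")
}

func main() {
	good := "[dashboard] Fix sidebar ordering\n\nSigned-off-by: Jane Doe <jane@example.com>"
	bad := "Fix sidebar ordering"
	fmt.Println(checkCommitMessage(good))
	fmt.Println(checkCommitMessage(bad))
}
```

A check like this could run in a commit hook or CI step, but whether the project enforces the format automatically is not stated here.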

|

||||

|

||||

### What NOT to Do

|

||||

- ❌ Edit `/vendor/`, `zz_generated.*.go`, upstream charts directly
- ❌ Modify `go.mod`/`go.sum` manually (use `go get`)
- ❌ Force push to main/master
- ❌ Commit built artifacts from `_out`

|

||||

@@ -1,22 +1,3 @@

|

||||

# Code of Conduct

|

||||

|

||||

Cozystack follows the [CNCF Code of Conduct](https://github.com/cncf/foundation/blob/master/code-of-conduct.md).

|

||||

|

||||

# Cozystack Vendor Neutrality Manifesto

|

||||

|

||||

Cozystack exists for the cloud-native community. We are committed to a project culture where no single company, product, or commercial agenda directs our roadmap, governance, brand, or releases. Our North Star is user value, technical excellence, and open collaboration under the CNCF umbrella.

|

||||

|

||||

## Our Commitments

|

||||

|

||||

- **Community-first:** Decisions prioritize the broader community over any vendor interest.

|

||||

- **Open collaboration:** Ideas, discussions, and outcomes happen in public spaces; contributions are welcomed from all.

|

||||

- **Merit over affiliation:** Proposals are evaluated on technical merit and user impact, not on who submits them.

|

||||

- **Inclusive stewardship:** Leadership and maintenance are open to contributors who demonstrate sustained, constructive impact.

|

||||

- **Technology choice:** We prefer open, pluggable designs that interoperate with multiple ecosystems and providers.

|

||||

- **Neutral brand & voice:** Our name, logo, website, and documentation do not imply endorsement or preference for any vendor.

|

||||

- **Transparent practices:** Funding acknowledgments, partnerships, and potential conflicts are communicated openly.

|

||||

- **User trust:** Security handling, releases, and communications aim to be timely, transparent, and fair to all users.

|

||||

|

||||

By contributing to Cozystack, we affirm these principles and work together to keep the project open, welcoming, and vendor-neutral.

|

||||

|

||||

*— The Cozystack community*

|

||||

|

||||

@@ -1,151 +0,0 @@

|

||||

# Contributor Ladder

|

||||

|

||||

* [Contributor Ladder](#contributor-ladder)
  * [Community Participant](#community-participant)
  * [Contributor](#contributor)
  * [Reviewer](#reviewer)
  * [Maintainer](#maintainer)
* [Inactivity](#inactivity)
* [Involuntary Removal](#involuntary-removal-or-demotion)
* [Stepping Down/Emeritus Process](#stepping-downemeritus-process)
* [Contact](#contact)

|

||||

|

||||

|

||||

## Contributor Ladder

|

||||

|

||||

Hello! We are excited that you want to learn more about our project contributor ladder! This contributor ladder outlines the different contributor roles within the project, along with the responsibilities and privileges that come with them. Community members generally start at the first levels of the "ladder" and advance up it as their involvement in the project grows. Our project members are happy to help you advance along the contributor ladder.

|

||||

|

||||

Each of the contributor roles below is organized into lists of three types of things. "Responsibilities" are things that a contributor is expected to do. "Requirements" are qualifications a person needs to meet to be in that role, and "Privileges" are things contributors on that level are entitled to.

|

||||

|

||||

|

||||

### Community Participant

|

||||

Description: A Community Participant engages with the project and its community, contributing their time, thoughts, etc. Community participants are usually users who have stopped being anonymous and started being active in project discussions.

|

||||

|

||||

* Responsibilities:
  * Must follow the [CNCF CoC](https://github.com/cncf/foundation/blob/main/code-of-conduct.md)
* How users can get involved with the community:
  * Participating in community discussions
  * Helping other users
  * Submitting bug reports
  * Commenting on issues
  * Trying out new releases
  * Attending community events

|

||||

|

||||

|

||||

### Contributor

|

||||

Description: A Contributor contributes directly to the project and adds value to it. Contributions need not be code. People at the Contributor level may be new contributors, or they may only contribute occasionally.

|

||||

|

||||

* Responsibilities include:
  * Follow the [CNCF CoC](https://github.com/cncf/foundation/blob/main/code-of-conduct.md)
  * Follow the project [contributing guide](https://github.com/cozystack/cozystack/blob/main/CONTRIBUTING.md)
* Requirements (one or several of the below):
  * Report and sometimes resolve issues
  * Occasionally submit PRs
  * Contribute to the documentation
  * Show up at meetings, take notes
  * Answer questions from other community members
  * Submit feedback on issues and PRs
  * Test releases and patches and submit reviews
  * Run or help run events
  * Promote the project in public
  * Help run the project infrastructure
* Privileges:
  * Invitations to contributor events
  * Eligible to become a Maintainer

|

||||

|

||||

|

||||

### Reviewer

|

||||

Description: A Reviewer has responsibility for specific code, documentation, test, or other project areas. They are collectively responsible, with other Reviewers, for reviewing all changes to those areas and indicating whether those changes are ready to merge. They have a track record of contribution and review in the project.

|

||||

|

||||

Reviewers are responsible for a "specific area." This can be a specific code directory, driver, chapter of the docs, test job, event, or other clearly-defined project component that is smaller than an entire repository or subproject. Most often it is one or a set of directories in one or more Git repositories. The "specific area" below refers to this area of responsibility.

|

||||

|

||||

Reviewers have all the rights and responsibilities of a Contributor, plus:

|

||||

|

||||

* Responsibilities include:
  * Continuing to contribute regularly, with at least 15 PRs a year as shown by [Cozystack devstats](https://cozystack.devstats.cncf.io)
  * Following the reviewing guide
  * Reviewing most Pull Requests against their specific areas of responsibility
  * Reviewing at least 40 PRs per year
  * Helping other contributors become reviewers
* Requirements:
  * Must have successful contributions to the project, including at least one of the following:
    * 10 accepted PRs,
    * Reviewed 20 PRs,
    * Resolved and closed 20 Issues,
    * Become responsible for a key project management area,
    * Or some equivalent combination or contribution
  * Must have been contributing for at least 6 months
  * Must be actively contributing to at least one project area
  * Must have two sponsors who are also Reviewers or Maintainers, at least one of whom does not work for the same employer
  * Has reviewed, or helped review, at least 20 Pull Requests
  * Has analyzed and resolved test failures in their specific area
  * Has demonstrated an in-depth knowledge of the specific area
  * Commits to being responsible for that specific area
  * Is supportive of new and occasional contributors and helps get useful PRs in shape to commit
* Additional privileges:
  * Has GitHub or CI/CD rights to approve pull requests in specific directories
  * Can recommend and review other contributors to become Reviewers
  * May be assigned Issues and Reviews
  * May give commands to CI/CD automation
  * Can recommend other contributors to become Reviewers

|

||||

|

||||

|

||||

The process of becoming a Reviewer is:

|

||||

1. The contributor is nominated by opening a PR against the appropriate repository, which adds their GitHub username to the OWNERS file for one or more directories.

|

||||

2. At least two members of the team that owns that repository or main directory, who are already Approvers, approve the PR.

|

||||

|

||||

|

||||

### Maintainer

|

||||

Description: Maintainers are very established contributors who are responsible for the entire project. As such, they have the ability to approve PRs against any area of the project, and are expected to participate in making decisions about the strategy and priorities of the project.

|

||||

|

||||

A Maintainer must meet the responsibilities and requirements of a Reviewer, plus:

|

||||

|

||||

* Responsibilities include:
  * Reviewing at least 40 PRs per year, especially PRs that involve multiple parts of the project
  * Mentoring new Reviewers
  * Writing refactoring PRs
  * Participating in CNCF maintainer activities
  * Determining strategy and policy for the project
  * Participating in, and leading, community meetings
* Requirements:
  * Experience as a Reviewer for at least 6 months
  * Demonstrates a broad knowledge of the project across multiple areas
  * Is able to exercise judgment for the good of the project, independent of their employer, friends, or team
  * Mentors other contributors
  * Can commit to spending at least 10 hours per month working on the project
* Additional privileges:
  * Approve PRs to any area of the project
  * Represent the project in public as a Maintainer
  * Communicate with the CNCF on behalf of the project
  * Have a vote in Maintainer decision-making meetings

|

||||

|

||||

|

||||

Process of becoming a maintainer:

|

||||

1. Any current Maintainer may nominate a current Reviewer to become a new Maintainer, by opening a PR against the root of the cozystack repository adding the nominee as an Approver in the [MAINTAINERS](https://github.com/cozystack/cozystack/blob/main/MAINTAINERS.md) file.

|

||||

2. The nominee will add a comment to the PR testifying that they agree to all requirements of becoming a Maintainer.

|

||||

3. A majority of the current Maintainers must then approve the PR.

|

||||

|

||||

|

||||

## Inactivity

|

||||

It is important for contributors to be and stay active to set an example and show commitment to the project. Inactivity is harmful to the project as it may lead to unexpected delays, contributor attrition, and a loss of trust in the project.

|

||||

|

||||

* Inactivity is measured by:
  * Periods of no contributions for longer than 6 months
  * Periods of no communication for longer than 3 months
* Consequences of being inactive include:
  * Involuntary removal or demotion
  * Being asked to move to Emeritus status

|

||||

|

||||

## Involuntary Removal or Demotion

|

||||

|

||||

Involuntary removal/demotion of a contributor happens when responsibilities and requirements aren't being met. This may include repeated patterns of inactivity, an extended period of inactivity, a period of failing to meet the requirements of your role, and/or a violation of the Code of Conduct. This process is important because it protects the community and its deliverables while also opening up opportunities for new contributors to step in.

|

||||

|

||||

Involuntary removal or demotion is handled through a vote by a majority of the current Maintainers.

|

||||

|

||||

## Stepping Down/Emeritus Process

|

||||

If and when contributors' commitment levels change, contributors can consider stepping down (moving down the contributor ladder) vs moving to emeritus status (completely stepping away from the project).

|

||||

|

||||

Contact the Maintainers about changing to Emeritus status, or reducing your contributor level.

|

||||

|

||||

## Contact

|

||||

* For inquiries, please reach out to: @kvaps, @tym83

|

||||

@@ -7,6 +7,6 @@

|

||||

| Kingdon Barrett | [@kingdonb](https://github.com/kingdonb) | Urmanac | FluxCD and flux-operator |

|

||||

| Timofei Larkin | [@lllamnyp](https://github.com/lllamnyp) | 3commas | Etcd-operator Lead |

|

||||

| Artem Bortnikov | [@aobort](https://github.com/aobort) | Timescale | Etcd-operator Lead |

|

||||

| Andrei Gumilev | [@chumkaska](https://github.com/chumkaska) | Ænix | Platform Documentation |

|

||||

| Timur Tukaev | [@tym83](https://github.com/tym83) | Ænix | Cozystack Website, Marketing, Community Management |

|

||||

| Kirill Klinchenkov | [@klinch0](https://github.com/klinch0) | Ænix | Core Maintainer |

|

||||

| Nikita Bykov | [@nbykov0](https://github.com/nbykov0) | Ænix | Maintainer of ARM and stuff |

|

||||

|

||||

20

Makefile

@@ -1,4 +1,4 @@

|

||||

.PHONY: manifests repos assets unit-tests helm-unit-tests

|

||||

.PHONY: manifests repos assets

|

||||

|

||||

build-deps:

|

||||

@command -V find docker skopeo jq gh helm > /dev/null

|

||||

@@ -9,31 +9,34 @@ build-deps:

|

||||

|

||||

build: build-deps

|

||||

make -C packages/apps/http-cache image

|

||||

make -C packages/apps/postgres image

|

||||

make -C packages/apps/mysql image

|

||||

make -C packages/apps/clickhouse image

|

||||

make -C packages/apps/kubernetes image

|

||||

make -C packages/extra/monitoring image

|

||||

make -C packages/system/cozystack-api image

|

||||

make -C packages/system/cozystack-controller image

|

||||

make -C packages/system/lineage-controller-webhook image

|

||||

make -C packages/system/cilium image

|

||||

make -C packages/system/kubeovn image

|

||||

make -C packages/system/kubeovn-webhook image

|

||||

make -C packages/system/kubeovn-plunger image

|

||||

make -C packages/system/dashboard image

|

||||

make -C packages/system/metallb image

|

||||

make -C packages/system/kamaji image

|

||||

make -C packages/system/bucket image

|

||||

make -C packages/system/objectstorage-controller image

|

||||

make -C packages/core/testing image

|

||||

make -C packages/core/installer image

|

||||

make manifests

|

||||

|

||||

repos:

|

||||

rm -rf _out

|

||||

make -C packages/apps check-version-map

|

||||

make -C packages/extra check-version-map

|

||||

make -C packages/system repo

|

||||

make -C packages/apps repo

|

||||

make -C packages/extra repo

|

||||

mkdir -p _out/logos

|

||||

cp ./packages/apps/*/logos/*.svg ./packages/extra/*/logos/*.svg _out/logos/

|

||||

|

||||

|

||||

manifests:

|

||||

mkdir -p _out/assets

|

||||

@@ -46,15 +49,6 @@ test:

|

||||

make -C packages/core/testing apply

|

||||

make -C packages/core/testing test

|

||||

|

||||

unit-tests: helm-unit-tests

|

||||

|

||||

helm-unit-tests:

|

||||

hack/helm-unit-tests.sh

|

||||

|

||||

prepare-env:

|

||||

make -C packages/core/testing apply

|

||||

make -C packages/core/testing prepare-cluster

|

||||

|

||||

generate:

|

||||

hack/update-codegen.sh

|

||||

|

||||

|

||||

12

README.md

@@ -12,15 +12,11 @@

|

||||

|

||||

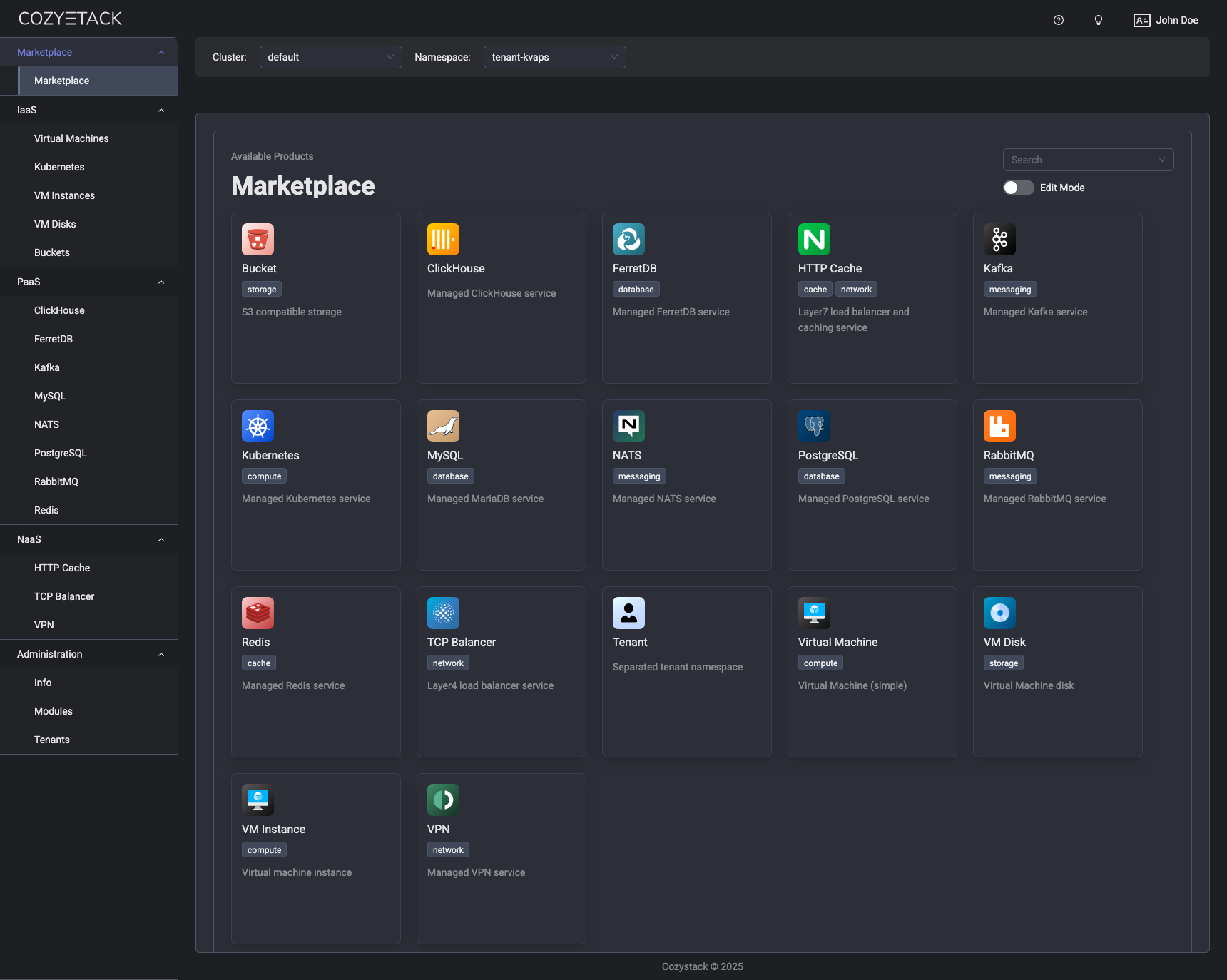

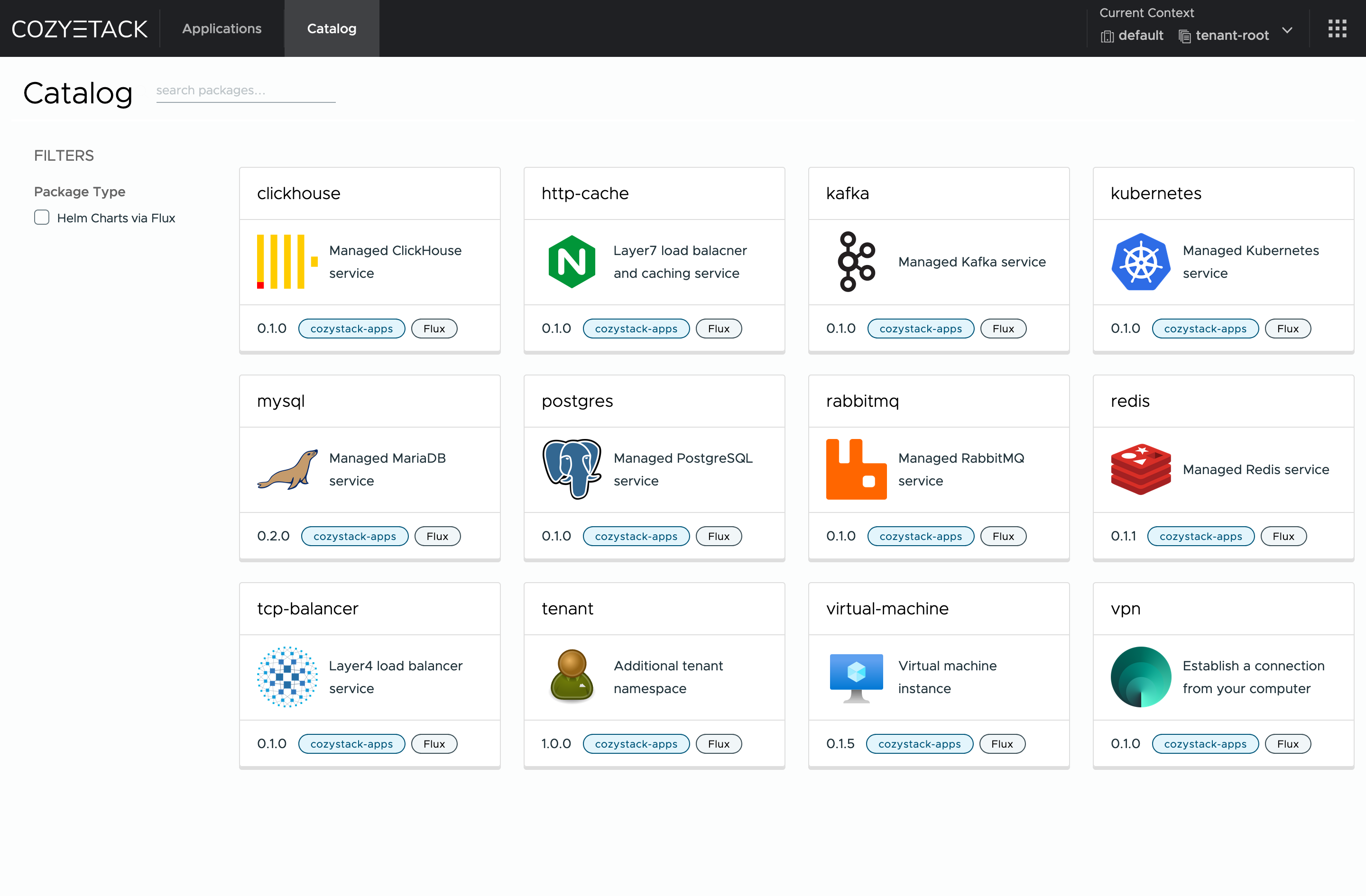

**Cozystack** is a free PaaS platform and framework for building clouds.

|

||||

|

||||

Cozystack is a [CNCF Sandbox Level Project](https://www.cncf.io/sandbox-projects/) that was originally built and sponsored by [Ænix](https://aenix.io/).

|

||||

|

||||

With Cozystack, you can transform a bunch of servers into an intelligent system with a simple REST API for spawning Kubernetes clusters,

|

||||

Database-as-a-Service, virtual machines, load balancers, HTTP caching services, and other services with ease.

|

||||

|

||||

Use Cozystack to build your own cloud or provide a cost-effective development environment.

|

||||

|

||||

|

||||

|

||||

## Use-Cases

|

||||

|

||||

* [**Using Cozystack to build a public cloud**](https://cozystack.io/docs/guides/use-cases/public-cloud/)

|

||||

@@ -32,6 +28,9 @@ You can use Cozystack as a platform to build a private cloud powered by Infrastr

|

||||

* [**Using Cozystack as a Kubernetes distribution**](https://cozystack.io/docs/guides/use-cases/kubernetes-distribution/)

|

||||

You can use Cozystack as a Kubernetes distribution for Bare Metal

|

||||

|

||||

## Screenshot

|

||||

|

||||

|

||||

|

||||

## Documentation

|

||||

|

||||

@@ -60,10 +59,7 @@ Commits are used to generate the changelog, and their author will be referenced

|

||||

|

||||

If you have **Feature Requests** please use the [Discussion's Feature Request section](https://github.com/cozystack/cozystack/discussions/categories/feature-requests).

|

||||

|

||||

## Community

|

||||

|

||||

You are welcome to join our [Telegram group](https://t.me/cozystack) and come to our weekly community meetings.

|

||||

Add them to your [Google Calendar](https://calendar.google.com/calendar?cid=ZTQzZDIxZTVjOWI0NWE5NWYyOGM1ZDY0OWMyY2IxZTFmNDMzZTJlNjUzYjU2ZGJiZGE3NGNhMzA2ZjBkMGY2OEBncm91cC5jYWxlbmRhci5nb29nbGUuY29t) or [iCal](https://calendar.google.com/calendar/ical/e43d21e5c9b45a95f28c5d649c2cb1e1f433e2e653b56dbbda74ca306f0d0f68%40group.calendar.google.com/public/basic.ics) for convenience.

|

||||

You are welcome to join our weekly community meetings (just add these events to your [Google Calendar](https://calendar.google.com/calendar?cid=ZTQzZDIxZTVjOWI0NWE5NWYyOGM1ZDY0OWMyY2IxZTFmNDMzZTJlNjUzYjU2ZGJiZGE3NGNhMzA2ZjBkMGY2OEBncm91cC5jYWxlbmRhci5nb29nbGUuY29t) or [iCal](https://calendar.google.com/calendar/ical/e43d21e5c9b45a95f28c5d649c2cb1e1f433e2e653b56dbbda74ca306f0d0f68%40group.calendar.google.com/public/basic.ics)) or our [Telegram group](https://t.me/cozystack).

|

||||

|

||||

## License

|

||||

|

||||

|

||||

@@ -1,5 +1,4 @@

|

||||

API rule violation: list_type_missing,github.com/cozystack/cozystack/pkg/apis/apps/v1alpha1,ApplicationStatus,Conditions

|

||||

API rule violation: list_type_missing,github.com/cozystack/cozystack/pkg/apis/core/v1alpha1,TenantModuleStatus,Conditions

|

||||

API rule violation: names_match,k8s.io/apiextensions-apiserver/pkg/apis/apiextensions/v1,JSONSchemaProps,Ref

|

||||

API rule violation: names_match,k8s.io/apiextensions-apiserver/pkg/apis/apiextensions/v1,JSONSchemaProps,Schema

|

||||

API rule violation: names_match,k8s.io/apiextensions-apiserver/pkg/apis/apiextensions/v1,JSONSchemaProps,XEmbeddedResource

|

||||

|

||||

@@ -1,255 +0,0 @@

|

||||

// SPDX-License-Identifier: Apache-2.0

|

||||

// Package v1alpha1 defines front.in-cloud.io API types.

|

||||

//

|

||||

// Group: dashboard.cozystack.io

|

||||

// Version: v1alpha1

|

||||

package v1alpha1

|

||||

|

||||

import (

|

||||

v1 "k8s.io/apiextensions-apiserver/pkg/apis/apiextensions/v1"

|

||||

metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"

|

||||

)

|

||||

|

||||

// -----------------------------------------------------------------------------

|

||||

// Shared shapes

|

||||

// -----------------------------------------------------------------------------

|

||||

|

||||

// CommonStatus is a generic Status block with Kubernetes conditions.

|

||||

type CommonStatus struct {

|

||||

// ObservedGeneration reflects the most recent generation observed by the controller.

|

||||

// +optional

|

||||

ObservedGeneration int64 `json:"observedGeneration,omitempty"`

|

||||

|

||||

// Conditions represent the latest available observations of an object's state.

|

||||

// +optional

|

||||

Conditions []metav1.Condition `json:"conditions,omitempty"`

|

||||

}

|

||||

|

||||

// ArbitrarySpec holds schemaless user data and preserves unknown fields.

|

||||

// We map the entire .spec to a single JSON payload to mirror the CRDs you provided.

|

||||

// NOTE: Using apiextensionsv1.JSON avoids losing arbitrary structure during round-trips.

|

||||

type ArbitrarySpec struct {

|

||||

// +kubebuilder:validation:XPreserveUnknownFields

|

||||

// +kubebuilder:pruning:PreserveUnknownFields

|

||||

v1.JSON `json:",inline"`

|

||||

}
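To illustrate why the spec is kept as raw JSON, here is a standard-library sketch in which `rawJSON` stands in for `apiextensionsv1.JSON` (a wrapper around raw bytes) and `arbitrarySpec` embeds it. Both type names, the `sidebar` wrapper, and the sample payload are assumptions for this example. Because the embedded type supplies its own marshal and unmarshal methods, the whole `.spec` object is carried through a decode/encode cycle without any field being dropped.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// rawJSON is a stand-in for apiextensionsv1.JSON: it stores the bytes as-is.
type rawJSON struct {
	Raw []byte
}

// MarshalJSON emits the stored bytes, or null when nothing was stored.
func (j rawJSON) MarshalJSON() ([]byte, error) {
	if len(j.Raw) == 0 {
		return []byte("null"), nil
	}
	return j.Raw, nil
}

// UnmarshalJSON copies the incoming bytes without interpreting them.
func (j *rawJSON) UnmarshalJSON(data []byte) error {
	j.Raw = append(j.Raw[:0], data...)
	return nil
}

// arbitrarySpec mirrors the shape of ArbitrarySpec: the embedded type's
// methods are promoted, so the struct (un)marshals as one opaque payload.
type arbitrarySpec struct {
	rawJSON
}

// sidebar is a minimal stand-in for a resource that carries such a spec.
type sidebar struct {
	Spec arbitrarySpec `json:"spec"`
}

// roundTrip decodes a document into sidebar and encodes it again.
func roundTrip(in string) (string, error) {
	var s sidebar
	if err := json.Unmarshal([]byte(in), &s); err != nil {
		return "", err
	}
	out, err := json.Marshal(s)
	return string(out), err
}

func main() {
	out, err := roundTrip(`{"spec":{"menuItems":[{"key":"vm","children":[]}],"extra":42}}`)
	fmt.Println(out, err)
}
```

A typed struct with named fields would silently discard `extra` here, which is the loss the NOTE in the comment above refers to.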

|

||||

|

||||

// -----------------------------------------------------------------------------

|

||||

// Sidebar

|

||||

// -----------------------------------------------------------------------------

|

||||

|

||||

// +kubebuilder:object:root=true

|

||||

// +kubebuilder:resource:path=sidebars,scope=Cluster

|

||||

// +kubebuilder:subresource:status

|

||||

type Sidebar struct {

|

||||

metav1.TypeMeta `json:",inline"`

|

||||

metav1.ObjectMeta `json:"metadata,omitempty"`

|

||||

|

||||

Spec ArbitrarySpec `json:"spec"`

|

||||

Status CommonStatus `json:"status,omitempty"`

|

||||

}

|

||||

|

||||

// +kubebuilder:object:root=true

|

||||

type SidebarList struct {

|

||||

metav1.TypeMeta `json:",inline"`

|

||||

metav1.ListMeta `json:"metadata,omitempty"`

|

||||

Items []Sidebar `json:"items"`

|

||||

}

|

||||

|

||||

// -----------------------------------------------------------------------------

|

||||

// CustomFormsPrefill (shortName: cfp)

|

||||

// -----------------------------------------------------------------------------

|

||||

|

||||

// +kubebuilder:object:root=true

|

||||

// +kubebuilder:resource:path=customformsprefills,scope=Cluster,shortName=cfp

|

||||

// +kubebuilder:subresource:status

|

||||

type CustomFormsPrefill struct {

|

||||

metav1.TypeMeta `json:",inline"`

|

||||

metav1.ObjectMeta `json:"metadata,omitempty"`

|

||||

|

||||

Spec ArbitrarySpec `json:"spec"`

|

||||

Status CommonStatus `json:"status,omitempty"`

|

||||

}

|

||||

|

||||

// +kubebuilder:object:root=true

|

||||

type CustomFormsPrefillList struct {

|

||||

metav1.TypeMeta `json:",inline"`

|

||||

metav1.ListMeta `json:"metadata,omitempty"`

|

||||

Items []CustomFormsPrefill `json:"items"`

|

||||

}

|

||||

|

||||

// -----------------------------------------------------------------------------

|

||||

// BreadcrumbInside

|

||||

// -----------------------------------------------------------------------------

|

||||

|

||||

// +kubebuilder:object:root=true

|

||||

// +kubebuilder:resource:path=breadcrumbsinside,scope=Cluster

|

||||

// +kubebuilder:subresource:status

|

||||

type BreadcrumbInside struct {

|

||||

metav1.TypeMeta `json:",inline"`

|

||||

metav1.ObjectMeta `json:"metadata,omitempty"`

|

||||

|

||||

Spec ArbitrarySpec `json:"spec"`

|

||||

Status CommonStatus `json:"status,omitempty"`

|

||||

}

|

||||

|

||||

// +kubebuilder:object:root=true

|

||||

type BreadcrumbInsideList struct {

|

||||

metav1.TypeMeta `json:",inline"`

|

||||

metav1.ListMeta `json:"metadata,omitempty"`

|

||||

Items []BreadcrumbInside `json:"items"`

|

||||

}

|

||||

|

||||

// -----------------------------------------------------------------------------

|

||||

// CustomFormsOverride (shortName: cfo)

|

||||

// -----------------------------------------------------------------------------

|

||||

|

||||

// +kubebuilder:object:root=true

|

||||

// +kubebuilder:resource:path=customformsoverrides,scope=Cluster,shortName=cfo

|

||||

// +kubebuilder:subresource:status

|

||||

type CustomFormsOverride struct {

|

||||

metav1.TypeMeta `json:",inline"`

|

||||

metav1.ObjectMeta `json:"metadata,omitempty"`

|

||||

|

||||

Spec ArbitrarySpec `json:"spec"`

|

||||

Status CommonStatus `json:"status,omitempty"`

|

||||

}

|

||||

|

||||

// +kubebuilder:object:root=true

|

||||

type CustomFormsOverrideList struct {

|

||||

metav1.TypeMeta `json:",inline"`

|

||||

metav1.ListMeta `json:"metadata,omitempty"`

|

||||

Items []CustomFormsOverride `json:"items"`

|

||||

}

|

||||

|

||||

// -----------------------------------------------------------------------------

|

||||

// TableUriMapping

|

||||

// -----------------------------------------------------------------------------

|

||||

|

||||

// +kubebuilder:object:root=true

|

||||

// +kubebuilder:resource:path=tableurimappings,scope=Cluster

|

||||

// +kubebuilder:subresource:status

|

||||

type TableUriMapping struct {

|

||||

metav1.TypeMeta `json:",inline"`

|

||||

metav1.ObjectMeta `json:"metadata,omitempty"`

|

||||

|

||||

Spec ArbitrarySpec `json:"spec"`

|

||||

Status CommonStatus `json:"status,omitempty"`

|

||||

}

|

||||

|

||||

// +kubebuilder:object:root=true

|

||||

type TableUriMappingList struct {

|

||||

metav1.TypeMeta `json:",inline"`

|

||||

metav1.ListMeta `json:"metadata,omitempty"`

|

||||

Items []TableUriMapping `json:"items"`

|

||||

}

|

||||

|

||||

// -----------------------------------------------------------------------------

|

||||

// Breadcrumb

|

||||

// -----------------------------------------------------------------------------

|

||||

|

||||

// +kubebuilder:object:root=true

|

||||

// +kubebuilder:resource:path=breadcrumbs,scope=Cluster

|

||||

// +kubebuilder:subresource:status

|

||||

type Breadcrumb struct {

|

||||

metav1.TypeMeta `json:",inline"`

|

||||

metav1.ObjectMeta `json:"metadata,omitempty"`

|

||||

|

||||

Spec ArbitrarySpec `json:"spec"`

|

||||

Status CommonStatus `json:"status,omitempty"`

|

||||

}

|

||||

|

||||

// +kubebuilder:object:root=true

|

||||

type BreadcrumbList struct {

|

||||

metav1.TypeMeta `json:",inline"`

|

||||

metav1.ListMeta `json:"metadata,omitempty"`

|

||||

Items []Breadcrumb `json:"items"`

|

||||

}

|

||||

|

||||

// -----------------------------------------------------------------------------

|

||||

// MarketplacePanel

|

||||

// -----------------------------------------------------------------------------

|

||||

|

||||

// +kubebuilder:object:root=true

|

||||

// +kubebuilder:resource:path=marketplacepanels,scope=Cluster

|

||||

// +kubebuilder:subresource:status

|

||||

type MarketplacePanel struct {

|

||||

metav1.TypeMeta `json:",inline"`

|

||||

metav1.ObjectMeta `json:"metadata,omitempty"`

|

||||

|

||||

Spec ArbitrarySpec `json:"spec"`

|

||||

Status CommonStatus `json:"status,omitempty"`

|

||||

}

|

||||

|

||||

// +kubebuilder:object:root=true

|

||||

type MarketplacePanelList struct {

|

||||

metav1.TypeMeta `json:",inline"`