mirror of https://github.com/outbackdingo/cozystack.git

synced 2026-01-29 18:19:00 +00:00

Compare commits: release-0. ... kubernetes (1 commit)

| Author | SHA1 | Date |
|---|---|---|
| | b193bd96d4 | |

.github/PULL_REQUEST_TEMPLATE.md (24 changed lines)

@@ -1,24 +0,0 @@
<!-- Thank you for making a contribution! Here are some tips for you:
- Start the PR title with the [label] of Cozystack component:
- For system components: [platform], [system], [linstor], [cilium], [kube-ovn], [dashboard], [cluster-api], etc.
- For managed apps: [apps], [tenant], [kubernetes], [postgres], [virtual-machine] etc.
- For development and maintenance: [tests], [ci], [docs], [maintenance].
- If it's a work in progress, consider creating this PR as a draft.
- Don't hesistate to ask for opinion and review in the community chats, even if it's still a draft.
- Add the label `backport` if it's a bugfix that needs to be backported to a previous version.
-->

## What this PR does


### Release note

<!-- Write a release note:
- Explain what has changed internally and for users.
- Start with the same [label] as in the PR title
- Follow the guidelines at https://github.com/kubernetes/community/blob/master/contributors/guide/release-notes.md.
-->

```release-note
[]
```

.github/workflows/pre-commit.yml (12 changed lines)

@@ -2,7 +2,7 @@ name: Pre-Commit Checks

on:
pull_request:
types: [opened, synchronize, reopened]
types: [labeled, opened, synchronize, reopened]

concurrency:
group: pre-commit-${{ github.workflow }}-${{ github.event.pull_request.number }}
@@ -28,7 +28,15 @@ jobs:

- name: Install generate
run: |
curl -sSL https://github.com/cozystack/readme-generator-for-helm/releases/download/v1.0.0/readme-generator-for-helm-linux-amd64.tar.gz | tar -xzvf- -C /usr/local/bin/ readme-generator-for-helm
sudo apt update
sudo apt install curl -y
curl -fsSL https://deb.nodesource.com/setup_16.x | sudo -E bash -
sudo apt install nodejs -y
git clone https://github.com/bitnami/readme-generator-for-helm
cd ./readme-generator-for-helm
npm install
npm install -g pkg
pkg . -o /usr/local/bin/readme-generator

- name: Run pre-commit hooks
run: |

.github/workflows/pull-requests-release.yaml (85 changed lines)

@@ -1,17 +1,43 @@
name: "Releasing PR"
name: Releasing PR

on:
pull_request:
types: [closed]
paths-ignore:
- 'docs/**/*'
types: [labeled, opened, synchronize, reopened, closed]

# Cancel in‑flight runs for the same PR when a new push arrives.
concurrency:
group: pr-${{ github.workflow }}-${{ github.event.pull_request.number }}
group: pull-requests-release-${{ github.workflow }}-${{ github.event.pull_request.number }}
cancel-in-progress: true

jobs:
verify:
name: Test Release
runs-on: [self-hosted]
permissions:
contents: read
packages: write

# Run only when the PR carries the "release" label and not closed.
if: |
contains(github.event.pull_request.labels.*.name, 'release') &&
github.event.action != 'closed'

steps:
- name: Checkout code
uses: actions/checkout@v4
with:
fetch-depth: 0
fetch-tags: true

- name: Login to GitHub Container Registry
uses: docker/login-action@v3
with:
username: ${{ github.repository_owner }}
password: ${{ secrets.GITHUB_TOKEN }}
registry: ghcr.io

- name: Run tests
run: make test

finalize:
name: Finalize Release
runs-on: [self-hosted]
@@ -54,7 +80,6 @@ jobs:
- name: Ensure maintenance branch release-X.Y
uses: actions/github-script@v7
with:
github-token: ${{ secrets.GH_PAT }}
script: |
const tag = '${{ steps.get_tag.outputs.tag }}'; // e.g. v0.1.3 or v0.1.3-rc3
const match = tag.match(/^v(\d+)\.(\d+)\.\d+(?:[-\w\.]+)?$/);
@@ -64,45 +89,21 @@ jobs:
}
const line = `${match[1]}.${match[2]}`;
const branch = `release-${line}`;

// Get main branch commit for the tag
const ref = await github.rest.git.getRef({
owner: context.repo.owner,
repo: context.repo.repo,
ref: `tags/${tag}`
});

const commitSha = ref.data.object.sha;

try {
await github.rest.repos.getBranch({
owner: context.repo.owner,
repo: context.repo.repo,
repo: context.repo.repo,
branch
});

await github.rest.git.updateRef({
console.log(`Branch '${branch}' already exists`);
} catch (_) {
await github.rest.git.createRef({
owner: context.repo.owner,
repo: context.repo.repo,
ref: `heads/${branch}`,
sha: commitSha,
force: true
repo: context.repo.repo,
ref: `refs/heads/${branch}`,
sha: context.sha
});
console.log(`🔁 Force-updated '${branch}' to ${commitSha}`);
} catch (err) {
if (err.status === 404) {
await github.rest.git.createRef({
owner: context.repo.owner,
repo: context.repo.repo,
ref: `refs/heads/${branch}`,
sha: commitSha
});
console.log(`✅ Created branch '${branch}' at ${commitSha}`);
} else {
console.error('Unexpected error --', err);
core.setFailed(`Unexpected error creating/updating branch: ${err.message}`);
throw err;
}
console.log(`✅ Branch '${branch}' created at ${context.sha}`);
}

# Get the latest published release
@@ -136,12 +137,12 @@ jobs:
with:
script: |
const tag = '${{ steps.get_tag.outputs.tag }}'; // v0.31.5-rc.1
const m = tag.match(/^v(\d+\.\d+\.\d+)(-(?:alpha|beta|rc)\.\d+)?$/);
const m = tag.match(/^v(\d+\.\d+\.\d+)(-rc\.\d+)?$/);
if (!m) {
core.setFailed(`❌ tag '${tag}' must match 'vX.Y.Z' or 'vX.Y.Z-(alpha|beta|rc).N'`);
core.setFailed(`❌ tag '${tag}' must match 'vX.Y.Z' or 'vX.Y.Z-rc.N'`);
return;
}
const version = m[1] + (m[2] ?? ''); // 0.31.5-rc.1
const version = m[1] + (m[2] ?? ''); // 0.31.5‑rc.1
const isRc = Boolean(m[2]);
core.setOutput('is_rc', isRc);
const outdated = '${{ steps.semver.outputs.comparison-result }}' === '<';
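The reworked "Ensure maintenance branch release-X.Y" step resolves the tag to a commit, then either force-updates an existing `release-X.Y` branch or creates it when `getBranch` reports 404. The sketch below reproduces only that control flow in plain Node.js as an illustration, not as the workflow's actual code. `ensureMaintenanceBranch` and the in-memory `fakeGithub` stub are hypothetical; the stub merely imitates the shape of the Octokit calls used in the diff (`repos.getBranch`, `git.updateRef`, `git.createRef`) so the logic can run without network access.

```javascript
// Hypothetical helper mirroring the "Ensure maintenance branch release-X.Y"
// step: derive release-X.Y from the tag, then force-update or create it.
async function ensureMaintenanceBranch(github, { owner, repo }, tag, commitSha) {
  // Same pattern as the workflow: vX.Y.Z with an optional suffix.
  const match = tag.match(/^v(\d+)\.(\d+)\.\d+(?:[-\w.]+)?$/);
  if (!match) {
    throw new Error(`tag '${tag}' does not look like vX.Y.Z[-suffix]`);
  }
  const branch = `release-${match[1]}.${match[2]}`;

  try {
    // Rejects with err.status === 404 when the branch does not exist yet.
    await github.rest.repos.getBranch({ owner, repo, branch });
    // updateRef expects the ref without the leading "refs/".
    await github.rest.git.updateRef({
      owner, repo, ref: `heads/${branch}`, sha: commitSha, force: true,
    });
    return { branch, action: 'updated' };
  } catch (err) {
    if (err.status !== 404) throw err; // anything else is a real failure
    // createRef expects the fully qualified "refs/heads/..." name.
    await github.rest.git.createRef({
      owner, repo, ref: `refs/heads/${branch}`, sha: commitSha,
    });
    return { branch, action: 'created' };
  }
}

// Hypothetical in-memory stand-in for the Octokit client, for local runs.
function fakeGithub(existingBranches) {
  const calls = [];
  const notFound = () => Object.assign(new Error('Not Found'), { status: 404 });
  return {
    calls,
    rest: {
      repos: {
        async getBranch({ branch }) {
          calls.push(['getBranch', branch]);
          if (!existingBranches.has(branch)) throw notFound();
          return { data: { name: branch } };
        },
      },
      git: {
        async updateRef({ ref, sha }) { calls.push(['updateRef', ref, sha]); return {}; },
        async createRef({ ref, sha }) { calls.push(['createRef', ref, sha]); return {}; },
      },
    },
  };
}
```

For example, `await ensureMaintenanceBranch(fakeGithub(new Set(['release-0.31'])), { owner: 'o', repo: 'r' }, 'v0.31.5-rc.1', 'abc123')` resolves to `{ branch: 'release-0.31', action: 'updated' }`. Note the asymmetry carried over from the diff: `updateRef` takes `heads/<branch>`, while `createRef` needs `refs/heads/<branch>`. Because `updateRef` sits inside the same `try`, a 404 from it would also fall through to `createRef`, just as in the workflow.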

.github/workflows/pull-requests.yaml (322 changed lines)

@@ -2,18 +2,15 @@ name: Pull Request

on:
pull_request:
types: [opened, synchronize, reopened]
paths-ignore:
- 'docs/**/*'
types: [labeled, opened, synchronize, reopened]

# Cancel in‑flight runs for the same PR when a new push arrives.
concurrency:
group: pr-${{ github.workflow }}-${{ github.event.pull_request.number }}
group: pull-requests-${{ github.workflow }}-${{ github.event.pull_request.number }}
cancel-in-progress: true

jobs:
build:
name: Build
e2e:
name: Build and Test
runs-on: [self-hosted]
permissions:
contents: read
@@ -36,316 +33,9 @@ jobs:
username: ${{ github.repository_owner }}
password: ${{ secrets.GITHUB_TOKEN }}
registry: ghcr.io
env:
DOCKER_CONFIG: ${{ runner.temp }}/.docker

- name: Build
run: make build
env:
DOCKER_CONFIG: ${{ runner.temp }}/.docker

- name: Build Talos image
run: make -C packages/core/installer talos-nocloud

- name: Save git diff as patch
if: "!contains(github.event.pull_request.labels.*.name, 'release')"
run: git diff HEAD > _out/assets/pr.patch

- name: Upload git diff patch
if: "!contains(github.event.pull_request.labels.*.name, 'release')"
uses: actions/upload-artifact@v4
with:
name: pr-patch
path: _out/assets/pr.patch

- name: Upload installer
uses: actions/upload-artifact@v4
with:
name: cozystack-installer
path: _out/assets/cozystack-installer.yaml

- name: Upload Talos image
uses: actions/upload-artifact@v4
with:
name: talos-image
path: _out/assets/nocloud-amd64.raw.xz

resolve_assets:
name: "Resolve assets"
runs-on: ubuntu-latest
if: contains(github.event.pull_request.labels.*.name, 'release')
outputs:
installer_id: ${{ steps.fetch_assets.outputs.installer_id }}
disk_id: ${{ steps.fetch_assets.outputs.disk_id }}

steps:
- name: Checkout code
if: contains(github.event.pull_request.labels.*.name, 'release')
uses: actions/checkout@v4
with:
fetch-depth: 0
fetch-tags: true

- name: Extract tag from PR branch (release PR)
if: contains(github.event.pull_request.labels.*.name, 'release')
id: get_tag
uses: actions/github-script@v7
with:
script: |
const branch = context.payload.pull_request.head.ref;
const m = branch.match(/^release-(\d+\.\d+\.\d+(?:[-\w\.]+)?)$/);
if (!m) {
core.setFailed(`❌ Branch '${branch}' does not match 'release-X.Y.Z[-suffix]'`);
return;
}
core.setOutput('tag', `v${m[1]}`);

- name: Find draft release & asset IDs (release PR)
if: contains(github.event.pull_request.labels.*.name, 'release')
id: fetch_assets
uses: actions/github-script@v7
with:
github-token: ${{ secrets.GH_PAT }}
script: |
const tag = '${{ steps.get_tag.outputs.tag }}';
const releases = await github.rest.repos.listReleases({
owner: context.repo.owner,
repo: context.repo.repo,
per_page: 100
});
const draft = releases.data.find(r => r.tag_name === tag && r.draft);
if (!draft) {
core.setFailed(`Draft release '${tag}' not found`);
return;
}
const find = (n) => draft.assets.find(a => a.name === n)?.id;
const installerId = find('cozystack-installer.yaml');
const diskId = find('nocloud-amd64.raw.xz');
if (!installerId || !diskId) {
core.setFailed('Required assets missing in draft release');
return;
}
core.setOutput('installer_id', installerId);
core.setOutput('disk_id', diskId);


prepare_env:
name: "Prepare environment"
runs-on: [self-hosted]
permissions:
contents: read
packages: read
needs: ["build", "resolve_assets"]
if: ${{ always() && (needs.build.result == 'success' || needs.resolve_assets.result == 'success') }}

steps:
# ▸ Checkout and prepare the codebase
- name: Checkout code
uses: actions/checkout@v4

# ▸ Regular PR path – download artefacts produced by the *build* job
- name: "Download Talos image (regular PR)"
if: "!contains(github.event.pull_request.labels.*.name, 'release')"
uses: actions/download-artifact@v4
with:
name: talos-image
path: _out/assets

- name: Download PR patch
if: "!contains(github.event.pull_request.labels.*.name, 'release')"
uses: actions/download-artifact@v4
with:
name: pr-patch
path: _out/assets

- name: Apply patch
if: "!contains(github.event.pull_request.labels.*.name, 'release')"
run: |
git apply _out/assets/pr.patch

# ▸ Release PR path – fetch artefacts from the corresponding draft release
- name: Download assets from draft release (release PR)
if: contains(github.event.pull_request.labels.*.name, 'release')
run: |
mkdir -p _out/assets
curl -sSL -H "Authorization: token ${GH_PAT}" -H "Accept: application/octet-stream" \
-o _out/assets/nocloud-amd64.raw.xz \
"https://api.github.com/repos/${GITHUB_REPOSITORY}/releases/assets/${{ needs.resolve_assets.outputs.disk_id }}"
env:
GH_PAT: ${{ secrets.GH_PAT }}

- name: Set sandbox ID
run: echo "SANDBOX_NAME=cozy-e2e-sandbox-$(echo "${GITHUB_REPOSITORY}:${GITHUB_WORKFLOW}:${GITHUB_REF}" | sha256sum | cut -c1-10)" >> $GITHUB_ENV

# ▸ Start actual job steps
- name: Prepare workspace
run: |
rm -rf /tmp/$SANDBOX_NAME
cp -r ${{ github.workspace }} /tmp/$SANDBOX_NAME

- name: Prepare environment
run: |
cd /tmp/$SANDBOX_NAME
attempt=0
until make SANDBOX_NAME=$SANDBOX_NAME prepare-env; do
attempt=$((attempt + 1))
if [ $attempt -ge 3 ]; then
echo "❌ Attempt $attempt failed, exiting..."
exit 1
fi
echo "❌ Attempt $attempt failed, retrying..."
done
echo "✅ The task completed successfully after $attempt attempts"

install_cozystack:
name: "Install Cozystack"
runs-on: [self-hosted]
permissions:
contents: read
packages: read
needs: ["prepare_env", "resolve_assets"]
if: ${{ always() && needs.prepare_env.result == 'success' }}

steps:
- name: Prepare _out/assets directory
run: mkdir -p _out/assets

# ▸ Regular PR path – download artefacts produced by the *build* job
- name: "Download installer (regular PR)"
if: "!contains(github.event.pull_request.labels.*.name, 'release')"
uses: actions/download-artifact@v4
with:
name: cozystack-installer
path: _out/assets

# ▸ Release PR path – fetch artefacts from the corresponding draft release
- name: Download assets from draft release (release PR)
if: contains(github.event.pull_request.labels.*.name, 'release')
run: |
mkdir -p _out/assets
curl -sSL -H "Authorization: token ${GH_PAT}" -H "Accept: application/octet-stream" \
-o _out/assets/cozystack-installer.yaml \
"https://api.github.com/repos/${GITHUB_REPOSITORY}/releases/assets/${{ needs.resolve_assets.outputs.installer_id }}"
env:
GH_PAT: ${{ secrets.GH_PAT }}

# ▸ Start actual job steps
- name: Set sandbox ID
run: echo "SANDBOX_NAME=cozy-e2e-sandbox-$(echo "${GITHUB_REPOSITORY}:${GITHUB_WORKFLOW}:${GITHUB_REF}" | sha256sum | cut -c1-10)" >> $GITHUB_ENV

- name: Sync _out/assets directory
run: |
mkdir -p /tmp/$SANDBOX_NAME/_out/assets
mv _out/assets/* /tmp/$SANDBOX_NAME/_out/assets/

- name: Install Cozystack into sandbox
run: |
cd /tmp/$SANDBOX_NAME
attempt=0
until make -C packages/core/testing SANDBOX_NAME=$SANDBOX_NAME install-cozystack; do
attempt=$((attempt + 1))
if [ $attempt -ge 3 ]; then
echo "❌ Attempt $attempt failed, exiting..."
exit 1
fi
echo "❌ Attempt $attempt failed, retrying..."
done
echo "✅ The task completed successfully after $attempt attempts."

detect_test_matrix:
name: "Detect e2e test matrix"
runs-on: ubuntu-latest
outputs:
matrix: ${{ steps.set.outputs.matrix }}

steps:
- uses: actions/checkout@v4
- id: set
run: |
apps=$(find hack/e2e-apps -maxdepth 1 -mindepth 1 -name '*.bats' | \
awk -F/ '{sub(/\..+/, "", $NF); print $NF}' | jq -R . | jq -cs .)
echo "matrix={\"app\":$apps}" >> "$GITHUB_OUTPUT"

test_apps:
strategy:
matrix: ${{ fromJson(needs.detect_test_matrix.outputs.matrix) }}
name: Test ${{ matrix.app }}
runs-on: [self-hosted]
needs: [install_cozystack,detect_test_matrix]
if: ${{ always() && (needs.install_cozystack.result == 'success' && needs.detect_test_matrix.result == 'success') }}

steps:
- name: Set sandbox ID
run: echo "SANDBOX_NAME=cozy-e2e-sandbox-$(echo "${GITHUB_REPOSITORY}:${GITHUB_WORKFLOW}:${GITHUB_REF}" | sha256sum | cut -c1-10)" >> $GITHUB_ENV

- name: E2E Apps
run: |
cd /tmp/$SANDBOX_NAME
attempt=0
until make -C packages/core/testing SANDBOX_NAME=$SANDBOX_NAME test-apps-${{ matrix.app }}; do
attempt=$((attempt + 1))
if [ $attempt -ge 3 ]; then
echo "❌ Attempt $attempt failed, exiting..."
exit 1
fi
echo "❌ Attempt $attempt failed, retrying..."
done
echo "✅ The task completed successfully after $attempt attempts"

collect_debug_information:
name: Collect debug information
runs-on: [self-hosted]
needs: [test_apps]
if: ${{ always() }}
steps:
- name: Checkout code
uses: actions/checkout@v4

- name: Set sandbox ID
run: echo "SANDBOX_NAME=cozy-e2e-sandbox-$(echo "${GITHUB_REPOSITORY}:${GITHUB_WORKFLOW}:${GITHUB_REF}" | sha256sum | cut -c1-10)" >> $GITHUB_ENV

- name: Collect report
run: |
cd /tmp/$SANDBOX_NAME
make -C packages/core/testing SANDBOX_NAME=$SANDBOX_NAME collect-report

- name: Upload cozyreport.tgz
uses: actions/upload-artifact@v4
with:
name: cozyreport
path: /tmp/${{ env.SANDBOX_NAME }}/_out/cozyreport.tgz

- name: Collect images list
run: |
cd /tmp/$SANDBOX_NAME
make -C packages/core/testing SANDBOX_NAME=$SANDBOX_NAME collect-images

- name: Upload image list
uses: actions/upload-artifact@v4
with:
name: image-list
path: /tmp/${{ env.SANDBOX_NAME }}/_out/images.txt

cleanup:
name: Tear down environment
runs-on: [self-hosted]
needs: [collect_debug_information]
if: ${{ always() && needs.test_apps.result == 'success' }}

steps:
- name: Checkout code
uses: actions/checkout@v4
with:
fetch-depth: 0
fetch-tags: true

- name: Set sandbox ID
run: echo "SANDBOX_NAME=cozy-e2e-sandbox-$(echo "${GITHUB_REPOSITORY}:${GITHUB_WORKFLOW}:${GITHUB_REF}" | sha256sum | cut -c1-10)" >> $GITHUB_ENV

- name: Tear down sandbox
run: make -C packages/core/testing SANDBOX_NAME=$SANDBOX_NAME delete

- name: Remove workspace
run: rm -rf /tmp/$SANDBOX_NAME


- name: Test
run: make test
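Several steps in pull-requests.yaml are small pure transformations that are easier to check outside CI. The following Node.js sketch re-implements four of them for local experimentation; all function names are hypothetical and this is not code from the repository. `sandboxName` reproduces the `sha256sum | cut -c1-10` pipeline, including the trailing newline that `echo` adds to the hashed string. `buildMatrixOutput` assumes it is handed the paths `find` would print and keeps their order. `tagFromReleaseBranch` applies the regex from the "Extract tag from PR branch" step, and `findDraftAssetIds` repeats the draft-release lookup.

```javascript
const crypto = require('node:crypto');
const path = require('node:path');

// Mirrors: echo "${GITHUB_REPOSITORY}:${GITHUB_WORKFLOW}:${GITHUB_REF}" | sha256sum | cut -c1-10
// `echo` appends a newline, so the hashed input ends with "\n".
function sandboxName(repository, workflow, ref) {
  const digest = crypto
    .createHash('sha256')
    .update(`${repository}:${workflow}:${ref}\n`)
    .digest('hex');
  return `cozy-e2e-sandbox-${digest.slice(0, 10)}`;
}

// Mirrors detect_test_matrix: keep *.bats files, drop everything from the
// first "." of the basename, and emit the compact JSON line for $GITHUB_OUTPUT.
function buildMatrixOutput(paths) {
  const apps = paths
    .filter((p) => p.endsWith('.bats'))
    .map((p) => path.basename(p).replace(/\..+/, ''));
  return `matrix=${JSON.stringify({ app: apps })}`;
}

// Mirrors "Extract tag from PR branch": release-X.Y.Z[-suffix] -> vX.Y.Z[-suffix].
function tagFromReleaseBranch(branch) {
  const m = branch.match(/^release-(\d+\.\d+\.\d+(?:[-\w.]+)?)$/);
  return m ? `v${m[1]}` : null;
}

// Mirrors "Find draft release & asset IDs": locate the draft release for the
// tag and return the IDs of the two assets the e2e jobs download.
function findDraftAssetIds(releases, tag) {
  const draft = releases.find((r) => r.tag_name === tag && r.draft);
  if (!draft) return null;
  const idOf = (name) => draft.assets.find((a) => a.name === name)?.id;
  const installerId = idOf('cozystack-installer.yaml');
  const diskId = idOf('nocloud-amd64.raw.xz');
  return installerId && diskId ? { installerId, diskId } : null;
}
```

For instance, `buildMatrixOutput(['hack/e2e-apps/postgres.bats', 'hack/e2e-apps/kubernetes.bats'])` yields `matrix={"app":["postgres","kubernetes"]}`, and `tagFromReleaseBranch('release-0.31')` is `null` because the branch pattern requires three version components. Like the workflow, `findDraftAssetIds` only sees the list it is given; in the workflow that list is a single `listReleases` page with `per_page: 100`, so a draft outside that page would not be found.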

.github/workflows/tags.yaml (39 changed lines)

@@ -3,10 +3,9 @@ name: Versioned Tag
on:
push:
tags:
- 'v*.*.*' # vX.Y.Z
- 'v*.*.*-rc.*' # vX.Y.Z-rc.N
- 'v*.*.*-beta.*' # vX.Y.Z-beta.N
- 'v*.*.*-alpha.*' # vX.Y.Z-alpha.N
- 'v*.*.*' # vX.Y.Z
- 'v*.*.*-rc.*' # vX.Y.Z-rc.N


concurrency:
group: tags-${{ github.workflow }}-${{ github.ref }}
@@ -43,7 +42,7 @@ jobs:
if: steps.check_release.outputs.skip == 'true'
run: echo "Release already exists, skipping workflow."

# Parse tag meta-data (rc?, maintenance line, etc.)
# Parse tag meta‑data (rc?, maintenance line, etc.)
- name: Parse tag
if: steps.check_release.outputs.skip == 'false'
id: tag
@@ -51,12 +50,12 @@ jobs:
with:
script: |
const ref = context.ref.replace('refs/tags/', ''); // e.g. v0.31.5-rc.1
const m = ref.match(/^v(\d+\.\d+\.\d+)(-(?:alpha|beta|rc)\.\d+)?$/); // ['0.31.5', '-rc.1' | '-beta.1' | …]
const m = ref.match(/^v(\d+\.\d+\.\d+)(-rc\.\d+)?$/); // ['0.31.5', '-rc.1']
if (!m) {
core.setFailed(`❌ tag '${ref}' must match 'vX.Y.Z' or 'vX.Y.Z-(alpha|beta|rc).N'`);
core.setFailed(`❌ tag '${ref}' must match 'vX.Y.Z' or 'vX.Y.Z-rc.N'`);
return;
}
const version = m[1] + (m[2] ?? ''); // 0.31.5-rc.1
const version = m[1] + (m[2] ?? ''); // 0.31.5‑rc.1
const isRc = Boolean(m[2]);
const [maj, min] = m[1].split('.');
core.setOutput('tag', ref); // v0.31.5-rc.1
@@ -64,7 +63,7 @@ jobs:
core.setOutput('is_rc', isRc); // true
core.setOutput('line', `${maj}.${min}`); // 0.31

# Detect base branch (main or release-X.Y) the tag was pushed from
# Detect base branch (main or release‑X.Y) the tag was pushed from
- name: Get base branch
if: steps.check_release.outputs.skip == 'false'
id: get_base
@@ -99,26 +98,18 @@ jobs:
username: ${{ github.repository_owner }}
password: ${{ secrets.GITHUB_TOKEN }}
registry: ghcr.io
env:
DOCKER_CONFIG: ${{ runner.temp }}/.docker

# Build project artifacts
- name: Build
if: steps.check_release.outputs.skip == 'false'
run: make build
env:
DOCKER_CONFIG: ${{ runner.temp }}/.docker

# Commit built artifacts
- name: Commit release artifacts
if: steps.check_release.outputs.skip == 'false'
env:
GH_PAT: ${{ secrets.GH_PAT }}
run: |
git config user.name "cozystack-bot"
git config user.email "217169706+cozystack-bot@users.noreply.github.com"
git remote set-url origin https://cozystack-bot:${GH_PAT}@github.com/${GITHUB_REPOSITORY}
git config --unset-all http.https://github.com/.extraheader || true
git config user.name "github-actions"
git config user.email "github-actions@github.com"
git add .
git commit -m "Prepare release ${GITHUB_REF#refs/tags/}" -s || echo "No changes to commit"
git push origin HEAD || true
@@ -177,7 +168,7 @@ jobs:
});
console.log(`Draft release created for ${tag}`);
} else {
console.log(`Re-using existing release ${tag}`);
console.log(`Re‑using existing release ${tag}`);
}
core.setOutput('upload_url', rel.upload_url);

@@ -190,15 +181,10 @@ jobs:
env:
GH_TOKEN: ${{ secrets.GITHUB_TOKEN }}

# Create release-X.Y.Z branch and push (force-update)
# Create release‑X.Y.Z branch and push (force‑update)
- name: Create release branch
if: steps.check_release.outputs.skip == 'false'
env:
GH_PAT: ${{ secrets.GH_PAT }}
run: |
git config user.name "cozystack-bot"
git config user.email "217169706+cozystack-bot@users.noreply.github.com"
git remote set-url origin https://cozystack-bot:${GH_PAT}@github.com/${GITHUB_REPOSITORY}
BRANCH="release-${GITHUB_REF#refs/tags/v}"
git branch -f "$BRANCH"
git push -f origin "$BRANCH"
@@ -208,7 +194,6 @@ jobs:
if: steps.check_release.outputs.skip == 'false'
uses: actions/github-script@v7
with:
github-token: ${{ secrets.GH_PAT }}
script: |
const version = context.ref.replace('refs/tags/v', '');
const base = '${{ steps.get_base.outputs.branch }}';
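The "Parse tag" step in tags.yaml appears in the diff with two competing tag grammars: one accepts `-alpha.N`, `-beta.N` and `-rc.N` suffixes, the other only `-rc.N`. The minimal sketch below reproduces the values that step computes so the two patterns can be compared side by side. The `parseTag` function and the two constant names are hypothetical; returning `null` stands in for the step's `core.setFailed(...)` branch.

```javascript
// The two tag grammars that appear side by side in the diff.
const TAG_WITH_PRERELEASES = /^v(\d+\.\d+\.\d+)(-(?:alpha|beta|rc)\.\d+)?$/;
const TAG_RC_ONLY = /^v(\d+\.\d+\.\d+)(-rc\.\d+)?$/;

// Hypothetical pure version of the "Parse tag" step: returns the values the
// step computes, or null where the step would call core.setFailed.
function parseTag(gitRef, pattern = TAG_WITH_PRERELEASES) {
  const tag = gitRef.replace('refs/tags/', ''); // e.g. v0.31.5-rc.1
  const m = tag.match(pattern);
  if (!m) return null;
  const [maj, min] = m[1].split('.');
  return {
    tag,                          // v0.31.5-rc.1
    version: m[1] + (m[2] ?? ''), // 0.31.5-rc.1
    isRc: Boolean(m[2]),          // true whenever any suffix was captured
    line: `${maj}.${min}`,        // 0.31
  };
}
```

With the wider grammar, `isRc` is also true for alpha and beta tags, since the step only checks whether a suffix was captured. Both grammars are stricter than the `release-X.Y` regex in pull-requests-release.yaml, whose example comment mentions `v0.1.3-rc3`, a tag neither grammar here accepts.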

@@ -11,14 +11,14 @@ repos:
- id: run-make-generate
name: Run 'make generate' in all app directories
entry: |
flock -x .git/pre-commit.lock sh -c '
for dir in ./packages/apps/*/ ./packages/extra/*/ ./packages/system/cozystack-api/; do
/bin/bash -c '
for dir in ./packages/apps/*/; do
if [ -d "$dir" ]; then
echo "Running make generate in $dir"
make generate -C "$dir" || exit $?
(cd "$dir" && make generate)
fi
done
git diff --color=always | cat
'
language: system
language: script
files: ^.*$

Makefile (9 changed lines)

@@ -9,6 +9,7 @@ build-deps:

build: build-deps
make -C packages/apps/http-cache image
make -C packages/apps/postgres image
make -C packages/apps/mysql image
make -C packages/apps/clickhouse image
make -C packages/apps/kubernetes image
@@ -28,8 +29,10 @@ build: build-deps

repos:
rm -rf _out
make -C packages/library check-version-map
make -C packages/apps check-version-map
make -C packages/extra check-version-map
make -C packages/library repo
make -C packages/system repo
make -C packages/apps repo
make -C packages/extra repo
@@ -42,16 +45,12 @@ manifests:
(cd packages/core/installer/; helm template -n cozy-installer installer .) > _out/assets/cozystack-installer.yaml

assets:
make -C packages/core/installer assets
make -C packages/core/installer/ assets

test:
make -C packages/core/testing apply
make -C packages/core/testing test

prepare-env:
make -C packages/core/testing apply
make -C packages/core/testing prepare-cluster

generate:
hack/update-codegen.sh

README.md (12 changed lines)

@@ -12,15 +12,11 @@

**Cozystack** is a free PaaS platform and framework for building clouds.

Cozystack is a [CNCF Sandbox Level Project](https://www.cncf.io/sandbox-projects/) that was originally built and sponsored by [Ænix](https://aenix.io/).

With Cozystack, you can transform a bunch of servers into an intelligent system with a simple REST API for spawning Kubernetes clusters,
Database-as-a-Service, virtual machines, load balancers, HTTP caching services, and other services with ease.

Use Cozystack to build your own cloud or provide a cost-effective development environment.

## Use-Cases

* [**Using Cozystack to build a public cloud**](https://cozystack.io/docs/guides/use-cases/public-cloud/)
@@ -32,6 +28,9 @@ You can use Cozystack as a platform to build a private cloud powered by Infrastr
* [**Using Cozystack as a Kubernetes distribution**](https://cozystack.io/docs/guides/use-cases/kubernetes-distribution/)
You can use Cozystack as a Kubernetes distribution for Bare Metal

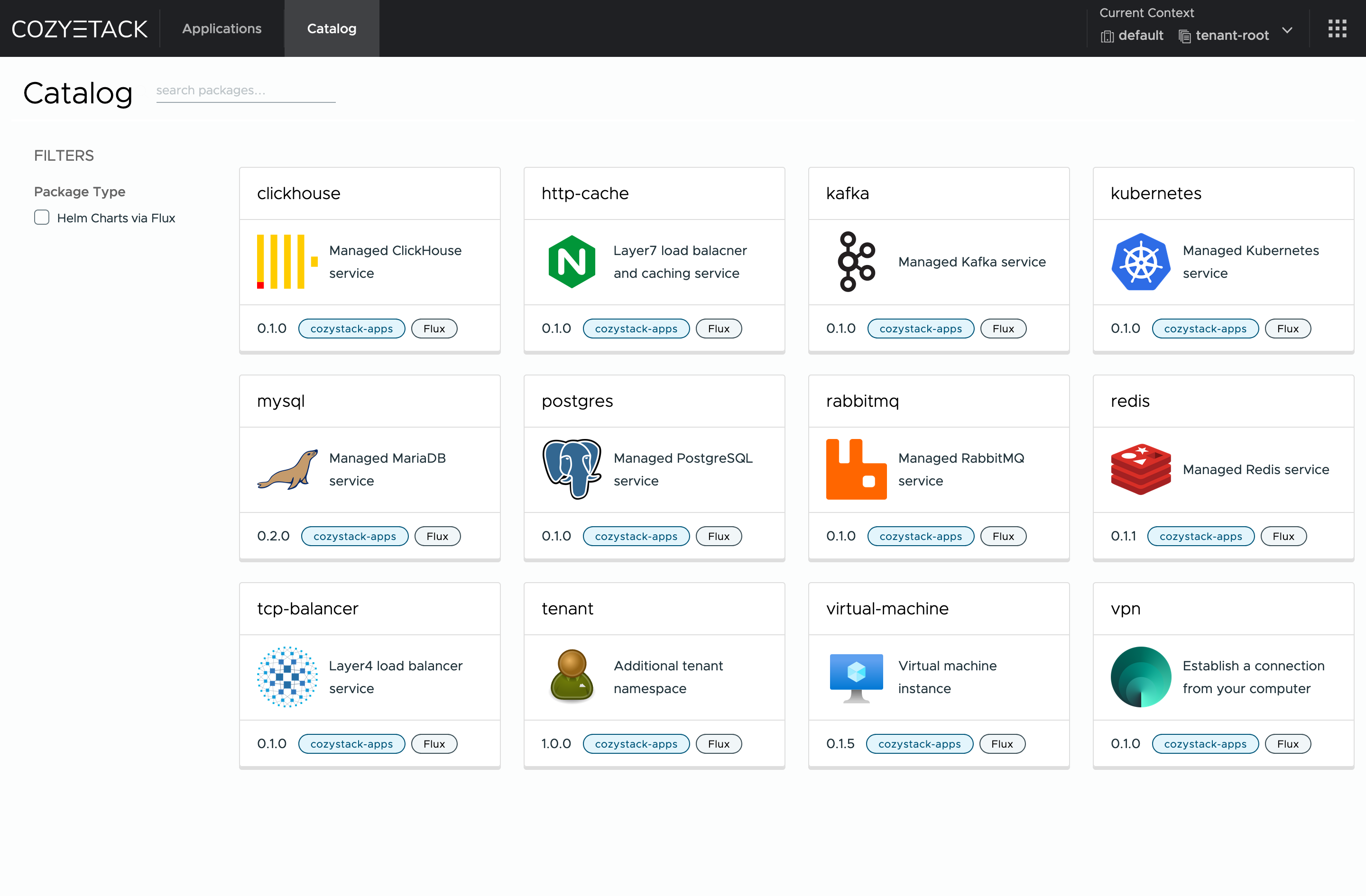

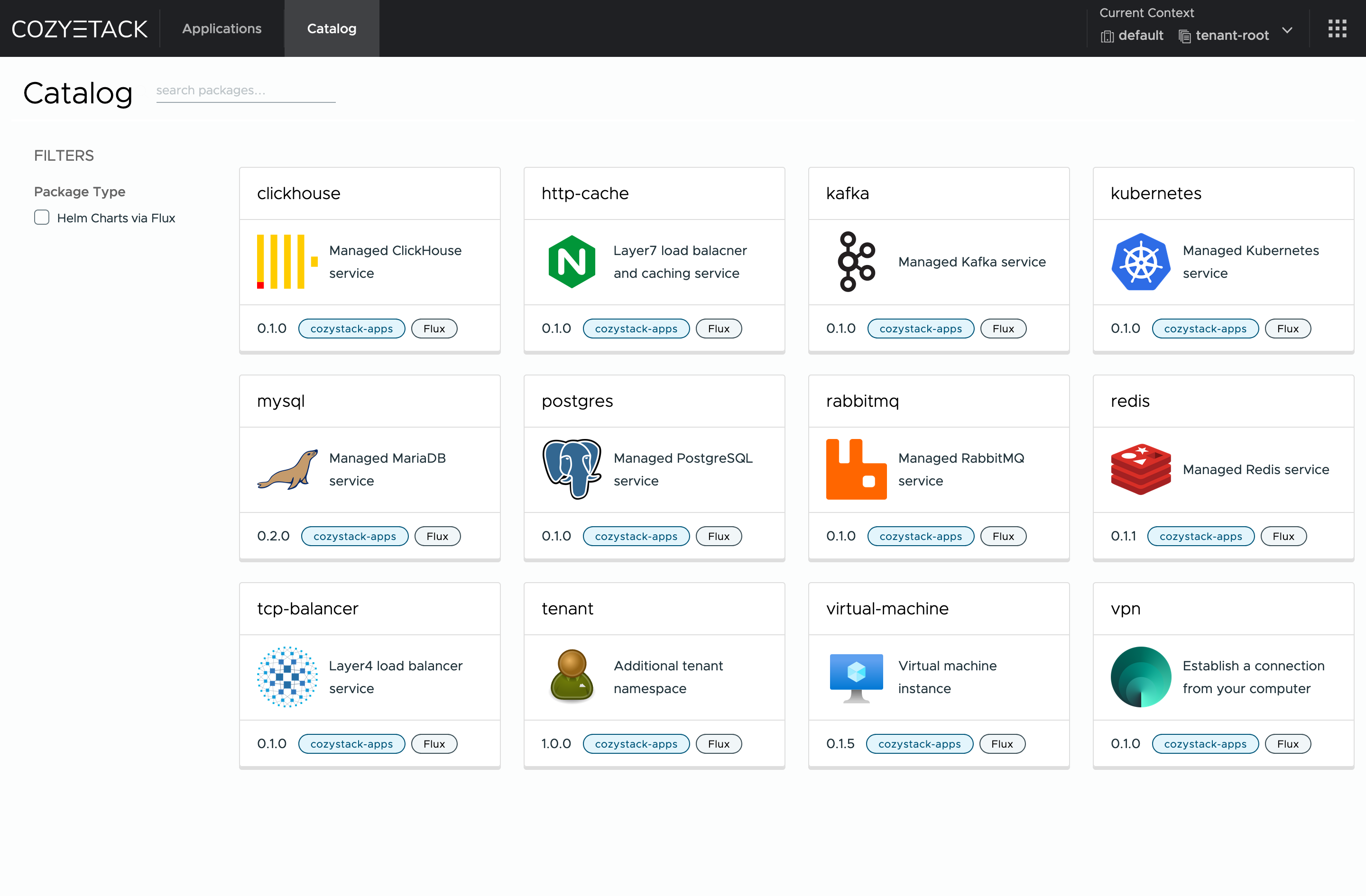

## Screenshot

## Documentation

@@ -60,10 +59,7 @@ Commits are used to generate the changelog, and their author will be referenced

If you have **Feature Requests** please use the [Discussion's Feature Request section](https://github.com/cozystack/cozystack/discussions/categories/feature-requests).

## Community

You are welcome to join our [Telegram group](https://t.me/cozystack) and come to our weekly community meetings.
Add them to your [Google Calendar](https://calendar.google.com/calendar?cid=ZTQzZDIxZTVjOWI0NWE5NWYyOGM1ZDY0OWMyY2IxZTFmNDMzZTJlNjUzYjU2ZGJiZGE3NGNhMzA2ZjBkMGY2OEBncm91cC5jYWxlbmRhci5nb29nbGUuY29t) or [iCal](https://calendar.google.com/calendar/ical/e43d21e5c9b45a95f28c5d649c2cb1e1f433e2e653b56dbbda74ca306f0d0f68%40group.calendar.google.com/public/basic.ics) for convenience.
You are welcome to join our weekly community meetings (just add this events to your [Google Calendar](https://calendar.google.com/calendar?cid=ZTQzZDIxZTVjOWI0NWE5NWYyOGM1ZDY0OWMyY2IxZTFmNDMzZTJlNjUzYjU2ZGJiZGE3NGNhMzA2ZjBkMGY2OEBncm91cC5jYWxlbmRhci5nb29nbGUuY29t) or [iCal](https://calendar.google.com/calendar/ical/e43d21e5c9b45a95f28c5d649c2cb1e1f433e2e653b56dbbda74ca306f0d0f68%40group.calendar.google.com/public/basic.ics)) or [Telegram group](https://t.me/cozystack).

## License

@@ -194,15 +194,7 @@ func main() {

|

||||

Client: mgr.GetClient(),

|

||||

Scheme: mgr.GetScheme(),

|

||||

}).SetupWithManager(mgr); err != nil {

|

||||

setupLog.Error(err, "unable to create controller", "controller", "TenantHelmReconciler")

|

||||

os.Exit(1)

|

||||

}

|

||||

|

||||

if err = (&controller.CozystackConfigReconciler{

|

||||

Client: mgr.GetClient(),

|

||||

Scheme: mgr.GetScheme(),

|

||||

}).SetupWithManager(mgr); err != nil {

|

||||

setupLog.Error(err, "unable to create controller", "controller", "CozystackConfigReconciler")

|

||||

setupLog.Error(err, "unable to create controller", "controller", "Workload")

|

||||

os.Exit(1)

|

||||

}

|

||||

|

||||

|

||||

@@ -1,11 +0,0 @@

|

||||

## Major Features and Improvements

|

||||

|

||||

## Security

|

||||

|

||||

## Fixes

|

||||

|

||||

## Dependencies

|

||||

|

||||

## Documentation

|

||||

|

||||

## Development, Testing, and CI/CD

|

||||

@@ -1,129 +1,36 @@

Cozystack v0.31.0 is a significant release that brings new features, key fixes, and updates to underlying components.
This version enhances GPU support, improves many components of Cozystack, and introduces a more robust release process to improve stability.
Below, we'll go over the highlights in each area for current users, developers, and our community.
This is the second release candidate for the upcoming Cozystack v0.31.0 release.
The release notes show changes accumulated since the release of Cozystack v0.30.0.

## Major Features and Improvements
Cozystack 0.31.0 further advances GPU support, monitoring, and all-around convenience features.

### GPU support for tenant Kubernetes clusters
## New Features and Changes

Cozystack now integrates NVIDIA GPU Operator support for tenant Kubernetes clusters.
This enables platform users to run GPU-powered AI/ML applications in their own clusters.
To enable the GPU Operator, set `addons.gpuOperator.enabled: true` in the cluster configuration.
(@kvaps in https://github.com/cozystack/cozystack/pull/834)

Check out Andrei Kvapil's CNCF webinar [showcasing the GPU support by running Stable Diffusion in Cozystack](https://www.youtube.com/watch?v=S__h_QaoYEk).

<!--
* [kubernetes] Introduce GPU support for tenant Kubernetes clusters. (@kvaps in https://github.com/cozystack/cozystack/pull/834)
-->

### Cilium Improvements

Cozystack’s Cilium integration received two significant enhancements.
First, Gateway API support in Cilium is now enabled, allowing advanced L4/L7 routing via the Kubernetes Gateway API.
We thank Zdenek Janda @zdenekjanda for contributing this feature in https://github.com/cozystack/cozystack/pull/924.

Second, Cozystack now permits custom user-provided parameters in the tenant cluster’s Cilium configuration.
(@lllamnyp in https://github.com/cozystack/cozystack/pull/917)

<!--
* [cilium] Enable Cilium Gateway API. (@zdenekjanda in https://github.com/cozystack/cozystack/pull/924)
* [cilium] Enable user-added parameters in a tenant cluster Cilium. (@lllamnyp in https://github.com/cozystack/cozystack/pull/917)
-->

### Cross-Architecture Builds (ARM Support Beta)

Cozystack's build system was refactored to support multi-architecture binaries and container images.
This paves the way to running Cozystack on ARM64 servers.
Changes include Makefile improvements (https://github.com/cozystack/cozystack/pull/907)
and multi-arch Docker image builds (https://github.com/cozystack/cozystack/pull/932 and https://github.com/cozystack/cozystack/pull/970).

We thank Nikita Bykov @nbykov0 for his ongoing work on ARM support!

<!--
* Introduce support for cross-architecture builds and Cozystack on ARM:
  * [build] Refactor Makefiles introducing build variables. (@nbykov0 in https://github.com/cozystack/cozystack/pull/907)
  * [build] Add support for multi-architecture and cross-platform image builds. (@nbykov0 in https://github.com/cozystack/cozystack/pull/932 and https://github.com/cozystack/cozystack/pull/970)
-->

### VerticalPodAutoscaler (VPA) Expansion

The VerticalPodAutoscaler is now enabled for more Cozystack components to automate resource tuning.
Specifically, VPA was added for tenant Kubernetes control planes (@klinch0 in https://github.com/cozystack/cozystack/pull/806),
the Cozystack Dashboard (https://github.com/cozystack/cozystack/pull/828),
and the Cozystack etcd-operator (https://github.com/cozystack/cozystack/pull/850).
All Cozystack components that have VPA enabled can automatically adjust their CPU and memory requests based on observed usage, improving platform and application stability.

<!--
* Add VerticalPodAutoscaler to a few more components:
  * [kubernetes] Kubernetes clusters in user tenants. (@klinch0 in https://github.com/cozystack/cozystack/pull/806)
  * [platform] Cozystack dashboard. (@klinch0 in https://github.com/cozystack/cozystack/pull/828)
  * [platform] Cozystack etcd-operator (@klinch0 in https://github.com/cozystack/cozystack/pull/850)
-->

### Tenant HelmRelease Reconcile Controller

A new controller was introduced to monitor and synchronize HelmRelease resources across tenants.
This controller propagates configuration changes to tenant workloads and ensures that any HelmRelease defined in a tenant
stays in sync with platform updates.
It improves the reliability of deploying managed applications in Cozystack.
(@klinch0 in https://github.com/cozystack/cozystack/pull/870)

<!--
* Introduce support for cross-architecture builds and Cozystack on ARM:
  * [build] Refactor Makefiles introducing build variables. (@nbykov0 in https://github.com/cozystack/cozystack/pull/907)
  * [build] Add support for multi-architecture and cross-platform image builds. (@nbykov0 in https://github.com/cozystack/cozystack/pull/932)
* [platform] Introduce a new controller to synchronize tenant HelmReleases and propagate configuration changes. (@klinch0 in https://github.com/cozystack/cozystack/pull/870)
-->

### Virtual Machine Improvements

**Configurable KubeVirt CPU Overcommit**: The CPU allocation ratio in KubeVirt (how virtual CPUs are overcommitted relative to physical CPUs) is now configurable
via the `cpu-allocation-ratio` value in the Cozystack configmap.
This means Cozystack administrators can now tune CPU overcommitment for VMs to balance performance against density.
(@lllamnyp in https://github.com/cozystack/cozystack/pull/905)
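The arithmetic behind the allocation ratio can be sketched as follows, assuming KubeVirt's usual semantics where each vCPU requests `1/ratio` of a physical CPU (so a ratio of 10 makes each vCPU request 100m). The function name `cpuRequestMillicores` is hypothetical; the actual request is computed by KubeVirt, not by code like this.

```go
package main

import (
	"errors"
	"fmt"
)

// cpuRequestMillicores returns the CPU request, in millicores, that a VM with
// the given number of vCPUs would place on its node, assuming each vCPU
// requests 1/ratio of a physical CPU. Integer division truncates.
func cpuRequestMillicores(vcpus, ratio int) (int, error) {
	if vcpus < 0 || ratio <= 0 {
		return 0, errors.New("vcpus must be >= 0 and ratio must be > 0")
	}
	return vcpus * 1000 / ratio, nil
}

func main() {
	for _, ratio := range []int{1, 4, 10} {
		m, err := cpuRequestMillicores(4, ratio)
		if err != nil {
			panic(err)
		}
		fmt.Printf("ratio %2d: a 4-vCPU VM requests %dm CPU\n", ratio, m)
	}
}
```

A lower ratio reserves more physical CPU per vCPU (better performance, lower density); a higher ratio packs more VMs onto each node.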

**KubeVirt VM Export**: Cozystack now allows exporting KubeVirt virtual machines.
This feature, enabled via KubeVirt's `VirtualMachineExport` capability, lets users snapshot or back up VM images.
(@kvaps in https://github.com/cozystack/cozystack/pull/808)

**Support for various storage classes in Virtual Machines**: The `virtual-machine` application (since version 0.9.2) lets you pick any StorageClass for a VM's
system disk instead of relying on a hard-coded PVC.
Refer to the `systemDisk.storage` and `systemDisk.storageClass` values in the [application's configuration reference](https://cozystack.io/docs/reference/applications/virtual-machine/#common-parameters).
(@kvaps in https://github.com/cozystack/cozystack/pull/974)

<!--
* [platform] Introduce options `expose-services`, `expose-ingress` and `expose-external-ips` to the ingress service. (@kvaps in https://github.com/cozystack/cozystack/pull/929)
* [kubevirt] Enable exporting VMs. (@kvaps in https://github.com/cozystack/cozystack/pull/808)
* [kubevirt] Make KubeVirt's CPU allocation ratio configurable. (@lllamnyp in https://github.com/cozystack/cozystack/pull/905)
* [virtual-machine] Add support for various storages. (@kvaps in https://github.com/cozystack/cozystack/pull/974)
-->

### Other Features and Improvements

* [platform] Introduce options `expose-services`, `expose-ingress`, and `expose-external-ips` to the ingress service. (@kvaps in https://github.com/cozystack/cozystack/pull/929)
* [cozystack-controller] Record the IP address pool and storage class in Workload objects. (@lllamnyp in https://github.com/cozystack/cozystack/pull/831)
* [apps] Remove user-facing config of limits and requests. (@lllamnyp in https://github.com/cozystack/cozystack/pull/935)

## New Release Lifecycle

The Cozystack release lifecycle is changing to give customers running Cozystack in mission-critical environments more stable and predictable releases.

* **Gradual Release with Alpha, Beta, and Release Candidates**: Cozystack will now publish pre-release versions (alpha, beta, release candidates) before a stable release.
  Starting with v0.31.0, the team made three release candidates before releasing version v0.31.0.
  This allows more testing and feedback before marking a release as stable.

* **Prolonged Release Support with Patch Versions**: After the initial `vX.Y.0` release, a long-lived branch `release-X.Y` will be created to backport fixes.
  For example, with 0.31.0’s release, a `release-0.31` branch will track patch fixes (`0.31.x`).
  This strategy lets Cozystack users receive timely patch releases and updates with minimal risk.

To implement these changes, we have rebuilt our CI/CD workflows and introduced automation that enables automatic backports.
You can read more about how it's implemented in the Development, Testing, and CI/CD section below.

For more information, read the [Cozystack Release Workflow](https://github.com/cozystack/cozystack/blob/main/docs/release.md) documentation.
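The branch naming rule above (`vX.Y.0` opens `release-X.Y`, which then collects `X.Y.x` patches) can be sketched in Go. This is not Cozystack's CI code; the tag format `vX.Y.Z[-pre]` is inferred from versions mentioned in these notes (such as `v0.31.0-rc.2`), and the function names are hypothetical.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parseTag splits a tag such as "v0.31.0-rc.2" into [major minor patch] and
// an optional pre-release suffix ("rc.2").
func parseTag(tag string) (nums [3]int, pre string, err error) {
	if !strings.HasPrefix(tag, "v") {
		return nums, "", fmt.Errorf("tag %q must start with v", tag)
	}
	core := strings.TrimPrefix(tag, "v")
	if i := strings.IndexByte(core, '-'); i >= 0 {
		core, pre = core[:i], core[i+1:]
	}
	parts := strings.Split(core, ".")
	if len(parts) != 3 {
		return nums, "", fmt.Errorf("tag %q is not vX.Y.Z", tag)
	}
	for i, p := range parts {
		n, convErr := strconv.Atoi(p)
		if convErr != nil || n < 0 {
			return nums, "", fmt.Errorf("tag %q has a bad number %q", tag, p)
		}
		nums[i] = n
	}
	return nums, pre, nil
}

// releaseBranch maps any vX.Y.Z[-pre] tag to its release-X.Y branch name.
func releaseBranch(tag string) (string, error) {
	nums, _, err := parseTag(tag)
	if err != nil {
		return "", err
	}
	return fmt.Sprintf("release-%d.%d", nums[0], nums[1]), nil
}

// opensReleaseBranch reports whether tag is the initial stable vX.Y.0 release,
// the point at which the release-X.Y branch would be created.
func opensReleaseBranch(tag string) bool {
	nums, pre, err := parseTag(tag)
	return err == nil && pre == "" && nums[2] == 0
}

func main() {
	for _, t := range []string{"v0.31.0-rc.2", "v0.31.0", "v0.31.1"} {
		b, err := releaseBranch(t)
		if err != nil {
			panic(err)
		}
		fmt.Println(t, "->", b, "opens branch:", opensReleaseBranch(t))
	}
}
```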

* [cilium] Enable Cilium Gateway API. (@zdenekjanda in https://github.com/cozystack/cozystack/pull/924)
* [cilium] Enable user-added parameters in a tenant cluster Cilium. (@lllamnyp in https://github.com/cozystack/cozystack/pull/917)
* Update the Cozystack release policy to include long-lived release branches and start with release candidates. Update CI workflows and docs accordingly.
  * Use release branches `release-X.Y` for gathering and releasing fixes after initial `vX.Y.0` release. (@kvaps in https://github.com/cozystack/cozystack/pull/816)
  * Automatically create release branches after initial `vX.Y.0` release is published. (@kvaps in https://github.com/cozystack/cozystack/pull/886)
  * Introduce Release Candidate versions. Automate patch backporting by applying patches from pull requests labeled `[backport]` to the current release branch. (@kvaps in https://github.com/cozystack/cozystack/pull/841 and https://github.com/cozystack/cozystack/pull/901, @nickvolynkin in https://github.com/cozystack/cozystack/pull/890)
  * Commit changes in release pipelines under `github-actions <github-actions@github.com>`. (@kvaps in https://github.com/cozystack/cozystack/pull/823)
  * Describe the Cozystack release workflow. (@NickVolynkin in https://github.com/cozystack/cozystack/pull/817 and https://github.com/cozystack/cozystack/pull/897)

## Fixes

* [virtual-machine] Add GPU names to the virtual machine specifications. (@kvaps in https://github.com/cozystack/cozystack/pull/862)
* [virtual-machine] Count Workload resources for pods by requests, not limits. Other improvements to VM resource tracking. (@lllamnyp in https://github.com/cozystack/cozystack/pull/904)
* [virtual-machine] Set PortList method by default. (@kvaps in https://github.com/cozystack/cozystack/pull/996)
* [virtual-machine] Specify ports even for wholeIP mode. (@kvaps in https://github.com/cozystack/cozystack/pull/1000)
* [platform] Fix installing HelmReleases on initial setup. (@kvaps in https://github.com/cozystack/cozystack/pull/833)
* [platform] Migration scripts update the Kubernetes ConfigMap with the current stack version for improved version tracking. (@klinch0 in https://github.com/cozystack/cozystack/pull/840)
* [platform] Reduce requested CPU and RAM for the `kamaji` provider. (@klinch0 in https://github.com/cozystack/cozystack/pull/825)

@@ -135,8 +42,6 @@ For more information, read the [Cozystack Release Workflow](https://github.com/c

* [kubernetes] Fix merging `valuesOverride` for tenant clusters. (@kvaps in https://github.com/cozystack/cozystack/pull/879)
* [kubernetes] Fix `ubuntu-container-disk` tag. (@kvaps in https://github.com/cozystack/cozystack/pull/887)
* [kubernetes] Refactor Helm manifests for tenant Kubernetes clusters. (@kvaps in https://github.com/cozystack/cozystack/pull/866)
* [kubernetes] Fix the Ingress-NGINX dependency on cert-manager. (@kvaps in https://github.com/cozystack/cozystack/pull/976)
* [kubernetes, apps] Enable `topologySpreadConstraints` for tenant Kubernetes clusters and fix it for managed PostgreSQL. (@klinch0 in https://github.com/cozystack/cozystack/pull/995)
* [tenant] Fix an issue with accessing external IPs of a cluster from the cluster itself. (@kvaps in https://github.com/cozystack/cozystack/pull/854)
* [cluster-api] Remove the no longer necessary workaround for Kamaji. (@kvaps in https://github.com/cozystack/cozystack/pull/867, patched in https://github.com/cozystack/cozystack/pull/956)
* [monitoring] Remove legacy label "POD" from the exclude filter in metrics. (@xy2 in https://github.com/cozystack/cozystack/pull/826)

@@ -145,13 +50,22 @@ For more information, read the [Cozystack Release Workflow](https://github.com/c

* [postgres] Remove duplicated `template` entry from backup manifest. (@etoshutka in https://github.com/cozystack/cozystack/pull/872)
* [kube-ovn] Fix versions mapping in Makefile. (@kvaps in https://github.com/cozystack/cozystack/pull/883)
* [dx] Automatically detect version for migrations in `installer.sh`. (@kvaps in https://github.com/cozystack/cozystack/pull/837)
* [dx] Remove `version_map` and stop building library charts. (@kvaps in https://github.com/cozystack/cozystack/pull/998)
* [docs] Review the tenant Kubernetes cluster docs. (@NickVolynkin in https://github.com/cozystack/cozystack/pull/969)
* [docs] Explain that tenants cannot have dashes in their names. (@NickVolynkin in https://github.com/cozystack/cozystack/pull/980)
* [e2e] Increase timeout durations for `capi` and `keycloak` to improve reliability during environment setup. (@kvaps in https://github.com/cozystack/cozystack/pull/858)
* [e2e] Fix `device_ownership_from_security_context` CRI. (@dtrdnk in https://github.com/cozystack/cozystack/pull/896)
* [e2e] Return `genisoimage` to the e2e-test Dockerfile. (@gwynbleidd2106 in https://github.com/cozystack/cozystack/pull/962)
* [ci] Improve the check for `versions_map` running on pull requests. (@kvaps and @klinch0 in https://github.com/cozystack/cozystack/pull/836, https://github.com/cozystack/cozystack/pull/842, and https://github.com/cozystack/cozystack/pull/845)
* [ci] If the release step was skipped on a tag, skip tests as well. (@kvaps in https://github.com/cozystack/cozystack/pull/822)
* [ci] Allow CI to cancel the previous job if a new one is scheduled. (@kvaps in https://github.com/cozystack/cozystack/pull/873)
* [ci] Use the correct version name when uploading build assets to the release page. (@kvaps in https://github.com/cozystack/cozystack/pull/876)
* [ci] Stop using `ok-to-test` label to trigger CI in pull requests. (@kvaps in https://github.com/cozystack/cozystack/pull/875)
* [ci] Do not run tests in the release building pipeline. (@kvaps in https://github.com/cozystack/cozystack/pull/882)
* [ci] Fix release branch creation. (@kvaps in https://github.com/cozystack/cozystack/pull/884)
* [ci, dx] Reduce noise in the test logs by suppressing the `wget` progress bar. (@lllamnyp in https://github.com/cozystack/cozystack/pull/865)
* [ci] Revert "automatically trigger tests in releasing PR". (@kvaps in https://github.com/cozystack/cozystack/pull/900)

## Dependencies

* MetalLB images are now built in-tree based on version 0.14.9 with additional critical patches. (@lllamnyp in https://github.com/cozystack/cozystack/pull/945)
* MetalLB is now included directly as a patched image based on version 0.14.9. (@lllamnyp in https://github.com/cozystack/cozystack/pull/945)
* Update Kubernetes to v1.32.4. (@kvaps in https://github.com/cozystack/cozystack/pull/949)
* Update Talos Linux to v1.10.1. (@kvaps in https://github.com/cozystack/cozystack/pull/931)
* Update Cilium to v1.17.3. (@kvaps in https://github.com/cozystack/cozystack/pull/848)

@@ -160,84 +74,17 @@ For more information, read the [Cozystack Release Workflow](https://github.com/c

* Update tenant Kubernetes to v1.32. (@kvaps in https://github.com/cozystack/cozystack/pull/871)
* Update flux-operator to 0.20.0. (@kingdonb in https://github.com/cozystack/cozystack/pull/880 and https://github.com/cozystack/cozystack/pull/934)
* Update multiple Cluster API components. (@kvaps in https://github.com/cozystack/cozystack/pull/867 and https://github.com/cozystack/cozystack/pull/947)
* Update KamajiControlPlane to edge-25.4.1. (@kvaps in https://github.com/cozystack/cozystack/pull/953, fixed by @nbykov0 in https://github.com/cozystack/cozystack/pull/983)
* Update cert-manager to v1.17.2. (@kvaps in https://github.com/cozystack/cozystack/pull/975)
* Update KamajiControlPlane to edge-25.4.1. (@kvaps in https://github.com/cozystack/cozystack/pull/953)

## Documentation

## Maintenance

* [Installing Talos in Air-Gapped Environment](https://cozystack.io/docs/operations/talos/configuration/air-gapped/):
  new guide for configuring and bootstrapping Talos Linux clusters in air-gapped environments.
  (@klinch0 in https://github.com/cozystack/website/pull/203)
* Add @klinch0 to CODEOWNERS. (@kvaps in https://github.com/cozystack/cozystack/pull/838)

* [Cozystack Bundles](https://cozystack.io/docs/guides/bundles/): new page in the learning section explaining how Cozystack bundles work and how to choose a bundle.
  (@NickVolynkin in https://github.com/cozystack/website/pull/188, https://github.com/cozystack/website/pull/189, and others;
  updated by @kvaps in https://github.com/cozystack/website/pull/192 and https://github.com/cozystack/website/pull/193)

* [Managed Application Reference](https://cozystack.io/docs/reference/applications/): A set of new pages in the docs, mirroring application docs from the Cozystack dashboard.
  (@NickVolynkin in https://github.com/cozystack/website/pull/198, https://github.com/cozystack/website/pull/202, and https://github.com/cozystack/website/pull/204)

* **LINSTOR Networking**: Guides on [configuring a dedicated network for LINSTOR](https://cozystack.io/docs/operations/storage/dedicated-network/)
  and [configuring the network for distributed storage in a multi-datacenter setup](https://cozystack.io/docs/operations/stretched/linstor-dedicated-network/).
  (@xy2, edited by @NickVolynkin in https://github.com/cozystack/website/pull/171, https://github.com/cozystack/website/pull/182, and https://github.com/cozystack/website/pull/184)

### Fixes

* Correct an error in the docs for the command to edit the configmap. (@lb0o in https://github.com/cozystack/website/pull/207)
* Fix group name in OIDC docs. (@kingdonb in https://github.com/cozystack/website/pull/179)
* Explain Docker buildx builders in more detail. (@nbykov0 in https://github.com/cozystack/website/pull/187)

## Development, Testing, and CI/CD

### Testing

Improvements:

* Introduce `cozytest`, a new [BATS-based](https://github.com/bats-core/bats-core) testing framework. (@kvaps in https://github.com/cozystack/cozystack/pull/982)

Fixes:

* Fix `device_ownership_from_security_context` CRI. (@dtrdnk in https://github.com/cozystack/cozystack/pull/896)
* Increase timeout durations for `capi` and `keycloak` to improve reliability during e2e-tests. (@kvaps in https://github.com/cozystack/cozystack/pull/858)
* Return `genisoimage` to the e2e-test Dockerfile. (@gwynbleidd2106 in https://github.com/cozystack/cozystack/pull/962)

### CI/CD Changes

Improvements:

* Use release branches `release-X.Y` for gathering and releasing fixes after initial `vX.Y.0` release. (@kvaps in https://github.com/cozystack/cozystack/pull/816)
* Automatically create release branches after initial `vX.Y.0` release is published. (@kvaps in https://github.com/cozystack/cozystack/pull/886)
* Introduce Release Candidate versions. Automate patch backporting by applying patches from pull requests labeled `[backport]` to the current release branch. (@kvaps in https://github.com/cozystack/cozystack/pull/841 and https://github.com/cozystack/cozystack/pull/901, @nickvolynkin in https://github.com/cozystack/cozystack/pull/890)
* Support alpha and beta pre-releases. (@kvaps in https://github.com/cozystack/cozystack/pull/978)
* Commit changes in release pipelines under `github-actions <github-actions@github.com>`. (@kvaps in https://github.com/cozystack/cozystack/pull/823)
* Describe the Cozystack release workflow. (@NickVolynkin in https://github.com/cozystack/cozystack/pull/817 and https://github.com/cozystack/cozystack/pull/897)
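With alpha, beta, and release-candidate tags all in play, tooling has to order pre-releases of the same `X.Y.Z` version. The sketch below ranks suffixes as `alpha` < `beta` < `rc` < stable, then by number, which matches Semantic Versioning precedence for these suffixes. It assumes the alpha and beta tags follow the same `kind.N` shape as the `rc.2` suffix seen in these notes; that format and the function names are assumptions, not Cozystack's CI code.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// stage ranks pre-release kinds; the empty suffix (a stable release) ranks last.
var stage = map[string]int{"alpha": 0, "beta": 1, "rc": 2, "": 3}

// splitPre parses a suffix such as "rc.2" into its kind and number.
func splitPre(pre string) (kind string, n int, err error) {
	if pre == "" {
		return "", 0, nil
	}
	kind, num, ok := strings.Cut(pre, ".")
	if _, known := stage[kind]; !ok || !known || kind == "" {
		return "", 0, fmt.Errorf("unsupported pre-release %q", pre)
	}
	n, err = strconv.Atoi(num)
	if err != nil || n < 0 {
		return "", 0, fmt.Errorf("bad pre-release number in %q", pre)
	}
	return kind, n, nil
}

// comparePre orders two suffixes of the same X.Y.Z version and returns
// -1, 0 or 1: "alpha.1" < "beta.1" < "rc.1" < "rc.2" < "" (stable).
func comparePre(a, b string) (int, error) {
	ka, na, err := splitPre(a)
	if err != nil {
		return 0, err
	}
	kb, nb, err := splitPre(b)
	if err != nil {
		return 0, err
	}
	switch {
	case stage[ka] < stage[kb]:
		return -1, nil
	case stage[ka] > stage[kb]:
		return 1, nil
	case na < nb:
		return -1, nil
	case na > nb:
		return 1, nil
	}
	return 0, nil
}

func main() {
	c, err := comparePre("beta.1", "rc.1")
	if err != nil {
		panic(err)
	}
	fmt.Println("beta.1 vs rc.1:", c)
}
```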

Fixes:

* Improve the check for `versions_map` running on pull requests. (@kvaps and @klinch0 in https://github.com/cozystack/cozystack/pull/836, https://github.com/cozystack/cozystack/pull/842, and https://github.com/cozystack/cozystack/pull/845)
* If the release step was skipped on a tag, skip tests as well. (@kvaps in https://github.com/cozystack/cozystack/pull/822)
* Allow CI to cancel the previous job if a new one is scheduled. (@kvaps in https://github.com/cozystack/cozystack/pull/873)
* Use the correct version name when uploading build assets to the release page. (@kvaps in https://github.com/cozystack/cozystack/pull/876)
* Stop using `ok-to-test` label to trigger CI in pull requests. (@kvaps in https://github.com/cozystack/cozystack/pull/875)
* Do not run tests in the release building pipeline. (@kvaps in https://github.com/cozystack/cozystack/pull/882)
* Fix release branch creation. (@kvaps in https://github.com/cozystack/cozystack/pull/884)
* Reduce noise in the test logs by suppressing the `wget` progress bar. (@lllamnyp in https://github.com/cozystack/cozystack/pull/865)
* Revert "automatically trigger tests in releasing PR". (@kvaps in https://github.com/cozystack/cozystack/pull/900)
* Force-update release branch on tagged main commits. (@kvaps in https://github.com/cozystack/cozystack/pull/977)
* Show detailed errors in the `pull-request-release` workflow. (@lllamnyp in https://github.com/cozystack/cozystack/pull/992)

## Community and Maintenance

### Repository Maintenance

Added @klinch0 to CODEOWNERS. (@kvaps in https://github.com/cozystack/cozystack/pull/838)

### New Contributors

## New Contributors

* @etoshutka made their first contribution in https://github.com/cozystack/cozystack/pull/872
* @dtrdnk made their first contribution in https://github.com/cozystack/cozystack/pull/896
* @zdenekjanda made their first contribution in https://github.com/cozystack/cozystack/pull/924
* @gwynbleidd2106 made their first contribution in https://github.com/cozystack/cozystack/pull/962

## Full Changelog

See https://github.com/cozystack/cozystack/compare/v0.30.0...v0.31.0

**Full Changelog**: https://github.com/cozystack/cozystack/compare/v0.30.0...v0.31.0-rc.2

@@ -1,8 +0,0 @@

## Fixes

* [build] Update Talos Linux to v1.10.3 and fix assets. (@kvaps in https://github.com/cozystack/cozystack/pull/1006)
* [ci] Fix uploading released artifacts to GitHub. (@kvaps in https://github.com/cozystack/cozystack/pull/1009)
* [ci] Separate build and testing jobs. (@kvaps in https://github.com/cozystack/cozystack/pull/1005)
* [docs] Write a full release post for v0.31.1. (@NickVolynkin in https://github.com/cozystack/cozystack/pull/999)

**Full Changelog**: https://github.com/cozystack/cozystack/compare/v0.31.0...v0.31.1

@@ -1,12 +0,0 @@

## Security

* Resolve a security problem that allowed a tenant administrator to gain enhanced privileges outside the tenant. (@kvaps in https://github.com/cozystack/cozystack/pull/1062, backported in https://github.com/cozystack/cozystack/pull/1066)

## Fixes

* [platform] Fix dependencies in `distro-full` bundle. (@klinch0 in https://github.com/cozystack/cozystack/pull/1056, backported in https://github.com/cozystack/cozystack/pull/1064)
* [platform] Fix RBAC for annotating namespaces. (@kvaps in https://github.com/cozystack/cozystack/pull/1031, backported in https://github.com/cozystack/cozystack/pull/1037)
* [platform] Reduce system resource consumption by using smaller resource presets for VerticalPodAutoscaler, SeaweedFS, and KubeOVN. (@klinch0 in https://github.com/cozystack/cozystack/pull/1054, backported in https://github.com/cozystack/cozystack/pull/1058)
* [dashboard] Fix a number of issues in the Cozystack Dashboard. (@kvaps in https://github.com/cozystack/cozystack/pull/1042, backported in https://github.com/cozystack/cozystack/pull/1066)
* [apps] Specify minimal working resource presets. (@kvaps in https://github.com/cozystack/cozystack/pull/1040, backported in https://github.com/cozystack/cozystack/pull/1041)
* [apps] Update built-in documentation and configuration reference for managed Clickhouse application. (@NickVolynkin in https://github.com/cozystack/cozystack/pull/1059, backported in https://github.com/cozystack/cozystack/pull/1065)

@@ -1,71 +0,0 @@

Cozystack v0.32.0 is a significant release that brings new features, key fixes, and updates to underlying components.

## Major Features and Improvements

* [platform] Use `cozypkg` instead of Helm. (@kvaps in https://github.com/cozystack/cozystack/pull/1057)
* [platform] Introduce the HelmRelease reconciler for system components. (@kvaps in https://github.com/cozystack/cozystack/pull/1033)
* [kubernetes] Enable using container registry mirrors by tenant Kubernetes clusters. Configure containerd for tenant Kubernetes clusters. (@klinch0 in https://github.com/cozystack/cozystack/pull/979, patched by @lllamnyp in https://github.com/cozystack/cozystack/pull/1032)
* [platform] Allow users to specify CPU requests in vCPUs. Use a library chart for resource management. (@lllamnyp in https://github.com/cozystack/cozystack/pull/972 and https://github.com/cozystack/cozystack/pull/1025)
* [platform] Annotate all child objects of apps with uniform labels for tracking by WorkloadMonitors. (@lllamnyp in https://github.com/cozystack/cozystack/pull/1018 and https://github.com/cozystack/cozystack/pull/1024)
* [platform] Introduce `cluster-domain` option and un-hardcode `cozy.local`. (@kvaps in https://github.com/cozystack/cozystack/pull/1039)
* [platform] Get the instance type when reconciling WorkloadMonitor. (https://github.com/cozystack/cozystack/pull/1030)
* [virtual-machine] Add RBAC rules to allow port forwarding in KubeVirt for SSH via `virtctl`. (@mattia-eleuteri in https://github.com/cozystack/cozystack/pull/1027, patched by @klinch0 in https://github.com/cozystack/cozystack/pull/1028)
* [monitoring] Add events and audit inputs. (@kevin880202 in https://github.com/cozystack/cozystack/pull/948)
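The new `cluster-domain` option matters wherever in-cluster DNS names are built. The sketch below uses the standard Kubernetes Service DNS pattern `<service>.<namespace>.svc.<cluster-domain>`; falling back to `cozy.local` (the previously hardcoded value) when the option is empty is an assumption for illustration, as are the function name and the example service and namespace names.

```go
package main

import "fmt"

// legacyClusterDomain is the previously hardcoded domain; using it as the
// fallback is an assumption made for this illustration.
const legacyClusterDomain = "cozy.local"

// serviceFQDN builds a Service's in-cluster DNS name using the standard
// Kubernetes <service>.<namespace>.svc.<cluster-domain> pattern.
func serviceFQDN(service, namespace, clusterDomain string) string {
	if clusterDomain == "" {
		clusterDomain = legacyClusterDomain
	}
	return fmt.Sprintf("%s.%s.svc.%s", service, namespace, clusterDomain)
}

func main() {
	fmt.Println(serviceFQDN("my-service", "tenant-demo", ""))
	fmt.Println(serviceFQDN("my-service", "tenant-demo", "example.internal"))
}
```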

## Security

* Resolve a security problem that allowed a tenant administrator to gain enhanced privileges outside the tenant. (@kvaps in https://github.com/cozystack/cozystack/pull/1062)

## Fixes

* [dashboard] Fix a number of issues in the Cozystack Dashboard. (@kvaps in https://github.com/cozystack/cozystack/pull/1042)
* [kafka] Specify minimal working resource presets. (@kvaps in https://github.com/cozystack/cozystack/pull/1040)
* [cilium] Fix the Gateway API manifest. (@zdenekjanda in https://github.com/cozystack/cozystack/pull/1016)
* [platform] Fix RBAC for annotating namespaces. (@kvaps in https://github.com/cozystack/cozystack/pull/1031)
* [platform] Fix dependencies for paas-hosted bundle. (@kvaps in https://github.com/cozystack/cozystack/pull/1034)
* [platform] Reduce system resource consumption by using smaller resource presets for VerticalPodAutoscaler, SeaweedFS, and KubeOVN. (@klinch0 in https://github.com/cozystack/cozystack/pull/1054)
* [virtual-machine] Fix handling of cloudinit and ssh-key input for `virtual-machine` and `vm-instance` applications. (@gwynbleidd2106 in https://github.com/cozystack/cozystack/pull/1019 and https://github.com/cozystack/cozystack/pull/1020)
* [apps] Fix Clickhouse version parsing. (@kvaps in https://github.com/cozystack/cozystack/commit/28302e776e9d2bb8f424cf467619fa61d71ac49a)
* [apps] Add resource quotas for PostgreSQL jobs and fix application readme generation check in CI. (@klinch0 in https://github.com/cozystack/cozystack/pull/1051)
* [kube-ovn] Enable database health check. (@kvaps in https://github.com/cozystack/cozystack/pull/1047)
* [kubernetes] Fix an upstream issue by updating Kubevirt-CCM. (@kvaps in https://github.com/cozystack/cozystack/pull/1052)
* [kubernetes] Fix resources and introduce a migration when upgrading tenant Kubernetes to v0.32.4. (@kvaps in https://github.com/cozystack/cozystack/pull/1073)
* [cluster-api] Add a missing migration for `capi-providers`. (@kvaps in https://github.com/cozystack/cozystack/pull/1072)

## Dependencies

* Introduce `cozypkg` and update it to v1.1.0. (@kvaps in https://github.com/cozystack/cozystack/pull/1057 and https://github.com/cozystack/cozystack/pull/1063)
* Update flux-operator to 0.22.0 and Flux to 2.6.x. (@kingdonb in https://github.com/cozystack/cozystack/pull/1035)