mirror of

https://github.com/outbackdingo/cozystack.git

synced 2026-01-28 18:18:41 +00:00

Compare commits: project-do ... cilium (10 commits)

| Author | SHA1 | Date | |
|---|---|---|---|
| | 8055151d32 | | |
| | 2e3555600d | | |
| | 98f488fcac | | |
| | 1c6de1ccf5 | | |
| | 235a2fcf47 | | |
| | 24151b09f3 | | |
| | b37071f05e | | |
| | c64c6b549b | | |
| | df47d2f4a6 | | |
| | c0aea5a106 | | |

2

.gitignore

vendored


@@ -1 +1,3 @@
_out
.git
.idea

28

ADOPTERS.md

Normal file


@@ -0,0 +1,28 @@
# Adopters

Below you can find a list of organizations and users who have agreed to
tell the world that they are using Cozystack in a production environment.

The goal of this list is to inspire others to do the same and to grow
this open source community and project.

Please add your organization to this list. It takes 5 minutes of your time,
but it means a lot to us.

## Updating this list

To add your organization to this list, you can either:

- [open a pull request](https://github.com/aenix-io/cozystack/pulls) to directly update this file, or
- [edit this file](https://github.com/aenix-io/cozystack/blob/main/ADOPTERS.md) directly in GitHub

Feel free to ask in the Slack chat if you have any questions and/or require
assistance with updating this list.

## Cozystack Adopters

This list is sorted in chronological order, based on the submission date.

| Organization | Contact | Date | Description of Use |
| ------------ | ------- | ---- | ------------------ |
| [Ænix](https://aenix.io/) | @kvaps | 2024-02-14 | Ænix provides consulting services for cloud providers and uses Cozystack as the main tool for organizing managed services for them. |

3

CODE_OF_CONDUCT.md

Normal file


@@ -0,0 +1,3 @@
# Code of Conduct

Cozystack follows the [CNCF Code of Conduct](https://github.com/cncf/foundation/blob/master/code-of-conduct.md).

45

CONTRIBUTING.md

Normal file


@@ -0,0 +1,45 @@
# Contributing to Cozystack

Welcome! We are glad that you want to contribute to our Cozystack project! 💖

As you get started, you are in the best position to give us feedback on areas of our project that we need help with, including:

* Problems found while setting up the development environment
* Gaps in our documentation
* Bugs in our GitHub Actions

First, though, it is important that you read the [code of conduct](CODE_OF_CONDUCT.md).

The guidelines below are a starting point. We don't want to limit your
creativity, passion, and initiative. If you think there's a better way, please
feel free to bring it up in a GitHub discussion, or open a pull request. We're
certain there are always better ways to do things; we just need to start some
constructive dialogue!

## Ways to contribute

We welcome many types of contributions including:

* New features
* Builds, CI/CD
* Bug fixes
* [Documentation](https://github.com/aenix-io/cozystack-website/tree/main)
* Issue Triage
* Answering questions on Slack or GitHub Discussions
* Web design
* Communications / Social Media / Blog Posts
* Events participation
* Release management

## Ask for Help

The best way to reach us with a question when contributing is to drop a line in
our [Telegram channel](https://t.me/cozystack), or start a new GitHub discussion.

## Raising Issues

When raising issues, please specify the following:

- A scenario where the issue occurred (with details on how to reproduce it)
- Errors and log messages that are displayed by the involved software
- Any other detail that might be useful

7

MAINTAINERS.md

Normal file


@@ -0,0 +1,7 @@
# The Cozystack Maintainers

| Maintainer | GitHub Username | Company |
| ---------- | --------------- | ------- |
| Andrei Kvapil | [@kvaps](https://github.com/kvaps) | Ænix |
| George Gaál | [@gecube](https://github.com/gecube) | Ænix |
| Eduard Generalov | [@egeneralov](https://github.com/egeneralov) | Ænix |

553

README.md


@@ -10,7 +10,7 @@

|

||||

|

||||

# Cozystack

|

||||

|

||||

**Cozystack** is an open-source **PaaS platform** for cloud providers.

|

||||

**Cozystack** is a free PaaS platform and framework for building clouds.

|

||||

|

||||

With Cozystack, you can transform your bunch of servers into an intelligent system with a simple REST API for spawning Kubernetes clusters, Database-as-a-Service, virtual machines, load balancers, HTTP caching services, and other services with ease.

|

||||

|

||||

@@ -18,548 +18,53 @@ You can use Cozystack to build your own cloud or to provide a cost-effective dev

|

||||

|

||||

## Use-Cases

|

||||

|

||||

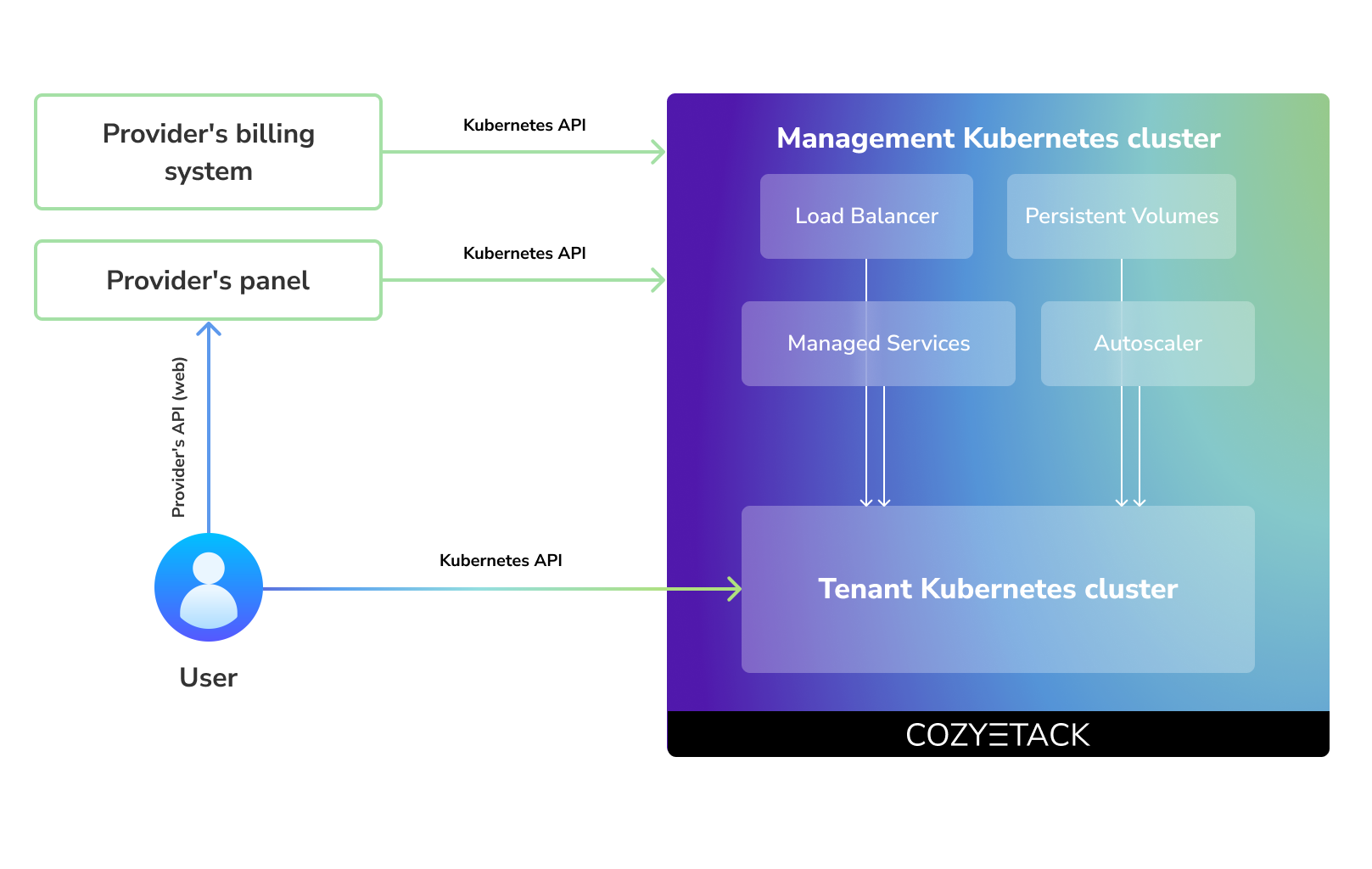

### As a backend for a public cloud

|

||||

* [**Using Cozystack to build public cloud**](https://cozystack.io/docs/use-cases/public-cloud/)

|

||||

You can use Cozystack as a backend for a public cloud

|

||||

|

||||

Cozystack positions itself as a kind of framework for building public clouds. The key word here is framework. In this case, it's important to understand that Cozystack is made for cloud providers, not for end users.

|

||||

* [**Using Cozystack to build private cloud**](https://cozystack.io/docs/use-cases/private-cloud/)

|

||||

You can use Cozystack as a platform to build a private cloud powered by an Infrastructure-as-Code approach

|

||||

|

||||

Despite having a graphical interface, the current security model does not imply public user access to your management cluster.

|

||||

|

||||

Instead, end users get access to their own Kubernetes clusters and can order LoadBalancers and additional services from them, but they have no access to, and know nothing about, your management cluster powered by Cozystack.

|

||||

|

||||

Thus, to integrate with your billing system, it's enough to teach your system to go to the management Kubernetes and place a YAML file signifying the service you're interested in. Cozystack will do the rest of the work for you.

|

||||

|

||||

|

||||

|

||||

### As a private cloud for Infrastructure-as-Code

|

||||

|

||||

One of the use cases is a self-service portal for users within your company, where they can order the service they're interested in or a managed database.

|

||||

|

||||

You can implement best GitOps practices, where users will launch their own Kubernetes clusters and databases for their needs with a simple commit of configuration into your infrastructure Git repository.

|

||||

|

||||

Thanks to the standardization of the approach to deploying applications, you can expand the platform's capabilities using the functionality of standard Helm charts.

|

||||

|

||||

### As a Kubernetes distribution for Bare Metal

|

||||

|

||||

We created Cozystack primarily for our own needs, having vast experience in building reliable systems on bare metal infrastructure. This experience led to the formation of a separate boxed product, which is aimed at standardizing and providing a ready-to-use tool for managing your infrastructure.

|

||||

|

||||

Currently, Cozystack already covers a wide range of infrastructure tasks out of the box: provisioning bare metal servers, a ready-made monitoring system, fast and reliable storage, a network fabric that can interconnect with your existing infrastructure, the ability to run virtual machines and databases, and much more.

|

||||

|

||||

All this makes Cozystack a convenient platform for delivering and launching your application on Bare Metal.

|

||||

* [**Using Cozystack as Kubernetes distribution**](https://cozystack.io/docs/use-cases/kubernetes-distribution/)

|

||||

You can use Cozystack as a Kubernetes distribution for Bare Metal

|

||||

|

||||

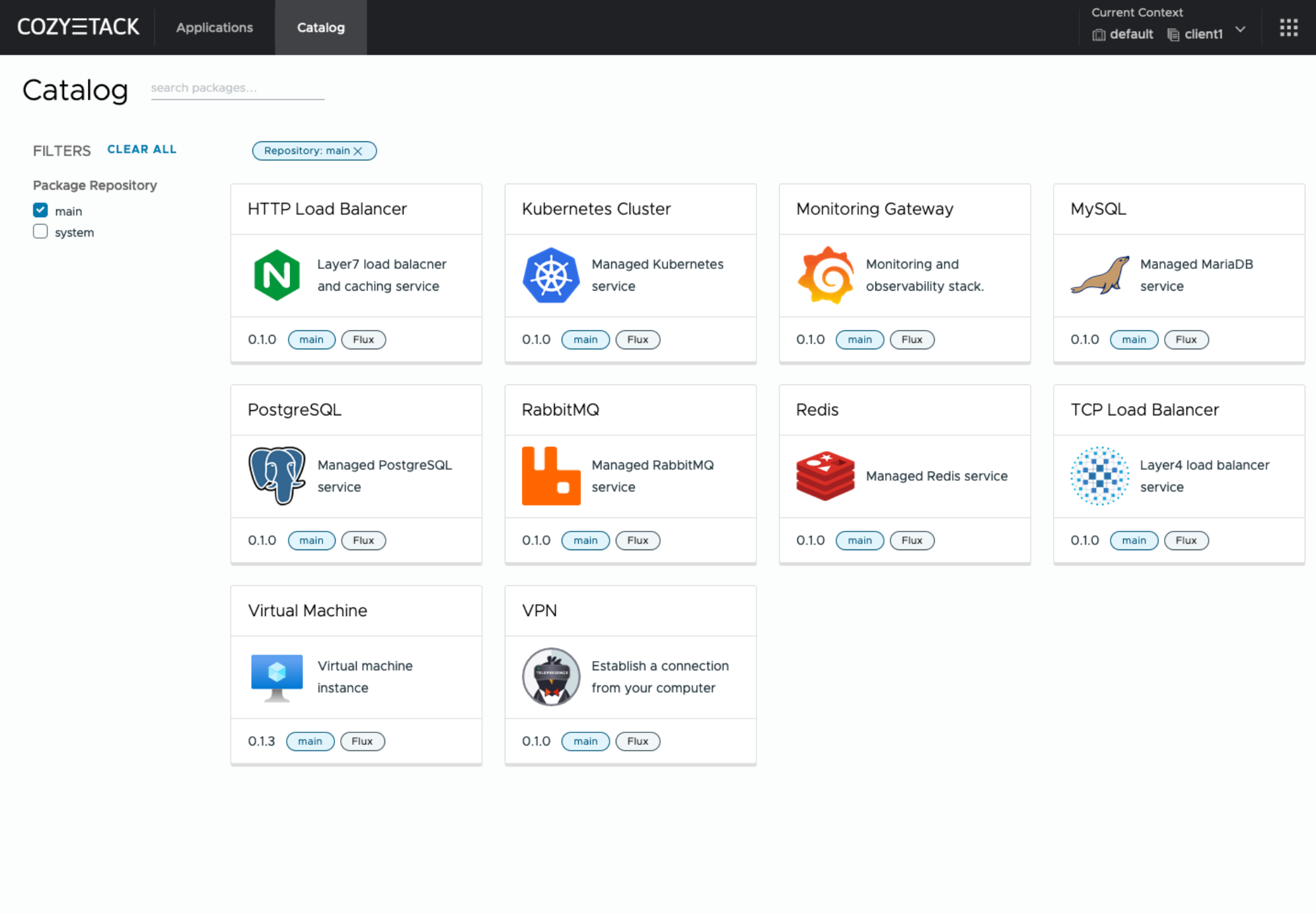

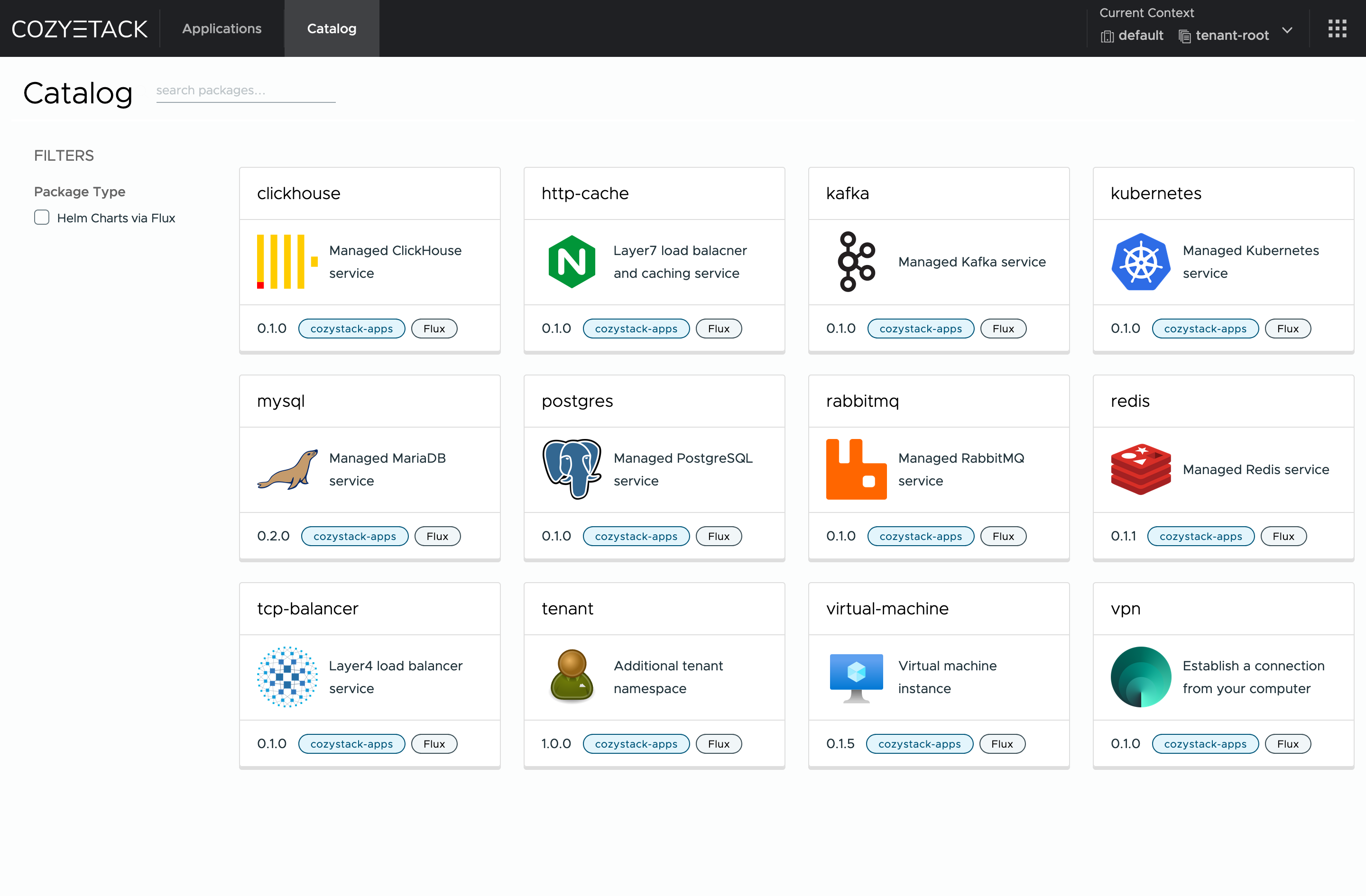

## Screenshot

|

||||

|

||||

|

||||

|

||||

|

||||

## Core values

|

||||

## Documentation

|

||||

|

||||

### Standardization and unification

|

||||

All components of the platform are based on open source tools and technologies which are widely known in the industry.

|

||||

The documentation is available on the official [cozystack.io](https://cozystack.io) website.

|

||||

|

||||

### Collaborate, not compete

|

||||

If a feature being developed for the platform could be useful to an upstream project, it should be contributed to that upstream project rather than implemented within the platform.

|

||||

Read the [Get Started](https://cozystack.io/docs/get-started/) section for a quick start.

|

||||

|

||||

### API-first

|

||||

Cozystack is based on Kubernetes and involves close interaction with its API. We don't aim to completely hide all the elements behind a pretty UI or any sort of customizations; instead, we provide a standard interface and teach users how to work with basic primitives. The web interface is used solely for deploying applications and quickly diving into the basic concepts of the platform.

|

||||

If you encounter any difficulties, start with the [troubleshooting guide](https://cozystack.io/docs/troubleshooting/), and work your way through the process that we've outlined.

|

||||

|

||||

## Quick Start

|

||||

## Versioning

|

||||

|

||||

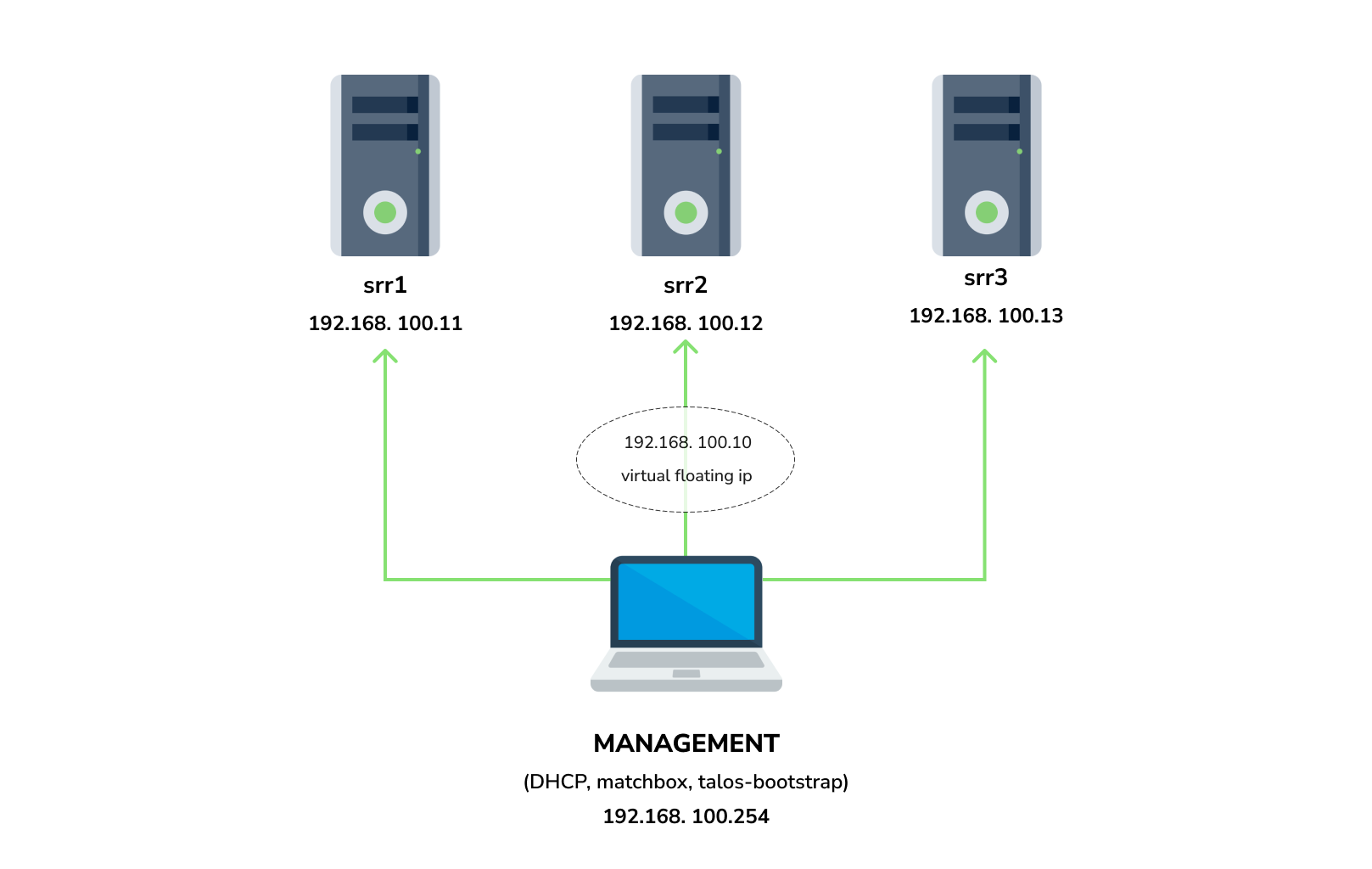

### Prepare infrastructure

|

||||

Versioning adheres to the [Semantic Versioning](http://semver.org/) principles.

|

||||

A full list of releases is available in the GitHub repository's [Release](https://github.com/aenix-io/cozystack/releases) section.

|

||||

|

||||

## Contributions

|

||||

|

||||

|

||||

Contributions are highly appreciated and very welcome!

|

||||

|

||||

You need 3 physical servers or VMs with nested virtualisation:

|

||||

In case of bugs, please check whether the issue has already been opened in the [GitHub Issues](https://github.com/aenix-io/cozystack/issues) section.

|

||||

In case it isn't, you can open a new one: a detailed report will help us to replicate it, assess it, and work on a fix.

|

||||

|

||||

```

|

||||

CPU: 4 cores

|

||||

CPU model: host

|

||||

RAM: 8-16 GB

|

||||

HDD1: 32 GB

|

||||

HDD2: 100GB (raw)

|

||||

```

|

||||

You can also state your intention to work on the fix yourself.

|

||||

Commits are used to generate the changelog, and their author will be referenced in it.

|

||||

|

||||

And one management VM or physical server connected to the same network.

|

||||

Any Linux system installed on it will do (e.g. Ubuntu should be enough).

|

||||

For **Feature Requests**, please use the [Discussion's Feature Request section](https://github.com/aenix-io/cozystack/discussions/categories/feature-requests).

|

||||

|

||||

**Note:** The VM should support the `x86-64-v2` architecture; in most cases you can achieve this by setting the CPU model to `host`.

|

||||

## License

|

||||

|

||||

#### Install dependencies:

|

||||

Cozystack is licensed under Apache 2.0.

|

||||

The code is provided as-is with no warranties.

|

||||

|

||||

- `docker`

|

||||

- `talosctl`

|

||||

- `dialog`

|

||||

- `nmap`

|

||||

- `make`

|

||||

- `yq`

|

||||

- `kubectl`

|

||||

- `helm`

|

||||

## Commercial Support

|

||||

|

||||

### Netboot server

|

||||

[**Ænix**](https://aenix.io) offers enterprise-grade support, available 24/7.

|

||||

|

||||

Start matchbox with the prebuilt Talos image for Cozystack:

|

||||

We provide all types of assistance, including consultations, development of missing features, design, assistance with installation, and integration.

|

||||

|

||||

```bash
sudo docker run --name=matchbox -d --net=host ghcr.io/aenix-io/cozystack/matchbox:v0.0.2 \
  -address=:8080 \
  -log-level=debug
```

Start the DHCP server:
```bash
sudo docker run --name=dnsmasq -d --cap-add=NET_ADMIN --net=host quay.io/poseidon/dnsmasq \
  -d -q -p0 \
  --dhcp-range=192.168.100.3,192.168.100.254 \
  --dhcp-option=option:router,192.168.100.1 \
  --enable-tftp \
  --tftp-root=/var/lib/tftpboot \
  --dhcp-match=set:bios,option:client-arch,0 \
  --dhcp-boot=tag:bios,undionly.kpxe \
  --dhcp-match=set:efi32,option:client-arch,6 \
  --dhcp-boot=tag:efi32,ipxe.efi \
  --dhcp-match=set:efibc,option:client-arch,7 \
  --dhcp-boot=tag:efibc,ipxe.efi \
  --dhcp-match=set:efi64,option:client-arch,9 \
  --dhcp-boot=tag:efi64,ipxe.efi \
  --dhcp-userclass=set:ipxe,iPXE \
  --dhcp-boot=tag:ipxe,http://192.168.100.254:8080/boot.ipxe \
  --log-queries \
  --log-dhcp
```

Where:
- `192.168.100.3,192.168.100.254` is the range to allocate IPs from
- `192.168.100.1` is your gateway
- `192.168.100.254` is the address of your management server

Check status of containers:

```bash
docker ps
```

Example output:

```console
CONTAINER ID   IMAGE                                        COMMAND                  CREATED          STATUS          PORTS     NAMES
22044f26f74d   quay.io/poseidon/dnsmasq                     "/usr/sbin/dnsmasq -…"   6 seconds ago    Up 5 seconds              dnsmasq
231ad81ff9e0   ghcr.io/aenix-io/cozystack/matchbox:v0.0.2   "/matchbox -address=…"   58 seconds ago   Up 57 seconds             matchbox
```

### Bootstrap cluster

Write configuration for Cozystack:

```yaml
cat > patch.yaml <<\EOT
machine:
  kubelet:
    nodeIP:
      validSubnets:
      - 192.168.100.0/24
  kernel:
    modules:
    - name: openvswitch
    - name: drbd
      parameters:
        - usermode_helper=disabled
    - name: zfs
  install:
    image: ghcr.io/aenix-io/cozystack/talos:v1.6.4
  files:
  - content: |
      [plugins]
        [plugins."io.containerd.grpc.v1.cri"]
          device_ownership_from_security_context = true
    path: /etc/cri/conf.d/20-customization.part
    op: create

cluster:
  network:
    cni:
      name: none
    podSubnets:
    - 10.244.0.0/16
    serviceSubnets:
    - 10.96.0.0/16
EOT

cat > patch-controlplane.yaml <<\EOT
cluster:
  allowSchedulingOnControlPlanes: true
  controllerManager:
    extraArgs:
      bind-address: 0.0.0.0
  scheduler:
    extraArgs:
      bind-address: 0.0.0.0
  apiServer:
    certSANs:
    - 127.0.0.1
  proxy:
    disabled: true
  discovery:
    enabled: false
  etcd:
    advertisedSubnets:
    - 192.168.100.0/24
EOT
```

Run [talos-bootstrap](https://github.com/aenix-io/talos-bootstrap/) to deploy the cluster:

```bash
talos-bootstrap install
```

Save the admin kubeconfig to access your Kubernetes cluster:
```bash
cp -i kubeconfig ~/.kube/config
```

Check the connection:
```bash
kubectl get ns
```

Example output:
```console
NAME              STATUS   AGE
default           Active   7m56s
kube-node-lease   Active   7m56s
kube-public       Active   7m56s
kube-system       Active   7m56s
```

**Note:** All nodes should currently show as "Not Ready". Don't worry about that: it is because you disabled the default CNI plugin in the previous step. Cozystack will install its own CNI plugin in the next step.

### Install Cozystack

Write the config for Cozystack:

**Note:** Make sure these settings match the ones specified in the `patch.yaml` and `patch-controlplane.yaml` files.

```yaml
cat > cozystack-config.yaml <<\EOT
apiVersion: v1
kind: ConfigMap
metadata:
  name: cozystack
  namespace: cozy-system
data:
  cluster-name: "cozystack"
  ipv4-pod-cidr: "10.244.0.0/16"
  ipv4-pod-gateway: "10.244.0.1"
  ipv4-svc-cidr: "10.96.0.0/16"
  ipv4-join-cidr: "100.64.0.0/16"
EOT
```
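
The note above asks for the ConfigMap values to agree with the Talos patches. As a minimal sketch, not part of Cozystack, the address plan can be sanity-checked with Python's standard `ipaddress` module. The function name `find_cidr_conflicts` and the dictionary labels are hypothetical; the CIDR values are copied from the examples in this guide.

```python
import ipaddress
from itertools import combinations


def find_cidr_conflicts(cidrs):
    """Return pairs of labels whose networks overlap (hypothetical helper)."""
    nets = {label: ipaddress.ip_network(cidr) for label, cidr in cidrs.items()}
    return [
        (a, b)
        for (a, net_a), (b, net_b) in combinations(nets.items(), 2)
        if net_a.overlaps(net_b)
    ]


# Values copied from patch.yaml and cozystack-config.yaml above.
plan = {
    "pod": "10.244.0.0/16",
    "service": "10.96.0.0/16",
    "join": "100.64.0.0/16",
    "node": "192.168.100.0/24",
}

conflicts = find_cidr_conflicts(plan)
# The pod gateway is expected to sit inside the pod CIDR.
gateway_ok = ipaddress.ip_address("10.244.0.1") in ipaddress.ip_network(plan["pod"])
print("conflicts:", conflicts, "| gateway inside pod CIDR:", gateway_ok)
```

An empty conflict list and a gateway inside the pod CIDR suggest the values are at least self-consistent; it does not prove they match what is deployed on the nodes.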

Create the namespace and install the Cozystack system components:

```bash
kubectl create ns cozy-system
kubectl apply -f cozystack-config.yaml
kubectl apply -f manifests/cozystack-installer.yaml
```

(optional) You can track the logs of the installer:
```bash
kubectl logs -n cozy-system deploy/cozystack -f
```

Wait for a while, then check the status of the installation:
```bash
kubectl get hr -A
```

Wait until all releases reach the `Ready` state:
```console
NAMESPACE                       NAME                       AGE   READY  STATUS
cozy-cert-manager               cert-manager               4m1s  True   Release reconciliation succeeded
cozy-cert-manager               cert-manager-issuers       4m1s  True   Release reconciliation succeeded
cozy-cilium                     cilium                     4m1s  True   Release reconciliation succeeded
cozy-cluster-api                capi-operator              4m1s  True   Release reconciliation succeeded
cozy-cluster-api                capi-providers             4m1s  True   Release reconciliation succeeded
cozy-dashboard                  dashboard                  4m1s  True   Release reconciliation succeeded
cozy-fluxcd                     cozy-fluxcd                4m1s  True   Release reconciliation succeeded
cozy-grafana-operator           grafana-operator           4m1s  True   Release reconciliation succeeded
cozy-kamaji                     kamaji                     4m1s  True   Release reconciliation succeeded
cozy-kubeovn                    kubeovn                    4m1s  True   Release reconciliation succeeded
cozy-kubevirt-cdi               kubevirt-cdi               4m1s  True   Release reconciliation succeeded
cozy-kubevirt-cdi               kubevirt-cdi-operator      4m1s  True   Release reconciliation succeeded
cozy-kubevirt                   kubevirt                   4m1s  True   Release reconciliation succeeded
cozy-kubevirt                   kubevirt-operator          4m1s  True   Release reconciliation succeeded
cozy-linstor                    linstor                    4m1s  True   Release reconciliation succeeded
cozy-linstor                    piraeus-operator           4m1s  True   Release reconciliation succeeded
cozy-mariadb-operator           mariadb-operator           4m1s  True   Release reconciliation succeeded
cozy-metallb                    metallb                    4m1s  True   Release reconciliation succeeded
cozy-monitoring                 monitoring                 4m1s  True   Release reconciliation succeeded
cozy-postgres-operator          postgres-operator          4m1s  True   Release reconciliation succeeded
cozy-rabbitmq-operator          rabbitmq-operator          4m1s  True   Release reconciliation succeeded
cozy-redis-operator             redis-operator             4m1s  True   Release reconciliation succeeded
cozy-telepresence               telepresence               4m1s  True   Release reconciliation succeeded
cozy-victoria-metrics-operator  victoria-metrics-operator  4m1s  True   Release reconciliation succeeded
tenant-root                     tenant-root                4m1s  True   Release reconciliation succeeded
```

#### Configure Storage

Set up an alias to access LINSTOR:
```bash
alias linstor='kubectl exec -n cozy-linstor deploy/linstor-controller -- linstor'
```

List your nodes:
```bash
linstor node list
```

Example output:

```console
+-------------------------------------------------------+
| Node | NodeType  | Addresses                 | State  |
|=======================================================|
| srv1 | SATELLITE | 192.168.100.11:3367 (SSL) | Online |
| srv2 | SATELLITE | 192.168.100.12:3367 (SSL) | Online |
| srv3 | SATELLITE | 192.168.100.13:3367 (SSL) | Online |
+-------------------------------------------------------+
```

List empty devices:

```bash
linstor physical-storage list
```

Example output:
```console
+--------------------------------------------+
| Size         | Rotational | Nodes          |
|============================================|
| 107374182400 | True       | srv3[/dev/sdb] |
|              |            | srv1[/dev/sdb] |
|              |            | srv2[/dev/sdb] |
+--------------------------------------------+
```

Create storage pools:

```bash
linstor ps cdp lvm srv1 /dev/sdb --pool-name data --storage-pool data
linstor ps cdp lvm srv2 /dev/sdb --pool-name data --storage-pool data
linstor ps cdp lvm srv3 /dev/sdb --pool-name data --storage-pool data
```

List storage pools:

```bash
linstor sp l
```

Example output:

```console
+-------------------------------------------------------------------------------------------------------------------------------------+
| StoragePool          | Node | Driver   | PoolName | FreeCapacity | TotalCapacity | CanSnapshots | State | SharedName                |
|=====================================================================================================================================|
| DfltDisklessStorPool | srv1 | DISKLESS |          |              |               | False        | Ok    | srv1;DfltDisklessStorPool |
| DfltDisklessStorPool | srv2 | DISKLESS |          |              |               | False        | Ok    | srv2;DfltDisklessStorPool |
| DfltDisklessStorPool | srv3 | DISKLESS |          |              |               | False        | Ok    | srv3;DfltDisklessStorPool |
| data                 | srv1 | LVM      | data     |   100.00 GiB |    100.00 GiB | False        | Ok    | srv1;data                 |
| data                 | srv2 | LVM      | data     |   100.00 GiB |    100.00 GiB | False        | Ok    | srv2;data                 |
| data                 | srv3 | LVM      | data     |   100.00 GiB |    100.00 GiB | False        | Ok    | srv3;data                 |
+-------------------------------------------------------------------------------------------------------------------------------------+
```
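
The `Size` value in the `physical-storage list` output and the capacity in the storage pool listing appear to describe the same 100 GB disk in different units. A small sketch of the conversion, assuming the size is reported in bytes (the helper name `bytes_to_gib` is hypothetical):

```python
def bytes_to_gib(size_bytes):
    """Convert a size in bytes to gibibytes (1 GiB = 2**30 bytes)."""
    return size_bytes / 2**30


# Size taken from the example `linstor physical-storage list` output above.
print(f"{bytes_to_gib(107374182400):.2f} GiB")
```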

Create default storage classes:
```yaml
kubectl create -f- <<EOT
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: linstor.csi.linbit.com
parameters:
  linstor.csi.linbit.com/storagePool: "data"
  linstor.csi.linbit.com/layerList: "storage"
  linstor.csi.linbit.com/allowRemoteVolumeAccess: "false"
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: replicated
provisioner: linstor.csi.linbit.com
parameters:
  linstor.csi.linbit.com/storagePool: "data"
  linstor.csi.linbit.com/autoPlace: "3"
  linstor.csi.linbit.com/layerList: "drbd storage"
  linstor.csi.linbit.com/allowRemoteVolumeAccess: "true"
  property.linstor.csi.linbit.com/DrbdOptions/auto-quorum: suspend-io
  property.linstor.csi.linbit.com/DrbdOptions/Resource/on-no-data-accessible: suspend-io
  property.linstor.csi.linbit.com/DrbdOptions/Resource/on-suspended-primary-outdated: force-secondary
  property.linstor.csi.linbit.com/DrbdOptions/Net/rr-conflict: retry-connect
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
EOT
```

List the storage classes:

```bash
kubectl get storageclasses
```

Example output:
```console
NAME              PROVISIONER              RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
local (default)   linstor.csi.linbit.com   Delete          WaitForFirstConsumer   true                   11m
replicated        linstor.csi.linbit.com   Delete          WaitForFirstConsumer   true                   11m
```

#### Configure Networking interconnection

To access your services, select a range of unused IPs, e.g. `192.168.100.200-192.168.100.250`.

**Note:** These IPs should be from the same network as the nodes, or all the necessary routes to them should be in place.

Configure MetalLB to use and announce this range:
```yaml
kubectl create -f- <<EOT
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: cozystack
  namespace: cozy-metallb
spec:
  ipAddressPools:
  - cozystack
---
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: cozystack
  namespace: cozy-metallb
spec:
  addresses:
  - 192.168.100.200-192.168.100.250
  autoAssign: true
  avoidBuggyIPs: false
EOT
```
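
Before applying the pool, it can help to confirm that the chosen range really lies in the node network and to see how many addresses it provides. The following is a rough sketch using only Python's standard `ipaddress` module; the helper names `parse_pool`, `pool_size` and `pool_in_network` are hypothetical and are not part of MetalLB.

```python
import ipaddress


def parse_pool(range_spec):
    """Parse a 'start-end' address range as used in the pool above."""
    start_text, end_text = range_spec.split("-")
    start = ipaddress.ip_address(start_text.strip())
    end = ipaddress.ip_address(end_text.strip())
    if start > end:
        raise ValueError(f"range start {start} is after range end {end}")
    return start, end


def pool_size(start, end):
    """Number of addresses in the inclusive range."""
    return int(end) - int(start) + 1


def pool_in_network(start, end, network):
    """True if both ends (and therefore the whole range) lie in the network."""
    net = ipaddress.ip_network(network)
    return start in net and end in net


start, end = parse_pool("192.168.100.200-192.168.100.250")
print(pool_size(start, end), pool_in_network(start, end, "192.168.100.0/24"))
```

The node network `192.168.100.0/24` is taken from the `validSubnets` setting in `patch.yaml`; whether the addresses are actually unused still has to be checked on the network itself.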

#### Setup basic applications

Get token from `tenant-root`:
```bash
kubectl get secret -n tenant-root tenant-root -o go-template='{{ printf "%s\n" (index .data "token" | base64decode) }}'
```
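
Kubernetes stores Secret `data` values base64-encoded, which is why the command above pipes the field through `base64decode`. As an illustration only, the same decoding step looks like this in Python; `decode_secret_value` is a hypothetical helper and the sample token is a placeholder, not a real credential.

```python
import base64


def decode_secret_value(encoded):
    """Decode one base64-encoded value from a Secret's `data` map."""
    return base64.b64decode(encoded).decode("utf-8")


# Placeholder standing in for the value of `.data.token`.
sample = base64.b64encode(b"example-token").decode("ascii")
print(decode_secret_value(sample))
```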

Enable port forwarding to cozy-dashboard:
```bash
kubectl port-forward -n cozy-dashboard svc/dashboard 8080:80
```

Open: http://localhost:8080/

- Select `tenant-root`
- Click the `Upgrade` button
- In `host`, enter the domain you wish to use as the parent domain for all deployed applications

  **Note:**
  - if you have no domain yet, you can use `192.168.100.200.nip.io`, where `192.168.100.200` is the first IP address in your network address range.
  - alternatively, you can leave the default value; however, you will need to modify your `/etc/hosts` every time you want to access a specific application.
- Set `etcd`, `monitoring` and `ingress` to the enabled position
- Click Deploy
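
The nip.io option works because nip.io is a wildcard DNS service: a name that embeds an IPv4 address resolves to that address. A short sketch of how application hostnames are formed under such a parent domain (the helper names `nip_io_domain` and `app_hostname` are hypothetical):

```python
import ipaddress


def nip_io_domain(ip):
    """Build a nip.io parent domain for an IPv4 address."""
    ipaddress.IPv4Address(ip)  # raises ValueError if `ip` is not valid IPv4
    return f"{ip}.nip.io"


def app_hostname(app, parent_domain):
    """Hostname of an application published under the parent domain."""
    return f"{app}.{parent_domain}"


parent = nip_io_domain("192.168.100.200")
print(app_hostname("grafana", parent))
```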

Check that the persistent volumes were provisioned:

```bash
kubectl get pvc -n tenant-root
```

Example output:
```console
NAME                                     STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   VOLUMEATTRIBUTESCLASS   AGE
data-etcd-0                              Bound    pvc-4cbd29cc-a29f-453d-b412-451647cd04bf   10Gi       RWO            local          <unset>                 2m10s
data-etcd-1                              Bound    pvc-1579f95a-a69d-4a26-bcc2-b15ccdbede0d   10Gi       RWO            local          <unset>                 115s
data-etcd-2                              Bound    pvc-907009e5-88bf-4d18-91e7-b56b0dbfb97e   10Gi       RWO            local          <unset>                 91s
grafana-db-1                             Bound    pvc-7b3f4e23-228a-46fd-b820-d033ef4679af   10Gi       RWO            local          <unset>                 2m41s
grafana-db-2                             Bound    pvc-ac9b72a4-f40e-47e8-ad24-f50d843b55e4   10Gi       RWO            local          <unset>                 113s
vmselect-cachedir-vmselect-longterm-0    Bound    pvc-622fa398-2104-459f-8744-565eee0a13f1   2Gi        RWO            local          <unset>                 2m21s
vmselect-cachedir-vmselect-longterm-1    Bound    pvc-fc9349f5-02b2-4e25-8bef-6cbc5cc6d690   2Gi        RWO            local          <unset>                 2m21s
vmselect-cachedir-vmselect-shortterm-0   Bound    pvc-7acc7ff6-6b9b-4676-bd1f-6867ea7165e2   2Gi        RWO            local          <unset>                 2m41s
vmselect-cachedir-vmselect-shortterm-1   Bound    pvc-e514f12b-f1f6-40ff-9838-a6bda3580eb7   2Gi        RWO            local          <unset>                 2m40s
vmstorage-db-vmstorage-longterm-0        Bound    pvc-e8ac7fc3-df0d-4692-aebf-9f66f72f9fef   10Gi       RWO            local          <unset>                 2m21s
vmstorage-db-vmstorage-longterm-1        Bound    pvc-68b5ceaf-3ed1-4e5a-9568-6b95911c7c3a   10Gi       RWO            local          <unset>                 2m21s
vmstorage-db-vmstorage-shortterm-0       Bound    pvc-cee3a2a4-5680-4880-bc2a-85c14dba9380   10Gi       RWO            local          <unset>                 2m41s
vmstorage-db-vmstorage-shortterm-1       Bound    pvc-d55c235d-cada-4c4a-8299-e5fc3f161789   10Gi       RWO            local          <unset>                 2m41s
```

Check that all pods are running:

```bash
kubectl get pod -n tenant-root
```

Example output:
```console
NAME                                           READY   STATUS    RESTARTS       AGE
etcd-0                                         1/1     Running   0              2m1s
etcd-1                                         1/1     Running   0              106s
etcd-2                                         1/1     Running   0              82s
grafana-db-1                                   1/1     Running   0              119s
grafana-db-2                                   1/1     Running   0              13s
grafana-deployment-74b5656d6-5dcvn             1/1     Running   0              90s
grafana-deployment-74b5656d6-q5589             1/1     Running   1 (105s ago)   111s
root-ingress-controller-6ccf55bc6d-pg79l       2/2     Running   0              2m27s
root-ingress-controller-6ccf55bc6d-xbs6x       2/2     Running   0              2m29s
root-ingress-defaultbackend-686bcbbd6c-5zbvp   1/1     Running   0              2m29s
vmalert-vmalert-644986d5c-7hvwk                2/2     Running   0              2m30s
vmalertmanager-alertmanager-0                  2/2     Running   0              2m32s
vmalertmanager-alertmanager-1                  2/2     Running   0              2m31s
vminsert-longterm-75789465f-hc6cz              1/1     Running   0              2m10s
vminsert-longterm-75789465f-m2v4t              1/1     Running   0              2m12s
vminsert-shortterm-78456f8fd9-wlwww            1/1     Running   0              2m29s
vminsert-shortterm-78456f8fd9-xg7cw            1/1     Running   0              2m28s
vmselect-longterm-0                            1/1     Running   0              2m12s
vmselect-longterm-1                            1/1     Running   0              2m12s
vmselect-shortterm-0                           1/1     Running   0              2m31s
vmselect-shortterm-1                           1/1     Running   0              2m30s
vmstorage-longterm-0                           1/1     Running   0              2m12s
vmstorage-longterm-1                           1/1     Running   0              2m12s
vmstorage-shortterm-0                          1/1     Running   0              2m32s
vmstorage-shortterm-1                          1/1     Running   0              2m31s
```

Now you can get the public IP of the ingress controller:
```bash
kubectl get svc -n tenant-root root-ingress-controller
```

Example output:
```console
NAME                      TYPE           CLUSTER-IP     EXTERNAL-IP       PORT(S)                      AGE
root-ingress-controller   LoadBalancer   10.96.16.141   192.168.100.200   80:31632/TCP,443:30113/TCP   3m33s
```

Use `grafana.example.org` (pointing to 192.168.100.200) to access system monitoring, where `example.org` is the domain you specified for `tenant-root`.

- login: `admin`
- password:

```bash
kubectl get secret -n tenant-root grafana-admin-password -o go-template='{{ printf "%s\n" (index .data "password" | base64decode) }}'
```

[Contact us](https://aenix.io/contact/)

|

||||

|

||||

@@ -12,9 +12,6 @@ talos_version=$(awk '/^version:/ {print $2}' packages/core/installer/images/talo

set -x

sed -i "s|\(ghcr.io/aenix-io/cozystack/matchbox:\)v[^ ]\+|\1${version}|g" README.md
sed -i "s|\(ghcr.io/aenix-io/cozystack/talos:\)v[^ ]\+|\1${talos_version}|g" README.md

sed -i "/^TAG / s|=.*|= ${version}|" \
  packages/apps/http-cache/Makefile \
  packages/apps/kubernetes/Makefile \
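
The `sed` substitutions in this hunk rewrite the version tag that follows an image name. The sketch below shows the same idea in Python for readers less familiar with `sed` capture groups. It is an illustration, not the project's script: `bump_image_tag` is a hypothetical helper, `v1.6.5` is a made-up tag, and unlike the `sed` pattern it escapes the dots in the image name and stops at newlines as well as spaces.

```python
import re


def bump_image_tag(text, image, new_tag):
    """Replace the `v...` tag that follows `image:` with `new_tag`."""
    # Group 1 keeps the image name and colon; the tag runs to the next
    # space or newline (sed works per line, Python does not).
    pattern = "(" + re.escape(image) + r":)v[^ \n]+"
    return re.sub(pattern, lambda m: m.group(1) + new_tag, text)


line = "image: ghcr.io/aenix-io/cozystack/talos:v1.6.4"
print(bump_image_tag(line, "ghcr.io/aenix-io/cozystack/talos", "v1.6.5"))
```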

@@ -61,8 +61,6 @@ spec:
  selector:
    matchLabels:
      app: cozystack
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
@@ -72,14 +70,26 @@ spec:
      serviceAccountName: cozystack
      containers:
      - name: cozystack
        image: "ghcr.io/aenix-io/cozystack/installer:v0.0.2"
        image: "ghcr.io/aenix-io/cozystack/cozystack:v0.1.0"
        env:
        - name: KUBERNETES_SERVICE_HOST
          value: localhost
        - name: KUBERNETES_SERVICE_PORT
          value: "7445"
        - name: K8S_AWAIT_ELECTION_ENABLED
          value: "1"
        - name: K8S_AWAIT_ELECTION_NAME
          value: cozystack
        - name: K8S_AWAIT_ELECTION_LOCK_NAME
          value: cozystack
        - name: K8S_AWAIT_ELECTION_LOCK_NAMESPACE
          value: cozy-system
        - name: K8S_AWAIT_ELECTION_IDENTITY
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
      - name: darkhttpd
        image: "ghcr.io/aenix-io/cozystack/installer:v0.0.2"
        image: "ghcr.io/aenix-io/cozystack/cozystack:v0.1.0"
        command:
        - /usr/bin/darkhttpd
        - /cozystack/assets

@@ -2,7 +2,7 @@ PUSH := 1

|

||||

LOAD := 0

|

||||

REGISTRY := ghcr.io/aenix-io/cozystack

|

||||

NGINX_CACHE_TAG = v0.1.0

|

||||

TAG := v0.0.2

|

||||

TAG := v0.1.0

|

||||

|

||||

image: image-nginx

|

||||

|

||||

|

||||

@@ -1,14 +1,4 @@

|

||||

{

|

||||

"containerimage.config.digest": "sha256:f4ad0559a74749de0d11b1835823bf9c95332962b0909450251d849113f22c19",

|

||||

"containerimage.descriptor": {

|

||||

"mediaType": "application/vnd.docker.distribution.manifest.v2+json",

|

||||

"digest": "sha256:3a0e8d791e0ccf681711766387ea9278e7d39f1956509cead2f72aa0001797ef",

|

||||

"size": 1093,

|

||||

"platform": {

|

||||

"architecture": "amd64",

|

||||

"os": "linux"

|

||||

}

|

||||

},

|

||||

"containerimage.digest": "sha256:3a0e8d791e0ccf681711766387ea9278e7d39f1956509cead2f72aa0001797ef",

|

||||

"image.name": "ghcr.io/aenix-io/cozystack/nginx-cache:v0.1.0,ghcr.io/aenix-io/cozystack/nginx-cache:v0.1.0-v0.0.2"

|

||||

"containerimage.config.digest": "sha256:318fd8d0d6f6127387042f6ad150e87023d1961c7c5059dd5324188a54b0ab4e",

|

||||

"containerimage.digest": "sha256:e3cf145238e6e45f7f13b9acaea445c94ff29f76a34ba9fa50828401a5a3cc68"

|

||||

}

|

||||

@@ -1,7 +1,7 @@

|

||||

PUSH := 1

|

||||

LOAD := 0

|

||||

REGISTRY := ghcr.io/aenix-io/cozystack

|

||||

TAG := v0.0.2

|

||||

TAG := v0.1.0

|

||||

UBUNTU_CONTAINER_DISK_TAG = v1.29.1

|

||||

|

||||

image: image-ubuntu-container-disk

|

||||

|

||||

@@ -1,4 +1,4 @@

|

||||

{

|

||||

"containerimage.config.digest": "sha256:e982cfa2320d3139ed311ae44bcc5ea18db7e4e76d2746e0af04c516288ff0f1",

|

||||

"containerimage.digest": "sha256:34f6aba5b5a2afbb46bbb891ef4ddc0855c2ffe4f9e5a99e8e553286ddd2c070"

|

||||

"containerimage.config.digest": "sha256:ee8968be63c7c45621ec45f3687211e0875acb24e8d9784e8d2ebcbf46a3538c",

|

||||

"containerimage.digest": "sha256:16c3c07e74212585786dc1f1ae31d3ab90a575014806193e8e37d1d7751cb084"

|

||||

}

|

||||

@@ -3,7 +3,7 @@ NAME=installer

|

||||

PUSH := 1

|

||||

LOAD := 0

|

||||

REGISTRY := ghcr.io/aenix-io/cozystack

|

||||

TAG := v0.0.2

|

||||

TAG := v0.1.0

|

||||

TALOS_VERSION=$(shell awk '/^version:/ {print $$2}' images/talos/profiles/installer.yaml)

|

||||

|

||||

show:

|

||||

@@ -18,18 +18,18 @@ diff:

|

||||

update:

|

||||

hack/gen-profiles.sh

|

||||

|

||||

image: image-installer image-talos image-matchbox

|

||||

image: image-cozystack image-talos image-matchbox

|

||||

|

||||

image-installer:

|

||||

docker buildx build -f images/installer/Dockerfile ../../.. \

|

||||

image-cozystack:

|

||||

docker buildx build -f images/cozystack/Dockerfile ../../.. \

|

||||

--provenance false \

|

||||

--tag $(REGISTRY)/installer:$(TAG) \

|

||||

--cache-from type=registry,ref=$(REGISTRY)/installer:$(TAG) \

|

||||

--tag $(REGISTRY)/cozystack:$(TAG) \

|

||||

--cache-from type=registry,ref=$(REGISTRY)/cozystack:$(TAG) \

|

||||

--cache-to type=inline \

|

||||

--metadata-file images/installer.json \

|

||||

--metadata-file images/cozystack.json \

|

||||

--push=$(PUSH) \

|

||||

--load=$(LOAD)

|

||||

echo "$(REGISTRY)/installer:$(TAG)" > images/installer.tag

|

||||

echo "$(REGISTRY)/cozystack:$(TAG)" > images/cozystack.tag

|

||||

|

||||

image-talos:

|

||||

test -f ../../../_out/assets/installer-amd64.tar || make talos-installer

|

||||

@@ -43,14 +43,18 @@ image-matchbox:

|

||||

docker buildx build -f images/matchbox/Dockerfile ../../.. \

|

||||

--provenance false \

|

||||

--tag $(REGISTRY)/matchbox:$(TAG) \

|

||||

--cache-from type=registry,ref=$(REGISTRY)/matchbox:$(TAG) \

|

||||

--tag $(REGISTRY)/matchbox:$(TALOS_VERSION)-$(TAG) \

|

||||

--cache-from type=registry,ref=$(REGISTRY)/matchbox:$(TALOS_VERSION) \

|

||||

--cache-to type=inline \

|

||||

--metadata-file images/matchbox.json \

|

||||

--push=$(PUSH) \

|

||||

--load=$(LOAD)

|

||||

echo "$(REGISTRY)/matchbox:$(TAG)" > images/matchbox.tag

|

||||

echo "$(REGISTRY)/matchbox:$(TALOS_VERSION)" > images/matchbox.tag

|

||||

|

||||

assets: talos-iso

|

||||

|

||||

talos-initramfs talos-kernel talos-installer talos-iso:

|

||||

cat images/talos/profiles/$(subst talos-,,$@).yaml | docker run --rm -i -v $${PWD}/../../../_out/assets:/out -v /dev:/dev --privileged "ghcr.io/siderolabs/imager:$(TALOS_VERSION)" -

|

||||

mkdir -p ../../../_out/assets

|

||||

cat images/talos/profiles/$(subst talos-,,$@).yaml | \

|

||||

docker run --rm -i -v /dev:/dev --privileged "ghcr.io/siderolabs/imager:$(TALOS_VERSION)" --tar-to-stdout - | \

|

||||

tar -C ../../../_out/assets -xzf-

|

||||

|

||||

4

packages/core/installer/images/cozystack.json

Normal file

@@ -0,0 +1,4 @@

|

||||

{

|

||||

"containerimage.config.digest": "sha256:ec8a4983a663f06a1503507482667a206e83e0d8d3663dff60ced9221855d6b0",

|

||||

"containerimage.digest": "sha256:abb7b2fbc1f143c922f2a35afc4423a74b2b63c0bddfe620750613ed835aa861"

|

||||

}

|

||||

1

packages/core/installer/images/cozystack.tag

Normal file

@@ -0,0 +1 @@

|

||||

ghcr.io/aenix-io/cozystack/cozystack:v0.1.0

|

||||

@@ -1,3 +1,15 @@

+FROM golang:alpine3.19 as k8s-await-election-builder
+
+ARG K8S_AWAIT_ELECTION_GITREPO=https://github.com/LINBIT/k8s-await-election
+ARG K8S_AWAIT_ELECTION_VERSION=0.4.1
+
+RUN apk add --no-cache git make
+RUN git clone ${K8S_AWAIT_ELECTION_GITREPO} /usr/local/go/k8s-await-election/ \
+ && cd /usr/local/go/k8s-await-election \
+ && git reset --hard v${K8S_AWAIT_ELECTION_VERSION} \
+ && make \
+ && mv ./out/k8s-await-election-amd64 /k8s-await-election
+
 FROM alpine:3.19 AS builder
 
 RUN apk add --no-cache make git
@@ -18,7 +30,8 @@ COPY scripts /cozystack/scripts
 COPY --from=builder /src/packages/core /cozystack/packages/core
 COPY --from=builder /src/packages/system /cozystack/packages/system
 COPY --from=builder /src/_out/repos /cozystack/assets/repos
+COPY --from=k8s-await-election-builder /k8s-await-election /usr/bin/k8s-await-election
 COPY dashboards /cozystack/assets/dashboards
 
 WORKDIR /cozystack
-ENTRYPOINT [ "/cozystack/scripts/installer.sh" ]
+ENTRYPOINT ["/usr/bin/k8s-await-election", "/cozystack/scripts/installer.sh" ]

@@ -1,14 +0,0 @@

|

||||

{

|

||||

"containerimage.config.digest": "sha256:5c7f51a9cbc945c13d52157035eba6ba4b6f3b68b76280f8e64b4f6ba239db1a",

|

||||

"containerimage.descriptor": {

|

||||

"mediaType": "application/vnd.docker.distribution.manifest.v2+json",

|

||||

"digest": "sha256:7cda3480faf0539ed4a3dd252aacc7a997645d3a390ece377c36cf55f9e57e11",

|

||||

"size": 2074,

|

||||

"platform": {

|

||||

"architecture": "amd64",

|

||||

"os": "linux"

|

||||

}

|

||||

},

|

||||

"containerimage.digest": "sha256:7cda3480faf0539ed4a3dd252aacc7a997645d3a390ece377c36cf55f9e57e11",

|

||||

"image.name": "ghcr.io/aenix-io/cozystack/installer:v0.0.2"

|

||||

}

|

||||

@@ -1 +0,0 @@

|

||||

ghcr.io/aenix-io/cozystack/installer:v0.0.2

|

||||

@@ -1,14 +1,4 @@

|

||||

{

|

||||

"containerimage.config.digest": "sha256:cb8cb211017e51f6eb55604287c45cbf6ed8add5df482aaebff3d493a11b5a76",

|

||||

"containerimage.descriptor": {

|

||||

"mediaType": "application/vnd.docker.distribution.manifest.v2+json",

|

||||

"digest": "sha256:3be72cdce2f4ab4886a70fb7b66e4518a1fe4ba0771319c96fa19a0d6f409602",

|

||||

"size": 1488,

|

||||

"platform": {

|

||||

"architecture": "amd64",

|

||||

"os": "linux"

|

||||

}

|

||||

},

|

||||

"containerimage.digest": "sha256:3be72cdce2f4ab4886a70fb7b66e4518a1fe4ba0771319c96fa19a0d6f409602",

|

||||

"image.name": "ghcr.io/aenix-io/cozystack/matchbox:v0.0.2"

|

||||

"containerimage.config.digest": "sha256:b869a6324f9c0e6d1dd48eee67cbe3842ee14efd59bdde477736ad2f90568ff7",

|

||||

"containerimage.digest": "sha256:c30b237c5fa4fbbe47e1aba56e8f99569fe865620aa1953f31fc373794123cd7"

|

||||

}

|

||||

@@ -1 +1 @@

|

||||

ghcr.io/aenix-io/cozystack/matchbox:v0.0.2

|

||||

ghcr.io/aenix-io/cozystack/matchbox:v1.6.4

|

||||

|

||||

@@ -41,8 +41,6 @@ spec:

|

||||

selector:

|

||||

matchLabels:

|

||||

app: cozystack

|

||||

strategy:

|

||||

type: Recreate

|

||||

template:

|

||||

metadata:

|

||||

labels:

|

||||

@@ -52,14 +50,26 @@ spec:

|

||||

serviceAccountName: cozystack

|

||||

containers:

|

||||

- name: cozystack

|

||||

image: "{{ .Files.Get "images/installer.tag" | trim }}@{{ index (.Files.Get "images/installer.json" | fromJson) "containerimage.digest" }}"

|

||||

image: "{{ .Files.Get "images/cozystack.tag" | trim }}@{{ index (.Files.Get "images/cozystack.json" | fromJson) "containerimage.digest" }}"

|

||||

env:

|

||||

- name: KUBERNETES_SERVICE_HOST

|

||||

value: localhost

|

||||

- name: KUBERNETES_SERVICE_PORT

|

||||

value: "7445"

|

||||

- name: K8S_AWAIT_ELECTION_ENABLED

|

||||

value: "1"

|

||||

- name: K8S_AWAIT_ELECTION_NAME

|

||||

value: cozystack

|

||||

- name: K8S_AWAIT_ELECTION_LOCK_NAME

|

||||

value: cozystack

|

||||

- name: K8S_AWAIT_ELECTION_LOCK_NAMESPACE

|

||||

value: cozy-system

|

||||

- name: K8S_AWAIT_ELECTION_IDENTITY

|

||||

valueFrom:

|

||||

fieldRef:

|

||||

fieldPath: metadata.name

|

||||

- name: darkhttpd

|

||||

image: "{{ .Files.Get "images/installer.tag" | trim }}@{{ index (.Files.Get "images/installer.json" | fromJson) "containerimage.digest" }}"

|

||||

image: "{{ .Files.Get "images/cozystack.tag" | trim }}@{{ index (.Files.Get "images/cozystack.json" | fromJson) "containerimage.digest" }}"

|

||||

command:

|

||||

- /usr/bin/darkhttpd

|

||||

- /cozystack/assets

|

||||

|

||||
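
The `image:` template in this hunk joins the trimmed contents of `images/cozystack.tag` with the `containerimage.digest` field of `images/cozystack.json`, which yields a `tag@digest` reference. A rough shell equivalent, assuming the metadata file keeps each key on its own line as it does in this diff; `image_ref` is an illustrative name, and a JSON-aware tool would be more robust than `sed`:

```bash
#!/usr/bin/env bash
# Build "<tag>@<digest>" from a .tag file and a buildx metadata .json file.
# $1 = path to the .tag file, $2 = path to the .json file.
image_ref() {
  local tag digest
  # Strip whitespace; for a single-token tag this matches Helm's `trim`.
  tag="$(tr -d '[:space:]' < "$1")"
  # Pick the value of "containerimage.digest" (not "containerimage.config.digest").
  digest="$(sed -n 's/.*"containerimage\.digest": *"\([^"]*\)".*/\1/p' "$2")"
  printf '%s@%s\n' "$tag" "$digest"
}

# Sample files reproducing the values committed in this change.
tmp="$(mktemp -d)"
printf '%s\n' 'ghcr.io/aenix-io/cozystack/cozystack:v0.1.0' > "$tmp/cozystack.tag"
cat > "$tmp/cozystack.json" <<'EOF'
{
  "containerimage.config.digest": "sha256:ec8a4983a663f06a1503507482667a206e83e0d8d3663dff60ced9221855d6b0",
  "containerimage.digest": "sha256:abb7b2fbc1f143c922f2a35afc4423a74b2b63c0bddfe620750613ed835aa861"
}
EOF
image_ref "$tmp/cozystack.tag" "$tmp/cozystack.json"
rm -rf "$tmp"
```

Pinning by digest in addition to the tag means the Deployment keeps pulling the exact image that was built, even if the tag is later pushed again.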

@@ -646,6 +646,20 @@ spec:

|

||||

namespace: cozy-cilium

|

||||

- name: kubeovn

|

||||

namespace: cozy-kubeovn

|

||||

{{- if .Capabilities.APIVersions.Has "source.toolkit.fluxcd.io/v1beta2" }}

|

||||

{{- with (lookup "source.toolkit.fluxcd.io/v1beta2" "HelmRepository" "cozy-public" "").items }}

|

||||

values:

|

||||

kubeapps:

|

||||

redis:

|

||||

master:

|

||||

podAnnotations:

|

||||

{{- range $index, $repo := . }}

|

||||

{{- with (($repo.status).artifact).revision }}

|

||||

repository.cozystack.io/{{ $repo.metadata.name }}: {{ quote . }}

|

||||

{{- end }}

|

||||

{{- end }}

|

||||

{{- end }}

|

||||

{{- end }}

|

||||

---

|

||||

apiVersion: helm.toolkit.fluxcd.io/v2beta1

|

||||

kind: HelmRelease

|

||||

|

||||

@@ -1,131 +1,88 @@

|

||||

annotations:

|

||||

artifacthub.io/crds: |

|

||||

- kind: CiliumNetworkPolicy

|

||||

version: v2

|

||||

name: ciliumnetworkpolicies.cilium.io

|

||||

displayName: Cilium Network Policy

|

||||

description: |

|

||||

Cilium Network Policies provide additional functionality beyond what

|

||||

is provided by standard Kubernetes NetworkPolicy such as the ability

|

||||

to allow traffic based on FQDNs, or to filter at Layer 7.

|

||||

- kind: CiliumClusterwideNetworkPolicy

|

||||

version: v2

|

||||

name: ciliumclusterwidenetworkpolicies.cilium.io

|

||||

displayName: Cilium Clusterwide Network Policy

|

||||

description: |

|

||||

Cilium Clusterwide Network Policies support configuring network traffic

|

||||

policiies across the entire cluster, including applying node firewalls.

|

||||

- kind: CiliumExternalWorkload

|

||||

version: v2

|

||||

name: ciliumexternalworkloads.cilium.io

|

||||

displayName: Cilium External Workload

|

||||

description: |

|

||||

Cilium External Workload supports configuring the ability for external

|

||||

non-Kubernetes workloads to join the cluster.

|

||||

- kind: CiliumLocalRedirectPolicy

|

||||

version: v2

|

||||

name: ciliumlocalredirectpolicies.cilium.io

|

||||

displayName: Cilium Local Redirect Policy

|

||||

description: |

|

||||

Cilium Local Redirect Policy allows local redirects to be configured

|

||||

within a node to support use cases like Node-Local DNS or KIAM.

|

||||

- kind: CiliumNode

|

||||

version: v2

|

||||

name: ciliumnodes.cilium.io

|

||||

displayName: Cilium Node

|

||||

description: |

|

||||

Cilium Node represents a node managed by Cilium. It contains a

|

||||

specification to control various node specific configuration aspects

|

||||

and a status section to represent the status of the node.

|

||||

- kind: CiliumIdentity

|

||||

version: v2

|

||||

name: ciliumidentities.cilium.io

|

||||

displayName: Cilium Identity

|

||||

description: |

|

||||

Cilium Identity allows introspection into security identities that

|

||||

Cilium allocates which identify sets of labels that are assigned to

|

||||

individual endpoints in the cluster.

|

||||

- kind: CiliumEndpoint

|

||||

version: v2

|

||||

name: ciliumendpoints.cilium.io

|

||||

displayName: Cilium Endpoint

|

||||

description: |

|

||||

Cilium Endpoint represents the status of individual pods or nodes in

|

||||

the cluster which are managed by Cilium, including enforcement status,

|

||||

IP addressing and whether the networking is succesfully operational.

|

||||

- kind: CiliumEndpointSlice

|

||||

version: v2alpha1

|

||||

name: ciliumendpointslices.cilium.io

|

||||

displayName: Cilium Endpoint Slice

|

||||

description: |

|

||||

Cilium Endpoint Slice represents the status of groups of pods or nodes

|

||||

in the cluster which are managed by Cilium, including enforcement status,

|

||||

IP addressing and whether the networking is succesfully operational.

|

||||

- kind: CiliumEgressGatewayPolicy

|

||||

version: v2

|

||||

name: ciliumegressgatewaypolicies.cilium.io

|

||||

displayName: Cilium Egress Gateway Policy

|

||||

description: |

|

||||

Cilium Egress Gateway Policy provides control over the way that traffic

|

||||

leaves the cluster and which source addresses to use for that traffic.

|

||||

- kind: CiliumClusterwideEnvoyConfig

|

||||

version: v2

|

||||

name: ciliumclusterwideenvoyconfigs.cilium.io

|

||||

displayName: Cilium Clusterwide Envoy Config

|

||||

description: |

|

||||

Cilium Clusterwide Envoy Config specifies Envoy resources and K8s service mappings

|

||||

to be provisioned into Cilium host proxy instances in cluster context.

|

||||

- kind: CiliumEnvoyConfig

|

||||

version: v2

|

||||

name: ciliumenvoyconfigs.cilium.io

|

||||

displayName: Cilium Envoy Config

|

||||

description: |

|

||||

Cilium Envoy Config specifies Envoy resources and K8s service mappings

|

||||

to be provisioned into Cilium host proxy instances in namespace context.

|

||||

- kind: CiliumBGPPeeringPolicy

|

||||

version: v2alpha1

|

||||

name: ciliumbgppeeringpolicies.cilium.io

|

||||

displayName: Cilium BGP Peering Policy

|

||||

description: |

|

||||

Cilium BGP Peering Policy instructs Cilium to create specific BGP peering

|

||||

configurations.

|

||||

- kind: CiliumLoadBalancerIPPool

|

||||

version: v2alpha1

|

||||

name: ciliumloadbalancerippools.cilium.io

|

||||

displayName: Cilium Load Balancer IP Pool

|

||||

description: |

|

||||

Defining a Cilium Load Balancer IP Pool instructs Cilium to assign IPs to LoadBalancer Services.

|

||||

- kind: CiliumNodeConfig

|

||||

version: v2alpha1

|

||||

name: ciliumnodeconfigs.cilium.io

|

||||

displayName: Cilium Node Configuration

|

||||

description: |

|

||||

CiliumNodeConfig is a list of configuration key-value pairs. It is applied to

|

||||

nodes indicated by a label selector.

|

||||

- kind: CiliumCIDRGroup

|

||||

version: v2alpha1

|

||||

name: ciliumcidrgroups.cilium.io

|

||||

displayName: Cilium CIDR Group

|

||||

description: |

|

||||

CiliumCIDRGroup is a list of CIDRs that can be referenced as a single entity from CiliumNetworkPolicies.

|

||||

- kind: CiliumL2AnnouncementPolicy

|

||||

version: v2alpha1

|

||||

name: ciliuml2announcementpolicies.cilium.io

|

||||

displayName: Cilium L2 Announcement Policy

|

||||

description: |

|

||||

CiliumL2AnnouncementPolicy is a policy which determines which service IPs will be announced to

|

||||

the local area network, by which nodes, and via which interfaces.

|

||||

- kind: CiliumPodIPPool

|

||||

version: v2alpha1

|

||||

name: ciliumpodippools.cilium.io

|

||||

displayName: Cilium Pod IP Pool

|

||||

description: |

|

||||

CiliumPodIPPool defines an IP pool that can be used for pooled IPAM (i.e. the multi-pool IPAM mode).

|

||||

artifacthub.io/crds: "- kind: CiliumNetworkPolicy\n version: v2\n name: ciliumnetworkpolicies.cilium.io\n

|

||||

\ displayName: Cilium Network Policy\n description: |\n Cilium Network Policies

|

||||

provide additional functionality beyond what\n is provided by standard Kubernetes

|

||||

NetworkPolicy such as the ability\n to allow traffic based on FQDNs, or to

|

||||

filter at Layer 7.\n- kind: CiliumClusterwideNetworkPolicy\n version: v2\n name:

|

||||

ciliumclusterwidenetworkpolicies.cilium.io\n displayName: Cilium Clusterwide

|

||||

Network Policy\n description: |\n Cilium Clusterwide Network Policies support

|

||||

configuring network traffic\n policiies across the entire cluster, including

|

||||

applying node firewalls.\n- kind: CiliumExternalWorkload\n version: v2\n name:

|

||||

ciliumexternalworkloads.cilium.io\n displayName: Cilium External Workload\n description:

|

||||

|\n Cilium External Workload supports configuring the ability for external\n

|

||||

\ non-Kubernetes workloads to join the cluster.\n- kind: CiliumLocalRedirectPolicy\n

|

||||

\ version: v2\n name: ciliumlocalredirectpolicies.cilium.io\n displayName: Cilium

|

||||

Local Redirect Policy\n description: |\n Cilium Local Redirect Policy allows

|

||||

local redirects to be configured\n within a node to support use cases like

|

||||

Node-Local DNS or KIAM.\n- kind: CiliumNode\n version: v2\n name: ciliumnodes.cilium.io\n

|

||||

\ displayName: Cilium Node\n description: |\n Cilium Node represents a node

|

||||

managed by Cilium. It contains a\n specification to control various node specific

|

||||

configuration aspects\n and a status section to represent the status of the

|

||||

node.\n- kind: CiliumIdentity\n version: v2\n name: ciliumidentities.cilium.io\n

|

||||

\ displayName: Cilium Identity\n description: |\n Cilium Identity allows introspection

|

||||

into security identities that\n Cilium allocates which identify sets of labels

|

||||

that are assigned to\n individual endpoints in the cluster.\n- kind: CiliumEndpoint\n

|

||||

\ version: v2\n name: ciliumendpoints.cilium.io\n displayName: Cilium Endpoint\n

|

||||

\ description: |\n Cilium Endpoint represents the status of individual pods

|

||||

or nodes in\n the cluster which are managed by Cilium, including enforcement

|

||||

status,\n IP addressing and whether the networking is successfully operational.\n-

|

||||

kind: CiliumEndpointSlice\n version: v2alpha1\n name: ciliumendpointslices.cilium.io\n

|

||||

\ displayName: Cilium Endpoint Slice\n description: |\n Cilium Endpoint Slice

|

||||

represents the status of groups of pods or nodes\n in the cluster which are

|

||||

managed by Cilium, including enforcement status,\n IP addressing and whether

|

||||

the networking is successfully operational.\n- kind: CiliumEgressGatewayPolicy\n

|

||||

\ version: v2\n name: ciliumegressgatewaypolicies.cilium.io\n displayName: Cilium

|

||||

Egress Gateway Policy\n description: |\n Cilium Egress Gateway Policy provides

|

||||

control over the way that traffic\n leaves the cluster and which source addresses

|

||||

to use for that traffic.\n- kind: CiliumClusterwideEnvoyConfig\n version: v2\n

|

||||

\ name: ciliumclusterwideenvoyconfigs.cilium.io\n displayName: Cilium Clusterwide

|

||||

Envoy Config\n description: |\n Cilium Clusterwide Envoy Config specifies

|

||||

Envoy resources and K8s service mappings\n to be provisioned into Cilium host

|

||||

proxy instances in cluster context.\n- kind: CiliumEnvoyConfig\n version: v2\n

|

||||

\ name: ciliumenvoyconfigs.cilium.io\n displayName: Cilium Envoy Config\n description:

|

||||

|\n Cilium Envoy Config specifies Envoy resources and K8s service mappings\n

|

||||

\ to be provisioned into Cilium host proxy instances in namespace context.\n-

|

||||

kind: CiliumBGPPeeringPolicy\n version: v2alpha1\n name: ciliumbgppeeringpolicies.cilium.io\n

|

||||

\ displayName: Cilium BGP Peering Policy\n description: |\n Cilium BGP Peering

|

||||

Policy instructs Cilium to create specific BGP peering\n configurations.\n-

|

||||

kind: CiliumBGPClusterConfig\n version: v2alpha1\n name: ciliumbgpclusterconfigs.cilium.io\n

|

||||

\ displayName: Cilium BGP Cluster Config\n description: |\n Cilium BGP Cluster

|

||||

Config instructs Cilium operator to create specific BGP cluster\n configurations.\n-

|

||||

kind: CiliumBGPPeerConfig\n version: v2alpha1\n name: ciliumbgppeerconfigs.cilium.io\n

|

||||

\ displayName: Cilium BGP Peer Config\n description: |\n CiliumBGPPeerConfig

|

||||

is a common set of BGP peer configurations. It can be referenced \n by multiple

|

||||

peers from CiliumBGPClusterConfig.\n- kind: CiliumBGPAdvertisement\n version:

|

||||

v2alpha1\n name: ciliumbgpadvertisements.cilium.io\n displayName: Cilium BGP

|

||||

Advertisement\n description: |\n CiliumBGPAdvertisement is used to define

|

||||

source of BGP advertisement as well as BGP attributes \n to be advertised with

|

||||

those prefixes.\n- kind: CiliumBGPNodeConfig\n version: v2alpha1\n name: ciliumbgpnodeconfigs.cilium.io\n

|

||||

\ displayName: Cilium BGP Node Config\n description: |\n CiliumBGPNodeConfig

|

||||

is read only node specific BGP configuration. It is constructed by Cilium operator.\n

|

||||

\ It will also contain node local BGP state information.\n- kind: CiliumBGPNodeConfigOverride\n

|

||||

\ version: v2alpha1\n name: ciliumbgpnodeconfigoverrides.cilium.io\n displayName:

|

||||

Cilium BGP Node Config Override\n description: |\n CiliumBGPNodeConfigOverride

|

||||

can be used to override node specific BGP configuration.\n- kind: CiliumLoadBalancerIPPool\n

|

||||

\ version: v2alpha1\n name: ciliumloadbalancerippools.cilium.io\n displayName:

|

||||

Cilium Load Balancer IP Pool\n description: |\n Defining a Cilium Load Balancer

|

||||

IP Pool instructs Cilium to assign IPs to LoadBalancer Services.\n- kind: CiliumNodeConfig\n

|

||||

\ version: v2alpha1\n name: ciliumnodeconfigs.cilium.io\n displayName: Cilium

|

||||

Node Configuration\n description: |\n CiliumNodeConfig is a list of configuration

|

||||

key-value pairs. It is applied to\n nodes indicated by a label selector.\n-

|

||||

kind: CiliumCIDRGroup\n version: v2alpha1\n name: ciliumcidrgroups.cilium.io\n

|

||||

\ displayName: Cilium CIDR Group\n description: |\n CiliumCIDRGroup is a list

|

||||

of CIDRs that can be referenced as a single entity from CiliumNetworkPolicies.\n-

|

||||

kind: CiliumL2AnnouncementPolicy\n version: v2alpha1\n name: ciliuml2announcementpolicies.cilium.io\n

|

||||

\ displayName: Cilium L2 Announcement Policy\n description: |\n CiliumL2AnnouncementPolicy

|

||||

is a policy which determines which service IPs will be announced to\n the local

|

||||

area network, by which nodes, and via which interfaces.\n- kind: CiliumPodIPPool\n

|

||||

\ version: v2alpha1\n name: ciliumpodippools.cilium.io\n displayName: Cilium

|

||||

Pod IP Pool\n description: |\n CiliumPodIPPool defines an IP pool that can

|

||||

be used for pooled IPAM (i.e. the multi-pool IPAM mode).\n"

|

||||

apiVersion: v2

|

||||

appVersion: 1.14.5

|

||||

appVersion: 1.15.2

|

||||

description: eBPF-based Networking, Security, and Observability

|

||||

home: https://cilium.io/

|

||||

icon: https://cdn.jsdelivr.net/gh/cilium/cilium@v1.14/Documentation/images/logo-solo.svg

|

||||

icon: https://cdn.jsdelivr.net/gh/cilium/cilium@v1.15/Documentation/images/logo-solo.svg

|

||||

keywords:

|

||||

- BPF

|

||||

- eBPF

|

||||

@@ -138,4 +95,4 @@ kubeVersion: '>= 1.16.0-0'

|

||||

name: cilium

|

||||

sources:

|

||||

- https://github.com/cilium/cilium

|

||||

version: 1.14.5

|

||||

version: 1.15.2

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

# cilium

|

||||

|

||||

|

||||

|

||||

|

||||

Cilium is open source software for providing and transparently securing

|

||||

network connectivity and loadbalancing between application workloads such as

|

||||

@@ -60,24 +60,30 @@ contributors across the globe, there is almost always someone available to help.

|

||||

| aksbyocni.enabled | bool | `false` | Enable AKS BYOCNI integration. Note that this is incompatible with AKS clusters not created in BYOCNI mode: use Azure integration (`azure.enabled`) instead. |
| alibabacloud.enabled | bool | `false` | Enable AlibabaCloud ENI integration |
| annotateK8sNode | bool | `false` | Annotate k8s node upon initialization with Cilium's metadata. |
| annotations | object | `{}` | Annotations to be added to all top-level cilium-agent objects (resources under templates/cilium-agent) |
| apiRateLimit | string | `nil` | The api-rate-limit option can be used to overwrite individual settings of the default configuration for rate limiting calls to the Cilium Agent API |
| authentication.enabled | bool | `true` | Enable authentication processing and garbage collection. Note that if disabled, policy enforcement will still block requests that require authentication. But the resulting authentication requests for these requests will not be processed, therefore the requests not be allowed. |
| authentication.gcInterval | string | `"5m0s"` | Interval for garbage collection of auth map entries. |
| authentication.mutual.connectTimeout | string | `"5s"` | Timeout for connecting to the remote node TCP socket |
| authentication.mutual.port | int | `4250` | Port on the agent where mutual authentication handshakes between agents will be performed |
| authentication.mutual.spire.adminSocketPath | string | `"/run/spire/sockets/admin.sock"` | SPIRE socket path where the SPIRE delegated api agent is listening |

|

||||