mirror of

https://github.com/outbackdingo/cozystack.git

synced 2026-02-05 08:17:59 +00:00

Compare commits

6 Commits

v0.32.0-be

...

changelogs

| Author | SHA1 | Date | |
|---|---|---|---|
| | 4a0b11fa95 | | |
| | dcebfe9b58 | | |
| | 1809b0056b | | |
| | 3c5393ec2d | | |
| | 244f814f93 | | |
| | 6ef08e38cd | | |

7

.github/workflows/pre-commit.yml

vendored

@@ -30,13 +30,12 @@ jobs:
run: |
sudo apt update
sudo apt install curl -y
curl -fsSL https://deb.nodesource.com/setup_16.x | sudo -E bash -
sudo apt install nodejs -y
sudo apt install npm -y

git clone --branch 2.7.0 --depth 1 https://github.com/bitnami/readme-generator-for-helm.git
git clone https://github.com/bitnami/readme-generator-for-helm
cd ./readme-generator-for-helm
npm install
npm install -g @yao-pkg/pkg
npm install -g pkg
pkg . -o /usr/local/bin/readme-generator

- name: Run pre-commit hooks

12

README.md

@@ -12,15 +12,11 @@

|

||||

|

||||

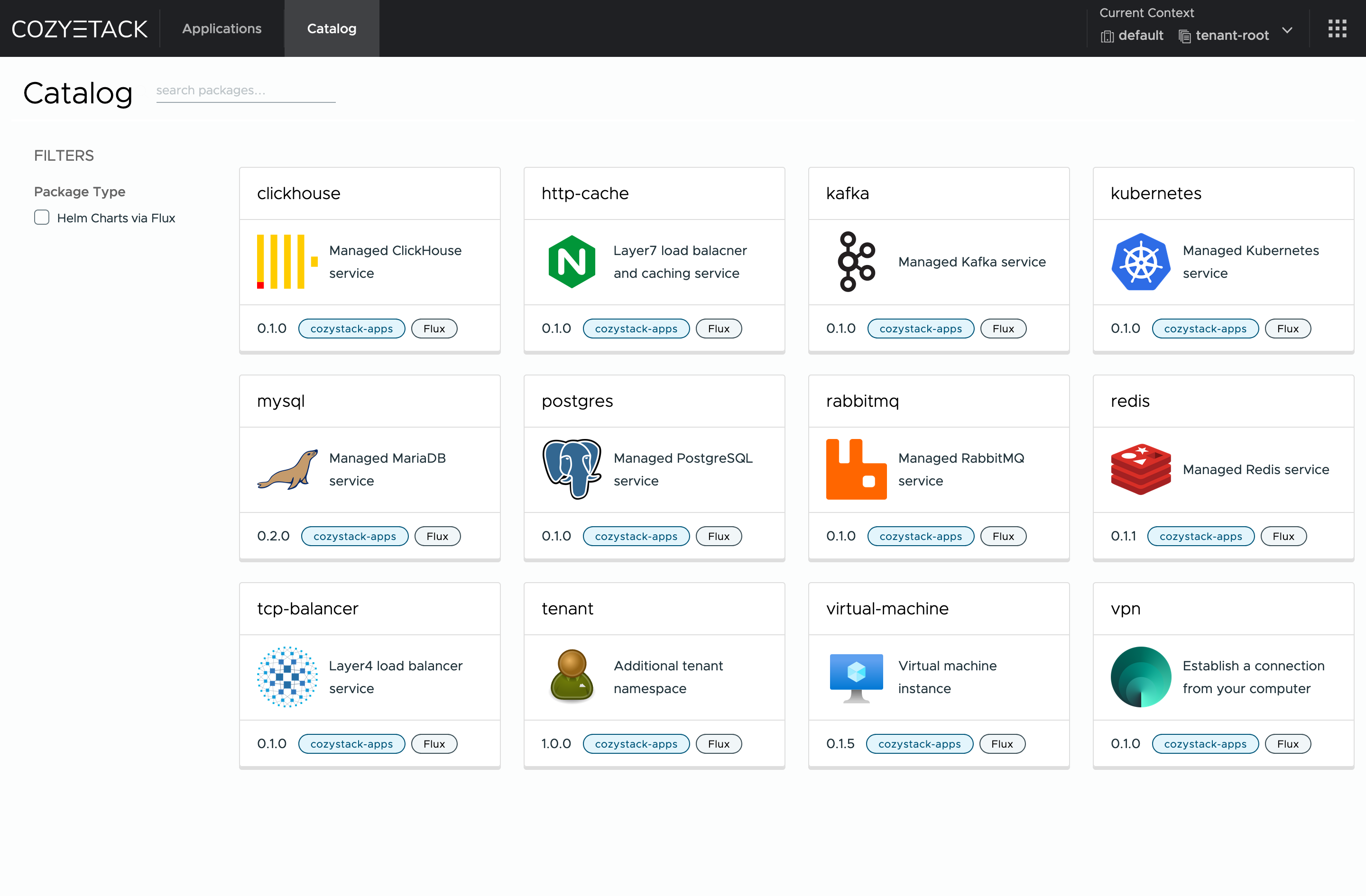

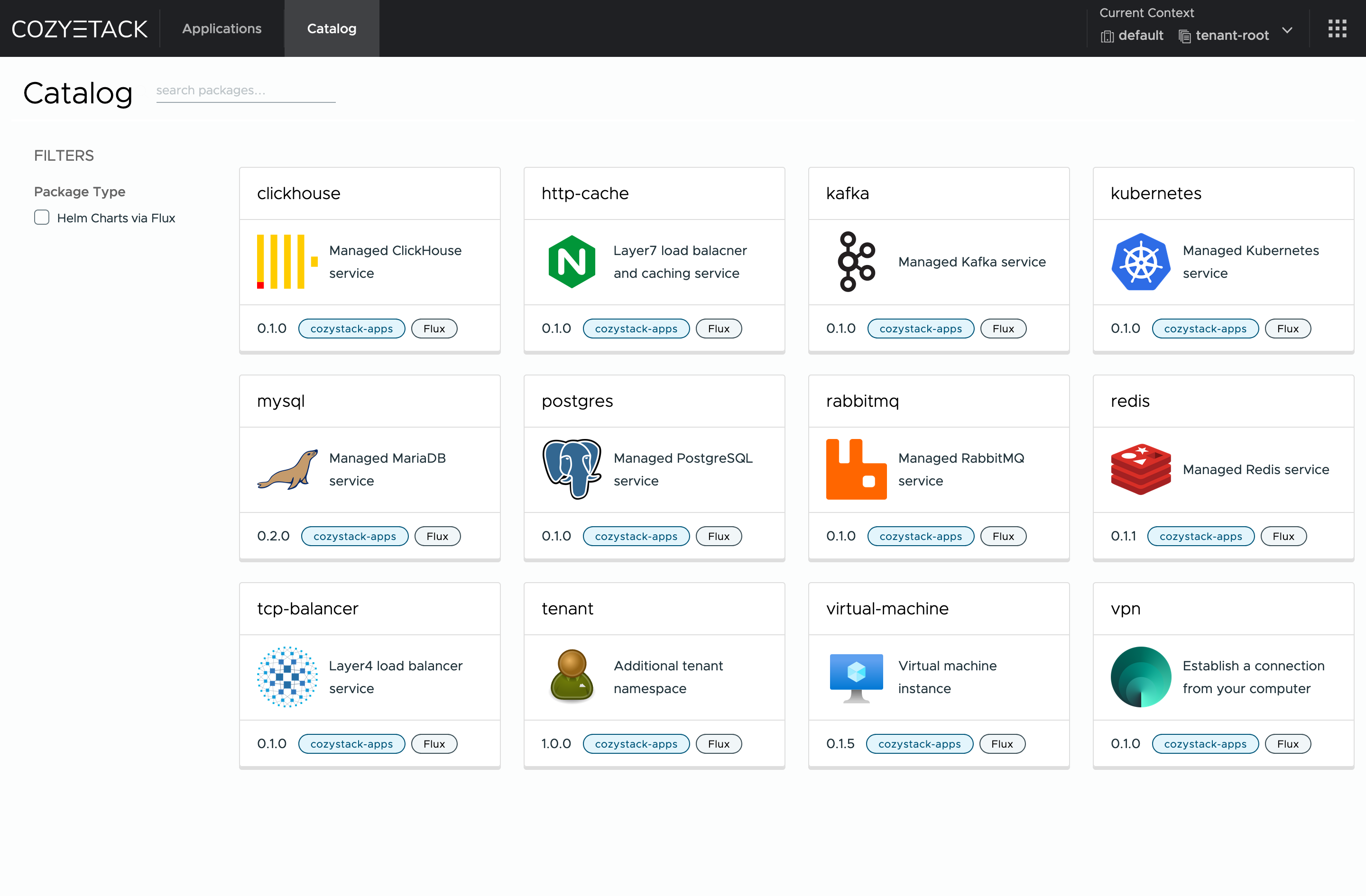

**Cozystack** is a free PaaS platform and framework for building clouds.

|

||||

|

||||

Cozystack is a [CNCF Sandbox Level Project](https://www.cncf.io/sandbox-projects/) that was originally built and sponsored by [Ænix](https://aenix.io/).

|

||||

|

||||

With Cozystack, you can transform a bunch of servers into an intelligent system with a simple REST API for spawning Kubernetes clusters,

|

||||

Database-as-a-Service, virtual machines, load balancers, HTTP caching services, and other services with ease.

|

||||

|

||||

Use Cozystack to build your own cloud or provide a cost-effective development environment.

|

||||

|

||||

|

||||

|

||||

## Use-Cases

|

||||

|

||||

* [**Using Cozystack to build a public cloud**](https://cozystack.io/docs/guides/use-cases/public-cloud/)

|

||||

@@ -32,6 +28,9 @@ You can use Cozystack as a platform to build a private cloud powered by Infrastr

|

||||

* [**Using Cozystack as a Kubernetes distribution**](https://cozystack.io/docs/guides/use-cases/kubernetes-distribution/)

|

||||

You can use Cozystack as a Kubernetes distribution for Bare Metal

|

||||

|

||||

## Screenshot

|

||||

|

||||

|

||||

|

||||

## Documentation

|

||||

|

||||

@@ -60,10 +59,7 @@ Commits are used to generate the changelog, and their author will be referenced

|

||||

|

||||

If you have **Feature Requests** please use the [Discussion's Feature Request section](https://github.com/cozystack/cozystack/discussions/categories/feature-requests).

|

||||

|

||||

## Community

|

||||

|

||||

You are welcome to join our [Telegram group](https://t.me/cozystack) and come to our weekly community meetings.

|

||||

Add them to your [Google Calendar](https://calendar.google.com/calendar?cid=ZTQzZDIxZTVjOWI0NWE5NWYyOGM1ZDY0OWMyY2IxZTFmNDMzZTJlNjUzYjU2ZGJiZGE3NGNhMzA2ZjBkMGY2OEBncm91cC5jYWxlbmRhci5nb29nbGUuY29t) or [iCal](https://calendar.google.com/calendar/ical/e43d21e5c9b45a95f28c5d649c2cb1e1f433e2e653b56dbbda74ca306f0d0f68%40group.calendar.google.com/public/basic.ics) for convenience.

|

||||

You are welcome to join our weekly community meetings (just add this events to your [Google Calendar](https://calendar.google.com/calendar?cid=ZTQzZDIxZTVjOWI0NWE5NWYyOGM1ZDY0OWMyY2IxZTFmNDMzZTJlNjUzYjU2ZGJiZGE3NGNhMzA2ZjBkMGY2OEBncm91cC5jYWxlbmRhci5nb29nbGUuY29t) or [iCal](https://calendar.google.com/calendar/ical/e43d21e5c9b45a95f28c5d649c2cb1e1f433e2e653b56dbbda74ca306f0d0f68%40group.calendar.google.com/public/basic.ics)) or [Telegram group](https://t.me/cozystack).

|

||||

|

||||

## License

|

||||

|

||||

|

||||

8

docs/changelogs/v0.31.1.md

Normal file

@@ -0,0 +1,8 @@

## Fixes

* [build] Update Talos Linux v1.10.3 and fix assets. (@kvaps in https://github.com/cozystack/cozystack/pull/1006)
* [ci] Fix uploading released artifacts to GitHub. (@kvaps in https://github.com/cozystack/cozystack/pull/1009)
* [ci] Separate build and testing jobs. (@kvaps in https://github.com/cozystack/cozystack/pull/1005)
* [docs] Write a full release post for v0.31.1. (@NickVolynkin in https://github.com/cozystack/cozystack/pull/999)

**Full Changelog**: https://github.com/cozystack/cozystack/compare/v0.31.0...v0.31.1

13

docs/changelogs/v0.31.2.md

Normal file

@@ -0,0 +1,13 @@

## Security

* Resolve a security problem that allowed a tenant administrator to gain enhanced privileges outside the tenant. (@kvaps in https://github.com/cozystack/cozystack/pull/1062, backported in https://github.com/cozystack/cozystack/pull/1066)

## Fixes

* [platform] Fix dependencies in `distro-full` bundle. (@klinch0 in https://github.com/cozystack/cozystack/pull/1056, backported in https://github.com/cozystack/cozystack/pull/1064)
* [platform] Fix RBAC for annotating namespaces. (@kvaps in https://github.com/cozystack/cozystack/pull/1031, backported in https://github.com/cozystack/cozystack/pull/1037)
* [platform] Reduce system resource consumption by using smaller resource presets for VerticalPodAutoscaler, SeaweedFS, and KubeOVN. (@klinch0 in https://github.com/cozystack/cozystack/pull/1054, backported in https://github.com/cozystack/cozystack/pull/1058)
* [dashboard] Fix a number of issues in the Cozystack Dashboard (@kvaps in https://github.com/cozystack/cozystack/pull/1042, backported in https://github.com/cozystack/cozystack/pull/1066)
* [apps] Specify minimal working resource presets. (@kvaps in https://github.com/cozystack/cozystack/pull/1040, backported in https://github.com/cozystack/cozystack/pull/1041)
* [apps] Update built-in documentation and configuration reference for managed Clickhouse application. (@NickVolynkin in https://github.com/cozystack/cozystack/pull/1059, backported in https://github.com/cozystack/cozystack/pull/1065)
ы

13

go.mod

@@ -37,7 +37,6 @@ require (

|

||||

github.com/coreos/go-systemd/v22 v22.5.0 // indirect

|

||||

github.com/davecgh/go-spew v1.1.2-0.20180830191138-d8f796af33cc // indirect

|

||||

github.com/emicklei/go-restful/v3 v3.11.0 // indirect

|

||||

github.com/evanphx/json-patch v4.12.0+incompatible // indirect

|

||||

github.com/evanphx/json-patch/v5 v5.9.0 // indirect

|

||||

github.com/felixge/httpsnoop v1.0.4 // indirect

|

||||

github.com/fluxcd/pkg/apis/kustomize v1.6.1 // indirect

|

||||

@@ -92,14 +91,14 @@ require (

|

||||

go.opentelemetry.io/proto/otlp v1.3.1 // indirect

|

||||

go.uber.org/multierr v1.11.0 // indirect

|

||||

go.uber.org/zap v1.27.0 // indirect

|

||||

golang.org/x/crypto v0.31.0 // indirect

|

||||

golang.org/x/crypto v0.28.0 // indirect

|

||||

golang.org/x/exp v0.0.0-20240719175910-8a7402abbf56 // indirect

|

||||

golang.org/x/net v0.33.0 // indirect

|

||||

golang.org/x/net v0.30.0 // indirect

|

||||

golang.org/x/oauth2 v0.23.0 // indirect

|

||||

golang.org/x/sync v0.10.0 // indirect

|

||||

golang.org/x/sys v0.28.0 // indirect

|

||||

golang.org/x/term v0.27.0 // indirect

|

||||

golang.org/x/text v0.21.0 // indirect

|

||||

golang.org/x/sync v0.8.0 // indirect

|

||||

golang.org/x/sys v0.26.0 // indirect

|

||||

golang.org/x/term v0.25.0 // indirect

|

||||

golang.org/x/text v0.19.0 // indirect

|

||||

golang.org/x/time v0.7.0 // indirect

|

||||

golang.org/x/tools v0.26.0 // indirect

|

||||

gomodules.xyz/jsonpatch/v2 v2.4.0 // indirect

|

||||

|

||||

28

go.sum

@@ -26,8 +26,8 @@ github.com/dustin/go-humanize v1.0.1 h1:GzkhY7T5VNhEkwH0PVJgjz+fX1rhBrR7pRT3mDkp

|

||||

github.com/dustin/go-humanize v1.0.1/go.mod h1:Mu1zIs6XwVuF/gI1OepvI0qD18qycQx+mFykh5fBlto=

|

||||

github.com/emicklei/go-restful/v3 v3.11.0 h1:rAQeMHw1c7zTmncogyy8VvRZwtkmkZ4FxERmMY4rD+g=

|

||||

github.com/emicklei/go-restful/v3 v3.11.0/go.mod h1:6n3XBCmQQb25CM2LCACGz8ukIrRry+4bhvbpWn3mrbc=

|

||||

github.com/evanphx/json-patch v4.12.0+incompatible h1:4onqiflcdA9EOZ4RxV643DvftH5pOlLGNtQ5lPWQu84=

|

||||

github.com/evanphx/json-patch v4.12.0+incompatible/go.mod h1:50XU6AFN0ol/bzJsmQLiYLvXMP4fmwYFNcr97nuDLSk=

|

||||

github.com/evanphx/json-patch v0.5.2 h1:xVCHIVMUu1wtM/VkR9jVZ45N3FhZfYMMYGorLCR8P3k=

|

||||

github.com/evanphx/json-patch v0.5.2/go.mod h1:ZWS5hhDbVDyob71nXKNL0+PWn6ToqBHMikGIFbs31qQ=

|

||||

github.com/evanphx/json-patch/v5 v5.9.0 h1:kcBlZQbplgElYIlo/n1hJbls2z/1awpXxpRi0/FOJfg=

|

||||

github.com/evanphx/json-patch/v5 v5.9.0/go.mod h1:VNkHZ/282BpEyt/tObQO8s5CMPmYYq14uClGH4abBuQ=

|

||||

github.com/felixge/httpsnoop v1.0.4 h1:NFTV2Zj1bL4mc9sqWACXbQFVBBg2W3GPvqp8/ESS2Wg=

|

||||

@@ -212,8 +212,8 @@ go.uber.org/zap v1.27.0/go.mod h1:GB2qFLM7cTU87MWRP2mPIjqfIDnGu+VIO4V/SdhGo2E=

|

||||

golang.org/x/crypto v0.0.0-20190308221718-c2843e01d9a2/go.mod h1:djNgcEr1/C05ACkg1iLfiJU5Ep61QUkGW8qpdssI0+w=

|

||||

golang.org/x/crypto v0.0.0-20191011191535-87dc89f01550/go.mod h1:yigFU9vqHzYiE8UmvKecakEJjdnWj3jj499lnFckfCI=

|

||||

golang.org/x/crypto v0.0.0-20200622213623-75b288015ac9/go.mod h1:LzIPMQfyMNhhGPhUkYOs5KpL4U8rLKemX1yGLhDgUto=

|

||||

golang.org/x/crypto v0.31.0 h1:ihbySMvVjLAeSH1IbfcRTkD/iNscyz8rGzjF/E5hV6U=

|

||||

golang.org/x/crypto v0.31.0/go.mod h1:kDsLvtWBEx7MV9tJOj9bnXsPbxwJQ6csT/x4KIN4Ssk=

|

||||

golang.org/x/crypto v0.28.0 h1:GBDwsMXVQi34v5CCYUm2jkJvu4cbtru2U4TN2PSyQnw=

|

||||

golang.org/x/crypto v0.28.0/go.mod h1:rmgy+3RHxRZMyY0jjAJShp2zgEdOqj2AO7U0pYmeQ7U=

|

||||

golang.org/x/exp v0.0.0-20240719175910-8a7402abbf56 h1:2dVuKD2vS7b0QIHQbpyTISPd0LeHDbnYEryqj5Q1ug8=

|

||||

golang.org/x/exp v0.0.0-20240719175910-8a7402abbf56/go.mod h1:M4RDyNAINzryxdtnbRXRL/OHtkFuWGRjvuhBJpk2IlY=

|

||||

golang.org/x/mod v0.2.0/go.mod h1:s0Qsj1ACt9ePp/hMypM3fl4fZqREWJwdYDEqhRiZZUA=

|

||||

@@ -222,26 +222,26 @@ golang.org/x/net v0.0.0-20190404232315-eb5bcb51f2a3/go.mod h1:t9HGtf8HONx5eT2rtn

|

||||

golang.org/x/net v0.0.0-20190620200207-3b0461eec859/go.mod h1:z5CRVTTTmAJ677TzLLGU+0bjPO0LkuOLi4/5GtJWs/s=

|

||||

golang.org/x/net v0.0.0-20200226121028-0de0cce0169b/go.mod h1:z5CRVTTTmAJ677TzLLGU+0bjPO0LkuOLi4/5GtJWs/s=

|

||||

golang.org/x/net v0.0.0-20201021035429-f5854403a974/go.mod h1:sp8m0HH+o8qH0wwXwYZr8TS3Oi6o0r6Gce1SSxlDquU=

|

||||

golang.org/x/net v0.33.0 h1:74SYHlV8BIgHIFC/LrYkOGIwL19eTYXQ5wc6TBuO36I=

|

||||

golang.org/x/net v0.33.0/go.mod h1:HXLR5J+9DxmrqMwG9qjGCxZ+zKXxBru04zlTvWlWuN4=

|

||||

golang.org/x/net v0.30.0 h1:AcW1SDZMkb8IpzCdQUaIq2sP4sZ4zw+55h6ynffypl4=

|

||||

golang.org/x/net v0.30.0/go.mod h1:2wGyMJ5iFasEhkwi13ChkO/t1ECNC4X4eBKkVFyYFlU=

|

||||

golang.org/x/oauth2 v0.23.0 h1:PbgcYx2W7i4LvjJWEbf0ngHV6qJYr86PkAV3bXdLEbs=

|

||||

golang.org/x/oauth2 v0.23.0/go.mod h1:XYTD2NtWslqkgxebSiOHnXEap4TF09sJSc7H1sXbhtI=

|

||||

golang.org/x/sync v0.0.0-20190423024810-112230192c58/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=

|

||||

golang.org/x/sync v0.0.0-20190911185100-cd5d95a43a6e/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=

|

||||

golang.org/x/sync v0.0.0-20201020160332-67f06af15bc9/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=

|

||||

golang.org/x/sync v0.10.0 h1:3NQrjDixjgGwUOCaF8w2+VYHv0Ve/vGYSbdkTa98gmQ=

|

||||

golang.org/x/sync v0.10.0/go.mod h1:Czt+wKu1gCyEFDUtn0jG5QVvpJ6rzVqr5aXyt9drQfk=

|

||||

golang.org/x/sync v0.8.0 h1:3NFvSEYkUoMifnESzZl15y791HH1qU2xm6eCJU5ZPXQ=

|

||||

golang.org/x/sync v0.8.0/go.mod h1:Czt+wKu1gCyEFDUtn0jG5QVvpJ6rzVqr5aXyt9drQfk=

|

||||

golang.org/x/sys v0.0.0-20190215142949-d0b11bdaac8a/go.mod h1:STP8DvDyc/dI5b8T5hshtkjS+E42TnysNCUPdjciGhY=

|

||||

golang.org/x/sys v0.0.0-20190412213103-97732733099d/go.mod h1:h1NjWce9XRLGQEsW7wpKNCjG9DtNlClVuFLEZdDNbEs=

|

||||

golang.org/x/sys v0.0.0-20200930185726-fdedc70b468f/go.mod h1:h1NjWce9XRLGQEsW7wpKNCjG9DtNlClVuFLEZdDNbEs=

|

||||

golang.org/x/sys v0.28.0 h1:Fksou7UEQUWlKvIdsqzJmUmCX3cZuD2+P3XyyzwMhlA=

|

||||

golang.org/x/sys v0.28.0/go.mod h1:/VUhepiaJMQUp4+oa/7Zr1D23ma6VTLIYjOOTFZPUcA=

|

||||

golang.org/x/term v0.27.0 h1:WP60Sv1nlK1T6SupCHbXzSaN0b9wUmsPoRS9b61A23Q=

|

||||

golang.org/x/term v0.27.0/go.mod h1:iMsnZpn0cago0GOrHO2+Y7u7JPn5AylBrcoWkElMTSM=

|

||||

golang.org/x/sys v0.26.0 h1:KHjCJyddX0LoSTb3J+vWpupP9p0oznkqVk/IfjymZbo=

|

||||

golang.org/x/sys v0.26.0/go.mod h1:/VUhepiaJMQUp4+oa/7Zr1D23ma6VTLIYjOOTFZPUcA=

|

||||

golang.org/x/term v0.25.0 h1:WtHI/ltw4NvSUig5KARz9h521QvRC8RmF/cuYqifU24=

|

||||

golang.org/x/term v0.25.0/go.mod h1:RPyXicDX+6vLxogjjRxjgD2TKtmAO6NZBsBRfrOLu7M=

|

||||

golang.org/x/text v0.3.0/go.mod h1:NqM8EUOU14njkJ3fqMW+pc6Ldnwhi/IjpwHt7yyuwOQ=

|

||||

golang.org/x/text v0.3.3/go.mod h1:5Zoc/QRtKVWzQhOtBMvqHzDpF6irO9z98xDceosuGiQ=

|

||||

golang.org/x/text v0.21.0 h1:zyQAAkrwaneQ066sspRyJaG9VNi/YJ1NfzcGB3hZ/qo=

|

||||

golang.org/x/text v0.21.0/go.mod h1:4IBbMaMmOPCJ8SecivzSH54+73PCFmPWxNTLm+vZkEQ=

|

||||

golang.org/x/text v0.19.0 h1:kTxAhCbGbxhK0IwgSKiMO5awPoDQ0RpfiVYBfK860YM=

|

||||

golang.org/x/text v0.19.0/go.mod h1:BuEKDfySbSR4drPmRPG/7iBdf8hvFMuRexcpahXilzY=

|

||||

golang.org/x/time v0.7.0 h1:ntUhktv3OPE6TgYxXWv9vKvUSJyIFJlyohwbkEwPrKQ=

|

||||

golang.org/x/time v0.7.0/go.mod h1:3BpzKBy/shNhVucY/MWOyx10tF3SFh9QdLuxbVysPQM=

|

||||

golang.org/x/tools v0.0.0-20180917221912-90fa682c2a6e/go.mod h1:n7NCudcB/nEzxVGmLbDWY5pfWTLqBcC2KZ6jyYvM4mQ=

|

||||

|

||||

@@ -102,7 +102,7 @@ EOF

@test "Boot QEMU VMs" {
for i in 1 2 3; do
qemu-system-x86_64 -machine type=pc,accel=kvm -cpu host -smp 8 -m 24576 \
qemu-system-x86_64 -machine type=pc,accel=kvm -cpu host -smp 8 -m 16384 \
-device virtio-net,netdev=net0,mac=52:54:00:12:34:5${i} \
-netdev tap,id=net0,ifname=cozy-srv${i},script=no,downscript=no \
-drive file=srv${i}/system.img,if=virtio,format=raw \

@@ -248,24 +248,15 @@ func (r *WorkloadMonitorReconciler) reconcilePodForMonitor(

|

||||

ObjectMeta: metav1.ObjectMeta{

|

||||

Name: fmt.Sprintf("pod-%s", pod.Name),

|

||||

Namespace: pod.Namespace,

|

||||

Labels: map[string]string{},

|

||||

},

|

||||

}

|

||||

|

||||

metaLabels := r.getWorkloadMetadata(&pod)

|

||||

_, err := ctrl.CreateOrUpdate(ctx, r.Client, workload, func() error {

|

||||

// Update owner references with the new monitor

|

||||

updateOwnerReferences(workload.GetObjectMeta(), monitor)

|

||||

|

||||

// Copy labels from the Pod if needed

|

||||

for k, v := range pod.Labels {

|

||||

workload.Labels[k] = v

|

||||

}

|

||||

|

||||

// Add workload meta to labels

|

||||

for k, v := range metaLabels {

|

||||

workload.Labels[k] = v

|

||||

}

|

||||

workload.Labels = pod.Labels

|

||||

|

||||

// Fill Workload status fields:

|

||||

workload.Status.Kind = monitor.Spec.Kind

|

||||

@@ -442,12 +433,3 @@ func mapObjectToMonitor[T client.Object](_ T, c client.Client) func(ctx context.

|

||||

return requests

|

||||

}

|

||||

}

|

||||

|

||||

func (r *WorkloadMonitorReconciler) getWorkloadMetadata(obj client.Object) map[string]string {

|

||||

labels := make(map[string]string)

|

||||

annotations := obj.GetAnnotations()

|

||||

if instanceType, ok := annotations["kubevirt.io/cluster-instancetype-name"]; ok {

|

||||

labels["workloads.cozystack.io/kubevirt-vmi-instance-type"] = instanceType

|

||||

}

|

||||

return labels

|

||||

}
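Reviewer note: the two sides of the hunks above differ in how the Workload's labels are built. One side copies the Pod labels entry by entry and then adds labels derived from annotations; the other assigns `pod.Labels` directly, which makes both objects share one map. The following is a minimal sketch of the copy-and-merge variant using plain maps, not the controller's actual code. The annotation and label keys are taken from the diff; `workloadMetadata` and `mergeLabels` are hypothetical names chosen for illustration, and `u1.medium` is an arbitrary sample value.

```go
package main

import "fmt"

// Keys copied from the diff above.
const (
	instanceTypeAnnotation = "kubevirt.io/cluster-instancetype-name"
	instanceTypeLabel      = "workloads.cozystack.io/kubevirt-vmi-instance-type"
)

// workloadMetadata derives extra labels from an object's annotations.
// It returns an empty (non-nil) map when the annotation is absent.
func workloadMetadata(annotations map[string]string) map[string]string {
	labels := make(map[string]string)
	if instanceType, ok := annotations[instanceTypeAnnotation]; ok {
		labels[instanceTypeLabel] = instanceType
	}
	return labels
}

// mergeLabels (hypothetical helper) copies every source map into a fresh map,
// so the result never aliases any of its inputs. Later sources win on conflict.
func mergeLabels(sources ...map[string]string) map[string]string {
	merged := make(map[string]string)
	for _, src := range sources {
		for k, v := range src {
			merged[k] = v
		}
	}
	return merged
}

func main() {
	podLabels := map[string]string{"app": "vm"}
	annotations := map[string]string{instanceTypeAnnotation: "u1.medium"}

	workloadLabels := mergeLabels(podLabels, workloadMetadata(annotations))

	// The Pod's own label map is left untouched by the merge.
	fmt.Println(workloadLabels, podLabels)
}
```

Copying into a fresh map costs one extra allocation per reconcile, but it means later writes to the Workload's labels cannot leak back into the Pod object held by the caller.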

|

||||

|

||||

@@ -16,10 +16,10 @@ type: application

|

||||

# This is the chart version. This version number should be incremented each time you make changes

|

||||

# to the chart and its templates, including the app version.

|

||||

# Versions are expected to follow Semantic Versioning (https://semver.org/)

|

||||

version: 0.2.0

|

||||

version: 0.1.0

|

||||

|

||||

# This is the version number of the application being deployed. This version number should be

|

||||

# incremented each time you make changes to the application. Versions are not expected to

|

||||

# follow Semantic Versioning. They should reflect the version the application is using.

|

||||

# It is recommended to use it with quotes.

|

||||

appVersion: "0.2.0"

|

||||

appVersion: "0.1.0"

|

||||

|

||||

@@ -1 +0,0 @@

|

||||

../../../library/cozy-lib

|

||||

@@ -18,14 +18,3 @@ rules:

|

||||

resourceNames:

|

||||

- {{ .Release.Name }}-ui

|

||||

verbs: ["get", "list", "watch"]

|

||||

---

|

||||

kind: RoleBinding

|

||||

apiVersion: rbac.authorization.k8s.io/v1

|

||||

metadata:

|

||||

name: {{ .Release.Name }}-dashboard-resources

|

||||

subjects:

|

||||

{{ include "cozy-lib.rbac.subjectsForTenantAndAccessLevel" (list "use" .Release.Namespace) }}

|

||||

roleRef:

|

||||

kind: Role

|

||||

name: {{ .Release.Name }}-dashboard-resources

|

||||

apiGroup: rbac.authorization.k8s.io

|

||||

|

||||

@@ -16,7 +16,7 @@ type: application

|

||||

# This is the chart version. This version number should be incremented each time you make changes

|

||||

# to the chart and its templates, including the app version.

|

||||

# Versions are expected to follow Semantic Versioning (https://semver.org/)

|

||||

version: 0.10.0

|

||||

version: 0.9.2

|

||||

|

||||

# This is the version number of the application being deployed. This version number should be

|

||||

# incremented each time you make changes to the application. Versions are not expected to

|

||||

|

||||

@@ -1 +1 @@

|

||||

ghcr.io/cozystack/cozystack/clickhouse-backup:0.10.0@sha256:3faf7a4cebf390b9053763107482de175aa0fdb88c1e77424fd81100b1c3a205

|

||||

ghcr.io/cozystack/cozystack/clickhouse-backup:0.9.2@sha256:3faf7a4cebf390b9053763107482de175aa0fdb88c1e77424fd81100b1c3a205

|

||||

|

||||

@@ -24,14 +24,3 @@ rules:

|

||||

resourceNames:

|

||||

- {{ .Release.Name }}

|

||||

verbs: ["get", "list", "watch"]

|

||||

---

|

||||

kind: RoleBinding

|

||||

apiVersion: rbac.authorization.k8s.io/v1

|

||||

metadata:

|

||||

name: {{ .Release.Name }}-dashboard-resources

|

||||

subjects:

|

||||

{{ include "cozy-lib.rbac.subjectsForTenantAndAccessLevel" (list "use" .Release.Namespace) }}

|

||||

roleRef:

|

||||

kind: Role

|

||||

name: {{ .Release.Name }}-dashboard-resources

|

||||

apiGroup: rbac.authorization.k8s.io

|

||||

|

||||

@@ -16,7 +16,7 @@ type: application

|

||||

# This is the chart version. This version number should be incremented each time you make changes

|

||||

# to the chart and its templates, including the app version.

|

||||

# Versions are expected to follow Semantic Versioning (https://semver.org/)

|

||||

version: 0.7.0

|

||||

version: 0.6.1

|

||||

|

||||

# This is the version number of the application being deployed. This version number should be

|

||||

# incremented each time you make changes to the application. Versions are not expected to

|

||||

|

||||

@@ -1 +1 @@

|

||||

ghcr.io/cozystack/cozystack/postgres-backup:0.14.0@sha256:10179ed56457460d95cd5708db2a00130901255fa30c4dd76c65d2ef5622b61f

|

||||

ghcr.io/cozystack/cozystack/postgres-backup:0.12.1@sha256:10179ed56457460d95cd5708db2a00130901255fa30c4dd76c65d2ef5622b61f

|

||||

|

||||

@@ -24,14 +24,3 @@ rules:

|

||||

resourceNames:

|

||||

- {{ .Release.Name }}

|

||||

verbs: ["get", "list", "watch"]

|

||||

---

|

||||

kind: RoleBinding

|

||||

apiVersion: rbac.authorization.k8s.io/v1

|

||||

metadata:

|

||||

name: {{ .Release.Name }}-dashboard-resources

|

||||

subjects:

|

||||

{{ include "cozy-lib.rbac.subjectsForTenantAndAccessLevel" (list "use" .Release.Namespace) }}

|

||||

roleRef:

|

||||

kind: Role

|

||||

name: {{ .Release.Name }}-dashboard-resources

|

||||

apiGroup: rbac.authorization.k8s.io

|

||||

|

||||

@@ -1 +1 @@

|

||||

ghcr.io/cozystack/cozystack/nginx-cache:0.5.1@sha256:b7633717cd7449c0042ae92d8ca9b36e4d69566561f5c7d44e21058e7d05c6d5

|

||||

ghcr.io/cozystack/cozystack/nginx-cache:0.5.1@sha256:50ac1581e3100bd6c477a71161cb455a341ffaf9e5e2f6086802e4e25271e8af

|

||||

|

||||

@@ -16,7 +16,7 @@ type: application

|

||||

# This is the chart version. This version number should be incremented each time you make changes

|

||||

# to the chart and its templates, including the app version.

|

||||

# Versions are expected to follow Semantic Versioning (https://semver.org/)

|

||||

version: 0.7.0

|

||||

version: 0.6.1

|

||||

|

||||

# This is the version number of the application being deployed. This version number should be

|

||||

# incremented each time you make changes to the application. Versions are not expected to

|

||||

|

||||

@@ -25,14 +25,3 @@ rules:

|

||||

- {{ .Release.Name }}

|

||||

- {{ $.Release.Name }}-zookeeper

|

||||

verbs: ["get", "list", "watch"]

|

||||

---

|

||||

kind: RoleBinding

|

||||

apiVersion: rbac.authorization.k8s.io/v1

|

||||

metadata:

|

||||

name: {{ .Release.Name }}-dashboard-resources

|

||||

subjects:

|

||||

{{ include "cozy-lib.rbac.subjectsForTenantAndAccessLevel" (list "use" .Release.Namespace) }}

|

||||

roleRef:

|

||||

kind: Role

|

||||

name: {{ .Release.Name }}-dashboard-resources

|

||||

apiGroup: rbac.authorization.k8s.io

|

||||

|

||||

@@ -16,7 +16,7 @@ type: application

|

||||

# This is the chart version. This version number should be incremented each time you make changes

|

||||

# to the chart and its templates, including the app version.

|

||||

# Versions are expected to follow Semantic Versioning (https://semver.org/)

|

||||

version: 0.24.0

|

||||

version: 0.23.1

|

||||

|

||||

# This is the version number of the application being deployed. This version number should be

|

||||

# incremented each time you make changes to the application. Versions are not expected to

|

||||

|

||||

@@ -1 +0,0 @@

|

||||

../../../library/cozy-lib

|

||||

@@ -1 +1 @@

|

||||

ghcr.io/cozystack/cozystack/cluster-autoscaler:0.24.0@sha256:3a8170433e1632e5cc2b6d9db34d0605e8e6c63c158282c38450415e700e932e

|

||||

ghcr.io/cozystack/cozystack/cluster-autoscaler:0.23.1@sha256:7315850634728a5864a3de3150c12f0e1454f3f1ce33cdf21a278f57611dd5e9

|

||||

|

||||

@@ -1 +1 @@

|

||||

ghcr.io/cozystack/cozystack/kubevirt-cloud-provider:0.24.0@sha256:b478952fab735f85c3ba15835012b1de8af5578b33a8a2670eaf532ffc17681e

|

||||

ghcr.io/cozystack/cozystack/kubevirt-cloud-provider:0.23.1@sha256:6962bdf51ab2ff40b420b9cff7c850aeea02187da2a65a67f10e0471744649d7

|

||||

|

||||

@@ -8,7 +8,7 @@ ENV GOARCH=$TARGETARCH

|

||||

|

||||

RUN git clone https://github.com/kubevirt/cloud-provider-kubevirt /go/src/kubevirt.io/cloud-provider-kubevirt \

|

||||

&& cd /go/src/kubevirt.io/cloud-provider-kubevirt \

|

||||

&& git checkout a0acf33

|

||||

&& git checkout 443a1fe

|

||||

|

||||

WORKDIR /go/src/kubevirt.io/cloud-provider-kubevirt

|

||||

|

||||

|

||||

@@ -37,7 +37,7 @@ index 74166b5d9..4e744f8de 100644

|

||||

klog.Infof("Initializing kubevirtEPSController")

|

||||

|

||||

diff --git a/pkg/controller/kubevirteps/kubevirteps_controller.go b/pkg/controller/kubevirteps/kubevirteps_controller.go

|

||||

index 53388eb8e..b56882c12 100644

|

||||

index 6f6e3d322..b56882c12 100644

|

||||

--- a/pkg/controller/kubevirteps/kubevirteps_controller.go

|

||||

+++ b/pkg/controller/kubevirteps/kubevirteps_controller.go

|

||||

@@ -54,10 +54,10 @@ type Controller struct {

|

||||

@@ -286,6 +286,22 @@ index 53388eb8e..b56882c12 100644

|

||||

for _, eps := range slicesToDelete {

|

||||

err := c.infraClient.DiscoveryV1().EndpointSlices(eps.Namespace).Delete(context.TODO(), eps.Name, metav1.DeleteOptions{})

|

||||

if err != nil {

|

||||

@@ -474,11 +538,11 @@ func (c *Controller) reconcileByAddressType(service *v1.Service, tenantSlices []

|

||||

// Create the desired port configuration

|

||||

var desiredPorts []discovery.EndpointPort

|

||||

|

||||

- for _, port := range service.Spec.Ports {

|

||||

+ for i := range service.Spec.Ports {

|

||||

desiredPorts = append(desiredPorts, discovery.EndpointPort{

|

||||

- Port: &port.TargetPort.IntVal,

|

||||

- Protocol: &port.Protocol,

|

||||

- Name: &port.Name,

|

||||

+ Port: &service.Spec.Ports[i].TargetPort.IntVal,

|

||||

+ Protocol: &service.Spec.Ports[i].Protocol,

|

||||

+ Name: &service.Spec.Ports[i].Name,

|

||||

})

|

||||

}
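Reviewer note: the patched hunk above replaces `for _, port := range service.Spec.Ports` with an index-based loop before taking field addresses. In Go versions before 1.22 the range variable is a single variable reused across iterations, so `&port.Name` would yield the same pointer every time and every `EndpointPort` would end up describing the last Service port. The sketch below shows the index-based form with stand-in types; `servicePort`, `endpointPort` and `desiredPorts` are hypothetical names, not the Kubernetes API types.

```go
package main

import "fmt"

// servicePort is a hypothetical stand-in for v1.ServicePort.
type servicePort struct {
	Name string
	Port int32
}

// endpointPort is a hypothetical stand-in for discovery.EndpointPort,
// which stores pointers rather than values.
type endpointPort struct {
	Name *string
	Port *int32
}

// desiredPorts takes the address of each slice element by index, so every
// pointer refers to a distinct element regardless of the Go version in use.
func desiredPorts(ports []servicePort) []endpointPort {
	out := make([]endpointPort, 0, len(ports))
	for i := range ports {
		out = append(out, endpointPort{
			Name: &ports[i].Name,
			Port: &ports[i].Port,
		})
	}
	return out
}

func main() {
	ports := []servicePort{
		{Name: "client", Port: 30396},
		{Name: "dashboard", Port: 31003},
		{Name: "metrics", Port: 30452},
	}
	for _, p := range desiredPorts(ports) {
		fmt.Println(*p.Name, *p.Port)
	}
}
```

Note that the returned pointers alias the input slice, so the caller should not mutate `ports` while the result is in use; copying each value into a fresh local variable would be an alternative when that matters.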

|

||||

|

||||

@@ -588,55 +652,114 @@ func ownedBy(endpointSlice *discovery.EndpointSlice, svc *v1.Service) bool {

|

||||

return false

|

||||

}

|

||||

@@ -421,10 +437,18 @@ index 53388eb8e..b56882c12 100644

|

||||

|

||||

return nil

|

||||

diff --git a/pkg/controller/kubevirteps/kubevirteps_controller_test.go b/pkg/controller/kubevirteps/kubevirteps_controller_test.go

|

||||

index 1c97035b4..14d92d340 100644

|

||||

index 1fb86e25f..14d92d340 100644

|

||||

--- a/pkg/controller/kubevirteps/kubevirteps_controller_test.go

|

||||

+++ b/pkg/controller/kubevirteps/kubevirteps_controller_test.go

|

||||

@@ -190,7 +190,7 @@ func setupTestKubevirtEPSController() *testKubevirtEPSController {

|

||||

@@ -13,6 +13,7 @@ import (

|

||||

"k8s.io/apimachinery/pkg/runtime"

|

||||

"k8s.io/apimachinery/pkg/runtime/schema"

|

||||

"k8s.io/apimachinery/pkg/util/intstr"

|

||||

+ "k8s.io/apimachinery/pkg/util/sets"

|

||||

dfake "k8s.io/client-go/dynamic/fake"

|

||||

"k8s.io/client-go/kubernetes/fake"

|

||||

"k8s.io/client-go/testing"

|

||||

@@ -189,7 +190,7 @@ func setupTestKubevirtEPSController() *testKubevirtEPSController {

|

||||

}: "VirtualMachineInstanceList",

|

||||

})

|

||||

|

||||

@@ -433,87 +457,83 @@ index 1c97035b4..14d92d340 100644

|

||||

|

||||

err := controller.Init()

|

||||

if err != nil {

|

||||

@@ -697,51 +697,43 @@ var _ = g.Describe("KubevirtEPSController", g.Ordered, func() {

|

||||

*createPort("http", 80, v1.ProtocolTCP),

|

||||

[]discoveryv1.Endpoint{*createEndpoint("123.45.67.89", "worker-0-test", true, true, false)})

|

||||

|

||||

- // Define several unique ports for the Service

|

||||

@@ -686,5 +687,229 @@ var _ = g.Describe("KubevirtEPSController", g.Ordered, func() {

|

||||

return false, err

|

||||

}).Should(BeTrue(), "EndpointSlice in infra cluster should be recreated by the controller after deletion")

|

||||

})

|

||||

+

|

||||

+ g.It("Should correctly handle multiple unique ports in EndpointSlice", func() {

|

||||

+ // Create a VMI in the infra cluster

|

||||

+ createAndAssertVMI("worker-0-test", "ip-10-32-5-13", "123.45.67.89")

|

||||

+

|

||||

+ // Create an EndpointSlice in the tenant cluster

|

||||

+ createAndAssertTenantSlice("test-epslice", "tenant-service-name", discoveryv1.AddressTypeIPv4,

|

||||

+ *createPort("http", 80, v1.ProtocolTCP),

|

||||

+ []discoveryv1.Endpoint{*createEndpoint("123.45.67.89", "worker-0-test", true, true, false)})

|

||||

+

|

||||

+ // Define multiple ports for the Service

|

||||

servicePorts := []v1.ServicePort{

|

||||

{

|

||||

- Name: "client",

|

||||

- Protocol: v1.ProtocolTCP,

|

||||

- Port: 10001,

|

||||

- TargetPort: intstr.FromInt(30396),

|

||||

- NodePort: 30396,

|

||||

- AppProtocol: nil,

|

||||

+ servicePorts := []v1.ServicePort{

|

||||

+ {

|

||||

+ Name: "client",

|

||||

+ Protocol: v1.ProtocolTCP,

|

||||

+ Port: 10001,

|

||||

+ TargetPort: intstr.FromInt(30396),

|

||||

+ NodePort: 30396,

|

||||

},

|

||||

{

|

||||

- Name: "dashboard",

|

||||

- Protocol: v1.ProtocolTCP,

|

||||

- Port: 8265,

|

||||

- TargetPort: intstr.FromInt(31003),

|

||||

- NodePort: 31003,

|

||||

- AppProtocol: nil,

|

||||

+ },

|

||||

+ {

|

||||

+ Name: "dashboard",

|

||||

+ Protocol: v1.ProtocolTCP,

|

||||

+ Port: 8265,

|

||||

+ TargetPort: intstr.FromInt(31003),

|

||||

+ NodePort: 31003,

|

||||

},

|

||||

{

|

||||

- Name: "metrics",

|

||||

- Protocol: v1.ProtocolTCP,

|

||||

- Port: 8080,

|

||||

- TargetPort: intstr.FromInt(30452),

|

||||

- NodePort: 30452,

|

||||

- AppProtocol: nil,

|

||||

+ },

|

||||

+ {

|

||||

+ Name: "metrics",

|

||||

+ Protocol: v1.ProtocolTCP,

|

||||

+ Port: 8080,

|

||||

+ TargetPort: intstr.FromInt(30452),

|

||||

+ NodePort: 30452,

|

||||

},

|

||||

}

|

||||

|

||||

- // Create a Service with the first port

|

||||

createAndAssertInfraServiceLB("infra-multiport-service", "tenant-service-name", "test-cluster",

|

||||

- servicePorts[0],

|

||||

- v1.ServiceExternalTrafficPolicyLocal)

|

||||

+ },

|

||||

+ }

|

||||

+

|

||||

+ createAndAssertInfraServiceLB("infra-multiport-service", "tenant-service-name", "test-cluster",

|

||||

+ servicePorts[0], v1.ServiceExternalTrafficPolicyLocal)

|

||||

|

||||

- // Update the Service by adding the remaining ports

|

||||

svc, err := testVals.infraClient.CoreV1().Services(infraNamespace).Get(context.TODO(), "infra-multiport-service", metav1.GetOptions{})

|

||||

Expect(err).To(BeNil())

|

||||

|

||||

svc.Spec.Ports = servicePorts

|

||||

-

|

||||

_, err = testVals.infraClient.CoreV1().Services(infraNamespace).Update(context.TODO(), svc, metav1.UpdateOptions{})

|

||||

Expect(err).To(BeNil())

|

||||

|

||||

var epsListMultiPort *discoveryv1.EndpointSliceList

|

||||

|

||||

- // Verify that the EndpointSlice is created with correct unique ports

|

||||

Eventually(func() (bool, error) {

|

||||

epsListMultiPort, err = testVals.infraClient.DiscoveryV1().EndpointSlices(infraNamespace).List(context.TODO(), metav1.ListOptions{})

|

||||

if len(epsListMultiPort.Items) != 1 {

|

||||

@@ -758,7 +750,6 @@ var _ = g.Describe("KubevirtEPSController", g.Ordered, func() {

|

||||

}

|

||||

}

|

||||

|

||||

- // Verify that all expected ports are present and without duplicates

|

||||

if len(foundPortNames) != len(expectedPortNames) {

|

||||

return false, err

|

||||

}

|

||||

@@ -769,5 +760,156 @@ var _ = g.Describe("KubevirtEPSController", g.Ordered, func() {
}).Should(BeTrue(), "EndpointSlice should contain all unique ports from the Service without duplicates")
})

+
+ svc, err := testVals.infraClient.CoreV1().Services(infraNamespace).Get(context.TODO(), "infra-multiport-service", metav1.GetOptions{})
+ Expect(err).To(BeNil())
+
+ svc.Spec.Ports = servicePorts
+ _, err = testVals.infraClient.CoreV1().Services(infraNamespace).Update(context.TODO(), svc, metav1.UpdateOptions{})
+ Expect(err).To(BeNil())
+
+ var epsListMultiPort *discoveryv1.EndpointSliceList
+
+ Eventually(func() (bool, error) {
+ epsListMultiPort, err = testVals.infraClient.DiscoveryV1().EndpointSlices(infraNamespace).List(context.TODO(), metav1.ListOptions{})
+ if len(epsListMultiPort.Items) != 1 {
+ return false, err
+ }
+
+ createdSlice := epsListMultiPort.Items[0]
+ expectedPortNames := []string{"client", "dashboard", "metrics"}
+ foundPortNames := []string{}
+
+ for _, port := range createdSlice.Ports {
+ if port.Name != nil {
+ foundPortNames = append(foundPortNames, *port.Name)
+ }
+ }
+
+ if len(foundPortNames) != len(expectedPortNames) {
+ return false, err
+ }
+
+ portSet := sets.NewString(foundPortNames...)
+ expectedPortSet := sets.NewString(expectedPortNames...)
+ return portSet.Equal(expectedPortSet), err
+ }).Should(BeTrue(), "EndpointSlice should contain all unique ports from the Service without duplicates")
+ })
+
+ g.It("Should not panic when Service changes to have a non-nil selector, causing EndpointSlice deletion with no new slices to create", func() {
+ createAndAssertVMI("worker-0-test", "ip-10-32-5-13", "123.45.67.89")
+ createAndAssertTenantSlice("test-epslice", "tenant-service-name", discoveryv1.AddressTypeIPv4,
@@ -1,142 +0,0 @@
diff --git a/pkg/controller/kubevirteps/kubevirteps_controller.go b/pkg/controller/kubevirteps/kubevirteps_controller.go
index 53388eb8e..28644236f 100644
--- a/pkg/controller/kubevirteps/kubevirteps_controller.go
+++ b/pkg/controller/kubevirteps/kubevirteps_controller.go
@@ -12,7 +12,6 @@ import (
apiequality "k8s.io/apimachinery/pkg/api/equality"
k8serrors "k8s.io/apimachinery/pkg/api/errors"
metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
- "k8s.io/apimachinery/pkg/apis/meta/v1/unstructured"
"k8s.io/apimachinery/pkg/runtime"
"k8s.io/apimachinery/pkg/runtime/schema"
utilruntime "k8s.io/apimachinery/pkg/util/runtime"
@@ -669,35 +668,50 @@ func (c *Controller) getDesiredEndpoints(service *v1.Service, tenantSlices []*di
for _, slice := range tenantSlices {
for _, endpoint := range slice.Endpoints {
// find all unique nodes that correspond to an endpoint in a tenant slice
+ if endpoint.NodeName == nil {
+ klog.Warningf("Skipping endpoint without NodeName in slice %s/%s", slice.Namespace, slice.Name)
+ continue
+ }
nodeSet.Insert(*endpoint.NodeName)
}
}

- klog.Infof("Desired nodes for service %s in namespace %s: %v", service.Name, service.Namespace, sets.List(nodeSet))
+ klog.Infof("Desired nodes for service %s/%s: %v", service.Namespace, service.Name, sets.List(nodeSet))

for _, node := range sets.List(nodeSet) {
// find vmi for node name
- obj := &unstructured.Unstructured{}
- vmi := &kubevirtv1.VirtualMachineInstance{}
-
- obj, err := c.infraDynamic.Resource(kubevirtv1.VirtualMachineInstanceGroupVersionKind.GroupVersion().WithResource("virtualmachineinstances")).Namespace(c.infraNamespace).Get(context.TODO(), node, metav1.GetOptions{})
+ obj, err := c.infraDynamic.
+ Resource(kubevirtv1.VirtualMachineInstanceGroupVersionKind.GroupVersion().WithResource("virtualmachineinstances")).
+ Namespace(c.infraNamespace).
+ Get(context.TODO(), node, metav1.GetOptions{})
if err != nil {
- klog.Errorf("Failed to get VirtualMachineInstance %s in namespace %s:%v", node, c.infraNamespace, err)
+ klog.Errorf("Failed to get VMI %q in namespace %q: %v", node, c.infraNamespace, err)
continue
}

+ vmi := &kubevirtv1.VirtualMachineInstance{}
err = runtime.DefaultUnstructuredConverter.FromUnstructured(obj.Object, vmi)
if err != nil {
klog.Errorf("Failed to convert Unstructured to VirtualMachineInstance: %v", err)
- klog.Fatal(err)
+ continue
}

+ if vmi.Status.NodeName == "" {
+ klog.Warningf("Skipping VMI %s/%s: NodeName is empty", vmi.Namespace, vmi.Name)
+ continue
+ }
+ nodeNamePtr := &vmi.Status.NodeName
+
ready := vmi.Status.Phase == kubevirtv1.Running
serving := vmi.Status.Phase == kubevirtv1.Running
terminating := vmi.Status.Phase == kubevirtv1.Failed || vmi.Status.Phase == kubevirtv1.Succeeded

for _, i := range vmi.Status.Interfaces {
if i.Name == "default" {
+ if i.IP == "" {
+ klog.Warningf("VMI %s/%s interface %q has no IP, skipping", vmi.Namespace, vmi.Name, i.Name)
+ continue
+ }
desiredEndpoints = append(desiredEndpoints, &discovery.Endpoint{
Addresses: []string{i.IP},
Conditions: discovery.EndpointConditions{
@@ -705,9 +719,9 @@ func (c *Controller) getDesiredEndpoints(service *v1.Service, tenantSlices []*di
Serving: &serving,
Terminating: &terminating,
},
- NodeName: &vmi.Status.NodeName,
+ NodeName: nodeNamePtr,
})
- continue
+ break
}
}
}
diff --git a/pkg/controller/kubevirteps/kubevirteps_controller_test.go b/pkg/controller/kubevirteps/kubevirteps_controller_test.go
index 1c97035b4..d205d0bed 100644
--- a/pkg/controller/kubevirteps/kubevirteps_controller_test.go
+++ b/pkg/controller/kubevirteps/kubevirteps_controller_test.go
@@ -771,3 +771,55 @@ var _ = g.Describe("KubevirtEPSController", g.Ordered, func() {

})
})
+
+var _ = g.Describe("getDesiredEndpoints", func() {
+ g.It("should skip endpoints without NodeName and VMIs without NodeName or IP", func() {
+ // Setup controller
+ ctrl := setupTestKubevirtEPSController().controller
+
+ // Manually inject dynamic client content (1 VMI with missing NodeName)
+ vmi := createUnstructuredVMINode("vmi-without-node", "", "10.0.0.1") // empty NodeName
+ _, err := ctrl.infraDynamic.
+ Resource(kubevirtv1.VirtualMachineInstanceGroupVersionKind.GroupVersion().WithResource("virtualmachineinstances")).
+ Namespace(infraNamespace).
+ Create(context.TODO(), vmi, metav1.CreateOptions{})
+ Expect(err).To(BeNil())
+
+ // Create service
+ svc := createInfraServiceLB("test-svc", "test-svc", "test-cluster",
+ v1.ServicePort{
+ Name: "http",
+ Port: 80,
+ TargetPort: intstr.FromInt(8080),
+ Protocol: v1.ProtocolTCP,
+ },
+ v1.ServiceExternalTrafficPolicyLocal,
+ )
+
+ // One endpoint has nil NodeName, another is valid
+ nodeName := "vmi-without-node"
+ tenantSlice := &discoveryv1.EndpointSlice{
+ ObjectMeta: metav1.ObjectMeta{
+ Name: "slice",
+ Namespace: tenantNamespace,
+ Labels: map[string]string{
+ discoveryv1.LabelServiceName: "test-svc",
+ },
+ },
+ AddressType: discoveryv1.AddressTypeIPv4,
+ Endpoints: []discoveryv1.Endpoint{
+ { // should be skipped due to nil NodeName
+ Addresses: []string{"10.1.1.1"},
+ NodeName: nil,
+ },
+ { // will hit VMI without NodeName and also be skipped
+ Addresses: []string{"10.1.1.2"},
+ NodeName: &nodeName,
+ },
+ },
+ }
+
+ endpoints := ctrl.getDesiredEndpoints(svc, []*discoveryv1.EndpointSlice{tenantSlice})
+ Expect(endpoints).To(HaveLen(0), "Expected no endpoints due to missing NodeName or IP")
+ })
+})
@@ -1 +1 @@
ghcr.io/cozystack/cozystack/kubevirt-csi-driver:0.24.0@sha256:4d3728b2050d4e0adb00b9f4abbb4a020b29e1a39f24ca1447806fc81f110fa6
ghcr.io/cozystack/cozystack/kubevirt-csi-driver:0.23.1@sha256:b1525163cd21938ac934bb1b860f2f3151464fa463b82880ab058167aeaf3e29
@@ -1 +1 @@
ghcr.io/cozystack/cozystack/ubuntu-container-disk:v1.32@sha256:e53f2394c7aa76ad10818ffb945e40006cd77406999e47e036d41b8b0bf094cc
ghcr.io/cozystack/cozystack/ubuntu-container-disk:v1.32@sha256:290264eba144c5c58f68009b17f59a5990f6fafbf768f9cbefd7050c34733930
@@ -121,21 +121,21 @@ metadata:
spec:
apiServer:
{{- if .Values.controlPlane.apiServer.resources }}
resources: {{- include "cozy-lib.resources.sanitize" (list .Values.controlPlane.apiServer.resources $) | nindent 6 }}
resources: {{- toYaml .Values.controlPlane.apiServer.resources | nindent 6 }}
{{- else if ne .Values.controlPlane.apiServer.resourcesPreset "none" }}
resources: {{- include "cozy-lib.resources.preset" (list .Values.controlPlane.apiServer.resourcesPreset $) | nindent 6 }}
resources: {{- include "resources.preset" (dict "type" .Values.controlPlane.apiServer.resourcesPreset "Release" .Release) | nindent 6 }}
{{- end }}
controllerManager:
{{- if .Values.controlPlane.controllerManager.resources }}
resources: {{- include "cozy-lib.resources.sanitize" (list .Values.controlPlane.controllerManager.resources $) | nindent 6 }}
resources: {{- toYaml .Values.controlPlane.controllerManager.resources | nindent 6 }}
{{- else if ne .Values.controlPlane.controllerManager.resourcesPreset "none" }}
resources: {{- include "cozy-lib.resources.preset" (list .Values.controlPlane.controllerManager.resourcesPreset $) | nindent 6 }}
resources: {{- include "resources.preset" (dict "type" .Values.controlPlane.controllerManager.resourcesPreset "Release" .Release) | nindent 6 }}
{{- end }}
scheduler:
{{- if .Values.controlPlane.scheduler.resources }}
resources: {{- include "cozy-lib.resources.sanitize" (list .Values.controlPlane.scheduler.resources $) | nindent 6 }}
resources: {{- toYaml .Values.controlPlane.scheduler.resources | nindent 6 }}
{{- else if ne .Values.controlPlane.scheduler.resourcesPreset "none" }}
resources: {{- include "cozy-lib.resources.preset" (list .Values.controlPlane.scheduler.resourcesPreset $) | nindent 6 }}
resources: {{- include "resources.preset" (dict "type" .Values.controlPlane.scheduler.resourcesPreset "Release" .Release) | nindent 6 }}
{{- end }}
dataStoreName: "{{ $etcd }}"
addons:
@@ -146,9 +146,9 @@ spec:
server:
port: 8132
{{- if .Values.controlPlane.konnectivity.server.resources }}
resources: {{- include "cozy-lib.resources.sanitize" (list .Values.controlPlane.konnectivity.server.resources $) | nindent 10 }}
resources: {{- toYaml .Values.controlPlane.konnectivity.server.resources | nindent 10 }}
{{- else if ne .Values.controlPlane.konnectivity.server.resourcesPreset "none" }}
resources: {{- include "cozy-lib.resources.preset" (list .Values.controlPlane.konnectivity.server.resourcesPreset $) | nindent 10 }}
resources: {{- include "resources.preset" (dict "type" .Values.controlPlane.konnectivity.server.resourcesPreset "Release" .Release) | nindent 10 }}
{{- end }}
kubelet:
cgroupfs: systemd
@@ -34,14 +34,3 @@ rules:
- {{ $.Release.Name }}-{{ $groupName }}
{{- end }}
verbs: ["get", "list", "watch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
name: {{ .Release.Name }}-dashboard-resources
subjects:
{{ include "cozy-lib.rbac.subjectsForTenantAndAccessLevel" (list "use" .Release.Namespace) }}
roleRef:
kind: Role
name: {{ .Release.Name }}-dashboard-resources
apiGroup: rbac.authorization.k8s.io
@@ -16,7 +16,7 @@ type: application
# This is the chart version. This version number should be incremented each time you make changes
# to the chart and its templates, including the app version.
# Versions are expected to follow Semantic Versioning (https://semver.org/)
version: 0.8.0
version: 0.7.1

# This is the version number of the application being deployed. This version number should be
# incremented each time you make changes to the application. Versions are not expected to
@@ -1 +1 @@
ghcr.io/cozystack/cozystack/mariadb-backup:0.8.0@sha256:cfd1c37d8ad24e10681d82d6e6ce8a641b4602c1b0ffa8516ae15b4958bb12d4
ghcr.io/cozystack/cozystack/mariadb-backup:0.7.1@sha256:cfd1c37d8ad24e10681d82d6e6ce8a641b4602c1b0ffa8516ae15b4958bb12d4
@@ -25,14 +25,3 @@ rules:
resourceNames:
- {{ .Release.Name }}
verbs: ["get", "list", "watch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
name: {{ .Release.Name }}-dashboard-resources
subjects:
{{ include "cozy-lib.rbac.subjectsForTenantAndAccessLevel" (list "use" .Release.Namespace) }}
roleRef:
kind: Role
name: {{ .Release.Name }}-dashboard-resources
apiGroup: rbac.authorization.k8s.io
@@ -16,7 +16,7 @@ type: application
# This is the chart version. This version number should be incremented each time you make changes
# to the chart and its templates, including the app version.
# Versions are expected to follow Semantic Versioning (https://semver.org/)
version: 0.7.0
version: 0.6.1

# This is the version number of the application being deployed. This version number should be
# incremented each time you make changes to the application. Versions are not expected to
@@ -24,14 +24,3 @@ rules:
resourceNames:
- {{ .Release.Name }}
verbs: ["get", "list", "watch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
name: {{ .Release.Name }}-dashboard-resources
subjects:
{{ include "cozy-lib.rbac.subjectsForTenantAndAccessLevel" (list "use" .Release.Namespace) }}
roleRef:
kind: Role
name: {{ .Release.Name }}-dashboard-resources
apiGroup: rbac.authorization.k8s.io
@@ -16,7 +16,7 @@ type: application
# This is the chart version. This version number should be incremented each time you make changes
# to the chart and its templates, including the app version.
# Versions are expected to follow Semantic Versioning (https://semver.org/)
version: 0.14.0
version: 0.12.1

# This is the version number of the application being deployed. This version number should be
# incremented each time you make changes to the application. Versions are not expected to
@@ -1 +1 @@
ghcr.io/cozystack/cozystack/postgres-backup:0.14.0@sha256:10179ed56457460d95cd5708db2a00130901255fa30c4dd76c65d2ef5622b61f
ghcr.io/cozystack/cozystack/postgres-backup:0.12.1@sha256:10179ed56457460d95cd5708db2a00130901255fa30c4dd76c65d2ef5622b61f
@@ -1,11 +0,0 @@
{{/*
Generate resource requirements based on ResourceQuota named as the namespace.
*/}}
{{- define "postgresjobs.defaultResources" }}
cpu: "1"
memory: 512Mi
{{- end }}
{{- define "postgresjobs.resources" }}
resources:
{{ include "cozy-lib.resources.sanitize" (list (include "postgresjobs.defaultResources" $ | fromYaml) $) | nindent 2 }}
{{- end }}
@@ -81,7 +81,6 @@ spec:
privileged: false
readOnlyRootFilesystem: true
runAsNonRoot: true
{{- include "postgresjobs.resources" . | nindent 12 }}
volumes:
- name: scripts
secret:
@@ -26,14 +26,3 @@ rules:
resourceNames:
- {{ .Release.Name }}
verbs: ["get", "list", "watch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
name: {{ .Release.Name }}-dashboard-resources
subjects:
{{ include "cozy-lib.rbac.subjectsForTenantAndAccessLevel" (list "use" .Release.Namespace) }}
roleRef:
kind: Role
name: {{ .Release.Name }}-dashboard-resources
apiGroup: rbac.authorization.k8s.io
@@ -50,7 +50,6 @@ spec:
name: secret
- mountPath: /scripts
name: scripts
{{- include "postgresjobs.resources" . | nindent 8 }}
securityContext:
fsGroup: 26
runAsGroup: 26
@@ -16,7 +16,7 @@ type: application
# This is the chart version. This version number should be incremented each time you make changes
# to the chart and its templates, including the app version.
# Versions are expected to follow Semantic Versioning (https://semver.org/)
version: 0.7.0
version: 0.6.0

# This is the version number of the application being deployed. This version number should be
# incremented each time you make changes to the application. Versions are not expected to
@@ -27,14 +27,3 @@ rules:
resourceNames:
- {{ .Release.Name }}
verbs: ["get", "list", "watch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
name: {{ .Release.Name }}-dashboard-resources
subjects:
{{ include "cozy-lib.rbac.subjectsForTenantAndAccessLevel" (list "use" .Release.Namespace) }}
roleRef:
kind: Role
name: {{ .Release.Name }}-dashboard-resources
apiGroup: rbac.authorization.k8s.io
@@ -16,7 +16,7 @@ type: application
# This is the chart version. This version number should be incremented each time you make changes
# to the chart and its templates, including the app version.
# Versions are expected to follow Semantic Versioning (https://semver.org/)
version: 0.8.0
version: 0.7.1

# This is the version number of the application being deployed. This version number should be
# incremented each time you make changes to the application. Versions are not expected to
@@ -28,14 +28,3 @@ rules:
- {{ .Release.Name }}-redis
- {{ .Release.Name }}-sentinel
verbs: ["get", "list", "watch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
name: {{ .Release.Name }}-dashboard-resources
subjects:
{{ include "cozy-lib.rbac.subjectsForTenantAndAccessLevel" (list "use" .Release.Namespace) }}
roleRef:
kind: Role
name: {{ .Release.Name }}-dashboard-resources
apiGroup: rbac.authorization.k8s.io
@@ -4,4 +4,4 @@ description: Separated tenant namespace
icon: /logos/tenant.svg

type: application
version: 1.10.0
version: 1.9.3
@@ -1 +0,0 @@
../../../library/cozy-lib
@@ -23,8 +23,8 @@ metadata:
namespace: {{ include "tenant.name" . }}
rules:
- apiGroups: [""]
resources: ["pods", "services", "persistentvolumes", "endpoints", "events", "resourcequotas"]
verbs: ["get", "list", "watch"]
resources: ["*"]
verbs: ["get", "list", "watch", "create", "update", "patch"]
- apiGroups: ["networking.k8s.io"]
resources: ["ingresses"]
verbs: ["get", "list", "watch"]
@@ -94,12 +94,7 @@ rules:
- apiGroups:
- ""
resources:
- pods
- services
- persistentvolumes
- endpoints
- events
- resourcequotas
- "*"
verbs:
- get
- list
@@ -124,7 +119,24 @@ metadata:
name: {{ include "tenant.name" . }}-view
namespace: {{ include "tenant.name" . }}
subjects:
{{ include "cozy-lib.rbac.subjectsForTenant" (list "view" (include "tenant.name" .)) | nindent 2 }}
{{- if ne .Release.Namespace "tenant-root" }}
- kind: Group
name: tenant-root-view
apiGroup: rbac.authorization.k8s.io
{{- end }}
- kind: Group
name: {{ include "tenant.name" . }}-view
apiGroup: rbac.authorization.k8s.io
{{- if hasPrefix "tenant-" .Release.Namespace }}
{{- $parts := splitList "-" .Release.Namespace }}
{{- range $i, $v := $parts }}
{{- if ne $i 0 }}
- kind: Group
name: {{ join "-" (slice $parts 0 (add $i 1)) }}-view
apiGroup: rbac.authorization.k8s.io
{{- end }}
{{- end }}
{{- end }}
roleRef:
kind: Role
name: {{ include "tenant.name" . }}-view
@@ -153,12 +165,7 @@ rules:
- watch
- apiGroups: [""]
resources:
- pods
- services
- persistentvolumes
- endpoints
- events
- resourcequotas
- "*"
verbs:
- get
- list
@@ -195,7 +202,24 @@ metadata:
name: {{ include "tenant.name" . }}-use
namespace: {{ include "tenant.name" . }}
subjects:
{{ include "cozy-lib.rbac.subjectsForTenant" (list "use" (include "tenant.name" .)) | nindent 2 }}
{{- if ne .Release.Namespace "tenant-root" }}
- kind: Group
name: tenant-root-use
apiGroup: rbac.authorization.k8s.io
{{- end }}
- kind: Group
name: {{ include "tenant.name" . }}-use
apiGroup: rbac.authorization.k8s.io
{{- if hasPrefix "tenant-" .Release.Namespace }}
{{- $parts := splitList "-" .Release.Namespace }}
{{- range $i, $v := $parts }}
{{- if ne $i 0 }}
- kind: Group
name: {{ join "-" (slice $parts 0 (add $i 1)) }}-use
apiGroup: rbac.authorization.k8s.io
{{- end }}
{{- end }}
{{- end }}
roleRef:
kind: Role
name: {{ include "tenant.name" . }}-use
@@ -216,12 +240,7 @@ rules:
- get
- apiGroups: [""]
resources:
- pods
- services
- persistentvolumes
- endpoints
- events
- resourcequotas
- "*"
verbs:
- get
- list
@@ -286,7 +305,24 @@ metadata:
name: {{ include "tenant.name" . }}-admin
namespace: {{ include "tenant.name" . }}
subjects:
{{ include "cozy-lib.rbac.subjectsForTenant" (list "admin" (include "tenant.name" .)) | nindent 2 }}
{{- if ne .Release.Namespace "tenant-root" }}
- kind: Group
name: tenant-root-admin
apiGroup: rbac.authorization.k8s.io
{{- end }}
- kind: Group
name: {{ include "tenant.name" . }}-admin
apiGroup: rbac.authorization.k8s.io
{{- if hasPrefix "tenant-" .Release.Namespace }}
{{- $parts := splitList "-" .Release.Namespace }}
{{- range $i, $v := $parts }}
{{- if ne $i 0 }}
- kind: Group
name: {{ join "-" (slice $parts 0 (add $i 1)) }}-admin
apiGroup: rbac.authorization.k8s.io
{{- end }}
{{- end }}
{{- end }}
roleRef:
kind: Role
name: {{ include "tenant.name" . }}-admin
@@ -307,12 +343,7 @@ rules:
- get
- apiGroups: [""]
resources:
- pods
- services
- persistentvolumes
- endpoints
- events
- resourcequotas
- "*"
verbs:
- get
- list
@@ -353,7 +384,24 @@ metadata:
name: {{ include "tenant.name" . }}-super-admin
namespace: {{ include "tenant.name" . }}
subjects:
{{ include "cozy-lib.rbac.subjectsForTenant" (list "super-admin" (include "tenant.name" .) ) | nindent 2 }}
{{- if ne .Release.Namespace "tenant-root" }}
- kind: Group
name: tenant-root-super-admin
apiGroup: rbac.authorization.k8s.io
{{- end }}
- kind: Group
name: {{ include "tenant.name" . }}-super-admin
apiGroup: rbac.authorization.k8s.io
{{- if hasPrefix "tenant-" .Release.Namespace }}
{{- $parts := splitList "-" .Release.Namespace }}
{{- range $i, $v := $parts }}
{{- if ne $i 0 }}
- kind: Group
name: {{ join "-" (slice $parts 0 (add $i 1)) }}-super-admin
apiGroup: rbac.authorization.k8s.io
{{- end }}
{{- end }}
{{- end }}
roleRef:
kind: Role
name: {{ include "tenant.name" . }}-super-admin
@@ -1,5 +1,4 @@
bucket 0.1.0 632224a3
bucket 0.2.0 HEAD
bucket 0.1.0 HEAD
clickhouse 0.1.0 f7eaab0a
clickhouse 0.2.0 53f2365e
clickhouse 0.2.1 dfbc210b
@@ -11,8 +10,7 @@ clickhouse 0.6.1 c62a83a7
clickhouse 0.6.2 8267072d
clickhouse 0.7.0 93bdf411
clickhouse 0.9.0 6130f43d
clickhouse 0.9.2 632224a3
clickhouse 0.10.0 HEAD
clickhouse 0.9.2 HEAD
ferretdb 0.1.0 e9716091
ferretdb 0.1.1 91b0499a
ferretdb 0.2.0 6c5cf5bf
@@ -22,8 +20,7 @@ ferretdb 0.4.1 1ec10165
ferretdb 0.4.2 8267072d
ferretdb 0.5.0 93bdf411
ferretdb 0.6.0 6130f43d
ferretdb 0.6.1 632224a3
ferretdb 0.7.0 HEAD
ferretdb 0.6.1 HEAD
http-cache 0.1.0 263e47be
http-cache 0.2.0 53f2365e
http-cache 0.3.0 6c5cf5bf
@@ -43,8 +40,7 @@ kafka 0.3.3 8267072d
kafka 0.4.0 85ec09b8
kafka 0.5.0 93bdf411
kafka 0.6.0 6130f43d
kafka 0.6.1 632224a3
kafka 0.7.0 HEAD
kafka 0.6.1 HEAD
kubernetes 0.1.0 263e47be
kubernetes 0.2.0 53f2365e
kubernetes 0.3.0 007d414f
@@ -75,8 +71,7 @@ kubernetes 0.19.0 93bdf411
kubernetes 0.20.0 609e7ede
kubernetes 0.20.1 f9f8bb2f
kubernetes 0.21.0 6130f43d
kubernetes 0.23.1 632224a3
kubernetes 0.24.0 HEAD
kubernetes 0.23.1 HEAD
mysql 0.1.0 263e47be
mysql 0.2.0 c24a103f
mysql 0.3.0 53f2365e
@@ -87,8 +82,7 @@ mysql 0.5.2 1ec10165
mysql 0.5.3 8267072d
mysql 0.6.0 93bdf411
mysql 0.7.0 6130f43d
mysql 0.7.1 632224a3
mysql 0.8.0 HEAD
mysql 0.7.1 HEAD
nats 0.1.0 e9716091
nats 0.2.0 6c5cf5bf
nats 0.3.0 78366f19
@@ -97,8 +91,7 @@ nats 0.4.0 898374b5
nats 0.4.1 8267072d
nats 0.5.0 93bdf411
nats 0.6.0 6130f43d
nats 0.6.1 632224a3
nats 0.7.0 HEAD
nats 0.6.1 HEAD
postgres 0.1.0 263e47be
postgres 0.2.0 53f2365e
postgres 0.2.1 d7cfa53c
@@ -116,8 +109,7 @@ postgres 0.10.0 721c12a7
postgres 0.10.1 93bdf411
postgres 0.11.0 f9f8bb2f
postgres 0.12.0 6130f43d
postgres 0.12.1 632224a3
postgres 0.14.0 HEAD