Mirror of https://github.com/outbackdingo/cozystack.git (synced 2026-02-05 00:15:51 +00:00)

Compare commits: v0.32.0-be ... tests-kube (1 commit)

Commit: 8bf2e67c4d

`.github/workflows/pre-commit.yml` (vendored): 7 changes

```
@@ -30,13 +30,12 @@ jobs:
run: |
sudo apt update
sudo apt install curl -y
curl -fsSL https://deb.nodesource.com/setup_16.x | sudo -E bash -
sudo apt install nodejs -y
sudo apt install npm -y

git clone --branch 2.7.0 --depth 1 https://github.com/bitnami/readme-generator-for-helm.git
git clone https://github.com/bitnami/readme-generator-for-helm
cd ./readme-generator-for-helm
npm install
npm install -g @yao-pkg/pkg
npm install -g pkg
pkg . -o /usr/local/bin/readme-generator

- name: Run pre-commit hooks
```

`.github/workflows/pull-requests-release.yaml` (vendored): 59 changes

```
@@ -16,6 +16,7 @@ jobs:
contents: read
packages: write

# Run only when the PR carries the "release" label and not closed.
if: |
contains(github.event.pull_request.labels.*.name, 'release') &&
github.event.action != 'closed'
```

```
@@ -34,64 +35,6 @@ jobs:
password: ${{ secrets.GITHUB_TOKEN }}
registry: ghcr.io

- name: Extract tag from PR branch
id: get_tag
uses: actions/github-script@v7
with:
script: |
const branch = context.payload.pull_request.head.ref;
const m = branch.match(/^release-(\d+\.\d+\.\d+(?:[-\w\.]+)?)$/);
if (!m) {
core.setFailed(`❌ Branch '${branch}' does not match 'release-X.Y.Z[-suffix]'`);
return;
}
const tag = `v${m[1]}`;
core.setOutput('tag', tag);

- name: Find draft release and get asset IDs
id: fetch_assets
uses: actions/github-script@v7
with:
github-token: ${{ secrets.GH_PAT }}
script: |
const tag = '${{ steps.get_tag.outputs.tag }}';
const releases = await github.rest.repos.listReleases({
owner: context.repo.owner,
repo: context.repo.repo,
per_page: 100
});
const draft = releases.data.find(r => r.tag_name === tag && r.draft);
if (!draft) {
core.setFailed(`Draft release '${tag}' not found`);
return;
}
const findAssetId = (name) =>
draft.assets.find(a => a.name === name)?.id;
const installerId = findAssetId("cozystack-installer.yaml");
const diskId = findAssetId("nocloud-amd64.raw.xz");
if (!installerId || !diskId) {
core.setFailed("Missing required assets");
return;
}
core.setOutput("installer_id", installerId);
core.setOutput("disk_id", diskId);

- name: Download assets from GitHub API
run: |
mkdir -p _out/assets
curl -sSL \
-H "Authorization: token ${GH_PAT}" \
-H "Accept: application/octet-stream" \
-o _out/assets/cozystack-installer.yaml \
"https://api.github.com/repos/${GITHUB_REPOSITORY}/releases/assets/${{ steps.fetch_assets.outputs.installer_id }}"
curl -sSL \
-H "Authorization: token ${GH_PAT}" \
-H "Accept: application/octet-stream" \
-o _out/assets/nocloud-amd64.raw.xz \
"https://api.github.com/repos/${GITHUB_REPOSITORY}/releases/assets/${{ steps.fetch_assets.outputs.disk_id }}"
env:
GH_PAT: ${{ secrets.GH_PAT }}

- name: Run tests
run: make test
```
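The two `actions/github-script` steps in this hunk are "Extract tag from PR branch" and "Find draft release and get asset IDs". They can be read as two small pure functions.

The sketch below is a hypothetical standalone re-implementation for illustration only; it is not code from the repository. Some details are assumptions or stand-ins:

- `tagFromBranch`, `findAssetIds`, and `assetDownloadUrl` are invented names.
- Errors are thrown instead of calling `core.setFailed`.
- The `releases` argument stands in for the `.data` array that `github.rest.repos.listReleases` returns.

Because the scraped view dropped the +/- markers, it does not show whether these steps are being added or removed.

```javascript
// Hypothetical standalone version of the two github-script steps
// ("Extract tag from PR branch" and "Find draft release and get asset IDs").
// It works on plain data instead of calling the GitHub API.

// Same pattern as the workflow: release-X.Y.Z with an optional suffix.
const RELEASE_BRANCH_RE = /^release-(\d+\.\d+\.\d+(?:[-\w\.]+)?)$/;

// Maps a PR head branch such as "release-0.31.0-rc.3" to the tag "v0.31.0-rc.3".
function tagFromBranch(branch) {
  const m = branch.match(RELEASE_BRANCH_RE);
  if (!m) {
    throw new Error(`Branch '${branch}' does not match 'release-X.Y.Z[-suffix]'`);
  }
  return `v${m[1]}`;
}

// `releases` has the shape of listReleases(...).data: objects with
// tag_name, draft, and assets [{ name, id }].
function findAssetIds(releases, tag) {
  const draft = releases.find((r) => r.tag_name === tag && r.draft);
  if (!draft) {
    throw new Error(`Draft release '${tag}' not found`);
  }
  const findAssetId = (name) => draft.assets.find((a) => a.name === name)?.id;
  const installerId = findAssetId("cozystack-installer.yaml");
  const diskId = findAssetId("nocloud-amd64.raw.xz");
  if (!installerId || !diskId) {
    throw new Error("Missing required assets");
  }
  return { installerId, diskId };
}

// Endpoint used by the "Download assets from GitHub API" step. The request
// must also send "Accept: application/octet-stream" to receive the raw file
// instead of the asset's JSON metadata.
function assetDownloadUrl(repository, assetId) {
  return `https://api.github.com/repos/${repository}/releases/assets/${assetId}`;
}
```

For example, `tagFromBranch("release-0.31.0")` returns `"v0.31.0"`. A branch such as `release-0.31` is rejected, because the pattern requires three numeric components. Throwing keeps the functions testable outside Actions. In the workflow itself, `core.setFailed(...)` followed by `return` marks the step as failed instead.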

`.github/workflows/pull-requests.yaml` (vendored): 45 changes

```
@@ -9,8 +9,8 @@ concurrency:
cancel-in-progress: true

jobs:
build:
name: Build
e2e:
name: Build and Test
runs-on: [self-hosted]
permissions:
contents: read
```

```
@@ -33,50 +33,9 @@ jobs:
username: ${{ github.repository_owner }}
password: ${{ secrets.GITHUB_TOKEN }}
registry: ghcr.io
env:
DOCKER_CONFIG: ${{ runner.temp }}/.docker

- name: Build
run: make build
env:
DOCKER_CONFIG: ${{ runner.temp }}/.docker

- name: Build Talos image
run: make -C packages/core/installer talos-nocloud

- name: Upload installer
uses: actions/upload-artifact@v4
with:
name: cozystack-installer
path: _out/assets/cozystack-installer.yaml

- name: Upload Talos image
uses: actions/upload-artifact@v4
with:
name: talos-image
path: _out/assets/nocloud-amd64.raw.xz

test:
name: Test
runs-on: [self-hosted]
needs: build

# Never run when the PR carries the "release" label.
if: |
!contains(github.event.pull_request.labels.*.name, 'release')

steps:
- name: Download installer
uses: actions/download-artifact@v4
with:
name: cozystack-installer
path: _out/assets/

- name: Download Talos image
uses: actions/download-artifact@v4
with:
name: talos-image
path: _out/assets/

- name: Test
run: make test
```
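The job-level `if:` expressions decide which pipeline handles a pull request:

- The job in `pull-requests-release.yaml` requires the `release` label and an action other than `closed`.
- The `test` job in `pull-requests.yaml` is skipped whenever that label is present.

A minimal sketch of that gating follows. It assumes a simplified event object that carries only `action` and `pull_request.labels[].name`, and the function names are hypothetical. It lowercases values before comparing, because GitHub Actions documents `contains()` and expression string comparisons as case-insensitive. As with the other hunks, the scrape does not show which side of the diff these conditions are on.

```javascript
// Hypothetical model of the job-level `if:` conditions. `event` mimics only
// the pull_request webhook fields that the expressions read.

// GitHub's contains() and string comparisons ignore case, so normalize first.
function hasLabel(event, label) {
  return event.pull_request.labels.some(
    (l) => l.name.toLowerCase() === label.toLowerCase()
  );
}

// contains(github.event.pull_request.labels.*.name, 'release') &&
// github.event.action != 'closed'
function runsReleaseJob(event) {
  return hasLabel(event, "release") && event.action.toLowerCase() !== "closed";
}

// !contains(github.event.pull_request.labels.*.name, 'release')
function runsTestJob(event) {
  return !hasLabel(event, "release");
}
```

With these two conditions, each kind of PR is routed as follows:

- An open PR carrying the `release` label goes only to the release job.
- A PR without that label goes only to the test job.
- A closed PR carrying the label runs neither.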

`.github/workflows/tags.yaml` (vendored): 4 changes

```
@@ -99,15 +99,11 @@ jobs:
username: ${{ github.repository_owner }}
password: ${{ secrets.GITHUB_TOKEN }}
registry: ghcr.io
env:
DOCKER_CONFIG: ${{ runner.temp }}/.docker

# Build project artifacts
- name: Build
if: steps.check_release.outputs.skip == 'false'
run: make build
env:
DOCKER_CONFIG: ${{ runner.temp }}/.docker

# Commit built artifacts
- name: Commit release artifacts
```

`Makefile`: 2 changes

```
@@ -43,7 +43,7 @@ manifests:
(cd packages/core/installer/; helm template -n cozy-installer installer .) > _out/assets/cozystack-installer.yaml

assets:
make -C packages/core/installer assets
make -C packages/core/installer/ assets

test:
make -C packages/core/testing apply
```

12

README.md

12

README.md

@@ -12,15 +12,11 @@

|

||||

|

||||

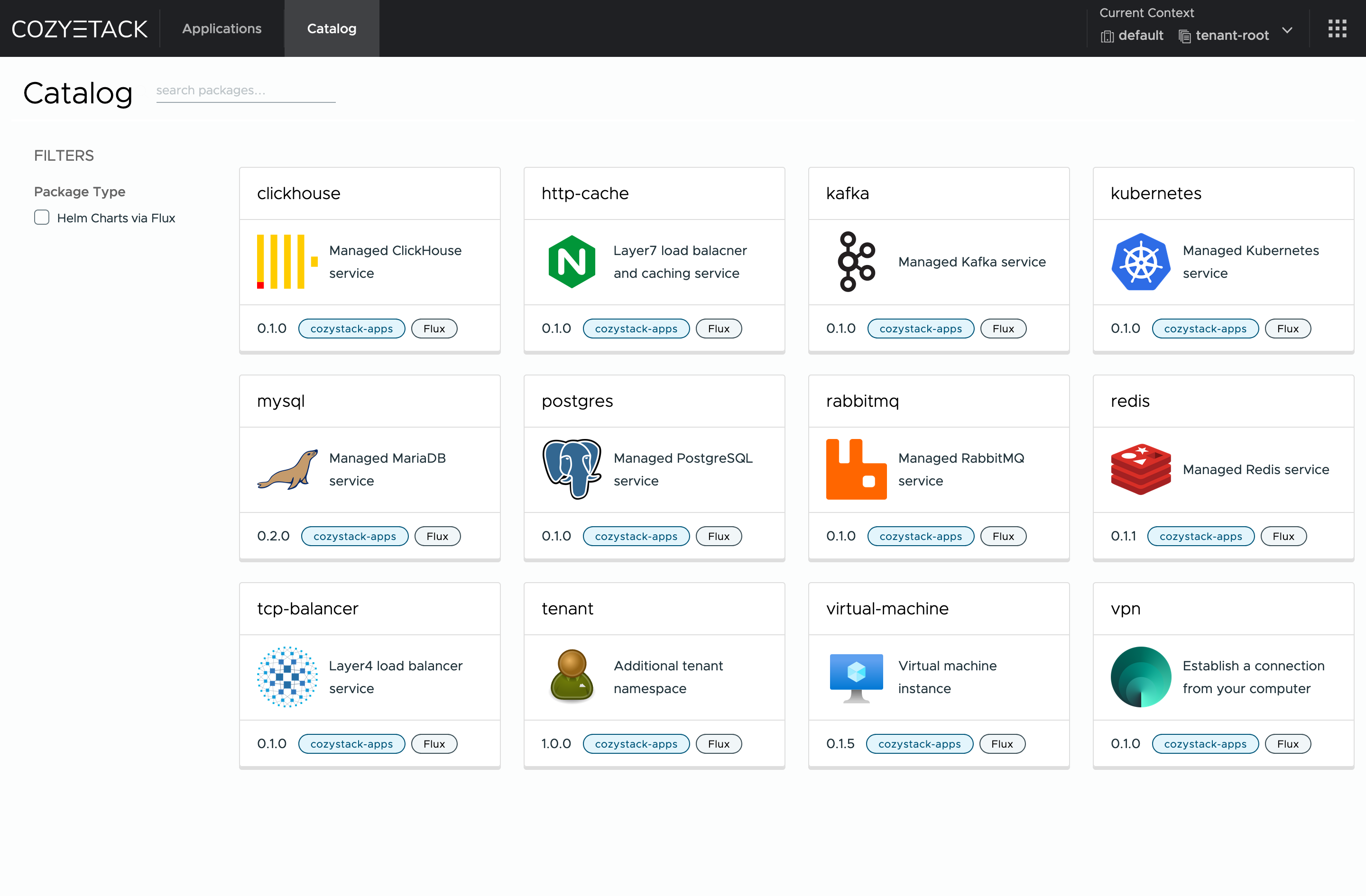

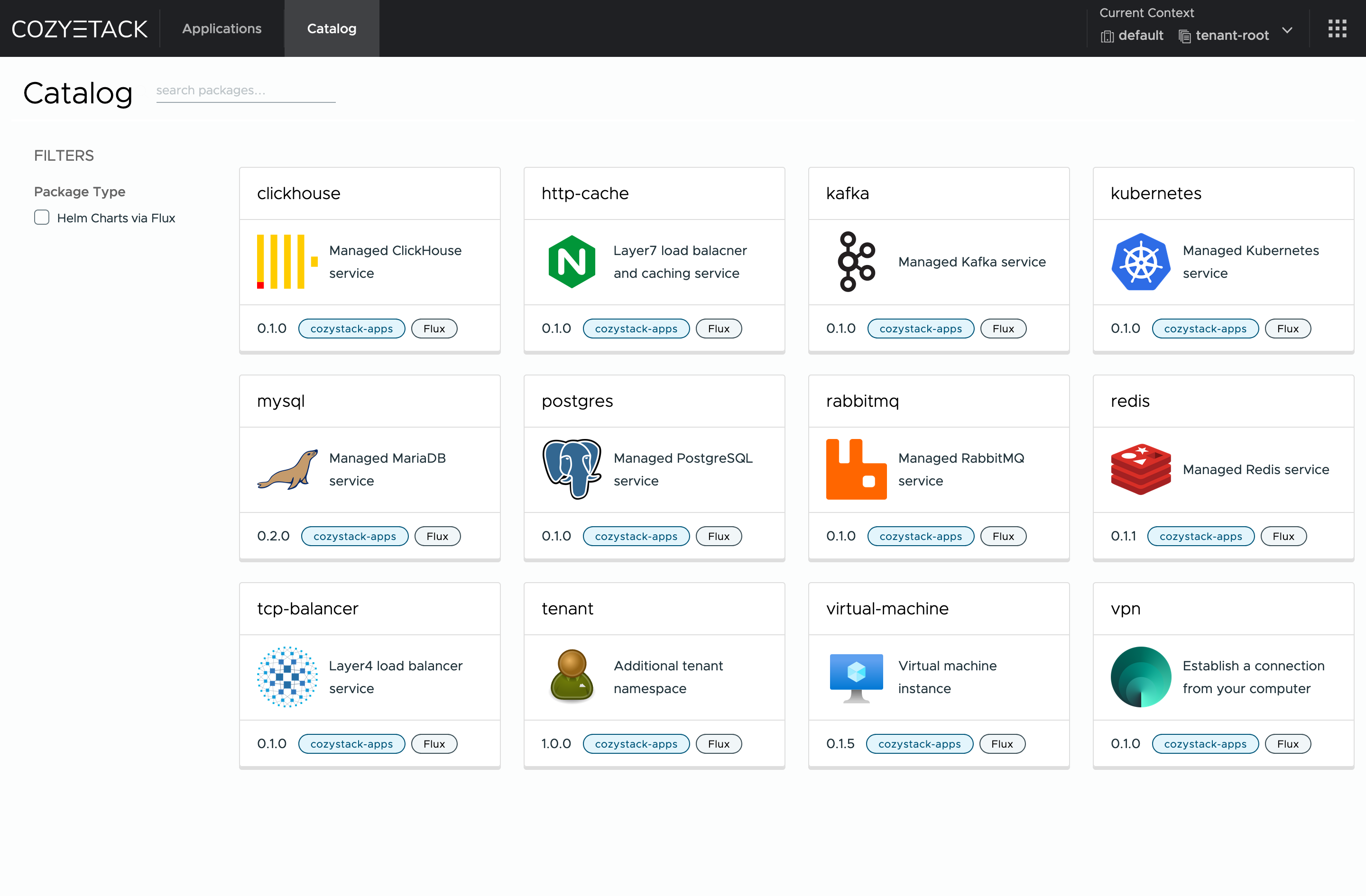

**Cozystack** is a free PaaS platform and framework for building clouds.

|

||||

|

||||

Cozystack is a [CNCF Sandbox Level Project](https://www.cncf.io/sandbox-projects/) that was originally built and sponsored by [Ænix](https://aenix.io/).

|

||||

|

||||

With Cozystack, you can transform a bunch of servers into an intelligent system with a simple REST API for spawning Kubernetes clusters,

|

||||

Database-as-a-Service, virtual machines, load balancers, HTTP caching services, and other services with ease.

|

||||

|

||||

Use Cozystack to build your own cloud or provide a cost-effective development environment.

|

||||

|

||||

|

||||

|

||||

## Use-Cases

|

||||

|

||||

* [**Using Cozystack to build a public cloud**](https://cozystack.io/docs/guides/use-cases/public-cloud/)

|

||||

```
@@ -32,6 +28,9 @@ You can use Cozystack as a platform to build a private cloud powered by Infrastr
* [**Using Cozystack as a Kubernetes distribution**](https://cozystack.io/docs/guides/use-cases/kubernetes-distribution/)
You can use Cozystack as a Kubernetes distribution for Bare Metal

## Screenshot



## Documentation

```

```
@@ -60,10 +59,7 @@ Commits are used to generate the changelog, and their author will be referenced

If you have **Feature Requests** please use the [Discussion's Feature Request section](https://github.com/cozystack/cozystack/discussions/categories/feature-requests).

## Community

You are welcome to join our [Telegram group](https://t.me/cozystack) and come to our weekly community meetings.
Add them to your [Google Calendar](https://calendar.google.com/calendar?cid=ZTQzZDIxZTVjOWI0NWE5NWYyOGM1ZDY0OWMyY2IxZTFmNDMzZTJlNjUzYjU2ZGJiZGE3NGNhMzA2ZjBkMGY2OEBncm91cC5jYWxlbmRhci5nb29nbGUuY29t) or [iCal](https://calendar.google.com/calendar/ical/e43d21e5c9b45a95f28c5d649c2cb1e1f433e2e653b56dbbda74ca306f0d0f68%40group.calendar.google.com/public/basic.ics) for convenience.
You are welcome to join our weekly community meetings (just add this events to your [Google Calendar](https://calendar.google.com/calendar?cid=ZTQzZDIxZTVjOWI0NWE5NWYyOGM1ZDY0OWMyY2IxZTFmNDMzZTJlNjUzYjU2ZGJiZGE3NGNhMzA2ZjBkMGY2OEBncm91cC5jYWxlbmRhci5nb29nbGUuY29t) or [iCal](https://calendar.google.com/calendar/ical/e43d21e5c9b45a95f28c5d649c2cb1e1f433e2e653b56dbbda74ca306f0d0f68%40group.calendar.google.com/public/basic.ics)) or [Telegram group](https://t.me/cozystack).

## License

```

```
@@ -194,15 +194,7 @@ func main() {
Client: mgr.GetClient(),
Scheme: mgr.GetScheme(),
}).SetupWithManager(mgr); err != nil {
setupLog.Error(err, "unable to create controller", "controller", "TenantHelmReconciler")
os.Exit(1)
}

if err = (&controller.CozystackConfigReconciler{
Client: mgr.GetClient(),
Scheme: mgr.GetScheme(),
}).SetupWithManager(mgr); err != nil {
setupLog.Error(err, "unable to create controller", "controller", "CozystackConfigReconciler")
setupLog.Error(err, "unable to create controller", "controller", "Workload")
os.Exit(1)
}
```

```
@@ -1,129 +1,39 @@
Cozystack v0.31.0 is a significant release that brings new features, key fixes, and updates to underlying components.
This version enhances GPU support, improves many components of Cozystack, and introduces a more robust release process to improve stability.
Below, we'll go over the highlights in each area for current users, developers, and our community.
This is the third release candidate for the upcoming Cozystack v0.31.0 release.
The release notes show changes accumulated since the release of previous version, Cozystack v0.30.0.

## Major Features and Improvements
Cozystack 0.31.0 further advances GPU support, monitoring, and all-around convenience features.

### GPU support for tenant Kubernetes clusters
## New Features and Changes

Cozystack now integrates NVIDIA GPU Operator support for tenant Kubernetes clusters.
This enables platform users to run GPU-powered AI/ML applications in their own clusters.
To enable GPU Operator, set `addons.gpuOperator.enabled: true` in the cluster configuration.
(@kvaps in https://github.com/cozystack/cozystack/pull/834)

Check out Andrei Kvapil's CNCF webinar [showcasing the GPU support by running Stable Diffusion in Cozystack](https://www.youtube.com/watch?v=S__h_QaoYEk).

<!--
* [kubernetes] Introduce GPU support for tenant Kubernetes clusters. (@kvaps in https://github.com/cozystack/cozystack/pull/834)
-->

### Cilium Improvements

Cozystack’s Cilium integration received two significant enhancements.
First, Gateway API support in Cilium is now enabled, allowing advanced L4/L7 routing features via Kubernetes Gateway API.
We thank Zdenek Janda @zdenekjanda for contributing this feature in https://github.com/cozystack/cozystack/pull/924.

Second, Cozystack now permits custom user-provided parameters in the tenant cluster’s Cilium configuration.
(@lllamnyp in https://github.com/cozystack/cozystack/pull/917)

<!--
* [cilium] Enable Cilium Gateway API. (@zdenekjanda in https://github.com/cozystack/cozystack/pull/924)
* [cilium] Enable user-added parameters in a tenant cluster Cilium. (@lllamnyp in https://github.com/cozystack/cozystack/pull/917)
-->

### Cross-Architecture Builds (ARM Support Beta)

Cozystack's build system was refactored to support multi-architecture binaries and container images.
This paves the road to running Cozystack on ARM64 servers.
Changes include Makefile improvements (https://github.com/cozystack/cozystack/pull/907)
and multi-arch Docker image builds (https://github.com/cozystack/cozystack/pull/932 and https://github.com/cozystack/cozystack/pull/970).

We thank Nikita Bykov @nbykov0 for his ongoing work on ARM support!

<!--
* Introduce support for cross-architecture builds and Cozystack on ARM:
* [build] Refactor Makefiles introducing build variables. (@nbykov0 in https://github.com/cozystack/cozystack/pull/907)
* [build] Add support for multi-architecture and cross-platform image builds. (@nbykov0 in https://github.com/cozystack/cozystack/pull/932 and https://github.com/cozystack/cozystack/pull/970)
-->

### VerticalPodAutoscaler (VPA) Expansion

The VerticalPodAutoscaler is now enabled for more Cozystack components to automate resource tuning.
Specifically, VPA was added for tenant Kubernetes control planes (@klinch0 in https://github.com/cozystack/cozystack/pull/806),
the Cozystack Dashboard (https://github.com/cozystack/cozystack/pull/828),
and the Cozystack etcd-operator (https://github.com/cozystack/cozystack/pull/850).
All Cozystack components that have VPA enabled can automatically adjust their CPU and memory requests based on usage, improving platform and application stability.

<!--
* Add VerticalPodAutoscaler to a few more components:
* [kubernetes] Kubernetes clusters in user tenants. (@klinch0 in https://github.com/cozystack/cozystack/pull/806)
* [platform] Cozystack dashboard. (@klinch0 in https://github.com/cozystack/cozystack/pull/828)
* [platform] Cozystack etcd-operator (@klinch0 in https://github.com/cozystack/cozystack/pull/850)
-->

### Tenant HelmRelease Reconcile Controller

A new controller was introduced to monitor and synchronize HelmRelease resources across tenants.
This controller propagates configuration changes to tenant workloads and ensures that any HelmRelease defined in a tenant
stays in sync with platform updates.
It improves the reliability of deploying managed applications in Cozystack.
(@klinch0 in https://github.com/cozystack/cozystack/pull/870)

<!--
* Introduce support for cross-architecture builds and Cozystack on ARM:
* [build] Refactor Makefiles introducing build variables. (@nbykov0 in https://github.com/cozystack/cozystack/pull/907)
* [build] Add support for multi-architecture and cross-platform image builds. (@nbykov0 in https://github.com/cozystack/cozystack/pull/932 and https://github.com/cozystack/cozystack/pull/970)
* [platform] Introduce a new controller to synchronize tenant HelmReleases and propagate configuration changes. (@klinch0 in https://github.com/cozystack/cozystack/pull/870)
-->

### Virtual Machine Improvements

**Configurable KubeVirt CPU Overcommit**: The CPU allocation ratio in KubeVirt (how virtual CPUs are overcommitted relative to physical) is now configurable
via the `cpu-allocation-ratio` value in the Cozystack configmap.
This means Cozystack administrators can now tune CPU overcommitment for VMs to balance performance vs. density.
(@lllamnyp in https://github.com/cozystack/cozystack/pull/905)

**KubeVirt VM Export**: Cozystack now allows exporting KubeVirt virtual machines.
This feature, enabled via KubeVirt's `VirtualMachineExport` capability, lets users snapshot or back up VM images.
(@kvaps in https://github.com/cozystack/cozystack/pull/808)

**Support for various storage classes in Virtual Machines**: The `virtual-machine` application (since version 0.9.2) lets you pick any StorageClass for a VM's
system disk instead of relying on a hard-coded PVC.
Refer to values `systemDisk.storage` and `systemDisk.storageClass` in the [application's configs](https://cozystack.io/docs/reference/applications/virtual-machine/#common-parameters).
(@kvaps in https://github.com/cozystack/cozystack/pull/974)

<!--
* [platform] Introduce options `expose-services`, `expose-ingress` and `expose-external-ips` to the ingress service. (@kvaps in https://github.com/cozystack/cozystack/pull/929)
* [kubevirt] Enable exporting VMs. (@kvaps in https://github.com/cozystack/cozystack/pull/808)
* [kubevirt] Make KubeVirt's CPU allocation ratio configurable. (@lllamnyp in https://github.com/cozystack/cozystack/pull/905)
* [virtual-machine] Add support for various storages. (@kvaps in https://github.com/cozystack/cozystack/pull/974)
-->

### Other Features and Improvements

* [platform] Introduce options `expose-services`, `expose-ingress`, and `expose-external-ips` to the ingress service. (@kvaps in https://github.com/cozystack/cozystack/pull/929)
* [cozystack-controller] Record the IP address pool and storage class in Workload objects. (@lllamnyp in https://github.com/cozystack/cozystack/pull/831)
* [cilium] Enable Cilium Gateway API. (@zdenekjanda in https://github.com/cozystack/cozystack/pull/924)
* [cilium] Enable user-added parameters in a tenant cluster Cilium. (@lllamnyp in https://github.com/cozystack/cozystack/pull/917)
* [apps] Remove user-facing config of limits and requests. (@lllamnyp in https://github.com/cozystack/cozystack/pull/935)

## New Release Lifecycle

Cozystack release lifecycle is changing to provide a more stable and predictable lifecycle to customers running Cozystack in mission-critical environments.

* **Gradual Release with Alpha, Beta, and Release Candidates**: Cozystack will now publish pre-release versions (alpha, beta, release candidates) before a stable release.
Starting with v0.31.0, the team made three release candidates before releasing version v0.31.0.
This allows more testing and feedback before marking a release as stable.

* **Prolonged Release Support with Patch Versions**: After the initial `vX.Y.0` release, a long-lived branch `release-X.Y` will be created to backport fixes.
For example, with 0.31.0’s release, a `release-0.31` branch will track patch fixes (`0.31.x`).
This strategy lets Cozystack users receive timely patch releases and updates with minimal risks.

To implement these new changes, we have rebuilt our CI/CD workflows and introduced automation, enabling automatic backports.
You can read more about how it's implemented in the Development section below.

For more information, read the [Cozystack Release Workflow](https://github.com/cozystack/cozystack/blob/main/docs/release.md) documentation.
* Update the Cozystack release policy to include long-lived release branches and start with release candidates. Update CI workflows and docs accordingly.
* Use release branches `release-X.Y` for gathering and releasing fixes after initial `vX.Y.0` release. (@kvaps in https://github.com/cozystack/cozystack/pull/816)
* Automatically create release branches after initial `vX.Y.0` release is published. (@kvaps in https://github.com/cozystack/cozystack/pull/886)
* Introduce Release Candidate versions. Automate patch backporting by applying patches from pull requests labeled `[backport]` to the current release branch. (@kvaps in https://github.com/cozystack/cozystack/pull/841 and https://github.com/cozystack/cozystack/pull/901, @nickvolynkin in https://github.com/cozystack/cozystack/pull/890)
* Support alpha and beta pre-releases. (@kvaps in https://github.com/cozystack/cozystack/pull/978)
* Commit changes in release pipelines under `github-actions <github-actions@github.com>`. (@kvaps in https://github.com/cozystack/cozystack/pull/823)
* Describe the Cozystack release workflow. (@NickVolynkin in https://github.com/cozystack/cozystack/pull/817 and https://github.com/cozystack/cozystack/pull/897)

## Fixes

* [virtual-machine] Add GPU names to the virtual machine specifications. (@kvaps in https://github.com/cozystack/cozystack/pull/862)
* [virtual-machine] Count Workload resources for pods by requests, not limits. Other improvements to VM resource tracking. (@lllamnyp in https://github.com/cozystack/cozystack/pull/904)
* [virtual-machine] Set PortList method by default. (@kvaps in https://github.com/cozystack/cozystack/pull/996)
* [virtual-machine] Specify ports even for wholeIP mode. (@kvaps in https://github.com/cozystack/cozystack/pull/1000)
* [platform] Fix installing HelmReleases on initial setup. (@kvaps in https://github.com/cozystack/cozystack/pull/833)
* [platform] Migration scripts update Kubernetes ConfigMap with the current stack version for improved version tracking. (@klinch0 in https://github.com/cozystack/cozystack/pull/840)
* [platform] Reduce requested CPU and RAM for the `kamaji` provider. (@klinch0 in https://github.com/cozystack/cozystack/pull/825)
```
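The release lifecycle in these notes follows two rules:

- Each tag maps onto a long-lived `release-X.Y` branch.
- That branch is created only once the stable `vX.Y.0` is published.

A small sketch of the mapping follows, under stated assumptions. The pre-release suffix is assumed to be spelled `-alpha.N`, `-beta.N`, or `-rc.N`. `parseTag` and `createsReleaseBranch` are hypothetical helpers, not part of Cozystack's CI.

```javascript
// Hypothetical helpers illustrating the release-branch policy described in
// the notes. The "-alpha.N" / "-beta.N" / "-rc.N" suffix spelling is an assumption.
const TAG_RE = /^v(\d+)\.(\d+)\.(\d+)(?:-(alpha|beta|rc)\.(\d+))?$/;

function parseTag(tag) {
  const m = tag.match(TAG_RE);
  if (!m) return null;
  const [, major, minor, patch, stage, n] = m;
  return {
    major: Number(major),
    minor: Number(minor),
    patch: Number(patch),
    prerelease: stage ? `${stage}.${n}` : null,
    // Fixes for every vX.Y.* release are gathered on release-X.Y.
    releaseBranch: `release-${major}.${minor}`,
  };
}

// A release branch is created only after the stable vX.Y.0 is published;
// pre-releases and later patch releases reuse the existing branch.
function createsReleaseBranch(tag) {
  const t = parseTag(tag);
  return t !== null && t.patch === 0 && t.prerelease === null;
}
```

Under these assumptions, `v0.31.0-rc.3`, `v0.31.0`, and `v0.31.2` all map to `release-0.31`. Only `v0.31.0` triggers branch creation.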

```
@@ -135,8 +45,7 @@ For more information, read the [Cozystack Release Workflow](https://github.com/c
* [kubernetes] Fix merging `valuesOverride` for tenant clusters. (@kvaps in https://github.com/cozystack/cozystack/pull/879)
* [kubernetes] Fix `ubuntu-container-disk` tag. (@kvaps in https://github.com/cozystack/cozystack/pull/887)
* [kubernetes] Refactor Helm manifests for tenant Kubernetes clusters. (@kvaps in https://github.com/cozystack/cozystack/pull/866)
* [kubernetes] Fix Ingress-NGINX depends on Cert-Manager. (@kvaps in https://github.com/cozystack/cozystack/pull/976)
* [kubernetes, apps] Enable `topologySpreadConstraints` for tenant Kubernetes clusters and fix it for managed PostgreSQL. (@klinch0 in https://github.com/cozystack/cozystack/pull/995)
* [kubernetes] Fix Ingress-NGINX depends on Cert-Manager . (@kvaps in https://github.com/cozystack/cozystack/pull/976)
* [tenant] Fix an issue with accessing external IPs of a cluster from the cluster itself. (@kvaps in https://github.com/cozystack/cozystack/pull/854)
* [cluster-api] Remove the no longer necessary workaround for Kamaji. (@kvaps in https://github.com/cozystack/cozystack/pull/867, patched in https://github.com/cozystack/cozystack/pull/956)
* [monitoring] Remove legacy label "POD" from the exclude filter in metrics. (@xy2 in https://github.com/cozystack/cozystack/pull/826)
```

```
@@ -145,13 +54,24 @@ For more information, read the [Cozystack Release Workflow](https://github.com/c
* [postgres] Remove duplicated `template` entry from backup manifest. (@etoshutka in https://github.com/cozystack/cozystack/pull/872)
* [kube-ovn] Fix versions mapping in Makefile. (@kvaps in https://github.com/cozystack/cozystack/pull/883)
* [dx] Automatically detect version for migrations in the installer.sh. (@kvaps in https://github.com/cozystack/cozystack/pull/837)
* [dx] remove version_map and building for library charts. (@kvaps in https://github.com/cozystack/cozystack/pull/998)
* [docs] Review the tenant Kubernetes cluster docs. (@NickVolynkin in https://github.com/cozystack/cozystack/pull/969)
* [docs] Explain that tenants cannot have dashes in their names. (@NickVolynkin in https://github.com/cozystack/cozystack/pull/980)
* [e2e] Increase timeout durations for `capi` and `keycloak` to improve reliability during environment setup. (@kvaps in https://github.com/cozystack/cozystack/pull/858)
* [e2e] Fix `device_ownership_from_security_context` CRI. (@dtrdnk in https://github.com/cozystack/cozystack/pull/896)
* [e2e] Return `genisoimage` to the e2e-test Dockerfile (@gwynbleidd2106 in https://github.com/cozystack/cozystack/pull/962)
* [ci] Improve the check for `versions_map` running on pull requests. (@kvaps and @klinch0 in https://github.com/cozystack/cozystack/pull/836, https://github.com/cozystack/cozystack/pull/842, and https://github.com/cozystack/cozystack/pull/845)
* [ci] If the release step was skipped on a tag, skip tests as well. (@kvaps in https://github.com/cozystack/cozystack/pull/822)
* [ci] Allow CI to cancel the previous job if a new one is scheduled. (@kvaps in https://github.com/cozystack/cozystack/pull/873)
* [ci] Use the correct version name when uploading build assets to the release page. (@kvaps in https://github.com/cozystack/cozystack/pull/876)
* [ci] Stop using `ok-to-test` label to trigger CI in pull requests. (@kvaps in https://github.com/cozystack/cozystack/pull/875)
* [ci] Do not run tests in the release building pipeline. (@kvaps in https://github.com/cozystack/cozystack/pull/882)
* [ci] Fix release branch creation. (@kvaps in https://github.com/cozystack/cozystack/pull/884)
* [ci, dx] Reduce noise in the test logs by suppressing the `wget` progress bar. (@lllamnyp in https://github.com/cozystack/cozystack/pull/865)
* [ci] Revert "automatically trigger tests in releasing PR". (@kvaps in https://github.com/cozystack/cozystack/pull/900)
* [ci] Force-update release branch on tagged main commits . (@kvaps in https://github.com/cozystack/cozystack/pull/977)
* [docs] Explain that tenants cannot have dashes in the names. (@NickVolynkin in https://github.com/cozystack/cozystack/pull/980)

## Dependencies

* MetalLB images are now built in-tree based on version 0.14.9 with additional critical patches. (@lllamnyp in https://github.com/cozystack/cozystack/pull/945)
* MetalLB s now included directly as a patched image based on version 0.14.9. (@lllamnyp in https://github.com/cozystack/cozystack/pull/945)
* Update Kubernetes to v1.32.4. (@kvaps in https://github.com/cozystack/cozystack/pull/949)
* Update Talos Linux to v1.10.1. (@kvaps in https://github.com/cozystack/cozystack/pull/931)
* Update Cilium to v1.17.3. (@kvaps in https://github.com/cozystack/cozystack/pull/848)
```

@@ -163,81 +83,15 @@ For more information, read the [Cozystack Release Workflow](https://github.com/c

|

||||

* Update KamajiControlPlane to edge-25.4.1. (@kvaps in https://github.com/cozystack/cozystack/pull/953, fixed by @nbykov0 in https://github.com/cozystack/cozystack/pull/983)

|

||||

* Update cert-manager to v1.17.2. (@kvaps in https://github.com/cozystack/cozystack/pull/975)

|

||||

|

||||

## Documentation

|

||||

## Maintenance

|

||||

|

||||

* [Installing Talos in Air-Gapped Environment](https://cozystack.io/docs/operations/talos/configuration/air-gapped/):

|

||||

new guide for configuring and bootstrapping Talos Linux clusters in air-gapped environments.

|

||||

(@klinch0 in https://github.com/cozystack/website/pull/203)

|

||||

* Add @klinch0 to CODEOWNERS. (@kvaps in https://github.com/cozystack/cozystack/pull/838)

|

||||

|

||||

* [Cozystack Bundles](https://cozystack.io/docs/guides/bundles/): new page in the learning section explaining how Cozystack bundles work and how to choose a bundle.

|

||||

(@NickVolynkin in https://github.com/cozystack/website/pull/188, https://github.com/cozystack/website/pull/189, and others;

|

||||

updated by @kvaps in https://github.com/cozystack/website/pull/192 and https://github.com/cozystack/website/pull/193)

|

||||

|

||||

* [Managed Application Reference](https://cozystack.io/docs/reference/applications/): A set of new pages in the docs, mirroring application docs from the Cozystack dashboard.

|

||||

(@NickVolynkin in https://github.com/cozystack/website/pull/198, https://github.com/cozystack/website/pull/202, and https://github.com/cozystack/website/pull/204)

|

||||

|

||||

* **LINSTOR Networking**: Guides on [configuring dedicated network for LINSTOR](https://cozystack.io/docs/operations/storage/dedicated-network/)

|

||||

and [configuring network for distributed storage in multi-datacenter setup](https://cozystack.io/docs/operations/stretched/linstor-dedicated-network/).

|

||||

(@xy2, edited by @NickVolynkin in https://github.com/cozystack/website/pull/171, https://github.com/cozystack/website/pull/182, and https://github.com/cozystack/website/pull/184)

|

||||

|

||||

### Fixes

|

||||

|

||||

* Correct error in the doc for the command to edit the configmap. (@lb0o in https://github.com/cozystack/website/pull/207)

|

||||

* Fix group name in OIDC docs (@kingdonb in https://github.com/cozystack/website/pull/179)

|

||||

* A bit more explanation of Docker buildx builders. (@nbykov0 in https://github.com/cozystack/website/pull/187)

|

||||

|

||||

## Development, Testing, and CI/CD

|

||||

|

||||

### Testing

|

||||

|

||||

Improvements:

|

||||

|

||||

* Introduce `cozytest` — a new [BATS-based](https://github.com/bats-core/bats-core) testing framework. (@kvaps in https://github.com/cozystack/cozystack/pull/982)

|

||||

|

||||

Fixes:

|

||||

|

||||

* Fix `device_ownership_from_security_context` CRI. (@dtrdnk in https://github.com/cozystack/cozystack/pull/896)

|

||||

* Increase timeout durations for `capi` and `keycloak` to improve reliability during e2e-tests. (@kvaps in https://github.com/cozystack/cozystack/pull/858)

|

||||

* Return `genisoimage` to the e2e-test Dockerfile (@gwynbleidd2106 in https://github.com/cozystack/cozystack/pull/962)

### CI/CD Changes

Improvements:

* Use `release-X.Y` release branches to collect and release fixes after the initial `vX.Y.0` release. (@kvaps in https://github.com/cozystack/cozystack/pull/816)
* Automatically create a release branch after the initial `vX.Y.0` release is published. (@kvaps in https://github.com/cozystack/cozystack/pull/886)
* Introduce Release Candidate versions. Automate patch backporting by applying patches from pull requests labeled `[backport]` to the current release branch. (@kvaps in https://github.com/cozystack/cozystack/pull/841 and https://github.com/cozystack/cozystack/pull/901, @nickvolynkin in https://github.com/cozystack/cozystack/pull/890)
* Support alpha and beta pre-releases. (@kvaps in https://github.com/cozystack/cozystack/pull/978)
* Commit changes in release pipelines as `github-actions <github-actions@github.com>`. (@kvaps in https://github.com/cozystack/cozystack/pull/823)
* Describe the Cozystack release workflow. (@NickVolynkin in https://github.com/cozystack/cozystack/pull/817 and https://github.com/cozystack/cozystack/pull/897)
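
The tag-to-branch scheme described above can be illustrated with a small Go sketch. It is not the project's workflow code: `parseTag` and `releaseInfo` are hypothetical names, and the accepted suffix grammar (for example `-rc.3` or `-beta.1`) is an assumption modeled loosely on the `release-X.Y.Z[-suffix]` naming that the release workflow checks.

```go
package main

import (
	"fmt"
	"regexp"
)

// tagRe accepts vX.Y.Z with an optional pre-release suffix such as -rc.3 or
// -beta.1. The exact suffix grammar is an assumption for this sketch.
var tagRe = regexp.MustCompile(`^v(\d+)\.(\d+)\.(\d+)(?:-([0-9A-Za-z.-]+))?$`)

// releaseInfo is what the sketch derives from a tag (hypothetical type).
type releaseInfo struct {
	Branch      string // maintenance branch, e.g. release-0.31
	Prerelease  bool   // true for alpha, beta and rc tags
	OpensBranch bool   // true only for the initial final vX.Y.0 release
}

// parseTag maps a tag to its release-X.Y branch (hypothetical helper).
func parseTag(tag string) (releaseInfo, error) {
	m := tagRe.FindStringSubmatch(tag)
	if m == nil {
		return releaseInfo{}, fmt.Errorf("tag %q does not match vX.Y.Z[-suffix]", tag)
	}
	pre := m[4] != ""
	return releaseInfo{
		Branch:      fmt.Sprintf("release-%s.%s", m[1], m[2]),
		Prerelease:  pre,
		OpensBranch: m[3] == "0" && !pre,
	}, nil
}

func main() {
	for _, tag := range []string{"v0.31.0", "v0.31.0-rc.3", "v0.31.2", "v0.32.0-beta.1", "release-0.31"} {
		info, err := parseTag(tag)
		if err != nil {
			fmt.Println("skip:", err)
			continue
		}
		fmt.Printf("%s -> %s (pre-release: %t, opens branch: %t)\n",
			tag, info.Branch, info.Prerelease, info.OpensBranch)
	}
}
```

Under these assumptions only a final `vX.Y.0` tag opens a new `release-X.Y` branch; patch tags such as `v0.31.2` map onto the existing branch, and pre-release tags are only classified.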

Fixes:

* Improve the `versions_map` check that runs on pull requests. (@kvaps and @klinch0 in https://github.com/cozystack/cozystack/pull/836, https://github.com/cozystack/cozystack/pull/842, and https://github.com/cozystack/cozystack/pull/845)
* Skip tests on a tag when the release step was skipped. (@kvaps in https://github.com/cozystack/cozystack/pull/822)
* Allow CI to cancel the previous job when a new one is scheduled. (@kvaps in https://github.com/cozystack/cozystack/pull/873)
* Use the correct version name when uploading build assets to the release page. (@kvaps in https://github.com/cozystack/cozystack/pull/876)
* Stop using the `ok-to-test` label to trigger CI in pull requests. (@kvaps in https://github.com/cozystack/cozystack/pull/875)
* Do not run tests in the release build pipeline. (@kvaps in https://github.com/cozystack/cozystack/pull/882)
* Fix release branch creation. (@kvaps in https://github.com/cozystack/cozystack/pull/884)
* Reduce noise in the test logs by suppressing the `wget` progress bar. (@lllamnyp in https://github.com/cozystack/cozystack/pull/865)
* Revert "automatically trigger tests in releasing PR". (@kvaps in https://github.com/cozystack/cozystack/pull/900)
* Force-update the release branch on tagged `main` commits. (@kvaps in https://github.com/cozystack/cozystack/pull/977)
* Show detailed errors in the `pull-request-release` workflow. (@lllamnyp in https://github.com/cozystack/cozystack/pull/992)

## Community and Maintenance

### Repository Maintenance

Added @klinch0 to CODEOWNERS. (@kvaps in https://github.com/cozystack/cozystack/pull/838)

### New Contributors

## New Contributors

* @etoshutka made their first contribution in https://github.com/cozystack/cozystack/pull/872
* @dtrdnk made their first contribution in https://github.com/cozystack/cozystack/pull/896
* @zdenekjanda made their first contribution in https://github.com/cozystack/cozystack/pull/924
* @gwynbleidd2106 made their first contribution in https://github.com/cozystack/cozystack/pull/962

## Full Changelog

See https://github.com/cozystack/cozystack/compare/v0.30.0...v0.31.0

**Full Changelog**: https://github.com/cozystack/cozystack/compare/v0.30.0...v0.31.0-rc.3

13

go.mod

@@ -37,7 +37,6 @@ require (
github.com/coreos/go-systemd/v22 v22.5.0 // indirect
github.com/davecgh/go-spew v1.1.2-0.20180830191138-d8f796af33cc // indirect
github.com/emicklei/go-restful/v3 v3.11.0 // indirect
github.com/evanphx/json-patch v4.12.0+incompatible // indirect
github.com/evanphx/json-patch/v5 v5.9.0 // indirect
github.com/felixge/httpsnoop v1.0.4 // indirect
github.com/fluxcd/pkg/apis/kustomize v1.6.1 // indirect

@@ -92,14 +91,14 @@ require (
go.opentelemetry.io/proto/otlp v1.3.1 // indirect
go.uber.org/multierr v1.11.0 // indirect
go.uber.org/zap v1.27.0 // indirect
golang.org/x/crypto v0.31.0 // indirect
golang.org/x/crypto v0.28.0 // indirect
golang.org/x/exp v0.0.0-20240719175910-8a7402abbf56 // indirect
golang.org/x/net v0.33.0 // indirect
golang.org/x/net v0.30.0 // indirect
golang.org/x/oauth2 v0.23.0 // indirect
golang.org/x/sync v0.10.0 // indirect
golang.org/x/sys v0.28.0 // indirect
golang.org/x/term v0.27.0 // indirect
golang.org/x/text v0.21.0 // indirect
golang.org/x/sync v0.8.0 // indirect
golang.org/x/sys v0.26.0 // indirect
golang.org/x/term v0.25.0 // indirect
golang.org/x/text v0.19.0 // indirect
golang.org/x/time v0.7.0 // indirect
golang.org/x/tools v0.26.0 // indirect
gomodules.xyz/jsonpatch/v2 v2.4.0 // indirect

28

go.sum

@@ -26,8 +26,8 @@ github.com/dustin/go-humanize v1.0.1 h1:GzkhY7T5VNhEkwH0PVJgjz+fX1rhBrR7pRT3mDkp
github.com/dustin/go-humanize v1.0.1/go.mod h1:Mu1zIs6XwVuF/gI1OepvI0qD18qycQx+mFykh5fBlto=
github.com/emicklei/go-restful/v3 v3.11.0 h1:rAQeMHw1c7zTmncogyy8VvRZwtkmkZ4FxERmMY4rD+g=
github.com/emicklei/go-restful/v3 v3.11.0/go.mod h1:6n3XBCmQQb25CM2LCACGz8ukIrRry+4bhvbpWn3mrbc=
github.com/evanphx/json-patch v4.12.0+incompatible h1:4onqiflcdA9EOZ4RxV643DvftH5pOlLGNtQ5lPWQu84=
github.com/evanphx/json-patch v4.12.0+incompatible/go.mod h1:50XU6AFN0ol/bzJsmQLiYLvXMP4fmwYFNcr97nuDLSk=
github.com/evanphx/json-patch v0.5.2 h1:xVCHIVMUu1wtM/VkR9jVZ45N3FhZfYMMYGorLCR8P3k=
github.com/evanphx/json-patch v0.5.2/go.mod h1:ZWS5hhDbVDyob71nXKNL0+PWn6ToqBHMikGIFbs31qQ=
github.com/evanphx/json-patch/v5 v5.9.0 h1:kcBlZQbplgElYIlo/n1hJbls2z/1awpXxpRi0/FOJfg=
github.com/evanphx/json-patch/v5 v5.9.0/go.mod h1:VNkHZ/282BpEyt/tObQO8s5CMPmYYq14uClGH4abBuQ=
github.com/felixge/httpsnoop v1.0.4 h1:NFTV2Zj1bL4mc9sqWACXbQFVBBg2W3GPvqp8/ESS2Wg=

@@ -212,8 +212,8 @@ go.uber.org/zap v1.27.0/go.mod h1:GB2qFLM7cTU87MWRP2mPIjqfIDnGu+VIO4V/SdhGo2E=
golang.org/x/crypto v0.0.0-20190308221718-c2843e01d9a2/go.mod h1:djNgcEr1/C05ACkg1iLfiJU5Ep61QUkGW8qpdssI0+w=
golang.org/x/crypto v0.0.0-20191011191535-87dc89f01550/go.mod h1:yigFU9vqHzYiE8UmvKecakEJjdnWj3jj499lnFckfCI=
golang.org/x/crypto v0.0.0-20200622213623-75b288015ac9/go.mod h1:LzIPMQfyMNhhGPhUkYOs5KpL4U8rLKemX1yGLhDgUto=
golang.org/x/crypto v0.31.0 h1:ihbySMvVjLAeSH1IbfcRTkD/iNscyz8rGzjF/E5hV6U=
golang.org/x/crypto v0.31.0/go.mod h1:kDsLvtWBEx7MV9tJOj9bnXsPbxwJQ6csT/x4KIN4Ssk=
golang.org/x/crypto v0.28.0 h1:GBDwsMXVQi34v5CCYUm2jkJvu4cbtru2U4TN2PSyQnw=
golang.org/x/crypto v0.28.0/go.mod h1:rmgy+3RHxRZMyY0jjAJShp2zgEdOqj2AO7U0pYmeQ7U=
golang.org/x/exp v0.0.0-20240719175910-8a7402abbf56 h1:2dVuKD2vS7b0QIHQbpyTISPd0LeHDbnYEryqj5Q1ug8=
golang.org/x/exp v0.0.0-20240719175910-8a7402abbf56/go.mod h1:M4RDyNAINzryxdtnbRXRL/OHtkFuWGRjvuhBJpk2IlY=
golang.org/x/mod v0.2.0/go.mod h1:s0Qsj1ACt9ePp/hMypM3fl4fZqREWJwdYDEqhRiZZUA=

@@ -222,26 +222,26 @@ golang.org/x/net v0.0.0-20190404232315-eb5bcb51f2a3/go.mod h1:t9HGtf8HONx5eT2rtn
golang.org/x/net v0.0.0-20190620200207-3b0461eec859/go.mod h1:z5CRVTTTmAJ677TzLLGU+0bjPO0LkuOLi4/5GtJWs/s=
golang.org/x/net v0.0.0-20200226121028-0de0cce0169b/go.mod h1:z5CRVTTTmAJ677TzLLGU+0bjPO0LkuOLi4/5GtJWs/s=
golang.org/x/net v0.0.0-20201021035429-f5854403a974/go.mod h1:sp8m0HH+o8qH0wwXwYZr8TS3Oi6o0r6Gce1SSxlDquU=
golang.org/x/net v0.33.0 h1:74SYHlV8BIgHIFC/LrYkOGIwL19eTYXQ5wc6TBuO36I=
golang.org/x/net v0.33.0/go.mod h1:HXLR5J+9DxmrqMwG9qjGCxZ+zKXxBru04zlTvWlWuN4=
golang.org/x/net v0.30.0 h1:AcW1SDZMkb8IpzCdQUaIq2sP4sZ4zw+55h6ynffypl4=
golang.org/x/net v0.30.0/go.mod h1:2wGyMJ5iFasEhkwi13ChkO/t1ECNC4X4eBKkVFyYFlU=
golang.org/x/oauth2 v0.23.0 h1:PbgcYx2W7i4LvjJWEbf0ngHV6qJYr86PkAV3bXdLEbs=
golang.org/x/oauth2 v0.23.0/go.mod h1:XYTD2NtWslqkgxebSiOHnXEap4TF09sJSc7H1sXbhtI=
golang.org/x/sync v0.0.0-20190423024810-112230192c58/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=
golang.org/x/sync v0.0.0-20190911185100-cd5d95a43a6e/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=
golang.org/x/sync v0.0.0-20201020160332-67f06af15bc9/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=
golang.org/x/sync v0.10.0 h1:3NQrjDixjgGwUOCaF8w2+VYHv0Ve/vGYSbdkTa98gmQ=
golang.org/x/sync v0.10.0/go.mod h1:Czt+wKu1gCyEFDUtn0jG5QVvpJ6rzVqr5aXyt9drQfk=
golang.org/x/sync v0.8.0 h1:3NFvSEYkUoMifnESzZl15y791HH1qU2xm6eCJU5ZPXQ=
golang.org/x/sync v0.8.0/go.mod h1:Czt+wKu1gCyEFDUtn0jG5QVvpJ6rzVqr5aXyt9drQfk=
golang.org/x/sys v0.0.0-20190215142949-d0b11bdaac8a/go.mod h1:STP8DvDyc/dI5b8T5hshtkjS+E42TnysNCUPdjciGhY=
golang.org/x/sys v0.0.0-20190412213103-97732733099d/go.mod h1:h1NjWce9XRLGQEsW7wpKNCjG9DtNlClVuFLEZdDNbEs=
golang.org/x/sys v0.0.0-20200930185726-fdedc70b468f/go.mod h1:h1NjWce9XRLGQEsW7wpKNCjG9DtNlClVuFLEZdDNbEs=
golang.org/x/sys v0.28.0 h1:Fksou7UEQUWlKvIdsqzJmUmCX3cZuD2+P3XyyzwMhlA=
golang.org/x/sys v0.28.0/go.mod h1:/VUhepiaJMQUp4+oa/7Zr1D23ma6VTLIYjOOTFZPUcA=
golang.org/x/term v0.27.0 h1:WP60Sv1nlK1T6SupCHbXzSaN0b9wUmsPoRS9b61A23Q=
golang.org/x/term v0.27.0/go.mod h1:iMsnZpn0cago0GOrHO2+Y7u7JPn5AylBrcoWkElMTSM=
golang.org/x/sys v0.26.0 h1:KHjCJyddX0LoSTb3J+vWpupP9p0oznkqVk/IfjymZbo=
golang.org/x/sys v0.26.0/go.mod h1:/VUhepiaJMQUp4+oa/7Zr1D23ma6VTLIYjOOTFZPUcA=
golang.org/x/term v0.25.0 h1:WtHI/ltw4NvSUig5KARz9h521QvRC8RmF/cuYqifU24=
golang.org/x/term v0.25.0/go.mod h1:RPyXicDX+6vLxogjjRxjgD2TKtmAO6NZBsBRfrOLu7M=
golang.org/x/text v0.3.0/go.mod h1:NqM8EUOU14njkJ3fqMW+pc6Ldnwhi/IjpwHt7yyuwOQ=
golang.org/x/text v0.3.3/go.mod h1:5Zoc/QRtKVWzQhOtBMvqHzDpF6irO9z98xDceosuGiQ=
golang.org/x/text v0.21.0 h1:zyQAAkrwaneQ066sspRyJaG9VNi/YJ1NfzcGB3hZ/qo=
golang.org/x/text v0.21.0/go.mod h1:4IBbMaMmOPCJ8SecivzSH54+73PCFmPWxNTLm+vZkEQ=
golang.org/x/text v0.19.0 h1:kTxAhCbGbxhK0IwgSKiMO5awPoDQ0RpfiVYBfK860YM=
golang.org/x/text v0.19.0/go.mod h1:BuEKDfySbSR4drPmRPG/7iBdf8hvFMuRexcpahXilzY=
golang.org/x/time v0.7.0 h1:ntUhktv3OPE6TgYxXWv9vKvUSJyIFJlyohwbkEwPrKQ=
golang.org/x/time v0.7.0/go.mod h1:3BpzKBy/shNhVucY/MWOyx10tF3SFh9QdLuxbVysPQM=
golang.org/x/tools v0.0.0-20180917221912-90fa682c2a6e/go.mod h1:n7NCudcB/nEzxVGmLbDWY5pfWTLqBcC2KZ6jyYvM4mQ=

@@ -23,7 +23,7 @@ EOF
kubectl wait namespace tenant-test --timeout=20s --for=jsonpath='{.status.phase}'=Active
}

@test "Create a tenant Kubernetes control plane" {
@test "Create a tenant Kubernetes cluster" {
kubectl create -f - <<EOF
apiVersion: apps.cozystack.io/v1alpha1
kind: Kubernetes

@@ -90,5 +90,5 @@ EOF
kubectl wait tcp -n tenant-test kubernetes-test --timeout=2m --for=jsonpath='{.status.kubernetesResources.version.status}'=Ready
kubectl wait deploy --timeout=4m --for=condition=available -n tenant-test kubernetes-test kubernetes-test-cluster-autoscaler kubernetes-test-kccm kubernetes-test-kcsi-controller
kubectl wait machinedeployment kubernetes-test-md0 -n tenant-test --timeout=1m --for=jsonpath='{.status.replicas}'=2
kubectl wait machinedeployment kubernetes-test-md0 -n tenant-test --timeout=10m --for=jsonpath='{.status.v1beta2.readyReplicas}'=2
kubectl wait machinedeployment kubernetes-test-md0 -n tenant-test --timeout=8m --for=jsonpath='{.status.v1beta2.readyReplicas}'=2
}

@@ -3,14 +3,12 @@
# Cozystack end‑to‑end provisioning test (Bats)
# -----------------------------------------------------------------------------

@test "Required installer assets exist" {
if [ ! -f _out/assets/cozystack-installer.yaml ]; then
echo "Missing: _out/assets/cozystack-installer.yaml" >&2
exit 1
fi

if [ ! -f _out/assets/nocloud-amd64.raw.xz ]; then
echo "Missing: _out/assets/nocloud-amd64.raw.xz" >&2
@test "Environment variable COZYSTACK_INSTALLER_YAML is defined" {
if [ -z "${COZYSTACK_INSTALLER_YAML:-}" ]; then
echo 'COZYSTACK_INSTALLER_YAML environment variable is not set!' >&2
echo >&2
echo 'Please export it with the following command:' >&2
echo ' export COZYSTACK_INSTALLER_YAML=$(helm template -n cozy-system installer packages/core/installer)' >&2
exit 1
fi
}

@@ -72,21 +70,19 @@ EOF
done
}

@test "Use Talos NoCloud image from assets" {
if [ ! -f _out/assets/nocloud-amd64.raw.xz ]; then
echo "Missing _out/assets/nocloud-amd64.raw.xz" >&2
exit 1
@test "Download Talos NoCloud image" {
if [ ! -f nocloud-amd64.raw ]; then
wget https://github.com/cozystack/cozystack/releases/latest/download/nocloud-amd64.raw.xz \
-O nocloud-amd64.raw.xz --show-progress --output-file /dev/stdout --progress=dot:giga 2>/dev/null
rm -f nocloud-amd64.raw
xz --decompress nocloud-amd64.raw.xz
fi

rm -f nocloud-amd64.raw
cp _out/assets/nocloud-amd64.raw.xz .
xz --decompress nocloud-amd64.raw.xz
}

@test "Prepare VM disks" {
for i in 1 2 3; do
cp nocloud-amd64.raw srv${i}/system.img
qemu-img resize srv${i}/system.img 50G
qemu-img resize srv${i}/system.img 20G
qemu-img create srv${i}/data.img 100G
done
}

@@ -102,7 +98,7 @@ EOF

@test "Boot QEMU VMs" {
for i in 1 2 3; do
qemu-system-x86_64 -machine type=pc,accel=kvm -cpu host -smp 8 -m 24576 \
qemu-system-x86_64 -machine type=pc,accel=kvm -cpu host -smp 8 -m 16384 \
-device virtio-net,netdev=net0,mac=52:54:00:12:34:5${i} \
-netdev tap,id=net0,ifname=cozy-srv${i},script=no,downscript=no \
-drive file=srv${i}/system.img,if=virtio,format=raw \

@@ -247,8 +243,8 @@ EOF
--from-literal=api-server-endpoint=https://192.168.123.10:6443 \
--dry-run=client -o yaml | kubectl apply -f -

# Apply installer manifests from file
kubectl apply -f _out/assets/cozystack-installer.yaml
# Apply installer manifests from env variable
echo "$COZYSTACK_INSTALLER_YAML" | kubectl apply -f -

# Wait for the installer deployment to become available
kubectl wait deployment/cozystack -n cozy-system --timeout=1m --for=condition=Available

@@ -1,139 +0,0 @@
package controller

import (
	"context"
	"crypto/sha256"
	"encoding/hex"
	"fmt"
	"sort"
	"time"

	helmv2 "github.com/fluxcd/helm-controller/api/v2"
	corev1 "k8s.io/api/core/v1"
	kerrors "k8s.io/apimachinery/pkg/api/errors"
	"k8s.io/apimachinery/pkg/runtime"
	ctrl "sigs.k8s.io/controller-runtime"
	"sigs.k8s.io/controller-runtime/pkg/client"
	"sigs.k8s.io/controller-runtime/pkg/event"
	"sigs.k8s.io/controller-runtime/pkg/log"
	"sigs.k8s.io/controller-runtime/pkg/predicate"
)

type CozystackConfigReconciler struct {
	client.Client
	Scheme *runtime.Scheme
}

var configMapNames = []string{"cozystack", "cozystack-branding", "cozystack-scheduling"}

const configMapNamespace = "cozy-system"
const digestAnnotation = "cozystack.io/cozy-config-digest"
const forceReconcileKey = "reconcile.fluxcd.io/forceAt"
const requestedAt = "reconcile.fluxcd.io/requestedAt"

func (r *CozystackConfigReconciler) Reconcile(ctx context.Context, _ ctrl.Request) (ctrl.Result, error) {
	log := log.FromContext(ctx)

	digest, err := r.computeDigest(ctx)
	if err != nil {
		log.Error(err, "failed to compute config digest")
		return ctrl.Result{}, nil
	}

	var helmList helmv2.HelmReleaseList
	if err := r.List(ctx, &helmList); err != nil {
		return ctrl.Result{}, fmt.Errorf("failed to list HelmReleases: %w", err)
	}

	now := time.Now().Format(time.RFC3339Nano)
	updated := 0

	for _, hr := range helmList.Items {
		isSystemApp := hr.Labels["cozystack.io/system-app"] == "true"
		isTenantRoot := hr.Namespace == "tenant-root" && hr.Name == "tenant-root"
		if !isSystemApp && !isTenantRoot {
			continue
		}

		if hr.Annotations == nil {
			hr.Annotations = map[string]string{}
		}

		if hr.Annotations[digestAnnotation] == digest {
			continue
		}

		patch := client.MergeFrom(hr.DeepCopy())
		hr.Annotations[digestAnnotation] = digest
		hr.Annotations[forceReconcileKey] = now
		hr.Annotations[requestedAt] = now

		if err := r.Patch(ctx, &hr, patch); err != nil {
			log.Error(err, "failed to patch HelmRelease", "name", hr.Name, "namespace", hr.Namespace)
			continue
		}
		updated++
		log.Info("patched HelmRelease with new config digest", "name", hr.Name, "namespace", hr.Namespace)
	}

	log.Info("finished reconciliation", "updatedHelmReleases", updated)
	return ctrl.Result{}, nil
}

func (r *CozystackConfigReconciler) computeDigest(ctx context.Context) (string, error) {
	hash := sha256.New()

	for _, name := range configMapNames {
		var cm corev1.ConfigMap
		err := r.Get(ctx, client.ObjectKey{Namespace: configMapNamespace, Name: name}, &cm)
		if err != nil {
			if kerrors.IsNotFound(err) {
				continue // ignore missing
			}
			return "", err
		}

		// Sort keys for consistent hashing
		var keys []string
		for k := range cm.Data {
			keys = append(keys, k)
		}
		sort.Strings(keys)

		for _, k := range keys {
			v := cm.Data[k]
			fmt.Fprintf(hash, "%s:%s=%s\n", name, k, v)
		}
	}

	return hex.EncodeToString(hash.Sum(nil)), nil
}

func (r *CozystackConfigReconciler) SetupWithManager(mgr ctrl.Manager) error {
	return ctrl.NewControllerManagedBy(mgr).
		WithEventFilter(predicate.Funcs{
			UpdateFunc: func(e event.UpdateEvent) bool {
				cm, ok := e.ObjectNew.(*corev1.ConfigMap)
				return ok && cm.Namespace == configMapNamespace && contains(configMapNames, cm.Name)
			},
			CreateFunc: func(e event.CreateEvent) bool {
				cm, ok := e.Object.(*corev1.ConfigMap)
				return ok && cm.Namespace == configMapNamespace && contains(configMapNames, cm.Name)
			},
			DeleteFunc: func(e event.DeleteEvent) bool {
				cm, ok := e.Object.(*corev1.ConfigMap)
				return ok && cm.Namespace == configMapNamespace && contains(configMapNames, cm.Name)
			},
		}).
		For(&corev1.ConfigMap{}).
		Complete(r)
}

func contains(slice []string, val string) bool {
	for _, s := range slice {
		if s == val {
			return true
		}
	}
	return false
}
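
The digest logic in `computeDigest` can be tried without a cluster. The following is a minimal standalone sketch, assuming the ConfigMap contents are already available as plain maps; the function name `configDigest` and the sample data (including the key `example-key`) are hypothetical. It keeps the same `name:key=value` line format and the key sorting that makes the hash independent of Go's randomized map iteration order.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
	"sort"
)

// configDigest hashes ConfigMap data the way computeDigest does:
// ConfigMaps in the given order, keys sorted, one "name:key=value" line each.
// A ConfigMap missing from data is skipped, like a NotFound error above.
func configDigest(names []string, data map[string]map[string]string) string {
	hash := sha256.New()
	for _, name := range names {
		cm, ok := data[name]
		if !ok {
			continue
		}
		keys := make([]string, 0, len(cm))
		for k := range cm {
			keys = append(keys, k)
		}
		sort.Strings(keys) // map iteration order is random in Go
		for _, k := range keys {
			fmt.Fprintf(hash, "%s:%s=%s\n", name, k, cm[k])
		}
	}
	return hex.EncodeToString(hash.Sum(nil))
}

func main() {
	names := []string{"cozystack", "cozystack-branding", "cozystack-scheduling"}
	before := map[string]map[string]string{
		"cozystack": {"cluster-domain": "cozy.local", "example-key": "a"},
	}
	after := map[string]map[string]string{
		"cozystack": {"cluster-domain": "cozy.local", "example-key": "b"},
	}
	d1 := configDigest(names, before)
	fmt.Println(d1 == configDigest(names, before)) // same input, same digest: true
	fmt.Println(d1 == configDigest(names, after))  // changed value, new digest: false
}
```

Because the digest only changes when a watched key or value changes, comparing it with the `cozystack.io/cozy-config-digest` annotation lets the reconciler skip HelmReleases that are already up to date instead of forcing a Flux reconcile on every event.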

@@ -248,24 +248,15 @@ func (r *WorkloadMonitorReconciler) reconcilePodForMonitor(
ObjectMeta: metav1.ObjectMeta{
Name: fmt.Sprintf("pod-%s", pod.Name),
Namespace: pod.Namespace,
Labels: map[string]string{},
},
}

metaLabels := r.getWorkloadMetadata(&pod)
_, err := ctrl.CreateOrUpdate(ctx, r.Client, workload, func() error {
// Update owner references with the new monitor
updateOwnerReferences(workload.GetObjectMeta(), monitor)

// Copy labels from the Pod if needed
for k, v := range pod.Labels {
workload.Labels[k] = v
}

// Add workload meta to labels
for k, v := range metaLabels {
workload.Labels[k] = v
}
workload.Labels = pod.Labels

// Fill Workload status fields:
workload.Status.Kind = monitor.Spec.Kind

@@ -442,12 +433,3 @@ func mapObjectToMonitor[T client.Object](_ T, c client.Client) func(ctx context.
return requests
}
}

func (r *WorkloadMonitorReconciler) getWorkloadMetadata(obj client.Object) map[string]string {
labels := make(map[string]string)
annotations := obj.GetAnnotations()
if instanceType, ok := annotations["kubevirt.io/cluster-instancetype-name"]; ok {
labels["workloads.cozystack.io/kubevirt-vmi-instance-type"] = instanceType
}
return labels
}
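
The two sides of these hunks treat `workload.Labels` differently: one copies the pod labels and the workload metadata labels into the existing map, the other assigns `workload.Labels = pod.Labels`, which drops the metadata labels and makes both objects share one map. A small Go sketch of the copying approach shows the difference; `mergeLabels` is a hypothetical helper and `u1.medium` is only an example instance-type value.

```go
package main

import "fmt"

// mergeLabels returns a new map with the pod labels plus the workload
// metadata labels; metadata wins on conflicting keys. Neither input is modified.
func mergeLabels(podLabels, meta map[string]string) map[string]string {
	out := make(map[string]string, len(podLabels)+len(meta))
	for k, v := range podLabels {
		out[k] = v
	}
	for k, v := range meta {
		out[k] = v
	}
	return out
}

func main() {
	pod := map[string]string{"app": "demo"}
	meta := map[string]string{
		"workloads.cozystack.io/kubevirt-vmi-instance-type": "u1.medium", // example value
	}

	merged := mergeLabels(pod, meta)
	merged["only-on-workload"] = "x" // pod is unaffected

	aliased := pod                    // what `workload.Labels = pod.Labels` does
	aliased["only-on-workload"] = "x" // this also changes pod

	fmt.Println(len(merged), len(pod)) // prints: 3 2
}
```

Building a fresh map also avoids writing into a nil `Labels` map, which panics in Go.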

@@ -16,10 +16,10 @@ type: application
# This is the chart version. This version number should be incremented each time you make changes
# to the chart and its templates, including the app version.
# Versions are expected to follow Semantic Versioning (https://semver.org/)
version: 0.2.0
version: 0.1.0

# This is the version number of the application being deployed. This version number should be
# incremented each time you make changes to the application. Versions are not expected to
# follow Semantic Versioning. They should reflect the version the application is using.
# It is recommended to use it with quotes.
appVersion: "0.2.0"
appVersion: "0.1.0"

@@ -1 +0,0 @@
../../../library/cozy-lib

@@ -18,14 +18,3 @@ rules:
resourceNames:
- {{ .Release.Name }}-ui
verbs: ["get", "list", "watch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
name: {{ .Release.Name }}-dashboard-resources
subjects:
{{ include "cozy-lib.rbac.subjectsForTenantAndAccessLevel" (list "use" .Release.Namespace) }}
roleRef:
kind: Role
name: {{ .Release.Name }}-dashboard-resources
apiGroup: rbac.authorization.k8s.io

@@ -16,7 +16,7 @@ type: application
# This is the chart version. This version number should be incremented each time you make changes
# to the chart and its templates, including the app version.
# Versions are expected to follow Semantic Versioning (https://semver.org/)
version: 0.10.0
version: 0.9.0

# This is the version number of the application being deployed. This version number should be
# incremented each time you make changes to the application. Versions are not expected to

@@ -1,4 +1,4 @@
CLICKHOUSE_BACKUP_TAG = $(shell awk '$$0 ~ /^version:/ {print $$2}' Chart.yaml)
CLICKHOUSE_BACKUP_TAG = $(shell awk '$$1 == "version:" {print $$2}' Chart.yaml)

include ../../../scripts/common-envs.mk
include ../../../scripts/package.mk
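
The two `awk` expressions in the Makefile hunk select the chart version differently (in a Makefile, `$$` escapes a literal `$`): `$0 ~ /^version:/` matches only a line that starts with `version:` in column 0, while `$1 == "version:"` matches any line whose first whitespace-separated field is `version:`, including indented keys. The Go sketch below reproduces both rules; `chartVersion` is a hypothetical helper, the sample chart text is made up, and unlike `awk` it returns only the first match.

```go
package main

import (
	"bufio"
	"fmt"
	"strings"
)

// chartVersion returns the second whitespace-separated field of the first
// matching line, like `print $2` in awk.
//
//	anchored=true:  line must start with "version:"  (awk: $0 ~ /^version:/)
//	anchored=false: first field must equal "version:" (awk: $1 == "version:")
func chartVersion(chartYAML string, anchored bool) (string, bool) {
	sc := bufio.NewScanner(strings.NewReader(chartYAML))
	for sc.Scan() {
		line := sc.Text()
		fields := strings.Fields(line)
		var match bool
		if anchored {
			match = strings.HasPrefix(line, "version:")
		} else {
			match = len(fields) > 0 && fields[0] == "version:"
		}
		if match && len(fields) >= 2 {
			return fields[1], true
		}
	}
	return "", false
}

func main() {
	chart := "apiVersion: v2\nname: example\nversion: 0.10.0\nappVersion: \"0.10.0\"\n"
	v, _ := chartVersion(chart, false)
	fmt.Println(v) // prints: 0.10.0
}
```

Both rules ignore `appVersion:`. The practical difference shows up with indented `version:` keys (for example under `dependencies`): real `awk` prints every match, so the `$1 == "version:"` rule could then yield several lines.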

@@ -1,35 +1,32 @@
# Managed Clickhouse Service

ClickHouse is an open source high-performance and column-oriented SQL database management system (DBMS).
It is used for online analytical processing (OLAP).
Cozystack platform uses Altinity operator to provide ClickHouse.

### How to restore backup:

1. Find a snapshot:
```
restic -r s3:s3.example.org/clickhouse-backups/table_name snapshots
```
find snapshot:
```
restic -r s3:s3.example.org/clickhouse-backups/table_name snapshots
```

2. Restore it:
```
restic -r s3:s3.example.org/clickhouse-backups/table_name restore latest --target /tmp/
```
restore:
```
restic -r s3:s3.example.org/clickhouse-backups/table_name restore latest --target /tmp/
```

For more details, read [Restic: Effective Backup from Stdin](https://blog.aenix.io/restic-effective-backup-from-stdin-4bc1e8f083c1).
more details:
- https://itnext.io/restic-effective-backup-from-stdin-4bc1e8f083c1

## Parameters

### Common parameters

| Name | Description | Value |
| ---------------- | -------------------------------------------------------- | ------ |
| `size` | Size of Persistent Volume for data | `10Gi` |
| `logStorageSize` | Size of Persistent Volume for logs | `2Gi` |
| `shards` | Number of Clickhouse shards | `1` |
| `replicas` | Number of Clickhouse replicas | `2` |
| `storageClass` | StorageClass used to store the data | `""` |
| `logTTL` | TTL (expiration time) for query_log and query_thread_log | `15` |
| Name | Description | Value |
| ---------------- | ----------------------------------- | ------ |
| `size` | Persistent Volume size | `10Gi` |
| `logStorageSize` | Persistent Volume for logs size | `2Gi` |
| `shards` | Number of Clickhouse shards | `1` |
| `replicas` | Number of Clickhouse replicas | `2` |
| `storageClass` | StorageClass used to store the data | `""` |
| `logTTL` | TTL (expiration time) for query_log and query_thread_log | `15` |

### Configuration parameters

@@ -39,32 +36,15 @@ For more details, read [Restic: Effective Backup from Stdin](https://blog.aenix.

### Backup parameters

| Name | Description | Value |
| ------------------------ | --------------------------------------------------------------------------- | ------------------------------------------------------ |
| `backup.enabled` | Enable periodic backups | `false` |
| `backup.s3Region` | AWS S3 region where backups are stored | `us-east-1` |
| `backup.s3Bucket` | S3 bucket used for storing backups | `s3.example.org/clickhouse-backups` |
| `backup.schedule` | Cron schedule for automated backups | `0 2 * * *` |
| `backup.cleanupStrategy` | Retention strategy for cleaning up old backups | `--keep-last=3 --keep-daily=3 --keep-within-weekly=1m` |
| `backup.s3AccessKey` | Access key for S3, used for authentication | `oobaiRus9pah8PhohL1ThaeTa4UVa7gu` |
| `backup.s3SecretKey` | Secret key for S3, used for authentication | `ju3eum4dekeich9ahM1te8waeGai0oog` |
| `backup.resticPassword` | Password for Restic backup encryption | `ChaXoveekoh6eigh4siesheeda2quai0` |
| `resources` | Explicit CPU/memory resource requests and limits for the Clickhouse service | `{}` |
| `resourcesPreset` | Use a common resources preset when `resources` is not set explicitly. | `nano` |

In production environments, it's recommended to set `resources` explicitly.
Example of `resources`:

```yaml
resources:
  limits:
    cpu: 4000m
    memory: 4Gi
  requests:
    cpu: 100m
    memory: 512Mi
```

Allowed values for `resourcesPreset` are `none`, `nano`, `micro`, `small`, `medium`, `large`, `xlarge`, `2xlarge`.
This value is ignored if `resources` value is set.

| Name | Description | Value |
| ------------------------ | ----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | ------------------------------------------------------ |
| `backup.enabled` | Enable periodic backups | `false` |
| `backup.s3Region` | The AWS S3 region where backups are stored | `us-east-1` |
| `backup.s3Bucket` | The S3 bucket used for storing backups | `s3.example.org/clickhouse-backups` |
| `backup.schedule` | Cron schedule for automated backups | `0 2 * * *` |
| `backup.cleanupStrategy` | The strategy for cleaning up old backups | `--keep-last=3 --keep-daily=3 --keep-within-weekly=1m` |
| `backup.s3AccessKey` | The access key for S3, used for authentication | `oobaiRus9pah8PhohL1ThaeTa4UVa7gu` |
| `backup.s3SecretKey` | The secret key for S3, used for authentication | `ju3eum4dekeich9ahM1te8waeGai0oog` |
| `backup.resticPassword` | The password for Restic backup encryption | `ChaXoveekoh6eigh4siesheeda2quai0` |
| `resources` | Resources | `{}` |
| `resourcesPreset` | Set container resources according to one common preset (allowed values: none, nano, micro, small, medium, large, xlarge, 2xlarge). This is ignored if resources is set (resources is recommended for production). | `nano` |
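
The precedence rule for `resources` and `resourcesPreset` (explicit `resources` win; the preset applies only when `resources` is left empty) can be summarized in a short Go sketch. The helper `resourceSource` is hypothetical, it treats an empty map as "not set" (an assumption that matches the `{}` default), and it does not model the CPU and memory values behind each preset.

```go
package main

import "fmt"

// allowedPresets lists the preset names documented in the tables above.
var allowedPresets = []string{"none", "nano", "micro", "small", "medium", "large", "xlarge", "2xlarge"}

// resourceSource reports which setting decides the container resources.
// Assumption: an empty or nil `resources` map counts as "not set".
func resourceSource(resources map[string]map[string]string, preset string) (string, error) {
	if len(resources) > 0 {
		return "resources", nil // explicit values win; the preset is ignored
	}
	for _, p := range allowedPresets {
		if p == preset {
			return "resourcesPreset=" + preset, nil
		}
	}
	return "", fmt.Errorf("unknown resourcesPreset %q", preset)
}

func main() {
	explicit := map[string]map[string]string{
		"limits":   {"cpu": "4000m", "memory": "4Gi"},
		"requests": {"cpu": "100m", "memory": "512Mi"},
	}
	for _, tc := range []struct {
		res    map[string]map[string]string
		preset string
	}{{nil, "nano"}, {explicit, "nano"}, {nil, "huge"}} {
		src, err := resourceSource(tc.res, tc.preset)
		fmt.Println(src, err)
	}
}
```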

@@ -1 +1 @@
ghcr.io/cozystack/cozystack/clickhouse-backup:0.10.0@sha256:3faf7a4cebf390b9053763107482de175aa0fdb88c1e77424fd81100b1c3a205
ghcr.io/cozystack/cozystack/clickhouse-backup:0.9.0@sha256:3faf7a4cebf390b9053763107482de175aa0fdb88c1e77424fd81100b1c3a205

@@ -1,5 +1,3 @@
{{- $cozyConfig := lookup "v1" "ConfigMap" "cozy-system" "cozystack" }}
{{- $clusterDomain := (index $cozyConfig.data "cluster-domain") | default "cozy.local" }}
{{- $existingSecret := lookup "v1" "Secret" .Release.Namespace (printf "%s-credentials" .Release.Name) }}
{{- $passwords := dict }}
{{- $users := .Values.users }}

@@ -34,7 +32,7 @@ kind: "ClickHouseInstallation"
metadata:
name: "{{ .Release.Name }}"
spec:
namespaceDomainPattern: "%s.svc.{{ $clusterDomain }}"
namespaceDomainPattern: "%s.svc.cozy.local"
defaults:
templates:
dataVolumeClaimTemplate: data-volume-template

@@ -94,9 +92,6 @@ spec:
templates:
volumeClaimTemplates:
- name: data-volume-template
metadata:
labels:
app.kubernetes.io/instance: {{ .Release.Name }}
spec:
accessModes:
- ReadWriteOnce

@@ -104,9 +99,6 @@ spec:
requests:
storage: {{ .Values.size }}
- name: log-volume-template
metadata:
labels:
app.kubernetes.io/instance: {{ .Release.Name }}