mirror of https://github.com/outbackdingo/cozystack.git

synced 2026-01-28 18:18:41 +00:00

Compare commits: `clickhouse...project-do` (1 commit)

| Author | SHA1 | Date |
|---|---|---|
| | 236f3ce92f | |

`.gitignore` (vendored, 2 changes)

```
@@ -1,3 +1 @@
_out
.git
.idea
```

`README.md` (553 changes)

@@ -10,7 +10,7 @@

# Cozystack

**Cozystack** is a free PaaS platform and framework for building clouds.

**Cozystack** is an open-source **PaaS platform** for cloud providers.

With Cozystack, you can transform a bunch of servers into an intelligent system with a simple REST API for spawning Kubernetes clusters, Database-as-a-Service, virtual machines, load balancers, HTTP caching services, and other services with ease.

@@ -18,55 +18,548 @@ You can use Cozystack to build your own cloud or to provide a cost-effective dev

|

||||

|

||||

## Use-Cases

|

||||

|

||||

* [**Using Cozystack to build public cloud**](https://cozystack.io/docs/use-cases/public-cloud/)

|

||||

You can use Cozystack as backend for a public cloud

|

||||

### As a backend for a public cloud

|

||||

|

||||

* [**Using Cozystack to build private cloud**](https://cozystack.io/docs/use-cases/private-cloud/)

|

||||

You can use Cozystack as platform to build a private cloud powered by Infrastructure-as-Code approach

|

||||

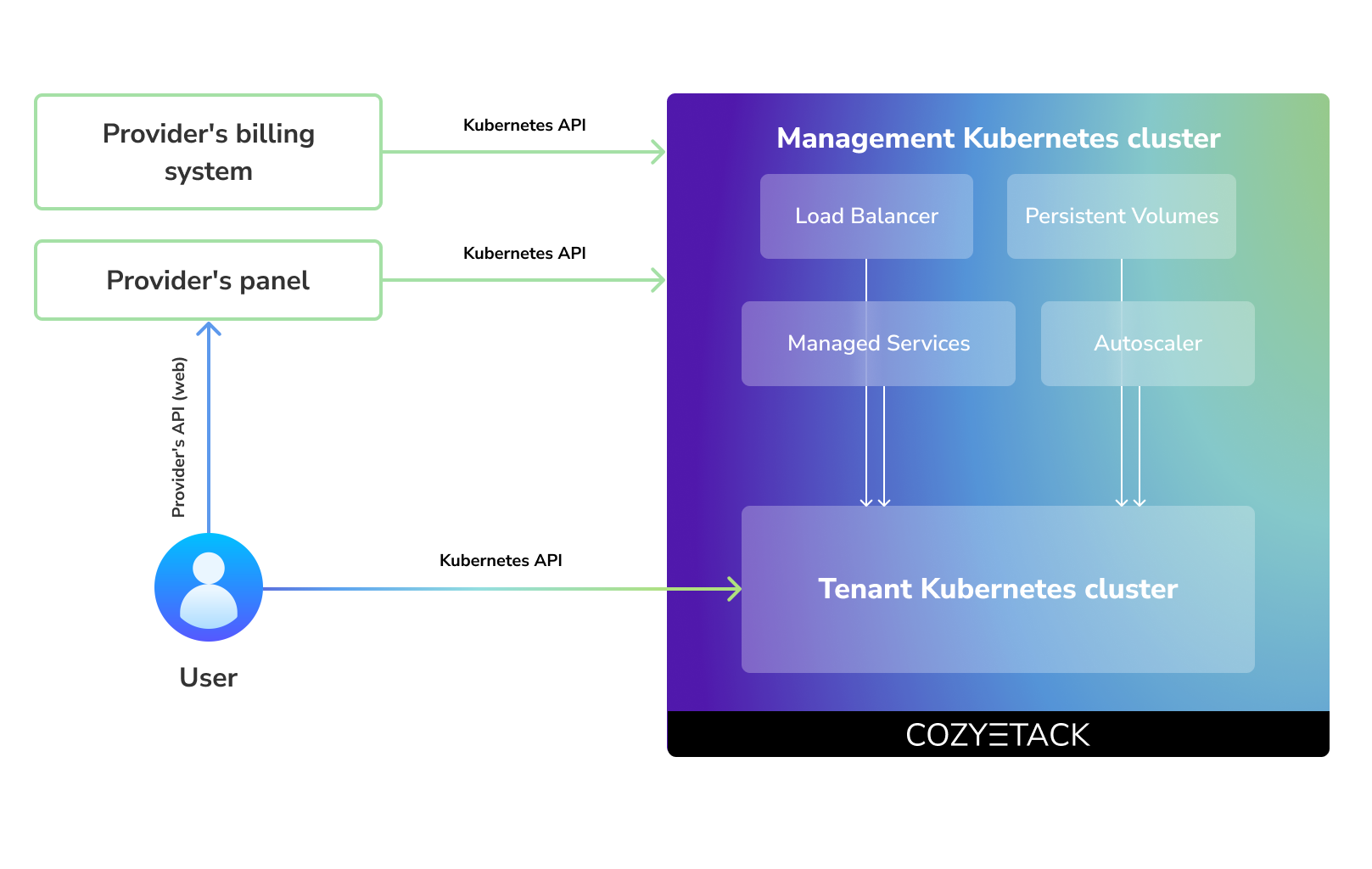

Cozystack positions itself as a kind of framework for building public clouds. The key word here is framework. In this case, it's important to understand that Cozystack is made for cloud providers, not for end users.

|

||||

|

||||

* [**Using Cozystack as Kubernetes distribution**](https://cozystack.io/docs/use-cases/kubernetes-distribution/)

|

||||

You can use Cozystack as Kubernetes distribution for Bare Metal

|

||||

Despite having a graphical interface, the current security model does not imply public user access to your management cluster.

|

||||

|

||||

Instead, end users get access to their own Kubernetes clusters, can order LoadBalancers and additional services from it, but they have no access and know nothing about your management cluster powered by Cozystack.

|

||||

|

||||

Thus, to integrate with your billing system, it's enough to teach your system to go to the management Kubernetes and place a YAML file signifying the service you're interested in. Cozystack will do the rest of the work for you.

|

||||

|

||||

|

||||

|

||||

### As a private cloud for Infrastructure-as-Code

|

||||

|

||||

One of the use cases is a self-portal for users within your company, where they can order the service they're interested in or a managed database.

|

||||

|

||||

You can implement best GitOps practices, where users will launch their own Kubernetes clusters and databases for their needs with a simple commit of configuration into your infrastructure Git repository.

|

||||

|

||||

Thanks to the standardization of the approach to deploying applications, you can expand the platform's capabilities using the functionality of standard Helm charts.

|

||||

|

||||

### As a Kubernetes distribution for Bare Metal

|

||||

|

||||

We created Cozystack primarily for our own needs, having vast experience in building reliable systems on bare metal infrastructure. This experience led to the formation of a separate boxed product, which is aimed at standardizing and providing a ready-to-use tool for managing your infrastructure.

|

||||

|

||||

Currently, Cozystack already solves a huge scope of infrastructure tasks: starting from provisioning bare metal servers, having a ready monitoring system, fast and reliable storage, a network fabric with the possibility of interconnect with your infrastructure, the ability to run virtual machines, databases, and much more right out of the box.

|

||||

|

||||

All this makes Cozystack a convenient platform for delivering and launching your application on Bare Metal.

|

||||

|

||||

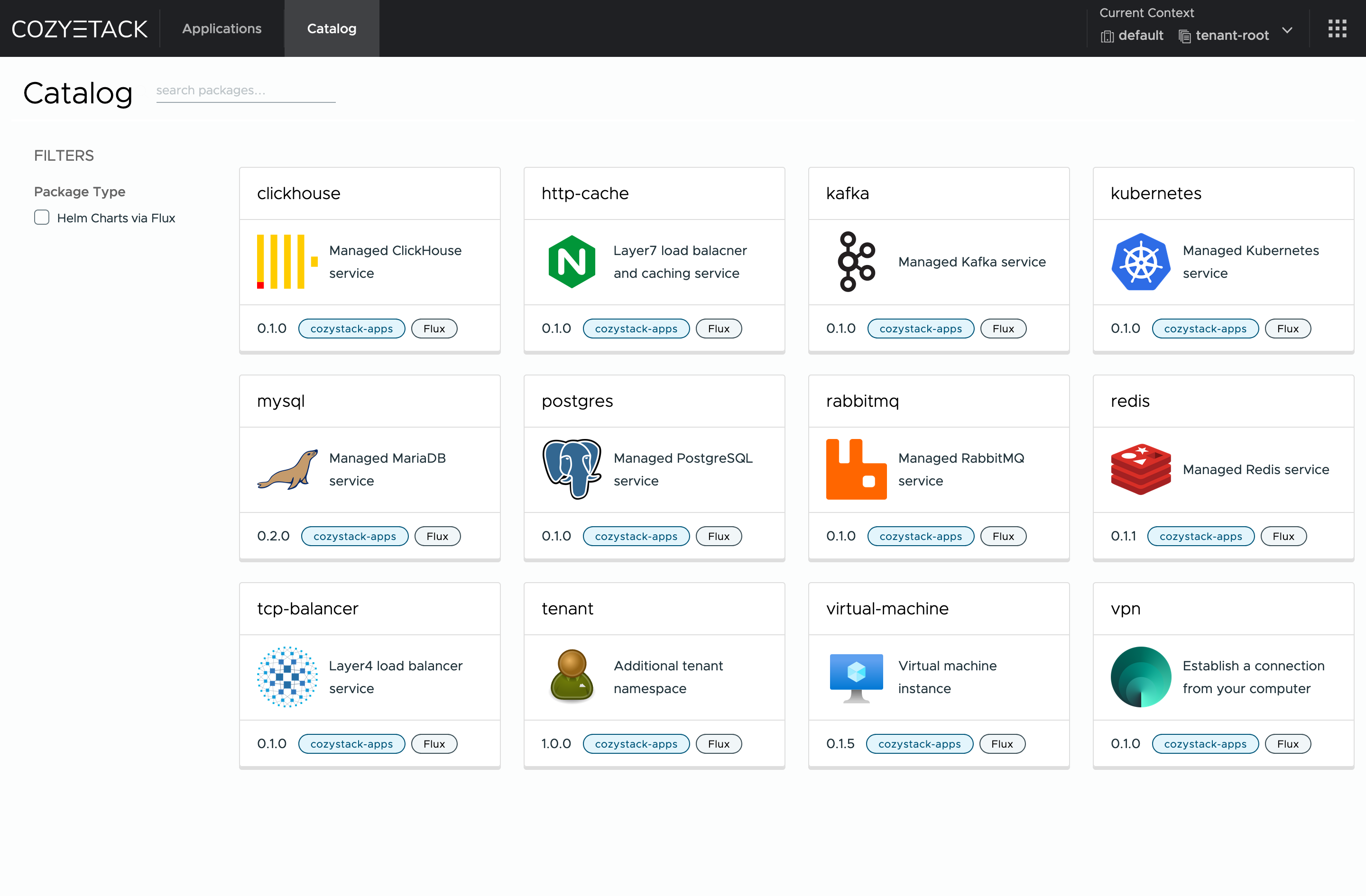

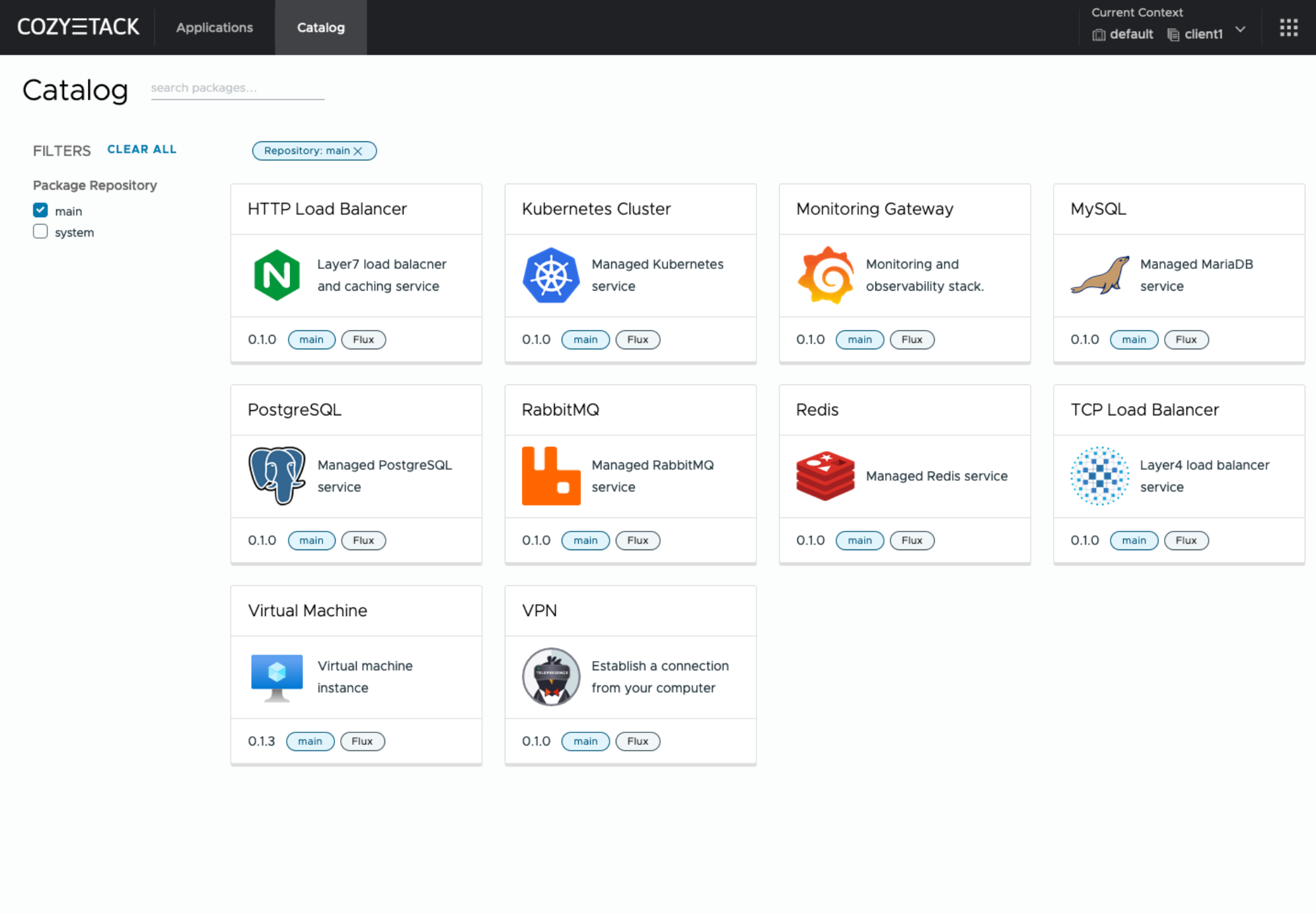

## Screenshot

|

||||

|

||||

|

||||

|

||||

|

||||

## Documentation

|

||||

## Core values

|

||||

|

||||

The documentation is located on official [cozystack.io](https://cozystack.io) website.

|

||||

### Standardization and unification

|

||||

All components of the platform are based on open source tools and technologies which are widely known in the industry.

|

||||

|

||||

Read [Get Started](https://cozystack.io/docs/get-started/) section for a quick start.

|

||||

### Collaborate, not compete

|

||||

If a feature being developed for the platform could be useful to a upstream project, it should be contributed to upstream project, rather than being implemented within the platform.

|

||||

|

||||

If you encounter any difficulties, start with the [troubleshooting guide](https://cozystack.io/docs/troubleshooting/), and work your way through the process that we've outlined.

|

||||

### API-first

|

||||

Cozystack is based on Kubernetes and involves close interaction with its API. We don't aim to completely hide the all elements behind a pretty UI or any sort of customizations; instead, we provide a standard interface and teach users how to work with basic primitives. The web interface is used solely for deploying applications and quickly diving into basic concepts of platform.

|

||||

|

||||

## Versioning

|

||||

## Quick Start

|

||||

|

||||

Versioning adheres to the [Semantic Versioning](http://semver.org/) principles.

|

||||

A full list of the available releases is available in the GitHub repository's [Release](https://github.com/aenix-io/cozystack/releases) section.

|

||||

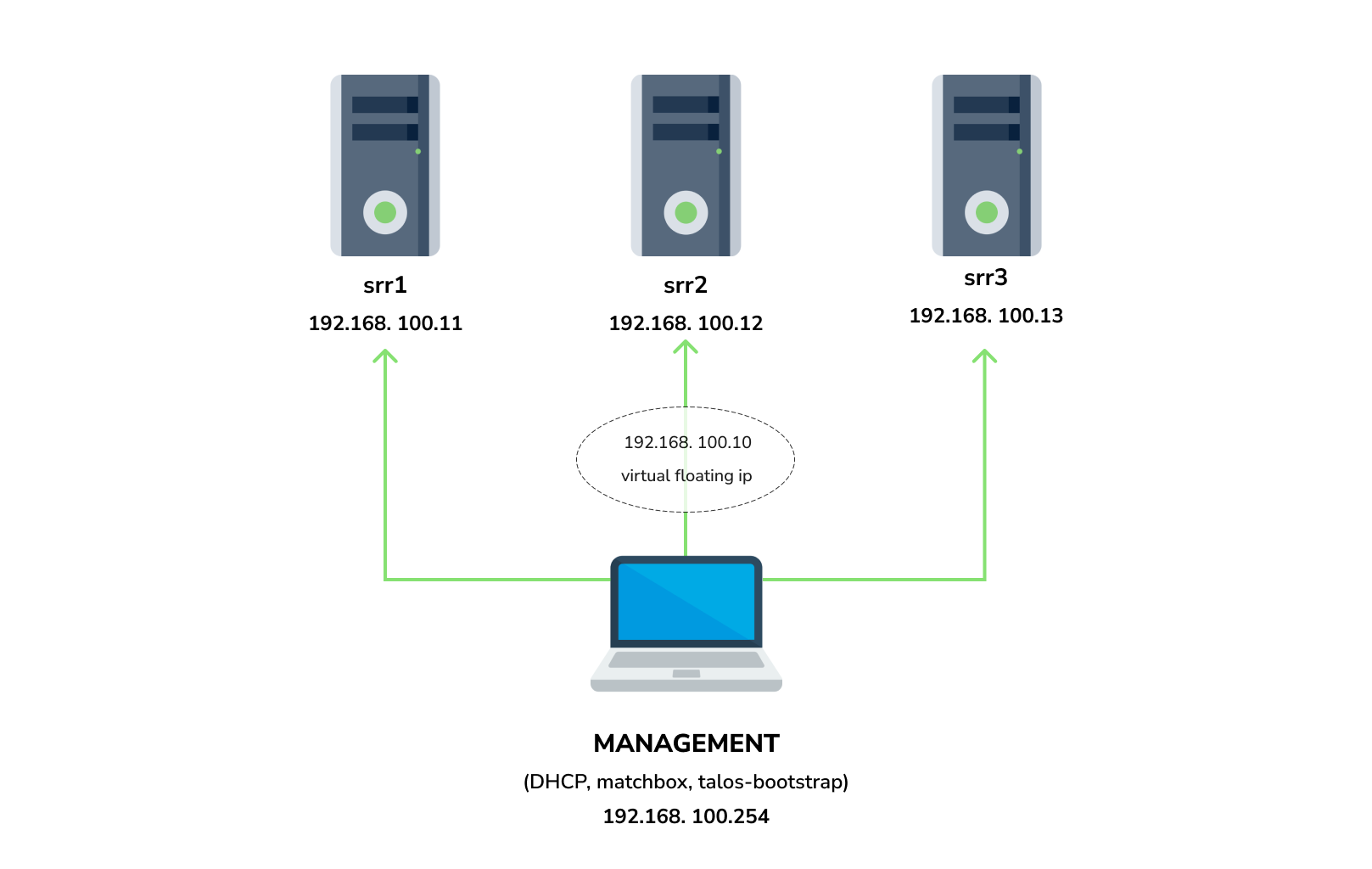

### Prepare infrastructure

|

||||

|

||||

- [Roadmap](https://github.com/orgs/aenix-io/projects/2)

|

||||

|

||||

## Contributions

Contributions are highly appreciated and very welcome!

You need 3 physical servers or VMs with nested virtualisation:

In case of bugs, please check whether the issue has already been opened in the [GitHub Issues](https://github.com/aenix-io/cozystack/issues) section.

If it hasn't, you can open a new one: a detailed report will help us replicate it, assess it, and work on a fix.

```
CPU: 4 cores
CPU model: host
RAM: 8-16 GB
HDD1: 32 GB
HDD2: 100GB (raw)
```

You can also express your intention to work on the fix yourself.

Commits are used to generate the changelog, and their author will be referenced in it.

And one management VM or physical server connected to the same network.

Any Linux system installed on it (e.g., Ubuntu) should be enough.

In case of **Feature Requests**, please use the [Feature Requests section of Discussions](https://github.com/aenix-io/cozystack/discussions/categories/feature-requests).

**Note:** The VM should support the `x86-64-v2` architecture; most likely you can achieve this by setting the CPU model to `host`.

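To check the `x86-64-v2` requirement before installing, you can compare the CPU flags Linux reports in `/proc/cpuinfo` with the features the x86-64 psABI requires for that level (CMPXCHG16B, LAHF/SAHF, POPCNT, SSE3, SSE4.1, SSE4.2 and SSSE3, which the kernel lists as `cx16`, `lahf_lm`, `popcnt`, `pni`, `sse4_1`, `sse4_2` and `ssse3`). This is a minimal POSIX shell sketch, assuming you can boot some Linux system on the machine or VM in question; `missing_x86_64_v2_flags` is a hypothetical helper, not part of Cozystack.

```bash
# Hypothetical helper (not part of Cozystack): prints each x86-64-v2 CPU flag
# missing from the space-separated flag list given as $1, and returns
# non-zero if at least one flag is missing.
missing_x86_64_v2_flags() {
  flags=" $1 "
  rc=0
  # Kernel names for CMPXCHG16B, LAHF/SAHF, POPCNT, SSE3, SSE4.1, SSE4.2, SSSE3.
  for flag in cx16 lahf_lm popcnt pni sse4_1 sse4_2 ssse3; do
    case "$flags" in
      *" $flag "*) ;;               # flag present
      *) echo "$flag"; rc=1 ;;      # flag missing
    esac
  done
  return "$rc"
}

# Example, run on the node or VM itself (e.g. from a live Linux image):
# missing_x86_64_v2_flags "$(grep -m1 '^flags' /proc/cpuinfo | cut -d: -f2)" \
#   && echo "x86-64-v2 supported"
```

An empty result means the CPU (as exposed to the VM) reports every flag the level needs; any printed name is a feature the hypervisor is hiding, which the `host` CPU model usually fixes.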
## License

#### Install dependencies:

Cozystack is licensed under Apache 2.0.

The code is provided as-is with no warranties.

- `docker`
- `talosctl`
- `dialog`
- `nmap`
- `make`
- `yq`
- `kubectl`
- `helm`

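Before moving on, it can help to confirm that every tool in the list above is actually on your `PATH`. A minimal POSIX shell sketch built on the standard `command -v` builtin; `missing_commands` is a hypothetical name, not something shipped with Cozystack.

```bash
# Hypothetical helper: prints every command name (from the arguments) that is
# not found on PATH, and returns non-zero if any of them is missing.
missing_commands() {
  rc=0
  for cmd in "$@"; do
    command -v "$cmd" >/dev/null 2>&1 || { echo "$cmd"; rc=1; }
  done
  return "$rc"
}

# Usage with the dependency list above:
# missing_commands docker talosctl dialog nmap make yq kubectl helm \
#   || echo "install the commands listed above before continuing"
```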
## Commercial Support

### Netboot server

[**Ænix**](https://aenix.io) offers enterprise-grade support, available 24/7.

Start matchbox with the prebuilt Talos image for Cozystack:

We provide all types of assistance, including consultations, development of missing features, design, assistance with installation, and integration.

```bash
sudo docker run --name=matchbox -d --net=host ghcr.io/aenix-io/cozystack/matchbox:v0.0.2 \
  -address=:8080 \
  -log-level=debug
```

[Contact us](https://aenix.io/contact/)

Start the DHCP server:

```bash
sudo docker run --name=dnsmasq -d --cap-add=NET_ADMIN --net=host quay.io/poseidon/dnsmasq \
  -d -q -p0 \
  --dhcp-range=192.168.100.3,192.168.100.254 \
  --dhcp-option=option:router,192.168.100.1 \
  --enable-tftp \
  --tftp-root=/var/lib/tftpboot \
  --dhcp-match=set:bios,option:client-arch,0 \
  --dhcp-boot=tag:bios,undionly.kpxe \
  --dhcp-match=set:efi32,option:client-arch,6 \
  --dhcp-boot=tag:efi32,ipxe.efi \
  --dhcp-match=set:efibc,option:client-arch,7 \
  --dhcp-boot=tag:efibc,ipxe.efi \
  --dhcp-match=set:efi64,option:client-arch,9 \
  --dhcp-boot=tag:efi64,ipxe.efi \
  --dhcp-userclass=set:ipxe,iPXE \
  --dhcp-boot=tag:ipxe,http://192.168.100.254:8080/boot.ipxe \
  --log-queries \
  --log-dhcp
```

Where:

- `192.168.100.3,192.168.100.254` is the range to allocate IPs from
- `192.168.100.1` is your gateway
- `192.168.100.254` is the address of your management server

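The gateway, the management server and the DHCP range should all sit inside the node network, which the Talos patch in the next step declares as `192.168.100.0/24` under `validSubnets`. A small POSIX shell sketch for checking an IPv4 address against a CIDR block; the function names are hypothetical and IPv6 is not handled.

```bash
# Converts a dotted-quad IPv4 address to a 32-bit integer.
# The body runs in a subshell, so the IFS change does not leak to the caller.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Succeeds if IPv4 address $1 lies inside network $2, written as a.b.c.d/prefix.
in_cidr() {
  net=${2%/*}
  prefix=${2#*/}
  # Keep the top $prefix bits; prefix 0 yields mask 0 thanks to 64-bit shell arithmetic.
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

# Example: check the addresses used in the dnsmasq command above.
for addr in 192.168.100.1 192.168.100.3 192.168.100.254; do
  in_cidr "$addr" 192.168.100.0/24 || echo "$addr is outside 192.168.100.0/24"
done
```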
Check the status of the containers:

```bash
docker ps
```

Example output:

```console
CONTAINER ID   IMAGE                                        COMMAND                  CREATED          STATUS          PORTS   NAMES
22044f26f74d   quay.io/poseidon/dnsmasq                     "/usr/sbin/dnsmasq -…"   6 seconds ago    Up 5 seconds            dnsmasq
231ad81ff9e0   ghcr.io/aenix-io/cozystack/matchbox:v0.0.2   "/matchbox -address=…"   58 seconds ago   Up 57 seconds           matchbox
```

### Bootstrap cluster

Write the configuration for Cozystack:

```yaml
cat > patch.yaml <<\EOT
machine:
  kubelet:
    nodeIP:
      validSubnets:
      - 192.168.100.0/24
  kernel:
    modules:
    - name: openvswitch
    - name: drbd
      parameters:
        - usermode_helper=disabled
    - name: zfs
  install:
    image: ghcr.io/aenix-io/cozystack/talos:v1.6.4
  files:
  - content: |
      [plugins]
        [plugins."io.containerd.grpc.v1.cri"]
          device_ownership_from_security_context = true
    path: /etc/cri/conf.d/20-customization.part
    op: create

cluster:
  network:
    cni:
      name: none
    podSubnets:
    - 10.244.0.0/16
    serviceSubnets:
    - 10.96.0.0/16
EOT

cat > patch-controlplane.yaml <<\EOT
cluster:
  allowSchedulingOnControlPlanes: true
  controllerManager:
    extraArgs:
      bind-address: 0.0.0.0
  scheduler:
    extraArgs:
      bind-address: 0.0.0.0
  apiServer:
    certSANs:
    - 127.0.0.1
  proxy:
    disabled: true
  discovery:
    enabled: false
  etcd:
    advertisedSubnets:
    - 192.168.100.0/24
EOT
```

Run [talos-bootstrap](https://github.com/aenix-io/talos-bootstrap/) to deploy the cluster:

```bash
talos-bootstrap install
```

Save the admin kubeconfig to access your Kubernetes cluster:

```bash
cp -i kubeconfig ~/.kube/config
```

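On a freshly installed management host `~/.kube` may not exist yet, in which case the `cp` above fails with "No such file or directory". A minimal sketch that creates the directory first; `install_kubeconfig` is a hypothetical helper, and it assumes the `kubeconfig` file from the previous step is in the current directory.

```bash
# Hypothetical helper: copies a kubeconfig (default: ./kubeconfig) to
# ~/.kube/config, creating ~/.kube first if needed.
# cp -i still asks before overwriting an existing config.
install_kubeconfig() {
  src=${1:-kubeconfig}
  mkdir -p "$HOME/.kube" && cp -i "$src" "$HOME/.kube/config"
}

# Usage: install_kubeconfig
```

Alternatively, kubectl reads the `KUBECONFIG` environment variable, so `export KUBECONFIG=$PWD/kubeconfig` lets you use the file in place without copying it.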
Check the connection:

```bash
kubectl get ns
```

Example output:

```console
NAME              STATUS   AGE
default           Active   7m56s
kube-node-lease   Active   7m56s
kube-public       Active   7m56s
kube-system       Active   7m56s
```

**Note:** All nodes should currently show as "Not Ready". Don't worry about that: this is because you disabled the default CNI plugin in the previous step. Cozystack will install its own CNI plugin in the next step.

### Install Cozystack

Write the config for Cozystack:

**Note:** Please make sure that you use the same settings as specified in the `patch.yaml` and `patch-controlplane.yaml` files.

```yaml
cat > cozystack-config.yaml <<\EOT
apiVersion: v1
kind: ConfigMap
metadata:
  name: cozystack
  namespace: cozy-system
data:
  cluster-name: "cozystack"
  ipv4-pod-cidr: "10.244.0.0/16"
  ipv4-pod-gateway: "10.244.0.1"
  ipv4-svc-cidr: "10.96.0.0/16"
  ipv4-join-cidr: "100.64.0.0/16"
EOT
```

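Because the pod and service CIDRs must match between the Talos patch and this ConfigMap, a quick consistency check can catch typos. The sketch below is a plain-text `awk` approximation, not a YAML parser: it assumes the exact layout used in this guide (one `- item` directly under `podSubnets:` and `serviceSubnets:`, quoted values in the ConfigMap), and all function names are hypothetical. `yq`, already in the dependency list, would be a more robust choice.

```bash
# Prints the first "- item" that follows the line "<key>:" in file $2.
first_item_after() {
  awk -v key="$1:" '
    found && $1 == "-" { print $2; exit }
    $1 == key { found = 1 }
  ' "$2"
}

# Prints the value of "<key>: value" in file $2, with double quotes removed.
configmap_value() {
  awk -v key="$1:" '$1 == key { gsub(/"/, "", $2); print $2; exit }' "$2"
}

# Compares pod and service CIDRs between a Talos patch ($1) and the ConfigMap file ($2).
check_cidrs() {
  rc=0
  pod=$(first_item_after podSubnets "$1")
  svc=$(first_item_after serviceSubnets "$1")
  [ -n "$pod" ] && [ "$pod" = "$(configmap_value ipv4-pod-cidr "$2")" ] \
    || { echo "pod CIDR mismatch"; rc=1; }
  [ -n "$svc" ] && [ "$svc" = "$(configmap_value ipv4-svc-cidr "$2")" ] \
    || { echo "service CIDR mismatch"; rc=1; }
  return "$rc"
}

# Usage: check_cidrs patch.yaml cozystack-config.yaml && echo "CIDRs match"
```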
Create the namespace and install Cozystack system components:

```bash
kubectl create ns cozy-system
kubectl apply -f cozystack-config.yaml
kubectl apply -f manifests/cozystack-installer.yaml
```

(optional) You can track the logs of the installer:

```bash
kubectl logs -n cozy-system deploy/cozystack -f
```

Wait for a while, then check the status of the installation:

```bash
kubectl get hr -A
```

Wait until all releases reach the `Ready` state:

```console
NAMESPACE                        NAME                        AGE    READY   STATUS
cozy-cert-manager                cert-manager                4m1s   True    Release reconciliation succeeded
cozy-cert-manager                cert-manager-issuers        4m1s   True    Release reconciliation succeeded
cozy-cilium                      cilium                      4m1s   True    Release reconciliation succeeded
cozy-cluster-api                 capi-operator               4m1s   True    Release reconciliation succeeded
cozy-cluster-api                 capi-providers              4m1s   True    Release reconciliation succeeded
cozy-dashboard                   dashboard                   4m1s   True    Release reconciliation succeeded
cozy-fluxcd                      cozy-fluxcd                 4m1s   True    Release reconciliation succeeded
cozy-grafana-operator            grafana-operator            4m1s   True    Release reconciliation succeeded
cozy-kamaji                      kamaji                      4m1s   True    Release reconciliation succeeded
cozy-kubeovn                     kubeovn                     4m1s   True    Release reconciliation succeeded
cozy-kubevirt-cdi                kubevirt-cdi                4m1s   True    Release reconciliation succeeded
cozy-kubevirt-cdi                kubevirt-cdi-operator       4m1s   True    Release reconciliation succeeded
cozy-kubevirt                    kubevirt                    4m1s   True    Release reconciliation succeeded
cozy-kubevirt                    kubevirt-operator           4m1s   True    Release reconciliation succeeded
cozy-linstor                     linstor                     4m1s   True    Release reconciliation succeeded
cozy-linstor                     piraeus-operator            4m1s   True    Release reconciliation succeeded
cozy-mariadb-operator            mariadb-operator            4m1s   True    Release reconciliation succeeded
cozy-metallb                     metallb                     4m1s   True    Release reconciliation succeeded
cozy-monitoring                  monitoring                  4m1s   True    Release reconciliation succeeded
cozy-postgres-operator           postgres-operator           4m1s   True    Release reconciliation succeeded
cozy-rabbitmq-operator           rabbitmq-operator           4m1s   True    Release reconciliation succeeded
cozy-redis-operator              redis-operator              4m1s   True    Release reconciliation succeeded
cozy-telepresence                telepresence                4m1s   True    Release reconciliation succeeded
cozy-victoria-metrics-operator   victoria-metrics-operator   4m1s   True    Release reconciliation succeeded
tenant-root                      tenant-root                 4m1s   True    Release reconciliation succeeded
```

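Rather than scanning the table by eye, you can filter it for releases whose `READY` column is not yet `True`. A small `awk` sketch that assumes the column order shown above (NAMESPACE, NAME, AGE, READY, STATUS); `not_ready_releases` is a hypothetical helper.

```bash
# Reads `kubectl get hr -A` output on stdin and prints "namespace/name" for
# every HelmRelease whose READY column (4th field) is not "True".
# The header row (line 1) is skipped.
not_ready_releases() {
  awk 'NR > 1 && $4 != "True" { print $1 "/" $2 }'
}

# Usage: poll every 10 seconds until nothing is reported.
# while [ -n "$(kubectl get hr -A | not_ready_releases)" ]; do sleep 10; done
```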
#### Configure Storage

Set up an alias to access LINSTOR:

```bash
alias linstor='kubectl exec -n cozy-linstor deploy/linstor-controller -- linstor'
```

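Bash only expands aliases in interactive shells by default, so the alias above will not work inside a script. A shell function is a drop-in alternative that works in both cases. In this sketch the `KUBECTL` variable is a hypothetical override (handy for a dry run with `KUBECTL=echo`), not something Cozystack defines.

```bash
# Function version of the `linstor` alias: forwards all arguments to the
# linstor client inside the LINSTOR controller pod.
# Set KUBECTL=echo to print the command instead of running it.
linstor() {
  ${KUBECTL:-kubectl} exec -n cozy-linstor deploy/linstor-controller -- linstor "$@"
}

# Usage: linstor node list
```

If both are defined in an interactive shell, the alias takes precedence, so drop the alias when switching to the function.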
List your nodes:

```bash
linstor node list
```

Example output:

```console
+-------------------------------------------------------+
| Node | NodeType  | Addresses                 | State  |
|=======================================================|
| srv1 | SATELLITE | 192.168.100.11:3367 (SSL) | Online |
| srv2 | SATELLITE | 192.168.100.12:3367 (SSL) | Online |
| srv3 | SATELLITE | 192.168.100.13:3367 (SSL) | Online |
+-------------------------------------------------------+
```

List empty devices:

```bash
linstor physical-storage list
```

Example output:

```console
+--------------------------------------------+
| Size         | Rotational | Nodes          |
|============================================|
| 107374182400 | True       | srv3[/dev/sdb] |
|              |            | srv1[/dev/sdb] |
|              |            | srv2[/dev/sdb] |
+--------------------------------------------+
```

Create storage pools:

```bash
linstor ps cdp lvm srv1 /dev/sdb --pool-name data --storage-pool data
linstor ps cdp lvm srv2 /dev/sdb --pool-name data --storage-pool data
linstor ps cdp lvm srv3 /dev/sdb --pool-name data --storage-pool data
```

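With more nodes, the same `linstor ps cdp` call can be issued in a loop. A sketch assuming every node exposes the same empty device (here `/dev/sdb`, as in the output above) and that the pool is called `data` as in this guide; `create_data_pools` and the `LINSTOR` override variable are hypothetical.

```bash
# Creates an LVM device pool and storage pool named "data" on device $1 for
# every node name that follows. LINSTOR may name another command
# (e.g. LINSTOR=echo for a dry run); by default it runs the client in the
# LINSTOR controller pod, like the alias above.
create_data_pools() {
  device=$1
  shift
  for node in "$@"; do
    ${LINSTOR:-kubectl exec -n cozy-linstor deploy/linstor-controller -- linstor} \
      ps cdp lvm "$node" "$device" --pool-name data --storage-pool data
  done
}

# Usage: create_data_pools /dev/sdb srv1 srv2 srv3
```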
List storage pools:

```bash
linstor sp l
```

Example output:

```console
+-------------------------------------------------------------------------------------------------------------------------------------+
| StoragePool          | Node | Driver   | PoolName | FreeCapacity | TotalCapacity | CanSnapshots | State | SharedName                |
|=====================================================================================================================================|
| DfltDisklessStorPool | srv1 | DISKLESS |          |              |               | False        | Ok    | srv1;DfltDisklessStorPool |
| DfltDisklessStorPool | srv2 | DISKLESS |          |              |               | False        | Ok    | srv2;DfltDisklessStorPool |
| DfltDisklessStorPool | srv3 | DISKLESS |          |              |               | False        | Ok    | srv3;DfltDisklessStorPool |
| data                 | srv1 | LVM      | data     | 100.00 GiB   | 100.00 GiB    | False        | Ok    | srv1;data                 |
| data                 | srv2 | LVM      | data     | 100.00 GiB   | 100.00 GiB    | False        | Ok    | srv2;data                 |
| data                 | srv3 | LVM      | data     | 100.00 GiB   | 100.00 GiB    | False        | Ok    | srv3;data                 |
+-------------------------------------------------------------------------------------------------------------------------------------+
```

Create default storage classes:

```yaml
kubectl create -f- <<EOT
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: linstor.csi.linbit.com
parameters:
  linstor.csi.linbit.com/storagePool: "data"
  linstor.csi.linbit.com/layerList: "storage"
  linstor.csi.linbit.com/allowRemoteVolumeAccess: "false"
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: replicated
provisioner: linstor.csi.linbit.com
parameters:
  linstor.csi.linbit.com/storagePool: "data"
  linstor.csi.linbit.com/autoPlace: "3"
  linstor.csi.linbit.com/layerList: "drbd storage"
  linstor.csi.linbit.com/allowRemoteVolumeAccess: "true"
  property.linstor.csi.linbit.com/DrbdOptions/auto-quorum: suspend-io
  property.linstor.csi.linbit.com/DrbdOptions/Resource/on-no-data-accessible: suspend-io
  property.linstor.csi.linbit.com/DrbdOptions/Resource/on-suspended-primary-outdated: force-secondary
  property.linstor.csi.linbit.com/DrbdOptions/Net/rr-conflict: retry-connect
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
EOT
```

List storage classes:

```bash
kubectl get storageclasses
```

Example output:

```console
NAME              PROVISIONER              RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
local (default)   linstor.csi.linbit.com   Delete          WaitForFirstConsumer   true                   11m
replicated        linstor.csi.linbit.com   Delete          WaitForFirstConsumer   true                   11m
```

#### Configure Networking interconnection

To access your services, select a range of unused IPs, e.g. `192.168.100.200-192.168.100.250`.

**Note:** These IPs should be from the same network as the nodes, or all the necessary routes to them must exist.

Configure MetalLB to use and announce this range:

```yaml
kubectl create -f- <<EOT
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: cozystack
  namespace: cozy-metallb
spec:
  ipAddressPools:
  - cozystack
---
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: cozystack
  namespace: cozy-metallb
spec:
  addresses:
  - 192.168.100.200-192.168.100.250
  autoAssign: true
  avoidBuggyIPs: false
EOT
```

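The MetalLB pool should not overlap any range a DHCP server hands out on the same network; otherwise an address could be leased to a machine and announced for a service at the same time. Note that the sample DHCP range used earlier in this guide (192.168.100.3 to 192.168.100.254) contains the sample pool above, so if you keep both sample values you may want to narrow one of them. A POSIX shell sketch of the check (hypothetical function names, IPv4 only):

```bash
# Converts a dotted-quad IPv4 address to a 32-bit integer (IFS change stays in the subshell).
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# Succeeds if the inclusive ranges $1 and $2 (each written FIRST-LAST) share an address.
ranges_overlap() {
  a=$(ip_to_int "${1%-*}"); b=$(ip_to_int "${1#*-}")
  c=$(ip_to_int "${2%-*}"); d=$(ip_to_int "${2#*-}")
  [ "$a" -le "$d" ] && [ "$c" -le "$b" ]
}

# Example: the MetalLB pool above vs. the dnsmasq --dhcp-range used earlier.
if ranges_overlap 192.168.100.200-192.168.100.250 192.168.100.3-192.168.100.254; then
  echo "MetalLB pool overlaps the DHCP range"
fi
```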
#### Set up basic applications

Get the token from `tenant-root`:

```bash
kubectl get secret -n tenant-root tenant-root -o go-template='{{ printf "%s\n" (index .data "token" | base64decode) }}'
```

Enable port forwarding to cozy-dashboard:

```bash
kubectl port-forward -n cozy-dashboard svc/dashboard 8080:80
```

Open: http://localhost:8080/

- Select `tenant-root`
- Click the `Upgrade` button
- Write a domain into `host` that you wish to use as the parent domain for all deployed applications
  **Note:**
  - if you have no domain yet, you can use `192.168.100.200.nip.io`, where `192.168.100.200` is the first IP address in the range you selected for your services.
  - alternatively, you can leave the default value; however, you'll need to modify your `/etc/hosts` every time you want to access a specific application.
- Set `etcd`, `monitoring` and `ingress` to the enabled position
- Click Deploy

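If you keep the default domain and take the `/etc/hosts` route, every application hostname has to point at the ingress controller's external IP (`192.168.100.200` in the example output later in this guide). A sketch that prints the needed lines; the function name and the application names in the usage line are illustrative assumptions, so substitute the hostnames of the applications you actually deploy.

```bash
# Prints /etc/hosts lines mapping <app>.<domain> to the ingress IP.
# $1 = ingress IP, $2 = parent domain, remaining arguments = application names.
hosts_entries() {
  ip=$1
  domain=$2
  shift 2
  for app in "$@"; do
    printf '%s %s.%s\n' "$ip" "$app" "$domain"
  done
}

# Usage (review before appending):
# hosts_entries 192.168.100.200 example.org grafana | sudo tee -a /etc/hosts
```

The ingress IP can also be read directly with `kubectl get svc -n tenant-root root-ingress-controller -o jsonpath='{.status.loadBalancer.ingress[0].ip}'`. With the nip.io option no such entries are needed, since a name like `grafana.192.168.100.200.nip.io` already resolves to `192.168.100.200`.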
Check that persistent volumes were provisioned:

```bash
kubectl get pvc -n tenant-root
```

Example output:

```console
NAME                                     STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   VOLUMEATTRIBUTESCLASS   AGE
data-etcd-0                              Bound    pvc-4cbd29cc-a29f-453d-b412-451647cd04bf   10Gi       RWO            local          <unset>                 2m10s
data-etcd-1                              Bound    pvc-1579f95a-a69d-4a26-bcc2-b15ccdbede0d   10Gi       RWO            local          <unset>                 115s
data-etcd-2                              Bound    pvc-907009e5-88bf-4d18-91e7-b56b0dbfb97e   10Gi       RWO            local          <unset>                 91s
grafana-db-1                             Bound    pvc-7b3f4e23-228a-46fd-b820-d033ef4679af   10Gi       RWO            local          <unset>                 2m41s
grafana-db-2                             Bound    pvc-ac9b72a4-f40e-47e8-ad24-f50d843b55e4   10Gi       RWO            local          <unset>                 113s
vmselect-cachedir-vmselect-longterm-0    Bound    pvc-622fa398-2104-459f-8744-565eee0a13f1   2Gi        RWO            local          <unset>                 2m21s
vmselect-cachedir-vmselect-longterm-1    Bound    pvc-fc9349f5-02b2-4e25-8bef-6cbc5cc6d690   2Gi        RWO            local          <unset>                 2m21s
vmselect-cachedir-vmselect-shortterm-0   Bound    pvc-7acc7ff6-6b9b-4676-bd1f-6867ea7165e2   2Gi        RWO            local          <unset>                 2m41s
vmselect-cachedir-vmselect-shortterm-1   Bound    pvc-e514f12b-f1f6-40ff-9838-a6bda3580eb7   2Gi        RWO            local          <unset>                 2m40s
vmstorage-db-vmstorage-longterm-0        Bound    pvc-e8ac7fc3-df0d-4692-aebf-9f66f72f9fef   10Gi       RWO            local          <unset>                 2m21s
vmstorage-db-vmstorage-longterm-1        Bound    pvc-68b5ceaf-3ed1-4e5a-9568-6b95911c7c3a   10Gi       RWO            local          <unset>                 2m21s
vmstorage-db-vmstorage-shortterm-0       Bound    pvc-cee3a2a4-5680-4880-bc2a-85c14dba9380   10Gi       RWO            local          <unset>                 2m41s
vmstorage-db-vmstorage-shortterm-1       Bound    pvc-d55c235d-cada-4c4a-8299-e5fc3f161789   10Gi       RWO            local          <unset>                 2m41s
```

Check that all pods are running:

```bash
kubectl get pod -n tenant-root
```

Example output:

```console
NAME                                           READY   STATUS    RESTARTS       AGE
etcd-0                                         1/1     Running   0              2m1s
etcd-1                                         1/1     Running   0              106s
etcd-2                                         1/1     Running   0              82s
grafana-db-1                                   1/1     Running   0              119s
grafana-db-2                                   1/1     Running   0              13s
grafana-deployment-74b5656d6-5dcvn             1/1     Running   0              90s
grafana-deployment-74b5656d6-q5589             1/1     Running   1 (105s ago)   111s
root-ingress-controller-6ccf55bc6d-pg79l       2/2     Running   0              2m27s
root-ingress-controller-6ccf55bc6d-xbs6x       2/2     Running   0              2m29s
root-ingress-defaultbackend-686bcbbd6c-5zbvp   1/1     Running   0              2m29s
vmalert-vmalert-644986d5c-7hvwk                2/2     Running   0              2m30s
vmalertmanager-alertmanager-0                  2/2     Running   0              2m32s
vmalertmanager-alertmanager-1                  2/2     Running   0              2m31s
vminsert-longterm-75789465f-hc6cz              1/1     Running   0              2m10s
vminsert-longterm-75789465f-m2v4t              1/1     Running   0              2m12s
vminsert-shortterm-78456f8fd9-wlwww            1/1     Running   0              2m29s
vminsert-shortterm-78456f8fd9-xg7cw            1/1     Running   0              2m28s
vmselect-longterm-0                            1/1     Running   0              2m12s
vmselect-longterm-1                            1/1     Running   0              2m12s
vmselect-shortterm-0                           1/1     Running   0              2m31s
vmselect-shortterm-1                           1/1     Running   0              2m30s
vmstorage-longterm-0                           1/1     Running   0              2m12s
vmstorage-longterm-1                           1/1     Running   0              2m12s
vmstorage-shortterm-0                          1/1     Running   0              2m32s
vmstorage-shortterm-1                          1/1     Running   0              2m31s
```

Now you can get the public IP of the ingress controller:

```bash
kubectl get svc -n tenant-root root-ingress-controller
```

Example output:

```console
NAME                      TYPE           CLUSTER-IP     EXTERNAL-IP       PORT(S)                      AGE
root-ingress-controller   LoadBalancer   10.96.16.141   192.168.100.200   80:31632/TCP,443:30113/TCP   3m33s
```

Use `grafana.example.org` (served at 192.168.100.200) to access system monitoring, where `example.org` is the domain you specified for `tenant-root`:

- login: `admin`
- password:

```bash
kubectl get secret -n tenant-root grafana-admin-password -o go-template='{{ printf "%s\n" (index .data "password" | base64decode) }}'
```

```
@@ -3,7 +3,7 @@ set -e

if [ -e $1 ]; then
  echo "Please pass version in the first argument"
  echo "Example: $0 0.2.0"
  echo "Example: $0 v0.0.2"
  exit 1
fi

@@ -12,14 +12,11 @@ talos_version=$(awk '/^version:/ {print $2}' packages/core/installer/images/talo

set -x

sed -i "/^TAG / s|=.*|= v${version}|" \
sed -i "s|\(ghcr.io/aenix-io/cozystack/matchbox:\)v[^ ]\+|\1${version}|g" README.md
sed -i "s|\(ghcr.io/aenix-io/cozystack/talos:\)v[^ ]\+|\1${talos_version}|g" README.md

sed -i "/^TAG / s|=.*|= ${version}|" \
  packages/apps/http-cache/Makefile \
  packages/apps/kubernetes/Makefile \
  packages/core/installer/Makefile \
  packages/system/dashboard/Makefile

sed -i "/^VERSION / s|=.*|= ${version}|" \
  packages/core/Makefile \
  packages/system/Makefile
make -C packages/core fix-chartnames
make -C packages/system fix-chartnames
```

```
@@ -61,6 +61,8 @@ spec:
  selector:
    matchLabels:
      app: cozystack
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
@@ -70,26 +72,14 @@ spec:
      serviceAccountName: cozystack
      containers:
      - name: cozystack
        image: "ghcr.io/aenix-io/cozystack/cozystack:v0.2.0"
        image: "ghcr.io/aenix-io/cozystack/installer:v0.0.2"
        env:
        - name: KUBERNETES_SERVICE_HOST
          value: localhost
        - name: KUBERNETES_SERVICE_PORT
          value: "7445"
        - name: K8S_AWAIT_ELECTION_ENABLED
          value: "1"
        - name: K8S_AWAIT_ELECTION_NAME
          value: cozystack
        - name: K8S_AWAIT_ELECTION_LOCK_NAME
          value: cozystack
        - name: K8S_AWAIT_ELECTION_LOCK_NAMESPACE
          value: cozy-system
        - name: K8S_AWAIT_ELECTION_IDENTITY
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
      - name: darkhttpd
        image: "ghcr.io/aenix-io/cozystack/cozystack:v0.2.0"
        image: "ghcr.io/aenix-io/cozystack/installer:v0.0.2"
        command:
        - /usr/bin/darkhttpd
        - /cozystack/assets
@@ -102,6 +92,3 @@ spec:
      - key: "node.kubernetes.io/not-ready"
        operator: "Exists"
        effect: "NoSchedule"
      - key: "node.cilium.io/agent-not-ready"
        operator: "Exists"
        effect: "NoSchedule"
```

```
@@ -2,7 +2,7 @@ PUSH := 1
LOAD := 0
REGISTRY := ghcr.io/aenix-io/cozystack
NGINX_CACHE_TAG = v0.1.0
TAG := v0.2.0
TAG := v0.0.2

image: image-nginx
```

```
@@ -1,4 +1,14 @@
{
  "containerimage.config.digest": "sha256:0487fc50bb5f870720b05e947185424a400fad38b682af8f1ca4b418ed3c5b4b",
  "containerimage.digest": "sha256:be12f3834be0e2f129685f682fab83c871610985fc43668ce6a294c9de603798"
  "containerimage.config.digest": "sha256:f4ad0559a74749de0d11b1835823bf9c95332962b0909450251d849113f22c19",
  "containerimage.descriptor": {
    "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
    "digest": "sha256:3a0e8d791e0ccf681711766387ea9278e7d39f1956509cead2f72aa0001797ef",
    "size": 1093,
    "platform": {
      "architecture": "amd64",
      "os": "linux"
    }
  },
  "containerimage.digest": "sha256:3a0e8d791e0ccf681711766387ea9278e7d39f1956509cead2f72aa0001797ef",
  "image.name": "ghcr.io/aenix-io/cozystack/nginx-cache:v0.1.0,ghcr.io/aenix-io/cozystack/nginx-cache:v0.1.0-v0.0.2"
}
```

```
@@ -1,7 +1,7 @@
PUSH := 1
LOAD := 0
REGISTRY := ghcr.io/aenix-io/cozystack
TAG := v0.2.0
TAG := v0.0.2
UBUNTU_CONTAINER_DISK_TAG = v1.29.1

image: image-ubuntu-container-disk
```

```
@@ -1,4 +1,4 @@
{
  "containerimage.config.digest": "sha256:43d0bfd01c5e364ba961f1e3dc2c7ccd7fd4ca65bd26bc8c4a5298d7ff2c9f4f",
  "containerimage.digest": "sha256:908b3c186bee86f1c9476317eb6582d07f19776b291aa068e5642f8fd08fa9e7"
  "containerimage.config.digest": "sha256:e982cfa2320d3139ed311ae44bcc5ea18db7e4e76d2746e0af04c516288ff0f1",
  "containerimage.digest": "sha256:34f6aba5b5a2afbb46bbb891ef4ddc0855c2ffe4f9e5a99e8e553286ddd2c070"
}
```

```
@@ -16,7 +16,7 @@ type: application
# This is the chart version. This version number should be incremented each time you make changes
# to the chart and its templates, including the app version.
# Versions are expected to follow Semantic Versioning (https://semver.org/)
version: 0.2.0
version: 0.1.0

# This is the version number of the application being deployed. This version number should be
# incremented each time you make changes to the application. Versions are not expected to
```

@@ -1,7 +1,7 @@
{{- range $name := .Values.databases }}
{{ $dnsName := replace "_" "-" $name }}
---
apiVersion: k8s.mariadb.com/v1alpha1
apiVersion: mariadb.mmontes.io/v1alpha1
kind: Database
metadata:
name: {{ $.Release.Name }}-{{ $dnsName }}

@@ -1,5 +1,5 @@
---
apiVersion: k8s.mariadb.com/v1alpha1
apiVersion: mariadb.mmontes.io/v1alpha1
kind: MariaDB
metadata:
name: {{ .Release.Name }}

@@ -35,9 +35,8 @@ spec:
# automaticFailover: true

metrics:
enabled: true
exporter:
image: prom/mysqld-exporter:v0.15.1
image: prom/mysqld-exporter:v0.14.0
resources:
requests:
cpu: 50m

@@ -54,10 +53,14 @@ spec:
name: {{ .Release.Name }}-my-cnf
key: config

storage:
size: {{ .Values.size }}
resizeInUseVolumes: true
waitForVolumeResize: true
volumeClaimTemplate:
resources:
requests:
storage: {{ .Values.size }}
accessModes:
- ReadWriteOnce



{{- if .Values.external }}
primaryService:

@@ -2,7 +2,7 @@
{{ if not (eq $name "root") }}
{{ $dnsName := replace "_" "-" $name }}
---
apiVersion: k8s.mariadb.com/v1alpha1
apiVersion: mariadb.mmontes.io/v1alpha1
kind: User
metadata:
name: {{ $.Release.Name }}-{{ $dnsName }}

@@ -15,7 +15,7 @@ spec:
key: {{ $name }}-password
maxUserConnections: {{ $u.maxUserConnections }}
---
apiVersion: k8s.mariadb.com/v1alpha1
apiVersion: mariadb.mmontes.io/v1alpha1
kind: Grant
metadata:
name: {{ $.Release.Name }}-{{ $dnsName }}
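The MariaDB `Database`, `User` and `Grant` templates above derive object names from the configured database and user names. `replace "_" "-" $name` swaps underscores for hyphens, the result is prefixed with the release name, and the `User` template skips the `root` entry. Below is a minimal Python sketch of that naming rule. It assumes the names arrive as a plain list. The helpers `dns_name`, `resource_names` and `is_valid_object_name` are hypothetical and exist only for illustration. The check uses the DNS-1123 subdomain pattern that Kubernetes applies to most object names.

```python
import re

# DNS-1123 subdomain: lower-case alphanumerics, '-' and '.', starting and
# ending with an alphanumeric character; Kubernetes also caps it at 253 chars.
DNS1123_SUBDOMAIN = re.compile(
    r"[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*"
)


def dns_name(name: str) -> str:
    """Same substitution as the template's `replace "_" "-" $name`."""
    return name.replace("_", "-")


def is_valid_object_name(name: str) -> bool:
    """True if `name` is a DNS-1123 subdomain of at most 253 characters."""
    return len(name) <= 253 and DNS1123_SUBDOMAIN.fullmatch(name) is not None


def resource_names(release: str, names: list[str], skip_root: bool = False) -> list[str]:
    """Build "<release>-<dnsName>" for each entry.

    With skip_root=True the entry "root" is dropped, like the User template's
    `{{ if not (eq $name "root") }}` guard.
    """
    return [
        f"{release}-{dns_name(name)}"
        for name in names
        if not (skip_root and name == "root")
    ]


if __name__ == "__main__":
    # Hypothetical release and user names, for illustration only.
    for obj_name in resource_names("mysql-example", ["root", "app_user", "Reporting"], skip_root=True):
        print(obj_name, is_valid_object_name(obj_name))
```

Running it prints `mysql-example-app-user True` and `mysql-example-Reporting False`. The underscore substitution alone does not lower-case a name, so an upper-case database or user name would still produce an invalid object name.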

@@ -1,7 +1,6 @@
http-cache 0.1.0 HEAD
kubernetes 0.1.0 HEAD
mysql 0.1.0 f642698
mysql 0.2.0 HEAD
mysql 0.1.0 HEAD
postgres 0.1.0 HEAD
rabbitmq 0.1.0 HEAD
redis 0.1.1 HEAD

@@ -1,6 +1,4 @@
VERSION := 0.2.0

gen: fix-chartnames

fix-chartnames:
find . -name Chart.yaml -maxdepth 2 | awk -F/ '{print $$2}' | while read i; do printf "name: cozy-%s\nversion: $(VERSION)\n" "$$i" > "$$i/Chart.yaml"; done
find . -name Chart.yaml -maxdepth 2 | awk -F/ '{print $$2}' | while read i; do printf "name: cozy-%s\nversion: 1.0.0\n" "$$i" > "$$i/Chart.yaml"; done
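The `fix-chartnames` rule rewrites every `Chart.yaml` found one directory below the current one. Each chart is named `cozy-<directory>` and gets the given version. Because the recipe redirects with `>`, any other fields in the file are discarded. Below is a rough Python equivalent. It assumes there is no `Chart.yaml` in the top-level directory itself: `-maxdepth 2` combined with `awk -F/ '{print $$2}'` on paths such as `./cilium/Chart.yaml` yields only the first path component below `.`. The function `fix_chartnames` is a hypothetical name.

```python
import tempfile
from pathlib import Path


def fix_chartnames(root: Path, version: str) -> list[str]:
    """Overwrite every <root>/<dir>/Chart.yaml with a two-line header.

    The directory name becomes the chart name `cozy-<dir>`; the file is
    replaced wholesale, just as the shell `>` redirection truncates it.
    Returns the directory names that were rewritten.
    """
    rewritten = []
    for chart in sorted(root.glob("*/Chart.yaml")):
        directory = chart.parent.name
        chart.write_text(f"name: cozy-{directory}\nversion: {version}\n")
        rewritten.append(directory)
    return rewritten


if __name__ == "__main__":
    # Throwaway directory with two fake charts, for illustration only.
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        for d in ("cilium", "linstor"):
            (root / d).mkdir()
            (root / d / "Chart.yaml").write_text("name: old\nversion: 0.0.1\ndescription: dropped\n")
        print(fix_chartnames(root, "0.2.0"))
        print((root / "cilium" / "Chart.yaml").read_text(), end="")
```

The sketch makes the trade-off of the one-liner visible. It is simple and idempotent, but it keeps nothing except `name` and `version`.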

@@ -1,13 +0,0 @@
NAMESPACE=cozy-fluxcd
NAME=fluxcd

API_VERSIONS_FLAGS=$(addprefix -a ,$(shell kubectl api-versions))

show:
helm template -n $(NAMESPACE) $(NAME) . --no-hooks --dry-run=server $(API_VERSIONS_FLAGS)

apply:
helm template -n $(NAMESPACE) $(NAME) . --no-hooks --dry-run=server $(API_VERSIONS_FLAGS) | kubectl apply -n $(NAMESPACE) -f-

diff:
helm template -n $(NAMESPACE) $(NAME) . --no-hooks --dry-run=server $(API_VERSIONS_FLAGS) | kubectl diff -n $(NAMESPACE) -f-
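`API_VERSIONS_FLAGS` turns every group/version printed by `kubectl api-versions` into a `-a <group/version>` pair, using GNU make's `addprefix` with the prefix `-a `. `helm template -a` (`--api-versions`) adds those values to `.Capabilities.APIVersions`. This lets templates guarded by `.Capabilities.APIVersions.Has`, such as the `source.toolkit.fluxcd.io/v1beta2` check elsewhere in this diff, see what the cluster reports. Below is a small Python sketch of the same transformation. `api_version_flags` and `cluster_api_version_flags` are hypothetical helpers, and the second one assumes `kubectl` is on `PATH` and configured for the target cluster.

```python
import shutil
import subprocess


def api_version_flags(api_versions_output: str) -> list[str]:
    """Turn `kubectl api-versions` output into ["-a", "<gv>", "-a", "<gv>", ...].

    Splitting on any whitespace mirrors how $(shell ...) flattens newlines
    into spaces before `addprefix` sees the words.
    """
    flags: list[str] = []
    for group_version in api_versions_output.split():
        flags.extend(["-a", group_version])
    return flags


def cluster_api_version_flags() -> list[str]:
    """Ask the current kubectl context; assumes kubectl is installed and configured."""
    if shutil.which("kubectl") is None:
        raise RuntimeError("kubectl not found on PATH")
    result = subprocess.run(
        ["kubectl", "api-versions"], check=True, capture_output=True, text=True
    )
    return api_version_flags(result.stdout)


if __name__ == "__main__":
    # Illustrative output; a real cluster reports many more group/versions.
    sample = "apps/v1\nsource.toolkit.fluxcd.io/v1beta2\nv1\n"
    print(["helm", "template", "-n", "cozy-fluxcd", "fluxcd", ".", *api_version_flags(sample)])
```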

File diff suppressed because it is too large

@@ -1,2 +1,2 @@
name: cozy-installer
version: 0.2.0
version: 1.0.0

@@ -1,9 +1,9 @@
NAMESPACE=cozy-system
NAMESPACE=cozy-installer
NAME=installer
PUSH := 1
LOAD := 0
REGISTRY := ghcr.io/aenix-io/cozystack
TAG := v0.2.0
TAG := v0.0.2
TALOS_VERSION=$(shell awk '/^version:/ {print $$2}' images/talos/profiles/installer.yaml)

show:
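`TALOS_VERSION` is read from the imager profile with `awk '/^version:/ {print $$2}'`, where `$$` is Make's escape for a literal `$`. The expression takes the second whitespace-separated field of every line that starts with `version:`. Below is the same lookup as a Python sketch. It assumes the profile keeps `version:` as an unindented top-level key, and `talos_versions` is a hypothetical name. Like the awk call, it does not parse YAML, so a quoted value would keep its quotes.

```python
def talos_versions(profile_text: str) -> list[str]:
    """Mimic `awk '/^version:/ {print $2}'`.

    For each line that starts with "version:", take the second field using
    awk's default whitespace splitting. Lines without a second field are
    skipped here, whereas awk would print an empty line for them.
    """
    versions = []
    for line in profile_text.splitlines():
        if line.startswith("version:"):
            fields = line.split()
            if len(fields) >= 2:
                versions.append(fields[1])
    return versions


if __name__ == "__main__":
    # Illustrative profile excerpt, not the real images/talos/profiles/installer.yaml.
    profile = "arch: amd64\nplatform: metal\nversion: v1.6.4\n"
    print(talos_versions(profile))
```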

@@ -18,19 +18,18 @@ diff:
update:
hack/gen-profiles.sh

image: image-cozystack image-talos image-matchbox
image: image-installer image-talos image-matchbox

image-cozystack:
make -C ../../.. repos
docker buildx build -f images/cozystack/Dockerfile ../../.. \
image-installer:
docker buildx build -f images/installer/Dockerfile ../../.. \
--provenance false \
--tag $(REGISTRY)/cozystack:$(TAG) \
--cache-from type=registry,ref=$(REGISTRY)/cozystack:$(TAG) \
--tag $(REGISTRY)/installer:$(TAG) \
--cache-from type=registry,ref=$(REGISTRY)/installer:$(TAG) \
--cache-to type=inline \
--metadata-file images/cozystack.json \
--metadata-file images/installer.json \
--push=$(PUSH) \
--load=$(LOAD)
echo "$(REGISTRY)/cozystack:$(TAG)" > images/cozystack.tag
echo "$(REGISTRY)/installer:$(TAG)" > images/installer.tag

image-talos:
test -f ../../../_out/assets/installer-amd64.tar || make talos-installer

@@ -44,18 +43,14 @@ image-matchbox:
docker buildx build -f images/matchbox/Dockerfile ../../.. \
--provenance false \
--tag $(REGISTRY)/matchbox:$(TAG) \
--tag $(REGISTRY)/matchbox:$(TALOS_VERSION)-$(TAG) \
--cache-from type=registry,ref=$(REGISTRY)/matchbox:$(TALOS_VERSION) \
--cache-from type=registry,ref=$(REGISTRY)/matchbox:$(TAG) \
--cache-to type=inline \
--metadata-file images/matchbox.json \
--push=$(PUSH) \
--load=$(LOAD)
echo "$(REGISTRY)/matchbox:$(TALOS_VERSION)" > images/matchbox.tag
echo "$(REGISTRY)/matchbox:$(TAG)" > images/matchbox.tag

assets: talos-iso

talos-initramfs talos-kernel talos-installer talos-iso:
mkdir -p ../../../_out/assets
cat images/talos/profiles/$(subst talos-,,$@).yaml | \
docker run --rm -i -v /dev:/dev --privileged "ghcr.io/siderolabs/imager:$(TALOS_VERSION)" --tar-to-stdout - | \
tar -C ../../../_out/assets -xzf-
cat images/talos/profiles/$(subst talos-,,$@).yaml | docker run --rm -i -v $${PWD}/../../../_out/assets:/out -v /dev:/dev --privileged "ghcr.io/siderolabs/imager:$(TALOS_VERSION)" -

@@ -1,4 +0,0 @@
{
"containerimage.config.digest": "sha256:326a169fb5d4277a5c3b0359e0c885b31d1360b58475bbc316be1971c710cd8d",
"containerimage.digest": "sha256:a608bdb75b3e06f6365f5f0b3fea82ac93c564d11f316f17e3d46e8a497a321d"
}

@@ -1 +0,0 @@
ghcr.io/aenix-io/cozystack/cozystack:v0.2.0

14

packages/core/installer/images/installer.json

Normal file

@@ -0,0 +1,14 @@
{
"containerimage.config.digest": "sha256:5c7f51a9cbc945c13d52157035eba6ba4b6f3b68b76280f8e64b4f6ba239db1a",
"containerimage.descriptor": {
"mediaType": "application/vnd.docker.distribution.manifest.v2+json",
"digest": "sha256:7cda3480faf0539ed4a3dd252aacc7a997645d3a390ece377c36cf55f9e57e11",
"size": 2074,
"platform": {
"architecture": "amd64",
"os": "linux"
}
},
"containerimage.digest": "sha256:7cda3480faf0539ed4a3dd252aacc7a997645d3a390ece377c36cf55f9e57e11",
"image.name": "ghcr.io/aenix-io/cozystack/installer:v0.0.2"
}

1

packages/core/installer/images/installer.tag

Normal file

@@ -0,0 +1 @@
ghcr.io/aenix-io/cozystack/installer:v0.0.2

@@ -1,15 +1,3 @@
FROM golang:alpine3.19 as k8s-await-election-builder

ARG K8S_AWAIT_ELECTION_GITREPO=https://github.com/LINBIT/k8s-await-election
ARG K8S_AWAIT_ELECTION_VERSION=0.4.1

RUN apk add --no-cache git make
RUN git clone ${K8S_AWAIT_ELECTION_GITREPO} /usr/local/go/k8s-await-election/ \
&& cd /usr/local/go/k8s-await-election \
&& git reset --hard v${K8S_AWAIT_ELECTION_VERSION} \
&& make \
&& mv ./out/k8s-await-election-amd64 /k8s-await-election

FROM alpine:3.19 AS builder

RUN apk add --no-cache make git

@@ -30,8 +18,7 @@ COPY scripts /cozystack/scripts
COPY --from=builder /src/packages/core /cozystack/packages/core
COPY --from=builder /src/packages/system /cozystack/packages/system
COPY --from=builder /src/_out/repos /cozystack/assets/repos
COPY --from=k8s-await-election-builder /k8s-await-election /usr/bin/k8s-await-election
COPY dashboards /cozystack/assets/dashboards

WORKDIR /cozystack
ENTRYPOINT ["/usr/bin/k8s-await-election", "/cozystack/scripts/installer.sh" ]
ENTRYPOINT [ "/cozystack/scripts/installer.sh" ]

@@ -1,4 +1,14 @@
{
"containerimage.config.digest": "sha256:dc584f743bb73e04dcbebca7ab4f602f2c067190fd9609c3fd84412e83c20445",
"containerimage.digest": "sha256:39ab0bf769b269a8082eeb31a9672e39caa61dd342ba2157b954c642f54a32ff"
"containerimage.config.digest": "sha256:cb8cb211017e51f6eb55604287c45cbf6ed8add5df482aaebff3d493a11b5a76",
"containerimage.descriptor": {
"mediaType": "application/vnd.docker.distribution.manifest.v2+json",
"digest": "sha256:3be72cdce2f4ab4886a70fb7b66e4518a1fe4ba0771319c96fa19a0d6f409602",
"size": 1488,
"platform": {
"architecture": "amd64",
"os": "linux"
}
},
"containerimage.digest": "sha256:3be72cdce2f4ab4886a70fb7b66e4518a1fe4ba0771319c96fa19a0d6f409602",
"image.name": "ghcr.io/aenix-io/cozystack/matchbox:v0.0.2"
}

@@ -1 +1 @@
ghcr.io/aenix-io/cozystack/matchbox:v1.6.4
ghcr.io/aenix-io/cozystack/matchbox:v0.0.2

@@ -41,6 +41,8 @@ spec:
selector:
matchLabels:
app: cozystack
strategy:
type: Recreate
template:
metadata:
labels:

@@ -50,26 +52,14 @@ spec:
serviceAccountName: cozystack
containers:
- name: cozystack
image: "{{ .Files.Get "images/cozystack.tag" | trim }}@{{ index (.Files.Get "images/cozystack.json" | fromJson) "containerimage.digest" }}"
image: "{{ .Files.Get "images/installer.tag" | trim }}@{{ index (.Files.Get "images/installer.json" | fromJson) "containerimage.digest" }}"
env:
- name: KUBERNETES_SERVICE_HOST
value: localhost
- name: KUBERNETES_SERVICE_PORT
value: "7445"
- name: K8S_AWAIT_ELECTION_ENABLED
value: "1"
- name: K8S_AWAIT_ELECTION_NAME
value: cozystack
- name: K8S_AWAIT_ELECTION_LOCK_NAME
value: cozystack
- name: K8S_AWAIT_ELECTION_LOCK_NAMESPACE
value: cozy-system
- name: K8S_AWAIT_ELECTION_IDENTITY
valueFrom:
fieldRef:
fieldPath: metadata.name
- name: darkhttpd
image: "{{ .Files.Get "images/cozystack.tag" | trim }}@{{ index (.Files.Get "images/cozystack.json" | fromJson) "containerimage.digest" }}"
image: "{{ .Files.Get "images/installer.tag" | trim }}@{{ index (.Files.Get "images/installer.json" | fromJson) "containerimage.digest" }}"
command:
- /usr/bin/darkhttpd
- /cozystack/assets
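Both containers take their image from two generated files. The `.tag` file holds `registry/repository:tag`. The `containerimage.digest` field of the buildx metadata JSON, written by `--metadata-file`, holds the manifest digest. The template joins them as `tag@digest`, which pins the pod to that exact build. Below is a Python sketch of that lookup. It assumes the same file layout under `images/`, and `pinned_image` is a hypothetical name. The sample values are copied from `installer.tag` and `installer.json` in this diff.

```python
import json
import tempfile
from pathlib import Path


def pinned_image(images_dir: Path, name: str) -> str:
    """Return "<NAME.tag contents>@<containerimage.digest from NAME.json>".

    Python counterpart of the chart expression
    {{ .Files.Get "images/NAME.tag" | trim }}@{{ index (.Files.Get "images/NAME.json" | fromJson) "containerimage.digest" }}
    """
    tag = (images_dir / f"{name}.tag").read_text().strip()  # `trim`
    metadata = json.loads((images_dir / f"{name}.json").read_text())  # `fromJson`
    digest = metadata["containerimage.digest"]  # `index ... "containerimage.digest"`
    # Extra sanity check, not part of the original template.
    if not digest.startswith("sha256:"):
        raise ValueError(f"unexpected digest format: {digest!r}")
    return f"{tag}@{digest}"


if __name__ == "__main__":
    # Values copied from images/installer.tag and images/installer.json in this diff.
    with tempfile.TemporaryDirectory() as tmp:
        images = Path(tmp)
        (images / "installer.tag").write_text("ghcr.io/aenix-io/cozystack/installer:v0.0.2\n")
        (images / "installer.json").write_text(json.dumps({
            "containerimage.digest": "sha256:7cda3480faf0539ed4a3dd252aacc7a997645d3a390ece377c36cf55f9e57e11",
            "image.name": "ghcr.io/aenix-io/cozystack/installer:v0.0.2",
        }))
        print(pinned_image(images, "installer"))
```

For the installer, this yields `ghcr.io/aenix-io/cozystack/installer:v0.0.2@sha256:7cda3480faf0539ed4a3dd252aacc7a997645d3a390ece377c36cf55f9e57e11`.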

@@ -82,9 +72,6 @@ spec:
- key: "node.kubernetes.io/not-ready"
operator: "Exists"
effect: "NoSchedule"
- key: "node.cilium.io/agent-not-ready"
operator: "Exists"
effect: "NoSchedule"
---
apiVersion: v1
kind: Service

@@ -1,2 +1,2 @@
name: cozy-platform
version: 0.2.0
version: 1.0.0

@@ -13,7 +13,7 @@ namespaces-show:
helm template -n $(NAMESPACE) $(NAME) . --dry-run=server $(API_VERSIONS_FLAGS) -s templates/namespaces.yaml

namespaces-apply:
helm template -n $(NAMESPACE) $(NAME) . --dry-run=server $(API_VERSIONS_FLAGS) -s templates/namespaces.yaml | kubectl apply -n $(NAMESPACE) -f-
helm template -n $(NAMESPACE) $(NAME) . --dry-run=server $(API_VERSIONS_FLAGS) -s templates/namespaces.yaml | kubectl apply -f-

diff:
helm template -n $(NAMESPACE) $(NAME) . --dry-run=server $(API_VERSIONS_FLAGS) | kubectl diff -f-
helm template -n $(NAMESPACE) $(NAME) . --dry-run=server $(API_VERSIONS_FLAGS) -s templates/namespaces.yaml | kubectl diff -f-

@@ -1,114 +0,0 @@
{{- $cozyConfig := lookup "v1" "ConfigMap" "cozy-system" "cozystack" }}

releases:
- name: cilium
releaseName: cilium
chart: cozy-cilium
namespace: cozy-cilium
privileged: true
dependsOn: []
values:
cilium:
bpf:
masquerade: true
cni:
chainingMode: ~
customConf: false
configMap: ""
enableIPv4Masquerade: true
enableIdentityMark: true
ipv4NativeRoutingCIDR: "{{ index $cozyConfig.data "ipv4-pod-cidr" }}"
autoDirectNodeRoutes: true

- name: cert-manager
releaseName: cert-manager
chart: cozy-cert-manager
namespace: cozy-cert-manager
dependsOn: [cilium]

- name: cert-manager-issuers
releaseName: cert-manager-issuers
chart: cozy-cert-manager-issuers
namespace: cozy-cert-manager
dependsOn: [cilium,cert-manager]

- name: victoria-metrics-operator
releaseName: victoria-metrics-operator
chart: cozy-victoria-metrics-operator
namespace: cozy-victoria-metrics-operator
dependsOn: [cilium,cert-manager]

- name: monitoring
releaseName: monitoring
chart: cozy-monitoring
namespace: cozy-monitoring
privileged: true
dependsOn: [cilium,victoria-metrics-operator]

- name: metallb
releaseName: metallb
chart: cozy-metallb
namespace: cozy-metallb
privileged: true
dependsOn: [cilium]

- name: grafana-operator
releaseName: grafana-operator
chart: cozy-grafana-operator
namespace: cozy-grafana-operator
dependsOn: [cilium]

- name: mariadb-operator
releaseName: mariadb-operator
chart: cozy-mariadb-operator
namespace: cozy-mariadb-operator
dependsOn: [cilium,cert-manager,victoria-metrics-operator]

- name: postgres-operator
releaseName: postgres-operator
chart: cozy-postgres-operator
namespace: cozy-postgres-operator
dependsOn: [cilium,cert-manager]

- name: kafka-operator
releaseName: kafka-operator
chart: cozy-kafka-operator
namespace: cozy-kafka-operator
dependsOn: [cilium,kubeovn]

- name: clickhouse-operator
releaseName: clickhouse-operator
chart: cozy-clickhouse-operator
namespace: cozy-clickhouse-operator
dependsOn: [cilium,kubeovn]

- name: rabbitmq-operator
releaseName: rabbitmq-operator
chart: cozy-rabbitmq-operator
namespace: cozy-rabbitmq-operator
dependsOn: [cilium]

- name: redis-operator
releaseName: redis-operator
chart: cozy-redis-operator
namespace: cozy-redis-operator
dependsOn: [cilium]

- name: piraeus-operator
releaseName: piraeus-operator
chart: cozy-piraeus-operator
namespace: cozy-linstor
dependsOn: [cilium,cert-manager]

- name: linstor
releaseName: linstor
chart: cozy-linstor
namespace: cozy-linstor
privileged: true
dependsOn: [piraeus-operator,cilium,cert-manager]

- name: telepresence
releaseName: traffic-manager
chart: cozy-telepresence
namespace: cozy-telepresence
dependsOn: []
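Each release in the list above names the releases it must wait for in `dependsOn`. The sketch below is transcribed by hand from that list and checks two properties an installer would presumably rely on. First, every dependency must be declared in the same list. Second, the graph must have no cycles, in which case `graphlib.TopologicalSorter` (Python 3.9+) yields a valid installation order. The check flags `kafka-operator` and `clickhouse-operator`, which depend on `kubeovn`, a release this list does not declare. How the Cozystack installer treats such references is not visible in this diff. `check_releases` is a hypothetical name.

```python
from graphlib import CycleError, TopologicalSorter

# release name -> dependsOn, transcribed from the list above
RELEASES: dict[str, list[str]] = {
    "cilium": [],
    "cert-manager": ["cilium"],
    "cert-manager-issuers": ["cilium", "cert-manager"],
    "victoria-metrics-operator": ["cilium", "cert-manager"],
    "monitoring": ["cilium", "victoria-metrics-operator"],
    "metallb": ["cilium"],
    "grafana-operator": ["cilium"],
    "mariadb-operator": ["cilium", "cert-manager", "victoria-metrics-operator"],
    "postgres-operator": ["cilium", "cert-manager"],
    "kafka-operator": ["cilium", "kubeovn"],
    "clickhouse-operator": ["cilium", "kubeovn"],
    "rabbitmq-operator": ["cilium"],
    "redis-operator": ["cilium"],
    "piraeus-operator": ["cilium", "cert-manager"],
    "linstor": ["piraeus-operator", "cilium", "cert-manager"],
    "telepresence": [],
}


def check_releases(releases: dict[str, list[str]]) -> tuple[dict[str, list[str]], list[str]]:
    """Return (undeclared dependencies per release, one valid install order).

    Dependencies that are not declared in `releases` are reported and left
    out of the ordering. A dependency cycle raises graphlib.CycleError.
    """
    undeclared: dict[str, list[str]] = {}
    graph: dict[str, list[str]] = {}
    for name, deps in releases.items():
        missing = [dep for dep in deps if dep not in releases]
        if missing:
            undeclared[name] = missing
        graph[name] = [dep for dep in deps if dep in releases]
    # TopologicalSorter takes node -> predecessors, so dependencies come first.
    order = list(TopologicalSorter(graph).static_order())
    return undeclared, order


if __name__ == "__main__":
    try:
        missing, install_order = check_releases(RELEASES)
    except CycleError as err:
        print("dependency cycle:", err.args[1])
    else:
        print("undeclared:", missing)
        print("order:", install_order)
```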

@@ -1,75 +0,0 @@
{{- $cozyConfig := lookup "v1" "ConfigMap" "cozy-system" "cozystack" }}

releases:
- name: cert-manager
releaseName: cert-manager
chart: cozy-cert-manager
namespace: cozy-cert-manager
dependsOn: []

- name: cert-manager-issuers
releaseName: cert-manager-issuers
chart: cozy-cert-manager-issuers
namespace: cozy-cert-manager
dependsOn: [cert-manager]

- name: victoria-metrics-operator
releaseName: victoria-metrics-operator
chart: cozy-victoria-metrics-operator
namespace: cozy-victoria-metrics-operator
dependsOn: [cert-manager]

- name: monitoring
releaseName: monitoring
chart: cozy-monitoring
namespace: cozy-monitoring
privileged: true
dependsOn: [victoria-metrics-operator]

- name: grafana-operator
releaseName: grafana-operator
chart: cozy-grafana-operator
namespace: cozy-grafana-operator
dependsOn: []

- name: mariadb-operator
releaseName: mariadb-operator
chart: cozy-mariadb-operator
namespace: cozy-mariadb-operator
dependsOn: [victoria-metrics-operator]

- name: postgres-operator
releaseName: postgres-operator
chart: cozy-postgres-operator
namespace: cozy-postgres-operator
dependsOn: [cert-manager]

- name: kafka-operator
releaseName: kafka-operator
chart: cozy-kafka-operator
namespace: cozy-kafka-operator
dependsOn: [cilium,kubeovn]

- name: clickhouse-operator
releaseName: clickhouse-operator
chart: cozy-clickhouse-operator
namespace: cozy-clickhouse-operator
dependsOn: [cilium,kubeovn]

- name: rabbitmq-operator
releaseName: rabbitmq-operator
chart: cozy-rabbitmq-operator
namespace: cozy-rabbitmq-operator
dependsOn: []

- name: redis-operator
releaseName: redis-operator
chart: cozy-redis-operator
namespace: cozy-redis-operator
dependsOn: []

- name: telepresence
releaseName: traffic-manager
chart: cozy-telepresence
namespace: cozy-telepresence
dependsOn: []

@@ -1,183 +0,0 @@
{{- $cozyConfig := lookup "v1" "ConfigMap" "cozy-system" "cozystack" }}

releases:
- name: cilium
releaseName: cilium
chart: cozy-cilium
namespace: cozy-cilium
privileged: true
dependsOn: []

- name: kubeovn
releaseName: kubeovn
chart: cozy-kubeovn
namespace: cozy-kubeovn
privileged: true
dependsOn: [cilium]
values:
cozystack:
nodesHash: {{ include "cozystack.master-node-ips" . | sha256sum }}
kube-ovn:
ipv4:
POD_CIDR: "{{ index $cozyConfig.data "ipv4-pod-cidr" }}"
POD_GATEWAY: "{{ index $cozyConfig.data "ipv4-pod-gateway" }}"
SVC_CIDR: "{{ index $cozyConfig.data "ipv4-svc-cidr" }}"
JOIN_CIDR: "{{ index $cozyConfig.data "ipv4-join-cidr" }}"

- name: cert-manager
releaseName: cert-manager
chart: cozy-cert-manager
namespace: cozy-cert-manager
dependsOn: [cilium,kubeovn]

- name: cert-manager-issuers
releaseName: cert-manager-issuers
chart: cozy-cert-manager-issuers
namespace: cozy-cert-manager
dependsOn: [cilium,kubeovn,cert-manager]

- name: victoria-metrics-operator
releaseName: victoria-metrics-operator
chart: cozy-victoria-metrics-operator
namespace: cozy-victoria-metrics-operator
dependsOn: [cilium,kubeovn,cert-manager]

- name: monitoring
releaseName: monitoring
chart: cozy-monitoring
namespace: cozy-monitoring
privileged: true
dependsOn: [cilium,kubeovn,victoria-metrics-operator]

- name: kubevirt-operator
releaseName: kubevirt-operator
chart: cozy-kubevirt-operator
namespace: cozy-kubevirt
dependsOn: [cilium,kubeovn]

- name: kubevirt
releaseName: kubevirt
chart: cozy-kubevirt
namespace: cozy-kubevirt
privileged: true
dependsOn: [cilium,kubeovn,kubevirt-operator]

- name: kubevirt-cdi-operator
releaseName: kubevirt-cdi-operator
chart: cozy-kubevirt-cdi-operator
namespace: cozy-kubevirt-cdi
dependsOn: [cilium,kubeovn]

- name: kubevirt-cdi
releaseName: kubevirt-cdi
chart: cozy-kubevirt-cdi
namespace: cozy-kubevirt-cdi
dependsOn: [cilium,kubeovn,kubevirt-cdi-operator]

- name: metallb
releaseName: metallb
chart: cozy-metallb
namespace: cozy-metallb
privileged: true
dependsOn: [cilium,kubeovn]

- name: grafana-operator
releaseName: grafana-operator
chart: cozy-grafana-operator
namespace: cozy-grafana-operator
dependsOn: [cilium,kubeovn]

- name: mariadb-operator
releaseName: mariadb-operator
chart: cozy-mariadb-operator
namespace: cozy-mariadb-operator
dependsOn: [cilium,kubeovn,cert-manager,victoria-metrics-operator]

- name: postgres-operator
releaseName: postgres-operator
chart: cozy-postgres-operator
namespace: cozy-postgres-operator
dependsOn: [cilium,kubeovn,cert-manager]

- name: kafka-operator
releaseName: kafka-operator
chart: cozy-kafka-operator
namespace: cozy-kafka-operator
dependsOn: [cilium,kubeovn]

- name: clickhouse-operator
releaseName: clickhouse-operator
chart: cozy-clickhouse-operator
namespace: cozy-clickhouse-operator
dependsOn: [cilium,kubeovn]

- name: rabbitmq-operator
releaseName: rabbitmq-operator
chart: cozy-rabbitmq-operator
namespace: cozy-rabbitmq-operator
dependsOn: [cilium,kubeovn]

- name: redis-operator
releaseName: redis-operator
chart: cozy-redis-operator
namespace: cozy-redis-operator
dependsOn: [cilium,kubeovn]

- name: piraeus-operator
releaseName: piraeus-operator
chart: cozy-piraeus-operator
namespace: cozy-linstor
dependsOn: [cilium,kubeovn,cert-manager]

- name: linstor
releaseName: linstor
chart: cozy-linstor
namespace: cozy-linstor
privileged: true
dependsOn: [piraeus-operator,cilium,kubeovn,cert-manager]

- name: telepresence
releaseName: traffic-manager
chart: cozy-telepresence
namespace: cozy-telepresence
dependsOn: [cilium,kubeovn]

- name: dashboard
releaseName: dashboard
chart: cozy-dashboard
namespace: cozy-dashboard
dependsOn: [cilium,kubeovn]
{{- if .Capabilities.APIVersions.Has "source.toolkit.fluxcd.io/v1beta2" }}
{{- with (lookup "source.toolkit.fluxcd.io/v1beta2" "HelmRepository" "cozy-public" "").items }}
values:
kubeapps:
redis:
master:
podAnnotations:
{{- range $index, $repo := . }}
{{- with (($repo.status).artifact).revision }}
repository.cozystack.io/{{ $repo.metadata.name }}: {{ quote . }}
{{- end }}
{{- end }}
{{- end }}
{{- end }}

- name: kamaji
releaseName: kamaji
chart: cozy-kamaji
namespace: cozy-kamaji
dependsOn: [cilium,kubeovn,cert-manager]

- name: capi-operator