mirror of

https://github.com/outbackdingo/cozystack.git

synced 2026-01-29 18:19:00 +00:00

Compare commits

1 Commits

v0.4.0...project-do

| Author | SHA1 | Date | |
|---|---|---|---|
| | 236f3ce92f | | |

2

.gitignore

vendored


@@ -1,3 +1 @@

|

||||

_out

|

||||

.git

|

||||

.idea

|

||||

553

README.md


@@ -10,7 +10,7 @@

|

||||

|

||||

# Cozystack

|

||||

|

||||

**Cozystack** is a free PaaS platform and framework for building clouds.

|

||||

**Cozystack** is an open-source **PaaS platform** for cloud providers.

|

||||

|

||||

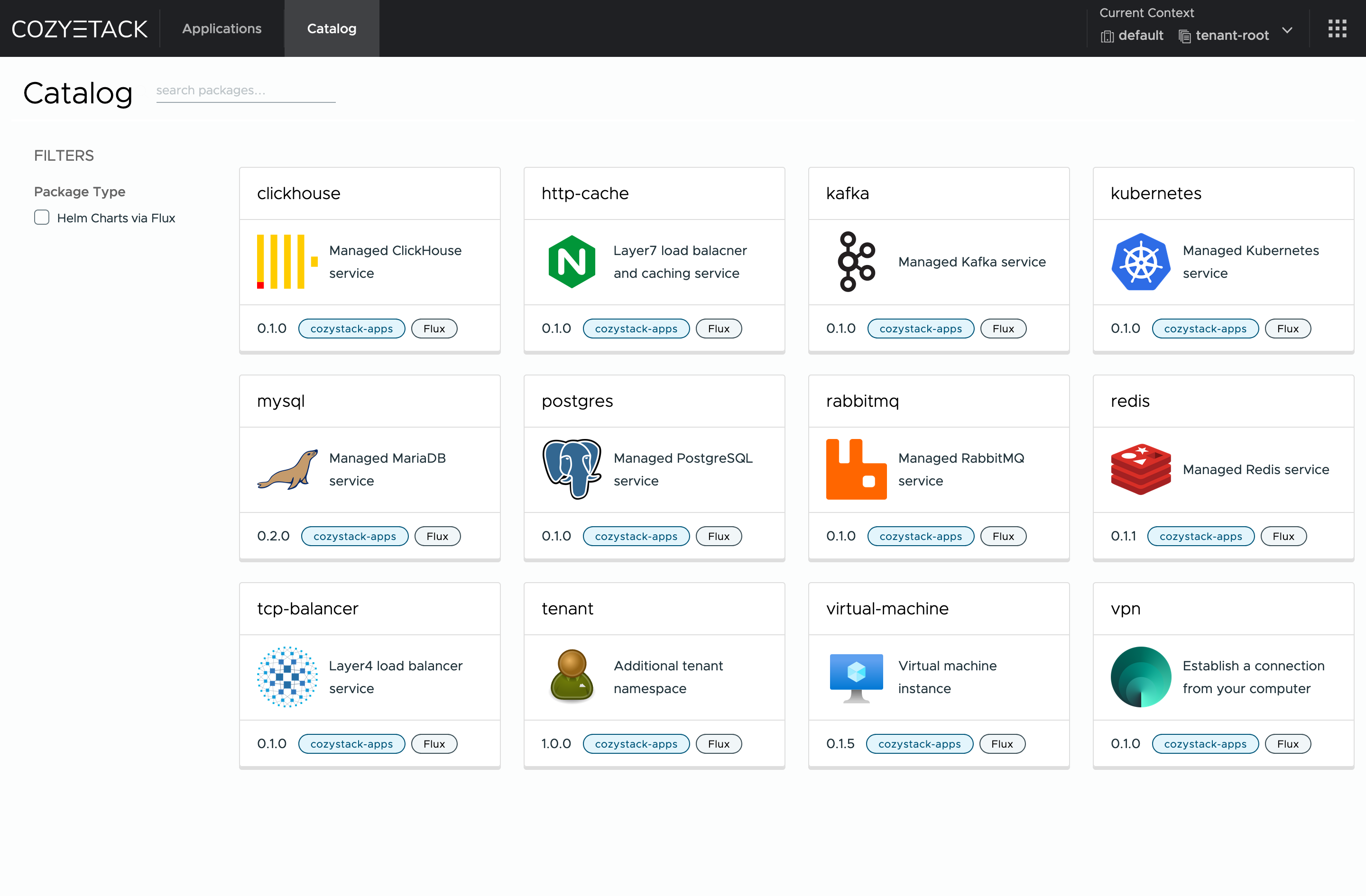

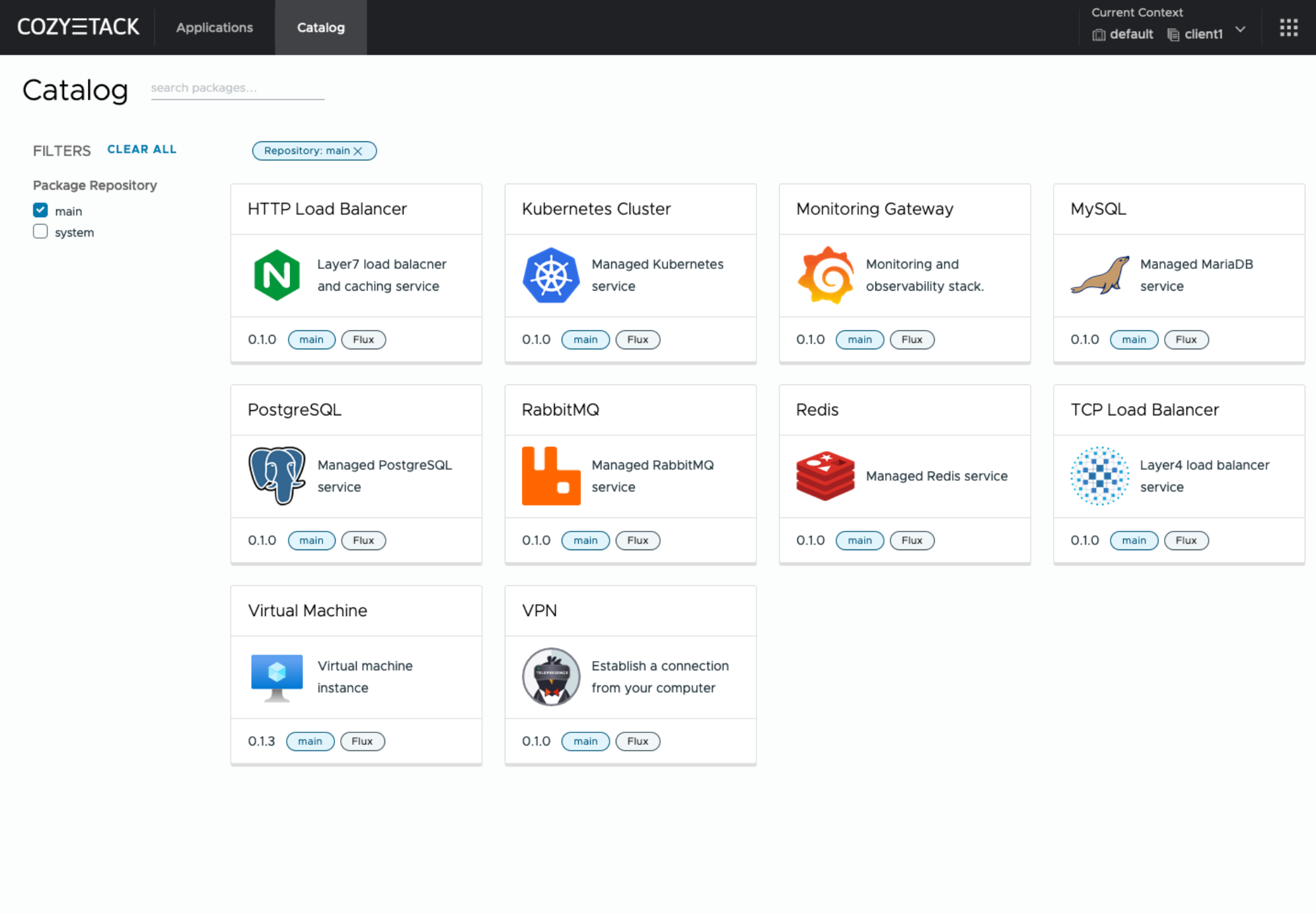

With Cozystack, you can transform your bunch of servers into an intelligent system with a simple REST API for spawning Kubernetes clusters, Database-as-a-Service, virtual machines, load balancers, HTTP caching services, and other services with ease.

|

||||

|

||||

@@ -18,55 +18,548 @@ You can use Cozystack to build your own cloud or to provide a cost-effective dev

|

||||

|

||||

## Use-Cases

|

||||

|

||||

* [**Using Cozystack to build public cloud**](https://cozystack.io/docs/use-cases/public-cloud/)

|

||||

You can use Cozystack as a backend for a public cloud.

|

||||

### As a backend for a public cloud

|

||||

|

||||

* [**Using Cozystack to build private cloud**](https://cozystack.io/docs/use-cases/private-cloud/)

|

||||

You can use Cozystack as a platform to build a private cloud powered by the Infrastructure-as-Code approach.

|

||||

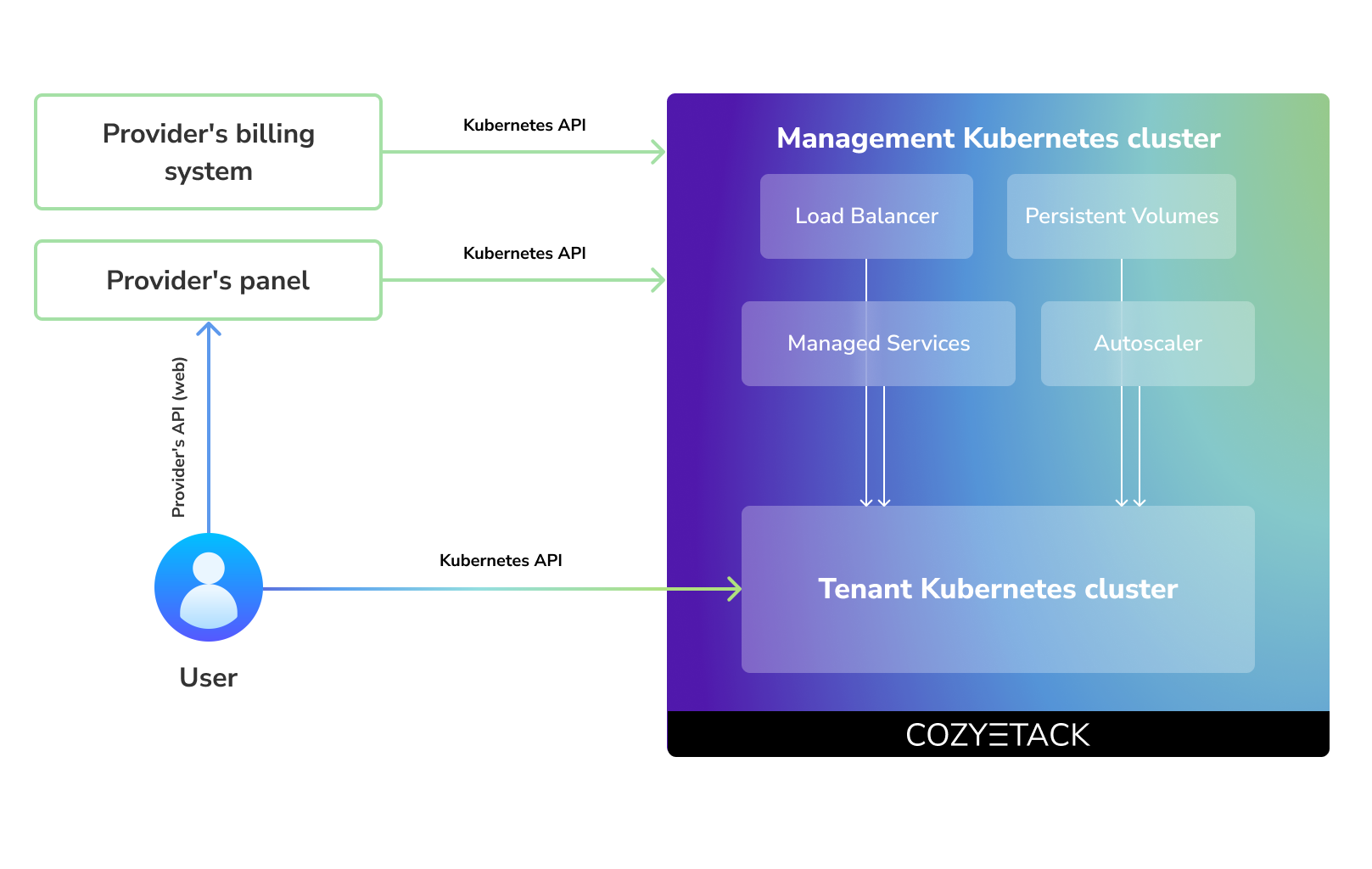

Cozystack positions itself as a kind of framework for building public clouds. The key word here is framework. In this case, it's important to understand that Cozystack is made for cloud providers, not for end users.

|

||||

|

||||

* [**Using Cozystack as Kubernetes distribution**](https://cozystack.io/docs/use-cases/kubernetes-distribution/)

|

||||

You can use Cozystack as a Kubernetes distribution for Bare Metal.

|

||||

Despite having a graphical interface, the current security model does not imply public user access to your management cluster.

|

||||

|

||||

Instead, end users get access to their own Kubernetes clusters and can order LoadBalancers and additional services from them, but they have no access to, and know nothing about, your management cluster powered by Cozystack.

|

||||

|

||||

Thus, to integrate with your billing system, it's enough to teach your system to go to the management Kubernetes and place a YAML file signifying the service you're interested in. Cozystack will do the rest of the work for you.

|

||||

|

||||

|

||||

|

||||

### As a private cloud for Infrastructure-as-Code

|

||||

|

||||

One of the use cases is a self-service portal for users within your company, where they can order the service they're interested in or a managed database.

|

||||

|

||||

You can implement best GitOps practices, where users will launch their own Kubernetes clusters and databases for their needs with a simple commit of configuration into your infrastructure Git repository.

|

||||

|

||||

Thanks to the standardization of the approach to deploying applications, you can expand the platform's capabilities using the functionality of standard Helm charts.

|

||||

|

||||

### As a Kubernetes distribution for Bare Metal

|

||||

|

||||

We created Cozystack primarily for our own needs, having vast experience in building reliable systems on bare metal infrastructure. This experience led to the formation of a separate boxed product, which is aimed at standardizing and providing a ready-to-use tool for managing your infrastructure.

|

||||

|

||||

Currently, Cozystack already solves a huge scope of infrastructure tasks: starting from provisioning bare metal servers, having a ready monitoring system, fast and reliable storage, a network fabric with the possibility of interconnect with your infrastructure, the ability to run virtual machines, databases, and much more right out of the box.

|

||||

|

||||

All this makes Cozystack a convenient platform for delivering and launching your application on Bare Metal.

|

||||

|

||||

## Screenshot

|

||||

|

||||

|

||||

|

||||

|

||||

## Documentation

|

||||

## Core values

|

||||

|

||||

The documentation is located on the official [cozystack.io](https://cozystack.io) website.

|

||||

### Standardization and unification

|

||||

All components of the platform are based on open source tools and technologies which are widely known in the industry.

|

||||

|

||||

Read the [Get Started](https://cozystack.io/docs/get-started/) section for a quick start.

|

||||

### Collaborate, not compete

|

||||

If a feature being developed for the platform could be useful to an upstream project, it should be contributed to that upstream project rather than implemented within the platform.

|

||||

|

||||

If you encounter any difficulties, start with the [troubleshooting guide](https://cozystack.io/docs/troubleshooting/), and work your way through the process that we've outlined.

|

||||

### API-first

|

||||

Cozystack is based on Kubernetes and involves close interaction with its API. We don't aim to completely hide all the elements behind a pretty UI or any sort of customizations; instead, we provide a standard interface and teach users how to work with basic primitives. The web interface is used solely for deploying applications and quickly diving into the basic concepts of the platform.

|

||||

|

||||

## Versioning

|

||||

## Quick Start

|

||||

|

||||

Versioning adheres to the [Semantic Versioning](http://semver.org/) principles.

|

||||

A full list of releases is available in the GitHub repository's [Release](https://github.com/aenix-io/cozystack/releases) section.

|

||||

### Prepare infrastructure

|

||||

|

||||

- [Roadmap](https://github.com/orgs/aenix-io/projects/2)

|

||||

|

||||

## Contributions

|

||||

|

||||

|

||||

Contributions are highly appreciated and very welcome!

|

||||

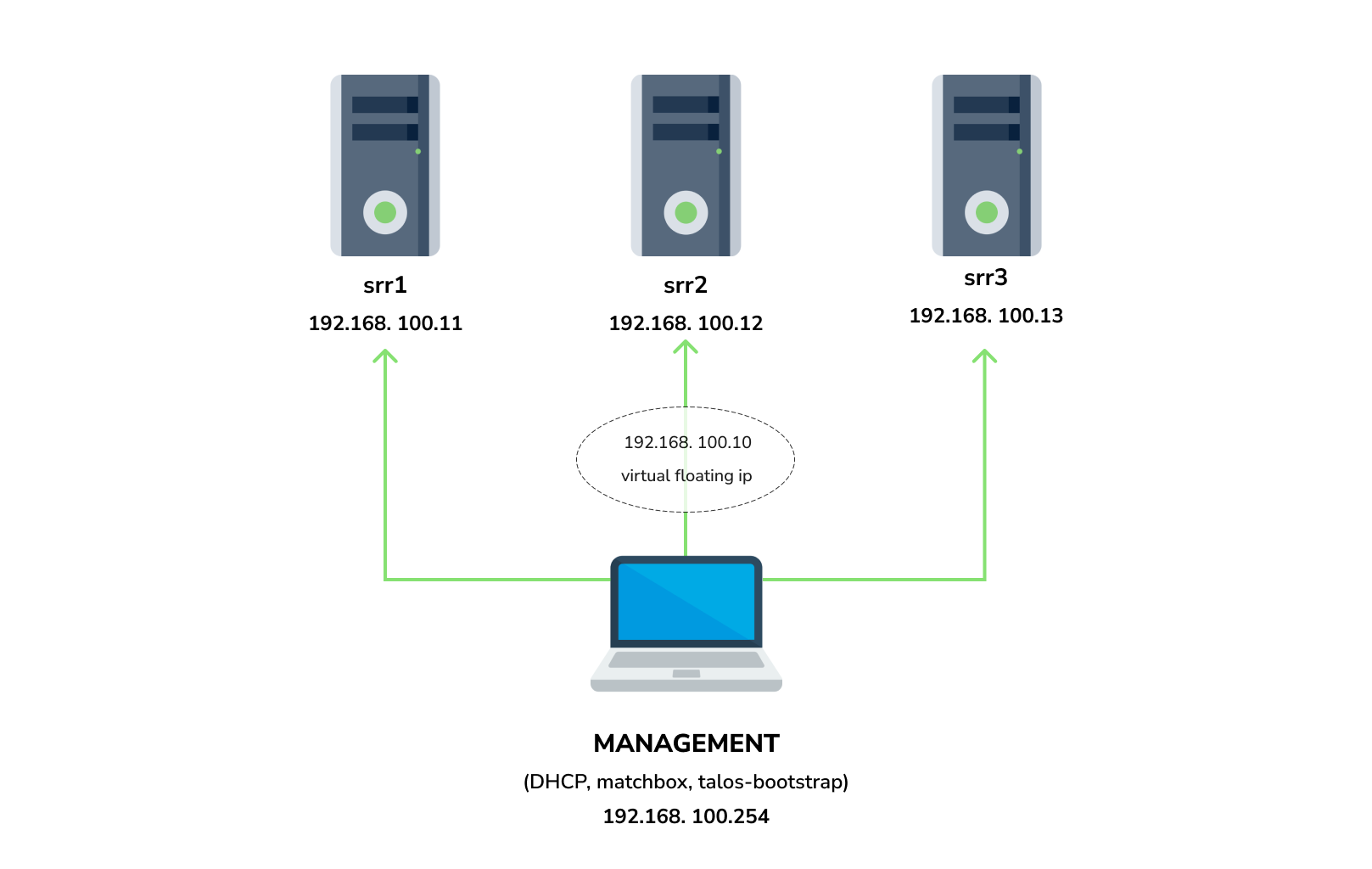

You need 3 physical servers or VMs with nested virtualisation:

|

||||

|

||||

In case of bugs, please check whether the issue has already been opened in the [GitHub Issues](https://github.com/aenix-io/cozystack/issues) section.
If it hasn't, you can open a new one: a detailed report will help us to replicate it, assess it, and work on a fix.

|

||||

```

|

||||

CPU: 4 cores

|

||||

CPU model: host

|

||||

RAM: 8-16 GB

|

||||

HDD1: 32 GB

|

||||

HDD2: 100GB (raw)

|

||||

```

|

||||

|

||||

You can also state your intention to work on the fix yourself.

|

||||

Commits are used to generate the changelog, and their author will be referenced in it.

|

||||

You also need one management VM or physical server connected to the same network.
Any Linux distribution can be installed on it (e.g. Ubuntu should be enough).

|

||||

|

||||

In case of **Feature Requests** please use the [Discussion's Feature Request section](https://github.com/aenix-io/cozystack/discussions/categories/feature-requests).

|

||||

**Note:** The VM should support the `x86-64-v2` architecture; you can most likely achieve this by setting the CPU model to `host`.
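As a rough way to verify the note above from inside a Linux VM, the sketch below checks a CPU flag list for the features that make up the `x86-64-v2` level. The function name `has_x86_64_v2` is hypothetical, and the flag spellings are the ones Linux uses in `/proc/cpuinfo`; treat it as an illustration rather than an official Cozystack check.

```bash
# Succeeds if every CPU flag required by x86-64-v2 appears in the given list.
# The argument is a space-separated flag string, as found in /proc/cpuinfo.
has_x86_64_v2() {
  flags=" $1 "
  for required in cx16 lahf_lm popcnt pni sse4_1 sse4_2 ssse3; do
    case "$flags" in
      *" $required "*) ;;
      *) echo "missing CPU flag: $required" >&2; return 1 ;;
    esac
  done
  return 0
}

# Hypothetical usage on a node or VM:
#   has_x86_64_v2 "$(grep -m1 '^flags' /proc/cpuinfo | cut -d: -f2)" \
#     && echo "x86-64-v2 supported"
```

If the check fails on a VM, switching the hypervisor's CPU model to `host`, as the note suggests, is the first thing to try.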

|

||||

|

||||

## License

|

||||

#### Install dependencies:

|

||||

|

||||

Cozystack is licensed under Apache 2.0.

|

||||

The code is provided as-is with no warranties.

|

||||

- `docker`

|

||||

- `talosctl`

|

||||

- `dialog`

|

||||

- `nmap`

|

||||

- `make`

|

||||

- `yq`

|

||||

- `kubectl`

|

||||

- `helm`
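Before continuing, it can help to confirm that the tools listed above are on `PATH`. The following is a minimal sketch; the helper name `missing_tools` is an assumption made for illustration and is not part of Cozystack.

```bash
# Print every tool from the argument list that is not found on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Example call with the tool names from the dependency list:
#   missing_tools docker talosctl dialog nmap make yq kubectl helm
```

An empty result means every listed dependency was found; any printed name still needs to be installed.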

|

||||

|

||||

## Commercial Support

|

||||

### Netboot server

|

||||

|

||||

[**Ænix**](https://aenix.io) offers enterprise-grade support, available 24/7.

|

||||

Start matchbox with prebuilt Talos image for Cozystack:

|

||||

|

||||

We provide all types of assistance, including consultations, development of missing features, design, assistance with installation, and integration.

|

||||

```bash
sudo docker run --name=matchbox -d --net=host ghcr.io/aenix-io/cozystack/matchbox:v0.0.2 \
  -address=:8080 \
  -log-level=debug
```

|

||||

|

||||

[Contact us](https://aenix.io/contact/)

|

||||

Start DHCP-Server:

|

||||

```bash
sudo docker run --name=dnsmasq -d --cap-add=NET_ADMIN --net=host quay.io/poseidon/dnsmasq \
  -d -q -p0 \
  --dhcp-range=192.168.100.3,192.168.100.254 \
  --dhcp-option=option:router,192.168.100.1 \
  --enable-tftp \
  --tftp-root=/var/lib/tftpboot \
  --dhcp-match=set:bios,option:client-arch,0 \
  --dhcp-boot=tag:bios,undionly.kpxe \
  --dhcp-match=set:efi32,option:client-arch,6 \
  --dhcp-boot=tag:efi32,ipxe.efi \
  --dhcp-match=set:efibc,option:client-arch,7 \
  --dhcp-boot=tag:efibc,ipxe.efi \
  --dhcp-match=set:efi64,option:client-arch,9 \
  --dhcp-boot=tag:efi64,ipxe.efi \
  --dhcp-userclass=set:ipxe,iPXE \
  --dhcp-boot=tag:ipxe,http://192.168.100.254:8080/boot.ipxe \
  --log-queries \
  --log-dhcp
```

|

||||

|

||||

Where:

|

||||

- `192.168.100.3,192.168.100.254` is the range to allocate IPs from
- `192.168.100.1` is your gateway
- `192.168.100.254` is the address of your management server

|

||||

|

||||

Check status of containers:

|

||||

|

||||

```

|

||||

docker ps

|

||||

```

|

||||

|

||||

example output:

|

||||

|

||||

```console

|

||||

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

|

||||

22044f26f74d quay.io/poseidon/dnsmasq "/usr/sbin/dnsmasq -…" 6 seconds ago Up 5 seconds dnsmasq

|

||||

231ad81ff9e0 ghcr.io/aenix-io/cozystack/matchbox:v0.0.2 "/matchbox -address=…" 58 seconds ago Up 57 seconds matchbox

|

||||

```

|

||||

|

||||

### Bootstrap cluster

|

||||

|

||||

Write configuration for Cozystack:

|

||||

|

||||

```yaml
cat > patch.yaml <<\EOT
machine:
  kubelet:
    nodeIP:
      validSubnets:
      - 192.168.100.0/24
  kernel:
    modules:
    - name: openvswitch
    - name: drbd
      parameters:
        - usermode_helper=disabled
    - name: zfs
  install:
    image: ghcr.io/aenix-io/cozystack/talos:v1.6.4
  files:
  - content: |
      [plugins]
        [plugins."io.containerd.grpc.v1.cri"]
          device_ownership_from_security_context = true
    path: /etc/cri/conf.d/20-customization.part
    op: create

cluster:
  network:
    cni:
      name: none
    podSubnets:
    - 10.244.0.0/16
    serviceSubnets:
    - 10.96.0.0/16
EOT

cat > patch-controlplane.yaml <<\EOT
cluster:
  allowSchedulingOnControlPlanes: true
  controllerManager:
    extraArgs:
      bind-address: 0.0.0.0
  scheduler:
    extraArgs:
      bind-address: 0.0.0.0
  apiServer:
    certSANs:
    - 127.0.0.1
  proxy:
    disabled: true
  discovery:
    enabled: false
  etcd:
    advertisedSubnets:
    - 192.168.100.0/24
EOT
```

|

||||

|

||||

Run [talos-bootstrap](https://github.com/aenix-io/talos-bootstrap/) to deploy cluster:

|

||||

|

||||

```bash

|

||||

talos-bootstrap install

|

||||

```

|

||||

|

||||

Save admin kubeconfig to access your Kubernetes cluster:

|

||||

```bash

|

||||

cp -i kubeconfig ~/.kube/config

|

||||

```

|

||||

|

||||

Check connection:

|

||||

```bash

|

||||

kubectl get ns

|

||||

```

|

||||

|

||||

example output:

|

||||

```console

|

||||

NAME STATUS AGE

|

||||

default Active 7m56s

|

||||

kube-node-lease Active 7m56s

|

||||

kube-public Active 7m56s

|

||||

kube-system Active 7m56s

|

||||

```

|

||||

|

||||

|

||||

**Note:** All nodes should currently show as `NotReady`. Don't worry about that: it is because you disabled the default CNI plugin in the previous step. Cozystack will install its own CNI plugin in the next step.

|

||||

|

||||

|

||||

### Install Cozystack

|

||||

|

||||

|

||||

Write the configuration for Cozystack:

|

||||

|

||||

**Note:** Please make sure that you have written the same settings as specified in the `patch.yaml` and `patch-controlplane.yaml` files.

|

||||

|

||||

```yaml
cat > cozystack-config.yaml <<\EOT
apiVersion: v1
kind: ConfigMap
metadata:
  name: cozystack
  namespace: cozy-system
data:
  cluster-name: "cozystack"
  ipv4-pod-cidr: "10.244.0.0/16"
  ipv4-pod-gateway: "10.244.0.1"
  ipv4-svc-cidr: "10.96.0.0/16"
  ipv4-join-cidr: "100.64.0.0/16"
EOT
```
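Since the pod and service CIDRs have to agree between the Talos patch and the Cozystack ConfigMap, a quick textual check can catch a mismatch early. The sketch below is only a plain string search, not a YAML-aware comparison, and the helper name `cidr_in_both` is hypothetical.

```bash
# Succeed only if the given CIDR string appears literally in both files.
cidr_in_both() {
  grep -qF -- "$1" "$2" && grep -qF -- "$1" "$3"
}

# Example calls, assuming the file names used in this guide:
#   cidr_in_both 10.244.0.0/16 patch.yaml cozystack-config.yaml || echo "pod CIDR mismatch"
#   cidr_in_both 10.96.0.0/16  patch.yaml cozystack-config.yaml || echo "service CIDR mismatch"
```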

|

||||

|

||||

Create the namespace and install the Cozystack system components:

|

||||

|

||||

```bash

|

||||

kubectl create ns cozy-system

|

||||

kubectl apply -f cozystack-config.yaml

|

||||

kubectl apply -f manifests/cozystack-installer.yaml

|

||||

```

|

||||

|

||||

(Optional) You can follow the installer logs:

|

||||

```bash

|

||||

kubectl logs -n cozy-system deploy/cozystack -f

|

||||

```

|

||||

|

||||

Wait for a while, then check the status of installation:

|

||||

```bash

|

||||

kubectl get hr -A

|

||||

```

|

||||

|

||||

Wait until all releases reach the `Ready` state:

|

||||

```console

|

||||

NAMESPACE NAME AGE READY STATUS

|

||||

cozy-cert-manager cert-manager 4m1s True Release reconciliation succeeded

|

||||

cozy-cert-manager cert-manager-issuers 4m1s True Release reconciliation succeeded

|

||||

cozy-cilium cilium 4m1s True Release reconciliation succeeded

|

||||

cozy-cluster-api capi-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-cluster-api capi-providers 4m1s True Release reconciliation succeeded

|

||||

cozy-dashboard dashboard 4m1s True Release reconciliation succeeded

|

||||

cozy-fluxcd cozy-fluxcd 4m1s True Release reconciliation succeeded

|

||||

cozy-grafana-operator grafana-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-kamaji kamaji 4m1s True Release reconciliation succeeded

|

||||

cozy-kubeovn kubeovn 4m1s True Release reconciliation succeeded

|

||||

cozy-kubevirt-cdi kubevirt-cdi 4m1s True Release reconciliation succeeded

|

||||

cozy-kubevirt-cdi kubevirt-cdi-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-kubevirt kubevirt 4m1s True Release reconciliation succeeded

|

||||

cozy-kubevirt kubevirt-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-linstor linstor 4m1s True Release reconciliation succeeded

|

||||

cozy-linstor piraeus-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-mariadb-operator mariadb-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-metallb metallb 4m1s True Release reconciliation succeeded

|

||||

cozy-monitoring monitoring 4m1s True Release reconciliation succeeded

|

||||

cozy-postgres-operator postgres-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-rabbitmq-operator rabbitmq-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-redis-operator redis-operator 4m1s True Release reconciliation succeeded

|

||||

cozy-telepresence telepresence 4m1s True Release reconciliation succeeded

|

||||

cozy-victoria-metrics-operator victoria-metrics-operator 4m1s True Release reconciliation succeeded

|

||||

tenant-root tenant-root 4m1s True Release reconciliation succeeded

|

||||

```
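When the list is long, it may be easier to filter for releases that are not ready yet. The sketch below assumes the column layout shown in the example output (READY as the fourth column); the function name `not_ready_releases` is made up for illustration.

```bash
# Read `kubectl get hr -A` output on stdin and print namespace/name for
# every release whose READY column (4th field) is not "True".
not_ready_releases() {
  awk 'NR > 1 && $4 != "True" { print $1 "/" $2 }'
}

# Example call against a live cluster:
#   kubectl get hr -A | not_ready_releases
```

An empty result suggests that every release has reconciled; rows with an empty READY column are also reported, because their fourth field is not `True`.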

|

||||

|

||||

#### Configure Storage

|

||||

|

||||

Set up an alias to access LINSTOR:

|

||||

```bash

|

||||

alias linstor='kubectl exec -n cozy-linstor deploy/linstor-controller -- linstor'

|

||||

```

|

||||

|

||||

List your nodes:

|

||||

```bash

|

||||

linstor node list

|

||||

```

|

||||

|

||||

example output:

|

||||

|

||||

```console

|

||||

+-------------------------------------------------------+

|

||||

| Node | NodeType | Addresses | State |

|

||||

|=======================================================|

|

||||

| srv1 | SATELLITE | 192.168.100.11:3367 (SSL) | Online |

|

||||

| srv2 | SATELLITE | 192.168.100.12:3367 (SSL) | Online |

|

||||

| srv3 | SATELLITE | 192.168.100.13:3367 (SSL) | Online |

|

||||

+-------------------------------------------------------+

|

||||

```

|

||||

|

||||

List empty devices:

|

||||

|

||||

```bash

|

||||

linstor physical-storage list

|

||||

```

|

||||

|

||||

example output:

|

||||

```console

|

||||

+--------------------------------------------+

|

||||

| Size | Rotational | Nodes |

|

||||

|============================================|

|

||||

| 107374182400 | True | srv3[/dev/sdb] |

|

||||

| | | srv1[/dev/sdb] |

|

||||

| | | srv2[/dev/sdb] |

|

||||

+--------------------------------------------+

|

||||

```

|

||||

|

||||

|

||||

Create storage pools:

|

||||

|

||||

```bash

|

||||

linstor ps cdp lvm srv1 /dev/sdb --pool-name data --storage-pool data

|

||||

linstor ps cdp lvm srv2 /dev/sdb --pool-name data --storage-pool data

|

||||

linstor ps cdp lvm srv3 /dev/sdb --pool-name data --storage-pool data

|

||||

```
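With more than a few nodes, the same command can be generated in a loop. The sketch below only prints the commands (a dry run) so they can be reviewed first; the helper name `pool_commands` is hypothetical, and the node names and device come from the example output above.

```bash
# Print one `linstor ps cdp` command per node; nothing is executed.
# Usage: pool_commands DEVICE NODE...
pool_commands() {
  device=$1
  shift
  for node in "$@"; do
    echo "linstor ps cdp lvm $node $device --pool-name data --storage-pool data"
  done
}

# Example call:
#   pool_commands /dev/sdb srv1 srv2 srv3
```

After reviewing the printed lines, they can be run one by one in a shell where the `linstor` alias from this guide is defined.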

|

||||

|

||||

List storage pools:

|

||||

|

||||

```bash

|

||||

linstor sp l

|

||||

```

|

||||

|

||||

example output:

|

||||

|

||||

```console

|

||||

+-------------------------------------------------------------------------------------------------------------------------------------+

|

||||

| StoragePool | Node | Driver | PoolName | FreeCapacity | TotalCapacity | CanSnapshots | State | SharedName |

|

||||

|=====================================================================================================================================|

|

||||

| DfltDisklessStorPool | srv1 | DISKLESS | | | | False | Ok | srv1;DfltDisklessStorPool |

|

||||

| DfltDisklessStorPool | srv2 | DISKLESS | | | | False | Ok | srv2;DfltDisklessStorPool |

|

||||

| DfltDisklessStorPool | srv3 | DISKLESS | | | | False | Ok | srv3;DfltDisklessStorPool |

|

||||

| data | srv1 | LVM | data | 100.00 GiB | 100.00 GiB | False | Ok | srv1;data |

|

||||

| data | srv2 | LVM | data | 100.00 GiB | 100.00 GiB | False | Ok | srv2;data |

|

||||

| data | srv3 | LVM | data | 100.00 GiB | 100.00 GiB | False | Ok | srv3;data |

|

||||

+-------------------------------------------------------------------------------------------------------------------------------------+

|

||||

```

|

||||

|

||||

|

||||

Create default storage classes:

|

||||

```yaml
kubectl create -f- <<EOT
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: local
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: linstor.csi.linbit.com
parameters:
  linstor.csi.linbit.com/storagePool: "data"
  linstor.csi.linbit.com/layerList: "storage"
  linstor.csi.linbit.com/allowRemoteVolumeAccess: "false"
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: replicated
provisioner: linstor.csi.linbit.com
parameters:
  linstor.csi.linbit.com/storagePool: "data"
  linstor.csi.linbit.com/autoPlace: "3"
  linstor.csi.linbit.com/layerList: "drbd storage"
  linstor.csi.linbit.com/allowRemoteVolumeAccess: "true"
  property.linstor.csi.linbit.com/DrbdOptions/auto-quorum: suspend-io
  property.linstor.csi.linbit.com/DrbdOptions/Resource/on-no-data-accessible: suspend-io
  property.linstor.csi.linbit.com/DrbdOptions/Resource/on-suspended-primary-outdated: force-secondary
  property.linstor.csi.linbit.com/DrbdOptions/Net/rr-conflict: retry-connect
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
EOT
```

|

||||

|

||||

List storage classes:

|

||||

|

||||

```bash

|

||||

kubectl get storageclasses

|

||||

```

|

||||

|

||||

example output:

|

||||

```console

|

||||

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

|

||||

local (default) linstor.csi.linbit.com Delete WaitForFirstConsumer true 11m

|

||||

replicated linstor.csi.linbit.com Delete WaitForFirstConsumer true 11m

|

||||

```

|

||||

|

||||

#### Configure Networking interconnection

|

||||

|

||||

To access your services, select a range of unused IPs, e.g. `192.168.100.200-192.168.100.250`.

|

||||

|

||||

**Note:** These IPs should be in the same network as the nodes, or all the routes needed to reach them should be in place.
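One way to sanity-check that a candidate address is really unused is to test it against ranges already handed out elsewhere. With the example values in this guide, `192.168.100.200` lies inside the DHCP range `192.168.100.3` to `192.168.100.254` from the dnsmasq step, so in such a setup it may be worth confirming that those addresses are never leased. The sketch below is a minimal IPv4 range test; `ip_to_int` and `ip_in_range` are hypothetical helper names.

```bash
# Convert a dotted IPv4 address to a single integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if address $1 lies within the inclusive range $2..$3.
ip_in_range() {
  ip=$(ip_to_int "$1")
  lo=$(ip_to_int "$2")
  hi=$(ip_to_int "$3")
  [ "$ip" -ge "$lo" ] && [ "$ip" -le "$hi" ]
}

# Example call with the values used in this guide:
#   ip_in_range 192.168.100.200 192.168.100.3 192.168.100.254 && echo "overlaps DHCP range"
```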

|

||||

|

||||

Configure MetalLB to use and announce this range:

|

||||

```yaml
kubectl create -f- <<EOT
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: cozystack
  namespace: cozy-metallb
spec:
  ipAddressPools:
  - cozystack
---
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: cozystack
  namespace: cozy-metallb
spec:
  addresses:
  - 192.168.100.200-192.168.100.250
  autoAssign: true
  avoidBuggyIPs: false
EOT
```

|

||||

|

||||

#### Set up basic applications

|

||||

|

||||

Get token from `tenant-root`:

|

||||

```bash

|

||||

kubectl get secret -n tenant-root tenant-root -o go-template='{{ printf "%s\n" (index .data "token" | base64decode) }}'

|

||||

```

|

||||

|

||||

Enable port forward to cozy-dashboard:

|

||||

```bash

|

||||

kubectl port-forward -n cozy-dashboard svc/dashboard 8080:80

|

||||

```

|

||||

|

||||

Open: http://localhost:8080/

|

||||

|

||||

- Select `tenant-root`

|

||||

- Click `Upgrade` button

|

||||

- In `host`, write the domain you wish to use as the parent domain for all deployed applications
  **Note:**
    - If you have no domain yet, you can use `192.168.100.200.nip.io`, where `192.168.100.200` is the first IP address in your network address range.
    - Alternatively, you can leave the default value; however, you will need to modify your `/etc/hosts` every time you want to access a specific application.

|

||||

- Set `etcd`, `monitoring` and `ingress` to the enabled position

|

||||

- Click Deploy
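For the nip.io option mentioned in the steps above, hostnames are formed by appending `.nip.io` to the IP address and prefixing the application name. The sketch below only builds the strings; the helper names `nip_host` and `app_host` are assumptions for illustration.

```bash
# Build a wildcard-DNS parent domain from an IPv4 address (nip.io convention).
nip_host() {
  echo "$1.nip.io"
}

# Compose an application hostname under that parent domain.
# Usage: app_host APP_NAME IP
app_host() {
  echo "$1.$(nip_host "$2")"
}

# Example call:
#   app_host grafana 192.168.100.200
```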

|

||||

|

||||

|

||||

Check that the persistent volumes were provisioned:

|

||||

|

||||

```bash

|

||||

kubectl get pvc -n tenant-root

|

||||

```

|

||||

|

||||

example output:

|

||||

```console

|

||||

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE

|

||||

data-etcd-0 Bound pvc-4cbd29cc-a29f-453d-b412-451647cd04bf 10Gi RWO local <unset> 2m10s

|

||||

data-etcd-1 Bound pvc-1579f95a-a69d-4a26-bcc2-b15ccdbede0d 10Gi RWO local <unset> 115s

|

||||

data-etcd-2 Bound pvc-907009e5-88bf-4d18-91e7-b56b0dbfb97e 10Gi RWO local <unset> 91s

|

||||

grafana-db-1 Bound pvc-7b3f4e23-228a-46fd-b820-d033ef4679af 10Gi RWO local <unset> 2m41s

|

||||

grafana-db-2 Bound pvc-ac9b72a4-f40e-47e8-ad24-f50d843b55e4 10Gi RWO local <unset> 113s

|

||||

vmselect-cachedir-vmselect-longterm-0 Bound pvc-622fa398-2104-459f-8744-565eee0a13f1 2Gi RWO local <unset> 2m21s

|

||||

vmselect-cachedir-vmselect-longterm-1 Bound pvc-fc9349f5-02b2-4e25-8bef-6cbc5cc6d690 2Gi RWO local <unset> 2m21s

|

||||

vmselect-cachedir-vmselect-shortterm-0 Bound pvc-7acc7ff6-6b9b-4676-bd1f-6867ea7165e2 2Gi RWO local <unset> 2m41s

|

||||

vmselect-cachedir-vmselect-shortterm-1 Bound pvc-e514f12b-f1f6-40ff-9838-a6bda3580eb7 2Gi RWO local <unset> 2m40s

|

||||

vmstorage-db-vmstorage-longterm-0 Bound pvc-e8ac7fc3-df0d-4692-aebf-9f66f72f9fef 10Gi RWO local <unset> 2m21s

|

||||

vmstorage-db-vmstorage-longterm-1 Bound pvc-68b5ceaf-3ed1-4e5a-9568-6b95911c7c3a 10Gi RWO local <unset> 2m21s

|

||||

vmstorage-db-vmstorage-shortterm-0 Bound pvc-cee3a2a4-5680-4880-bc2a-85c14dba9380 10Gi RWO local <unset> 2m41s

|

||||

vmstorage-db-vmstorage-shortterm-1 Bound pvc-d55c235d-cada-4c4a-8299-e5fc3f161789 10Gi RWO local <unset> 2m41s

|

||||

```

|

||||

|

||||

Check all pods are running:

|

||||

|

||||

|

||||

```bash

|

||||

kubectl get pod -n tenant-root

|

||||

```

|

||||

|

||||

example output:

|

||||

```console

|

||||

NAME READY STATUS RESTARTS AGE

|

||||

etcd-0 1/1 Running 0 2m1s

|

||||

etcd-1 1/1 Running 0 106s

|

||||

etcd-2 1/1 Running 0 82s

|

||||

grafana-db-1 1/1 Running 0 119s

|

||||

grafana-db-2 1/1 Running 0 13s

|

||||

grafana-deployment-74b5656d6-5dcvn 1/1 Running 0 90s

|

||||

grafana-deployment-74b5656d6-q5589 1/1 Running 1 (105s ago) 111s

|

||||

root-ingress-controller-6ccf55bc6d-pg79l 2/2 Running 0 2m27s

|

||||

root-ingress-controller-6ccf55bc6d-xbs6x 2/2 Running 0 2m29s

|

||||

root-ingress-defaultbackend-686bcbbd6c-5zbvp 1/1 Running 0 2m29s

|

||||

vmalert-vmalert-644986d5c-7hvwk 2/2 Running 0 2m30s

|

||||

vmalertmanager-alertmanager-0 2/2 Running 0 2m32s

|

||||

vmalertmanager-alertmanager-1 2/2 Running 0 2m31s

|

||||

vminsert-longterm-75789465f-hc6cz 1/1 Running 0 2m10s

|

||||

vminsert-longterm-75789465f-m2v4t 1/1 Running 0 2m12s

|

||||

vminsert-shortterm-78456f8fd9-wlwww 1/1 Running 0 2m29s

|

||||

vminsert-shortterm-78456f8fd9-xg7cw 1/1 Running 0 2m28s

|

||||

vmselect-longterm-0 1/1 Running 0 2m12s

|

||||

vmselect-longterm-1 1/1 Running 0 2m12s

|

||||

vmselect-shortterm-0 1/1 Running 0 2m31s

|

||||

vmselect-shortterm-1 1/1 Running 0 2m30s

|

||||

vmstorage-longterm-0 1/1 Running 0 2m12s

|

||||

vmstorage-longterm-1 1/1 Running 0 2m12s

|

||||

vmstorage-shortterm-0 1/1 Running 0 2m32s

|

||||

vmstorage-shortterm-1 1/1 Running 0 2m31s

|

||||

```

|

||||

|

||||

Now you can get the public IP of the ingress controller:

|

||||

```

|

||||

kubectl get svc -n tenant-root root-ingress-controller

|

||||

```

|

||||

|

||||

example output:

|

||||

```console

|

||||

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

|

||||

root-ingress-controller LoadBalancer 10.96.16.141 192.168.100.200 80:31632/TCP,443:30113/TCP 3m33s

|

||||

```

|

||||

|

||||

Use `grafana.example.org` (resolving to 192.168.100.200) to access system monitoring, where `example.org` is the domain you specified for `tenant-root`.

|

||||

|

||||

- login: `admin`

|

||||

- password:

|

||||

|

||||

```bash

|

||||

kubectl get secret -n tenant-root grafana-admin-password -o go-template='{{ printf "%s\n" (index .data "password" | base64decode) }}'

|

||||

```
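The `go-template` commands in this guide rely on `base64decode` because Kubernetes stores Secret values base64-encoded. As a rough equivalent, the value can be decoded in the shell instead; the helper name `decode_secret_value` is hypothetical, and `base64 -d` is assumed to be available (as in GNU coreutils or BusyBox).

```bash
# Decode a single base64-encoded Secret value passed as the first argument.
decode_secret_value() {
  printf '%s' "$1" | base64 -d
}

# Example call against a live cluster, using kubectl's jsonpath output:
#   decode_secret_value "$(kubectl get secret -n tenant-root grafana-admin-password \
#     -o jsonpath='{.data.password}')"
```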

|

||||

|

||||

@@ -20,28 +20,9 @@ miss_map=$(echo "$new_map" | awk 'NR==FNR { new_map[$1 " " $2] = $3; next } { if

|

||||

resolved_miss_map=$(

|

||||

echo "$miss_map" | while read chart version commit; do

|

||||

if [ "$commit" = HEAD ]; then

|

||||

line=$(awk '/^version:/ {print NR; exit}' "./$chart/Chart.yaml")

|

||||

change_commit=$(git --no-pager blame -L"$line",+1 -- "$chart/Chart.yaml" | awk '{print $1}')

|

||||

|

||||

if [ "$change_commit" = "00000000" ]; then

|

||||

# Not committed yet, use previous commit

|

||||

line=$(git show HEAD:"./$chart/Chart.yaml" | awk '/^version:/ {print NR; exit}')

|

||||

commit=$(git --no-pager blame -L"$line",+1 HEAD -- "$chart/Chart.yaml" | awk '{print $1}')

|

||||

if [ $(echo $commit | cut -c1) = "^" ]; then

|

||||

# Previous commit does not exist

|

||||

commit=$(echo $commit | cut -c2-)

|

||||

fi

|

||||

else

|

||||

# Committed, but version_map wasn't updated

|

||||

line=$(git show HEAD:"./$chart/Chart.yaml" | awk '/^version:/ {print NR; exit}')

|

||||

change_commit=$(git --no-pager blame -L"$line",+1 HEAD -- "$chart/Chart.yaml" | awk '{print $1}')

|

||||

if [ $(echo $change_commit | cut -c1) = "^" ]; then

|

||||

# Previous commit does not exist

|

||||

commit=$(echo $change_commit | cut -c2-)

|

||||

else

|

||||

commit=$(git describe --always "$change_commit~1")

|

||||

fi

|

||||

fi

|

||||

line=$(git show HEAD:"./$chart/Chart.yaml" | awk '/^version:/ {print NR; exit}')

|

||||

change_commit=$(git --no-pager blame -L"$line",+1 HEAD -- "$chart/Chart.yaml" | awk '{print $1}')

|

||||

commit=$(git describe --always "$change_commit~1")

|

||||

fi

|

||||

echo "$chart $version $commit"

|

||||

done

|

||||

|

||||

22

hack/prepare_release.sh

Executable file


@@ -0,0 +1,22 @@

|

||||

#!/bin/sh

|

||||

set -e

|

||||

|

||||

if [ -z "$1" ]; then

|

||||

echo "Please pass version in the first argument"

|

||||

echo "Example: $0 v0.0.2"

|

||||

exit 1

|

||||

fi

|

||||

|

||||

version=$1

|

||||

talos_version=$(awk '/^version:/ {print $2}' packages/core/installer/images/talos/profiles/installer.yaml)

|

||||

|

||||

set -x

|

||||

|

||||

sed -i "s|\(ghcr.io/aenix-io/cozystack/matchbox:\)v[^ ]\+|\1${version}|g" README.md

|

||||

sed -i "s|\(ghcr.io/aenix-io/cozystack/talos:\)v[^ ]\+|\1${talos_version}|g" README.md

|

||||

|

||||

sed -i "/^TAG / s|=.*|= ${version}|" \

|

||||

packages/apps/http-cache/Makefile \

|

||||

packages/apps/kubernetes/Makefile \

|

||||

packages/core/installer/Makefile \

|

||||

packages/system/dashboard/Makefile
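To see what the image-tag substitution in this script does without touching `README.md`, the same expression can be applied to a sample line on stdin. This is a sketch under assumptions: the function name `bump_matchbox_tag` is hypothetical, and `v[^ ][^ ]*` is used as a portable spelling of the script's `v[^ ]\+`, since `\+` is a GNU sed extension.

```bash
# Replace the tag of the matchbox image reference on each input line.
# Usage: ... | bump_matchbox_tag NEW_VERSION
bump_matchbox_tag() {
  sed "s|\(ghcr.io/aenix-io/cozystack/matchbox:\)v[^ ][^ ]*|\1$1|g"
}

# Example call:
#   printf '%s\n' 'image: ghcr.io/aenix-io/cozystack/matchbox:v0.0.2 -address=:8080' \
#     | bump_matchbox_tag v0.4.0
```

Only the tag after the colon changes; the rest of the line, including any following arguments, is left as it was.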

|

||||

@@ -15,6 +15,13 @@ metadata:

|

||||

namespace: cozy-system

|

||||

---

|

||||

# Source: cozy-installer/templates/cozystack.yaml

|

||||

apiVersion: v1

|

||||

kind: ServiceAccount

|

||||

metadata:

|

||||

name: cozystack

|

||||

namespace: cozy-system

|

||||

---

|

||||

# Source: cozy-installer/templates/cozystack.yaml

|

||||

apiVersion: rbac.authorization.k8s.io/v1

|

||||

kind: ClusterRoleBinding

|

||||

metadata:

|

||||

@@ -54,6 +61,8 @@ spec:

|

||||

selector:

|

||||

matchLabels:

|

||||

app: cozystack

|

||||

strategy:

|

||||

type: Recreate

|

||||

template:

|

||||

metadata:

|

||||

labels:

|

||||

@@ -63,26 +72,14 @@ spec:

|

||||

serviceAccountName: cozystack

|

||||

containers:

|

||||

- name: cozystack

|

||||

image: "ghcr.io/aenix-io/cozystack/cozystack:v0.4.0"

|

||||

image: "ghcr.io/aenix-io/cozystack/installer:v0.0.2"

|

||||

env:

|

||||

- name: KUBERNETES_SERVICE_HOST

|

||||

value: localhost

|

||||

- name: KUBERNETES_SERVICE_PORT

|

||||

value: "7445"

|

||||

- name: K8S_AWAIT_ELECTION_ENABLED

|

||||

value: "1"

|

||||

- name: K8S_AWAIT_ELECTION_NAME

|

||||

value: cozystack

|

||||

- name: K8S_AWAIT_ELECTION_LOCK_NAME

|

||||

value: cozystack

|

||||

- name: K8S_AWAIT_ELECTION_LOCK_NAMESPACE

|

||||

value: cozy-system

|

||||

- name: K8S_AWAIT_ELECTION_IDENTITY

|

||||

valueFrom:

|

||||

fieldRef:

|

||||

fieldPath: metadata.name

|

||||

- name: darkhttpd

|

||||

image: "ghcr.io/aenix-io/cozystack/cozystack:v0.4.0"

|

||||

image: "ghcr.io/aenix-io/cozystack/installer:v0.0.2"

|

||||

command:

|

||||

- /usr/bin/darkhttpd

|

||||

- /cozystack/assets

|

||||

@@ -95,6 +92,3 @@ spec:

|

||||

- key: "node.kubernetes.io/not-ready"

|

||||

operator: "Exists"

|

||||

effect: "NoSchedule"

|

||||

- key: "node.cilium.io/agent-not-ready"

|

||||

operator: "Exists"

|

||||

effect: "NoSchedule"

|

||||

|

||||

@@ -7,7 +7,7 @@ repo:
awk '$$3 != "HEAD" {print "mkdir -p $(TMP)/" $$1 "-" $$2}' versions_map | sh -ex
awk '$$3 != "HEAD" {print "git archive " $$3 " " $$1 " | tar -xf- --strip-components=1 -C $(TMP)/" $$1 "-" $$2 }' versions_map | sh -ex
helm package -d "$(OUT)" $$(find . $(TMP) -mindepth 2 -maxdepth 2 -name Chart.yaml | awk 'sub("/Chart.yaml", "")' | sort -V)
cd "$(OUT)" && helm repo index . --url http://cozystack.cozy-system.svc/repos/apps
cd "$(OUT)" && helm repo index .
rm -rf "$(TMP)"

fix-chartnames:
@@ -1,25 +0,0 @@
apiVersion: v2
name: clickhouse
description: Managed ClickHouse service
icon: https://cdn.worldvectorlogo.com/logos/clickhouse.svg

# A chart can be either an 'application' or a 'library' chart.
#
# Application charts are a collection of templates that can be packaged into versioned archives
# to be deployed.
#
# Library charts provide useful utilities or functions for the chart developer. They're included as
# a dependency of application charts to inject those utilities and functions into the rendering
# pipeline. Library charts do not define any templates and therefore cannot be deployed.
type: application

# This is the chart version. This version number should be incremented each time you make changes
# to the chart and its templates, including the app version.
# Versions are expected to follow Semantic Versioning (https://semver.org/)
version: 0.2.0

# This is the version number of the application being deployed. This version number should be
# incremented each time you make changes to the application. Versions are not expected to
# follow Semantic Versioning. They should reflect the version the application is using.
# It is recommended to use it with quotes.
appVersion: "24.3.0"
@@ -1,36 +0,0 @@
apiVersion: "clickhouse.altinity.com/v1"
kind: "ClickHouseInstallation"
metadata:
name: "{{ .Release.Name }}"
spec:
{{- with .Values.size }}
defaults:
templates:
dataVolumeClaimTemplate: data-volume-template
{{- end }}
configuration:
{{- with .Values.users }}
users:
{{- range $name, $u := . }}
{{ $name }}/password_sha256_hex: {{ sha256sum $u.password }}
{{ $name }}/profile: {{ ternary "readonly" "default" (index $u "readonly" | default false) }}
{{- end }}
{{- end }}
profiles:
readonly/readonly: "1"
clusters:
- name: "clickhouse"
layout:
shardsCount: {{ .Values.shards }}
replicasCount: {{ .Values.replicas }}
{{- with .Values.size }}
templates:
volumeClaimTemplates:
- name: data-volume-template
spec:
accessModes:
- ReadWriteOnce
resources:
requests:
storage: {{ . }}
{{- end }}
@@ -1,10 +0,0 @@
size: 10Gi
shards: 1
replicas: 2

users:
user1:
password: strongpassword
user2:
readonly: true
password: hackme
@@ -16,10 +16,10 @@ type: application
# This is the chart version. This version number should be incremented each time you make changes
# to the chart and its templates, including the app version.
# Versions are expected to follow Semantic Versioning (https://semver.org/)
version: 0.2.0
version: 0.1.0

# This is the version number of the application being deployed. This version number should be
# incremented each time you make changes to the application. Versions are not expected to
# follow Semantic Versioning. They should reflect the version the application is using.
# It is recommended to use it with quotes.
appVersion: "1.25.3"
appVersion: "1.16.0"
@@ -1,20 +1,22 @@
PUSH := 1
LOAD := 0
REGISTRY := ghcr.io/aenix-io/cozystack
NGINX_CACHE_TAG = v0.1.0

include ../../../scripts/common-envs.mk
TAG := v0.0.2

image: image-nginx

image-nginx:
docker buildx build --platform linux/amd64 --build-arg ARCH=amd64 images/nginx-cache \
--provenance false \
--tag $(REGISTRY)/nginx-cache:$(call settag,$(NGINX_CACHE_TAG)) \
--tag $(REGISTRY)/nginx-cache:$(call settag,$(NGINX_CACHE_TAG)-$(TAG)) \
--cache-from type=registry,ref=$(REGISTRY)/nginx-cache:latest \
--tag $(REGISTRY)/nginx-cache:$(NGINX_CACHE_TAG) \
--tag $(REGISTRY)/nginx-cache:$(NGINX_CACHE_TAG)-$(TAG) \
--cache-from type=registry,ref=$(REGISTRY)/nginx-cache:$(NGINX_CACHE_TAG) \
--cache-to type=inline \
--metadata-file images/nginx-cache.json \
--push=$(PUSH) \
--load=$(LOAD)
echo "$(REGISTRY)/nginx-cache:$(call settag,$(NGINX_CACHE_TAG))" > images/nginx-cache.tag
echo "$(REGISTRY)/nginx-cache:$(NGINX_CACHE_TAG)" > images/nginx-cache.tag

update:
tag=$$(git ls-remote --tags --sort="v:refname" https://github.com/chrislim2888/IP2Location-C-Library | awk -F'[/^]' 'END{print $$3}') && \
@@ -1,4 +1,14 @@
{
"containerimage.config.digest": "sha256:9eb68d2d503d7e22afc6fde2635f566fd3456bbdb3caad5dc9f887be1dc2b8ab",
"containerimage.digest": "sha256:1f44274dbc2c3be2a98e6cef83d68a041ae9ef31abb8ab069a525a2a92702bdd"
"containerimage.config.digest": "sha256:f4ad0559a74749de0d11b1835823bf9c95332962b0909450251d849113f22c19",
"containerimage.descriptor": {
"mediaType": "application/vnd.docker.distribution.manifest.v2+json",
"digest": "sha256:3a0e8d791e0ccf681711766387ea9278e7d39f1956509cead2f72aa0001797ef",
"size": 1093,
"platform": {
"architecture": "amd64",
"os": "linux"
}
},
"containerimage.digest": "sha256:3a0e8d791e0ccf681711766387ea9278e7d39f1956509cead2f72aa0001797ef",
"image.name": "ghcr.io/aenix-io/cozystack/nginx-cache:v0.1.0,ghcr.io/aenix-io/cozystack/nginx-cache:v0.1.0-v0.0.2"
}
@@ -74,7 +74,7 @@ data:
option redispatch 1
default-server observe layer7 error-limit 10 on-error mark-down

{{- range $i, $e := until (int $.Values.nginx.replicas) }}
{{- range $i, $e := until (int $.Values.replicas) }}
server cache{{ $i }} {{ $.Release.Name }}-nginx-cache-{{ $i }}:80 check
{{- end }}
{{- range $i, $e := $.Values.endpoints }}
@@ -7,7 +7,7 @@ metadata:
app.kubernetes.io/instance: {{ .Release.Name }}
app.kubernetes.io/managed-by: {{ .Release.Service }}
spec:
replicas: {{ .Values.haproxy.replicas }}
replicas: 2
selector:
matchLabels:
app: {{ .Release.Name }}-haproxy
@@ -11,7 +11,7 @@ spec:
selector:
matchLabels:
app: {{ $.Release.Name }}-nginx-cache
{{- range $i := until (int $.Values.nginx.replicas) }}
{{- range $i := until 3 }}
---
apiVersion: apps/v1
kind: Deployment
@@ -1,10 +1,4 @@
external: false

haproxy:
replicas: 2
nginx:
replicas: 2

size: 10Gi
endpoints:
- 10.100.3.1:80
@@ -1,25 +0,0 @@
apiVersion: v2
name: kafka
description: Managed Kafka service
icon: https://upload.wikimedia.org/wikipedia/commons/0/05/Apache_kafka.svg

# A chart can be either an 'application' or a 'library' chart.
#
# Application charts are a collection of templates that can be packaged into versioned archives
# to be deployed.
#
# Library charts provide useful utilities or functions for the chart developer. They're included as
# a dependency of application charts to inject those utilities and functions into the rendering
# pipeline. Library charts do not define any templates and therefore cannot be deployed.
type: application

# This is the chart version. This version number should be incremented each time you make changes
# to the chart and its templates, including the app version.
# Versions are expected to follow Semantic Versioning (https://semver.org/)
version: 0.1.0

# This is the version number of the application being deployed. This version number should be
# incremented each time you make changes to the application. Versions are not expected to
# follow Semantic Versioning. They should reflect the version the application is using.
# It is recommended to use it with quotes.
appVersion: "3.7.0"
@@ -1,53 +0,0 @@
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
name: {{ .Release.Name }}
labels:
app.kubernetes.io/instance: {{ .Release.Name }}
app.kubernetes.io/managed-by: {{ .Release.Service }}
spec:
kafka:
replicas: {{ .Values.replicas }}
listeners:
- name: plain
port: 9092
type: internal
tls: false
- name: tls
port: 9093
type: internal
tls: true
- name: external
port: 9094
{{- if .Values.external }}
type: loadbalancer
{{- else }}
type: internal
{{- end }}
tls: false
config:
offsets.topic.replication.factor: 3
transaction.state.log.replication.factor: 3
transaction.state.log.min.isr: 2
default.replication.factor: 3
min.insync.replicas: 2
storage:
type: jbod
volumes:
- id: 0
type: persistent-claim
{{- with .Values.kafka.size }}
size: {{ . }}
{{- end }}
deleteClaim: true
zookeeper:
replicas: {{ .Values.replicas }}
storage:
type: persistent-claim
{{- with .Values.zookeeper.size }}
size: {{ . }}
{{- end }}
deleteClaim: false
entityOperator:
topicOperator: {}
userOperator: {}
@@ -1,17 +0,0 @@
{{- range $topic := .Values.topics }}
---
apiVersion: kafka.strimzi.io/v1beta2
kind: KafkaTopic
metadata:
name: "{{ $.Release.Name }}-{{ kebabcase $topic.name }}"
labels:
strimzi.io/cluster: "{{ $.Release.Name }}"
spec:
topicName: "{{ $topic.name }}"
partitions: 10
replicas: 3
{{- with $topic.config }}
config:
{{- toYaml . | nindent 4 }}
{{- end }}
{{- end }}
@@ -1,22 +0,0 @@
external: false
kafka:
size: 10Gi
replicas: 3
zookeeper:
size: 5Gi
replicas: 3

topics:
- name: Results
partitions: 1
replicas: 3
config:
min.insync.replicas: 2
- name: Orders
config:
cleanup.policy: compact
segment.ms: 3600000
max.compaction.lag.ms: 5400000
min.insync.replicas: 2
partitions: 1
replicationFactor: 3
@@ -16,10 +16,10 @@ type: application
# This is the chart version. This version number should be incremented each time you make changes
# to the chart and its templates, including the app version.
# Versions are expected to follow Semantic Versioning (https://semver.org/)
version: 0.2.0
version: 0.1.0

# This is the version number of the application being deployed. This version number should be
# incremented each time you make changes to the application. Versions are not expected to
# follow Semantic Versioning. They should reflect the version the application is using.
# It is recommended to use it with quotes.
appVersion: "1.19.0"
appVersion: "1.16.0"
@@ -1,17 +1,19 @@
PUSH := 1
LOAD := 0
REGISTRY := ghcr.io/aenix-io/cozystack
TAG := v0.0.2
UBUNTU_CONTAINER_DISK_TAG = v1.29.1

include ../../../scripts/common-envs.mk

image: image-ubuntu-container-disk

image-ubuntu-container-disk:
docker buildx build --platform linux/amd64 --build-arg ARCH=amd64 images/ubuntu-container-disk \
--provenance false \
--tag $(REGISTRY)/ubuntu-container-disk:$(call settag,$(UBUNTU_CONTAINER_DISK_TAG)) \
--tag $(REGISTRY)/ubuntu-container-disk:$(call settag,$(UBUNTU_CONTAINER_DISK_TAG)-$(TAG)) \
--cache-from type=registry,ref=$(REGISTRY)/ubuntu-container-disk:latest \
--tag $(REGISTRY)/ubuntu-container-disk:$(UBUNTU_CONTAINER_DISK_TAG) \
--tag $(REGISTRY)/ubuntu-container-disk:$(UBUNTU_CONTAINER_DISK_TAG)-$(TAG) \
--cache-from type=registry,ref=$(REGISTRY)/ubuntu-container-disk:$(UBUNTU_CONTAINER_DISK_TAG) \
--cache-to type=inline \
--metadata-file images/ubuntu-container-disk.json \
--push=$(PUSH) \
--load=$(LOAD)
echo "$(REGISTRY)/ubuntu-container-disk:$(call settag,$(UBUNTU_CONTAINER_DISK_TAG))" > images/ubuntu-container-disk.tag
echo "$(REGISTRY)/ubuntu-container-disk:$(UBUNTU_CONTAINER_DISK_TAG)" > images/ubuntu-container-disk.tag
@@ -1,4 +1,4 @@
{
"containerimage.config.digest": "sha256:a7e8e6e35ac07bcf6253c9cfcf21fd3c315bd0653ad0427dd5f0cae95ffd3722",
"containerimage.digest": "sha256:c03bffeeb70fe7dd680d2eca3021d2405fbcd9961dd38437f5673560c31c72cc"
"containerimage.config.digest": "sha256:e982cfa2320d3139ed311ae44bcc5ea18db7e4e76d2746e0af04c516288ff0f1",
"containerimage.digest": "sha256:34f6aba5b5a2afbb46bbb891ef4ddc0855c2ffe4f9e5a99e8e553286ddd2c070"
}
@@ -15,12 +15,6 @@ spec:
labels:
app: {{ .Release.Name }}-cluster-autoscaler
spec:
tolerations:
- key: CriticalAddonsOnly
operator: Exists
- key: node-role.kubernetes.io/control-plane
operator: Exists
effect: "NoSchedule"
containers:
- image: ghcr.io/kvaps/test:cluster-autoscaller
name: cluster-autoscaler
@@ -64,13 +64,12 @@ metadata:
cluster.x-k8s.io/managed-by: kamaji
name: {{ .Release.Name }}
namespace: {{ .Release.Namespace }}
{{- range $groupName, $group := .Values.nodeGroups }}
---
apiVersion: bootstrap.cluster.x-k8s.io/v1beta1
kind: KubeadmConfigTemplate
metadata:
name: {{ $.Release.Name }}-{{ $groupName }}
namespace: {{ $.Release.Namespace }}
name: {{ .Release.Name }}-md-0
namespace: {{ .Release.Namespace }}
spec:
template:
spec:
@@ -79,7 +78,7 @@ spec:
kubeletExtraArgs: {}
discovery:
bootstrapToken:
apiServerEndpoint: {{ $.Release.Name }}.{{ $.Release.Namespace }}.svc:6443
apiServerEndpoint: {{ .Release.Name }}.{{ .Release.Namespace }}.svc:6443
initConfiguration:
skipPhases:
- addon/kube-proxy
@@ -87,8 +86,8 @@ spec:
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha1
kind: KubevirtMachineTemplate
metadata:
name: {{ $.Release.Name }}-{{ $groupName }}
namespace: {{ $.Release.Namespace }}
name: {{ .Release.Name }}-md-0
namespace: {{ .Release.Namespace }}
spec:
template:
spec:
@@ -96,7 +95,7 @@ spec:
checkStrategy: ssh
virtualMachineTemplate:
metadata:
namespace: {{ $.Release.Namespace }}
namespace: {{ .Release.Namespace }}
spec:
runStrategy: Always
template:
@@ -104,7 +103,7 @@ spec:
domain:
cpu:
threads: 1
cores: {{ $group.resources.cpu }}
cores: 2
sockets: 1
devices:
disks:
@@ -113,7 +112,7 @@ spec:
name: containervolume
networkInterfaceMultiqueue: true
memory:
guest: {{ $group.resources.memory }}
guest: 1024Mi
evictionStrategy: External
volumes:
- containerDisk:
@@ -123,28 +122,29 @@ spec:
apiVersion: cluster.x-k8s.io/v1beta1
kind: MachineDeployment
metadata:
name: {{ $.Release.Name }}-{{ $groupName }}
namespace: {{ $.Release.Namespace }}
name: {{ .Release.Name }}-md-0
namespace: {{ .Release.Namespace }}
annotations:
cluster.x-k8s.io/cluster-api-autoscaler-node-group-min-size: "{{ $group.minReplicas }}"
cluster.x-k8s.io/cluster-api-autoscaler-node-group-max-size: "{{ $group.maxReplicas }}"
capacity.cluster-autoscaler.kubernetes.io/memory: "{{ $group.resources.memory }}"
capacity.cluster-autoscaler.kubernetes.io/cpu: "{{ $group.resources.cpu }}"
cluster.x-k8s.io/cluster-api-autoscaler-node-group-max-size: "2"
cluster.x-k8s.io/cluster-api-autoscaler-node-group-min-size: "0"
capacity.cluster-autoscaler.kubernetes.io/memory: "1024Mi"
capacity.cluster-autoscaler.kubernetes.io/cpu: "2"
spec:
clusterName: {{ $.Release.Name }}
clusterName: {{ .Release.Name }}
selector:
matchLabels: null
template:
spec:
bootstrap:
configRef:
apiVersion: bootstrap.cluster.x-k8s.io/v1beta1
kind: KubeadmConfigTemplate
name: {{ $.Release.Name }}-{{ $groupName }}
name: {{ .Release.Name }}-md-0
namespace: default
clusterName: {{ $.Release.Name }}
clusterName: {{ .Release.Name }}
infrastructureRef:
apiVersion: infrastructure.cluster.x-k8s.io/v1alpha1
kind: KubevirtMachineTemplate
name: {{ $.Release.Name }}-{{ $groupName }}
name: {{ .Release.Name }}-md-0
namespace: default
version: v1.29.0
{{- end }}
version: v1.23.10
@@ -16,10 +16,12 @@ spec:
spec:
serviceAccountName: {{ .Release.Name }}-kcsi
priorityClassName: system-cluster-critical
nodeSelector:
node-role.kubernetes.io/control-plane: ""
tolerations:
- key: CriticalAddonsOnly
operator: Exists
- key: node-role.kubernetes.io/control-plane
- key: node-role.kubernetes.io/master
operator: Exists
effect: "NoSchedule"
containers:
@@ -12,12 +12,6 @@ spec:
spec:
serviceAccountName: {{ .Release.Name }}-flux-teardown
restartPolicy: Never
tolerations:
- key: CriticalAddonsOnly
operator: Exists
- key: node-role.kubernetes.io/control-plane
operator: Exists
effect: "NoSchedule"
containers:
- name: kubectl
image: docker.io/clastix/kubectl:v1.29.1
@@ -14,12 +14,6 @@ spec:
labels:
k8s-app: {{ .Release.Name }}-kccm
spec:
tolerations:
- key: CriticalAddonsOnly
operator: Exists
- key: node-role.kubernetes.io/control-plane
operator: Exists
effect: "NoSchedule"
containers:
- name: kubevirt-cloud-controller-manager
args:
@@ -50,4 +44,6 @@ spec:
- secret:
secretName: {{ .Release.Name }}-admin-kubeconfig
name: kubeconfig
tolerations:
- operator: Exists
serviceAccountName: {{ .Release.Name }}-kccm
11

packages/apps/kubernetes/values.schema.json

Normal file

@@ -0,0 +1,11 @@
{
"$schema": "http://json-schema.org/schema#",
"type": "object",
"properties": {
"host": {
"type": "string",
"title": "Domain name for this kubernetes cluster",
"description": "This host will be used for all apps deployed in this tenant"
}
}
}
@@ -1,10 +1 @@
host: ""
controlPlane:
replicas: 2
nodeGroups:
md0:
minReplicas: 0
maxReplicas: 10
resources:
cpu: 2
memory: 1024Mi
@@ -16,10 +16,10 @@ type: application
# This is the chart version. This version number should be incremented each time you make changes
# to the chart and its templates, including the app version.
# Versions are expected to follow Semantic Versioning (https://semver.org/)
version: 0.3.0
version: 0.1.0

# This is the version number of the application being deployed. This version number should be
# incremented each time you make changes to the application. Versions are not expected to
# follow Semantic Versioning. They should reflect the version the application is using.
# It is recommended to use it with quotes.
appVersion: "11.0.2"
appVersion: "1.16.0"
@@ -1,7 +1,7 @@
{{- range $name := .Values.databases }}
{{ $dnsName := replace "_" "-" $name }}
---
apiVersion: k8s.mariadb.com/v1alpha1
apiVersion: mariadb.mmontes.io/v1alpha1
kind: Database
metadata:
name: {{ $.Release.Name }}-{{ $dnsName }}
@@ -1,5 +1,5 @@
---
apiVersion: k8s.mariadb.com/v1alpha1
apiVersion: mariadb.mmontes.io/v1alpha1
kind: MariaDB
metadata:
name: {{ .Release.Name }}
@@ -12,7 +12,7 @@ spec:

port: 3306

replicas: {{ .Values.replicas }}
replicas: 2
affinity:
podAntiAffinity:
requiredDuringSchedulingIgnoredDuringExecution:
@@ -28,18 +28,15 @@ spec:
- {{ .Release.Name }}
topologyKey: "kubernetes.io/hostname"

{{- if gt (int .Values.replicas) 1 }}
replication:
enabled: true
#primary:
# podIndex: 0
# automaticFailover: true
{{- end }}

metrics:
enabled: true
exporter:
image: prom/mysqld-exporter:v0.15.1
image: prom/mysqld-exporter:v0.14.0
resources:
requests:
cpu: 50m
@@ -56,10 +53,14 @@ spec:
name: {{ .Release.Name }}-my-cnf
key: config

storage:
size: {{ .Values.size }}
resizeInUseVolumes: true
waitForVolumeResize: true
volumeClaimTemplate:
resources:
requests:
storage: {{ .Values.size }}
accessModes:
- ReadWriteOnce



{{- if .Values.external }}
primaryService:
@@ -2,7 +2,7 @@
{{ if not (eq $name "root") }}
{{ $dnsName := replace "_" "-" $name }}
---
apiVersion: k8s.mariadb.com/v1alpha1
apiVersion: mariadb.mmontes.io/v1alpha1
kind: User
metadata:
name: {{ $.Release.Name }}-{{ $dnsName }}
@@ -15,7 +15,7 @@ spec:
key: {{ $name }}-password
maxUserConnections: {{ $u.maxUserConnections }}
---
apiVersion: k8s.mariadb.com/v1alpha1
apiVersion: mariadb.mmontes.io/v1alpha1
kind: Grant
metadata:
name: {{ $.Release.Name }}-{{ $dnsName }}
@@ -1,8 +1,6 @@
external: false
size: 10Gi

replicas: 2

users:
root:
password: strongpassword
@@ -16,10 +16,10 @@ type: application
# This is the chart version. This version number should be incremented each time you make changes
# to the chart and its templates, including the app version.
# Versions are expected to follow Semantic Versioning (https://semver.org/)
version: 0.2.0
version: 0.1.0
|

||||

|

||||