mirror of

https://github.com/outbackdingo/cozystack.git

synced 2026-01-28 18:18:41 +00:00

Compare commits

254 Commits

v0.31.0-rc

...

v0.32.0

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

3ce6dbe850 | ||

|

|

8d5007919f | ||

|

|

08e569918b | ||

|

|

6498000721 | ||

|

|

8486e6b3aa | ||

|

|

3f6b6798f4 | ||

|

|

c1b928b8ef | ||

|

|

c2e8fba483 | ||

|

|

62cb694d72 | ||

|

|

c619343aa2 | ||

|

|

75ad26989d | ||

|

|

c4fc8c18df | ||

|

|

8663dc940f | ||

|

|

cf983a8f9c | ||

|

|

ad6aa0ca94 | ||

|

|

9dc5d62f47 | ||

|

|

3b8a9f9d2c | ||

|

|

ab9926a177 | ||

|

|

f83741eb09 | ||

|

|

028f2e4e8d | ||

|

|

255fa8cbe1 | ||

|

|

b42f5cdc01 | ||

|

|

74633ad699 | ||

|

|

980185ca2b | ||

|

|

8eabe30548 | ||

|

|

0c9c688e6d | ||

|

|

908c75927e | ||

|

|

0a1f078384 | ||

|

|

6a713e5eb4 | ||

|

|

8f0a28bad5 | ||

|

|

0fa70d9d38 | ||

|

|

b14c82d606 | ||

|

|

8e79f24c5b | ||

|

|

3266a5514e | ||

|

|

0c37323a15 | ||

|

|

10af98e158 | ||

|

|

632224a30a | ||

|

|

e8d11e64a6 | ||

|

|

27c7a2feb5 | ||

|

|

9555386bd7 | ||

|

|

9733de38a3 | ||

|

|

775a05cc3a | ||

|

|

4e5cc2ae61 | ||

|

|

32adf5ab38 | ||

|

|

28302e776e | ||

|

|

911ca64de0 | ||

|

|

045ea76539 | ||

|

|

cee820e82c | ||

|

|

6183b715b7 | ||

|

|

2669ab6072 | ||

|

|

96506c7cce | ||

|

|

7bb70c839e | ||

|

|

ba97a4593c | ||

|

|

c467ed798a | ||

|

|

ed881f0741 | ||

|

|

0e0dabdd08 | ||

|

|

bd8f8bde95 | ||

|

|

646dab497c | ||

|

|

dc3b61d164 | ||

|

|

4479a038cd | ||

|

|

dfd01ff118 | ||

|

|

d2bb66db31 | ||

|

|

7af97e2d9f | ||

|

|

ac5145be87 | ||

|

|

4779db2dda | ||

|

|

25c2774bc8 | ||

|

|

bbee8103eb | ||

|

|

730ea4d5ef | ||

|

|

13fccdc465 | ||

|

|

f1b66c80f6 | ||

|

|

f34f140d49 | ||

|

|

520fbfb2e4 | ||

|

|

25016580c1 | ||

|

|

f10f8455fc | ||

|

|

974581d39b | ||

|

|

7e24297913 | ||

|

|

b6142cd4f5 | ||

|

|

e87994c769 | ||

|

|

b140f1b57f | ||

|

|

64936021d2 | ||

|

|

a887e19e6c | ||

|

|

92b97a569e | ||

|

|

0e22358b30 | ||

|

|

7429daf99c | ||

|

|

b470b82e2a | ||

|

|

a0700e7399 | ||

|

|

228e1983bc | ||

|

|

7023abdba7 | ||

|

|

1b43a5f160 | ||

|

|

20f4066c16 | ||

|

|

ea0dd68e84 | ||

|

|

e0c3d2324f | ||

|

|

cb303d694c | ||

|

|

6130f43d06 | ||

|

|

4db55ac5eb | ||

|

|

bfd20a5e0e | ||

|

|

977141bed3 | ||

|

|

c4f8d6a251 | ||

|

|

9633ca4d25 | ||

|

|

f798cbd9f9 | ||

|

|

cf87779f7b | ||

|

|

c69135e0e5 | ||

|

|

a9c3a4c601 | ||

|

|

d1081c86b3 | ||

|

|

beadc80778 | ||

|

|

5bbb5a6266 | ||

|

|

0664370218 | ||

|

|

225d103509 | ||

|

|

0e22e3c12c | ||

|

|

7b8e7e40ce | ||

|

|

c941e487fb | ||

|

|

8386e985f2 | ||

|

|

e4c944488f | ||

|

|

99a7754c00 | ||

|

|

6cbfab9b2a | ||

|

|

461f756c88 | ||

|

|

50932ba49e | ||

|

|

f9f8bb2f11 | ||

|

|

2ae8f2aa19 | ||

|

|

1a872ca95c | ||

|

|

3e379e9697 | ||

|

|

7746974644 | ||

|

|

d989a8865d | ||

|

|

4aad0fc8f2 | ||

|

|

0e5ac5ed7c | ||

|

|

c267c7eb9a | ||

|

|

7792e29065 | ||

|

|

d35ff17de8 | ||

|

|

3a7d4c24ee | ||

|

|

ff2638ef66 | ||

|

|

bc294a0fe6 | ||

|

|

bf5bccb7d9 | ||

|

|

f00364037e | ||

|

|

e83bf379ba | ||

|

|

ae0549f78b | ||

|

|

74e7e5cdfb | ||

|

|

2bf4032d5b | ||

|

|

ee1763cb85 | ||

|

|

d497be9e95 | ||

|

|

6176a18a12 | ||

|

|

5789f12f3f | ||

|

|

6279873a35 | ||

|

|

79c441acb7 | ||

|

|

7864811016 | ||

|

|

13938f34fd | ||

|

|

9fb6b41e03 | ||

|

|

a8ba6b1328 | ||

|

|

9592f7fe46 | ||

|

|

119d582379 | ||

|

|

451267310b | ||

|

|

0fee3f280b | ||

|

|

2461fcd531 | ||

|

|

866b6e0a5a | ||

|

|

1dccf96506 | ||

|

|

bca27dcfdc | ||

|

|

5407ee01ee | ||

|

|

fc8b52d73d | ||

|

|

609e7ede86 | ||

|

|

289853e661 | ||

|

|

013e1936ec | ||

|

|

893818f64e | ||

|

|

56bdaae2c9 | ||

|

|

775ecb7b11 | ||

|

|

8d74a35e9c | ||

|

|

b753fd9fa8 | ||

|

|

9698f3c9f4 | ||

|

|

31b110cd39 | ||

|

|

b4da00f96f | ||

|

|

0369852035 | ||

|

|

115497b73f | ||

|

|

4f78b133c2 | ||

|

|

d550a67f19 | ||

|

|

8e6941dfbd | ||

|

|

c54567ab45 | ||

|

|

dd592ca676 | ||

|

|

5273722769 | ||

|

|

fb26e3e9b7 | ||

|

|

5e0b0167fc | ||

|

|

73fdc5ded7 | ||

|

|

5fe7b3bf16 | ||

|

|

4ecf492cd4 | ||

|

|

c42a50229f | ||

|

|

6f55a66328 | ||

|

|

9d551cc69b | ||

|

|

93b8dbb9ab | ||

|

|

8ad010d331 | ||

|

|

404579c361 | ||

|

|

f8210cf276 | ||

|

|

545e256695 | ||

|

|

e9c463c867 | ||

|

|

798ca12e43 | ||

|

|

3780925a68 | ||

|

|

a240c0b6ed | ||

|

|

de1b38c64b | ||

|

|

15d7b6d99e | ||

|

|

9377f55000 | ||

|

|

d002879b0b | ||

|

|

2c6338a2ef | ||

|

|

fd72d7c486 | ||

|

|

db34f31175 | ||

|

|

653e2bc774 | ||

|

|

31ea5eeeb2 | ||

|

|

4a2c67e045 | ||

|

|

68fb7570f7 | ||

|

|

56fc08fab4 | ||

|

|

b00ba53171 | ||

|

|

4dd52290ea | ||

|

|

492aff5265 | ||

|

|

395cdc3af1 | ||

|

|

e6f3000b3c | ||

|

|

e21c38c103 | ||

|

|

7a7512da30 | ||

|

|

58b5f6610d | ||

|

|

e81053f7dd | ||

|

|

424aab4a83 | ||

|

|

77e6db3381 | ||

|

|

f6e3188ab8 | ||

|

|

1ca0594060 | ||

|

|

ac59b4540b | ||

|

|

d0bd4b1329 | ||

|

|

ccbcaf6331 | ||

|

|

1ad1b15a5b | ||

|

|

2349ff61c1 | ||

|

|

13139dd71d | ||

|

|

57ac614865 | ||

|

|

bbb93c647d | ||

|

|

951ba75d93 | ||

|

|

15c9c4a068 | ||

|

|

57fefde732 | ||

|

|

b4a04df6f3 | ||

|

|

1e63b5e8ce | ||

|

|

6ad30915eb | ||

|

|

557ffa536f | ||

|

|

ae05d2f545 | ||

|

|

563c643813 | ||

|

|

68c85ac9ef | ||

|

|

3ac00ea4ec | ||

|

|

29b49496f2 | ||

|

|

3c27192d3e | ||

|

|

dca732cde0 | ||

|

|

0346dc05bb | ||

|

|

a03cdeff04 | ||

|

|

062d72805a | ||

|

|

70fed8148d | ||

|

|

12c6df83f5 | ||

|

|

f61a7817e6 | ||

|

|

c482289b14 | ||

|

|

1e59e5fbb6 | ||

|

|

6106a9fe51 | ||

|

|

ec9e26c054 | ||

|

|

108fc647ea | ||

|

|

a9b235048d | ||

|

|

e1c14619d2 | ||

|

|

f644bf20c5 |

9

.github/workflows/pre-commit.yml

vendored

@@ -3,8 +3,6 @@ name: Pre-Commit Checks

|

||||

on:

|

||||

pull_request:

|

||||

types: [labeled, opened, synchronize, reopened]

|

||||

paths-ignore:

|

||||

- '**.md'

|

||||

|

||||

concurrency:

|

||||

group: pre-commit-${{ github.workflow }}-${{ github.event.pull_request.number }}

|

||||

@@ -32,12 +30,13 @@ jobs:

|

||||

run: |

|

||||

sudo apt update

|

||||

sudo apt install curl -y

|

||||

curl -fsSL https://deb.nodesource.com/setup_16.x | sudo -E bash -

|

||||

sudo apt install nodejs -y

|

||||

git clone https://github.com/bitnami/readme-generator-for-helm

|

||||

sudo apt install npm -y

|

||||

|

||||

git clone --branch 2.7.0 --depth 1 https://github.com/bitnami/readme-generator-for-helm.git

|

||||

cd ./readme-generator-for-helm

|

||||

npm install

|

||||

npm install -g pkg

|

||||

npm install -g @yao-pkg/pkg

|

||||

pkg . -o /usr/local/bin/readme-generator

|

||||

|

||||

- name: Run pre-commit hooks

|

||||

|

||||

121

.github/workflows/pull-requests-release.yaml

vendored

@@ -3,11 +3,6 @@ name: Releasing PR

|

||||

on:

|

||||

pull_request:

|

||||

types: [labeled, opened, synchronize, reopened, closed]

|

||||

workflow_dispatch:

|

||||

inputs:

|

||||

sha:

|

||||

description: "Commit SHA to test"

|

||||

required: true

|

||||

|

||||

concurrency:

|

||||

group: pull-requests-release-${{ github.workflow }}-${{ github.event.pull_request.number }}

|

||||

@@ -21,19 +16,14 @@ jobs:

|

||||

contents: read

|

||||

packages: write

|

||||

|

||||

# Run only when the PR carries the "release" label and not closed or via workflow_dispatch

|

||||

if: |

|

||||

(github.event_name == 'pull_request' &&

|

||||

contains(github.event.pull_request.labels.*.name, 'release') &&

|

||||

github.event.action != 'closed')

|

||||

|| github.event_name == 'workflow_dispatch'

|

||||

contains(github.event.pull_request.labels.*.name, 'release') &&

|

||||

github.event.action != 'closed'

|

||||

|

||||

steps:

|

||||

- name: Checkout code

|

||||

uses: actions/checkout@v4

|

||||

with:

|

||||

# for workflow_dispatch take a specific SHA, otherwise — head.sha PR

|

||||

ref: ${{ github.event_name == 'pull_request' && github.event.pull_request.head.sha || inputs.sha }}

|

||||

fetch-depth: 0

|

||||

fetch-tags: true

|

||||

|

||||

@@ -44,6 +34,64 @@ jobs:

|

||||

password: ${{ secrets.GITHUB_TOKEN }}

|

||||

registry: ghcr.io

|

||||

|

||||

- name: Extract tag from PR branch

|

||||

id: get_tag

|

||||

uses: actions/github-script@v7

|

||||

with:

|

||||

script: |

|

||||

const branch = context.payload.pull_request.head.ref;

|

||||

const m = branch.match(/^release-(\d+\.\d+\.\d+(?:[-\w\.]+)?)$/);

|

||||

if (!m) {

|

||||

core.setFailed(`❌ Branch '${branch}' does not match 'release-X.Y.Z[-suffix]'`);

|

||||

return;

|

||||

}

|

||||

const tag = `v${m[1]}`;

|

||||

core.setOutput('tag', tag);

|

||||

|

||||

- name: Find draft release and get asset IDs

|

||||

id: fetch_assets

|

||||

uses: actions/github-script@v7

|

||||

with:

|

||||

github-token: ${{ secrets.GH_PAT }}

|

||||

script: |

|

||||

const tag = '${{ steps.get_tag.outputs.tag }}';

|

||||

const releases = await github.rest.repos.listReleases({

|

||||

owner: context.repo.owner,

|

||||

repo: context.repo.repo,

|

||||

per_page: 100

|

||||

});

|

||||

const draft = releases.data.find(r => r.tag_name === tag && r.draft);

|

||||

if (!draft) {

|

||||

core.setFailed(`Draft release '${tag}' not found`);

|

||||

return;

|

||||

}

|

||||

const findAssetId = (name) =>

|

||||

draft.assets.find(a => a.name === name)?.id;

|

||||

const installerId = findAssetId("cozystack-installer.yaml");

|

||||

const diskId = findAssetId("nocloud-amd64.raw.xz");

|

||||

if (!installerId || !diskId) {

|

||||

core.setFailed("Missing required assets");

|

||||

return;

|

||||

}

|

||||

core.setOutput("installer_id", installerId);

|

||||

core.setOutput("disk_id", diskId);

|

||||

|

||||

- name: Download assets from GitHub API

|

||||

run: |

|

||||

mkdir -p _out/assets

|

||||

curl -sSL \

|

||||

-H "Authorization: token ${GH_PAT}" \

|

||||

-H "Accept: application/octet-stream" \

|

||||

-o _out/assets/cozystack-installer.yaml \

|

||||

"https://api.github.com/repos/${GITHUB_REPOSITORY}/releases/assets/${{ steps.fetch_assets.outputs.installer_id }}"

|

||||

curl -sSL \

|

||||

-H "Authorization: token ${GH_PAT}" \

|

||||

-H "Accept: application/octet-stream" \

|

||||

-o _out/assets/nocloud-amd64.raw.xz \

|

||||

"https://api.github.com/repos/${GITHUB_REPOSITORY}/releases/assets/${{ steps.fetch_assets.outputs.disk_id }}"

|

||||

env:

|

||||

GH_PAT: ${{ secrets.GH_PAT }}

|

||||

|

||||

- name: Run tests

|

||||

run: make test

|

||||

|

||||

@@ -89,6 +137,7 @@ jobs:

|

||||

- name: Ensure maintenance branch release-X.Y

|

||||

uses: actions/github-script@v7

|

||||

with:

|

||||

github-token: ${{ secrets.GH_PAT }}

|

||||

script: |

|

||||

const tag = '${{ steps.get_tag.outputs.tag }}'; // e.g. v0.1.3 or v0.1.3-rc3

|

||||

const match = tag.match(/^v(\d+)\.(\d+)\.\d+(?:[-\w\.]+)?$/);

|

||||

@@ -98,21 +147,45 @@ jobs:

|

||||

}

|

||||

const line = `${match[1]}.${match[2]}`;

|

||||

const branch = `release-${line}`;

|

||||

|

||||

// Get main branch commit for the tag

|

||||

const ref = await github.rest.git.getRef({

|

||||

owner: context.repo.owner,

|

||||

repo: context.repo.repo,

|

||||

ref: `tags/${tag}`

|

||||

});

|

||||

|

||||

const commitSha = ref.data.object.sha;

|

||||

|

||||

try {

|

||||

await github.rest.repos.getBranch({

|

||||

owner: context.repo.owner,

|

||||

repo: context.repo.repo,

|

||||

repo: context.repo.repo,

|

||||

branch

|

||||

});

|

||||

console.log(`Branch '${branch}' already exists`);

|

||||

} catch (_) {

|

||||

await github.rest.git.createRef({

|

||||

|

||||

await github.rest.git.updateRef({

|

||||

owner: context.repo.owner,

|

||||

repo: context.repo.repo,

|

||||

ref: `refs/heads/${branch}`,

|

||||

sha: context.sha

|

||||

repo: context.repo.repo,

|

||||

ref: `heads/${branch}`,

|

||||

sha: commitSha,

|

||||

force: true

|

||||

});

|

||||

console.log(`✅ Branch '${branch}' created at ${context.sha}`);

|

||||

console.log(`🔁 Force-updated '${branch}' to ${commitSha}`);

|

||||

} catch (err) {

|

||||

if (err.status === 404) {

|

||||

await github.rest.git.createRef({

|

||||

owner: context.repo.owner,

|

||||

repo: context.repo.repo,

|

||||

ref: `refs/heads/${branch}`,

|

||||

sha: commitSha

|

||||

});

|

||||

console.log(`✅ Created branch '${branch}' at ${commitSha}`);

|

||||

} else {

|

||||

console.error('Unexpected error --', err);

|

||||

core.setFailed(`Unexpected error creating/updating branch: ${err.message}`);

|

||||

throw err;

|

||||

}

|

||||

}

|

||||

|

||||

# Get the latest published release

|

||||

@@ -145,13 +218,13 @@ jobs:

|

||||

uses: actions/github-script@v7

|

||||

with:

|

||||

script: |

|

||||

const tag = '${{ steps.get_tag.outputs.tag }}'; // v0.31.5-rc1

|

||||

const m = tag.match(/^v(\d+\.\d+\.\d+)(-rc\d+)?$/);

|

||||

const tag = '${{ steps.get_tag.outputs.tag }}'; // v0.31.5-rc.1

|

||||

const m = tag.match(/^v(\d+\.\d+\.\d+)(-(?:alpha|beta|rc)\.\d+)?$/);

|

||||

if (!m) {

|

||||

core.setFailed(`❌ tag '${tag}' must match 'vX.Y.Z' or 'vX.Y.Z-rcN'`);

|

||||

core.setFailed(`❌ tag '${tag}' must match 'vX.Y.Z' or 'vX.Y.Z-(alpha|beta|rc).N'`);

|

||||

return;

|

||||

}

|

||||

const version = m[1] + (m[2] ?? ''); // 0.31.5‑rc1

|

||||

const version = m[1] + (m[2] ?? ''); // 0.31.5-rc.1

|

||||

const isRc = Boolean(m[2]);

|

||||

core.setOutput('is_rc', isRc);

|

||||

const outdated = '${{ steps.semver.outputs.comparison-result }}' === '<';

|

||||

|

||||
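The branch and tag handling in the workflow above can be tried out in isolation. The sketch below copies the two regular expressions from the "Extract tag from PR branch" and "Ensure maintenance branch release-X.Y" steps; the function names `tagFromBranch` and `maintenanceBranch` are hypothetical helpers introduced only for illustration and do not exist in the workflow.

```javascript
// Regexes copied from the workflow steps shown in the diff above.
const BRANCH_RE = /^release-(\d+\.\d+\.\d+(?:[-\w\.]+)?)$/;
const LINE_RE = /^v(\d+)\.(\d+)\.\d+(?:[-\w\.]+)?$/;

// Hypothetical helper: derive the release tag from a PR head branch name.
// Returns null when the branch is not of the form release-X.Y.Z[-suffix].
function tagFromBranch(branch) {
  const m = branch.match(BRANCH_RE);
  return m ? `v${m[1]}` : null;
}

// Hypothetical helper: derive the maintenance branch (release-X.Y) for a tag.
// Returns null when the tag is not of the form vX.Y.Z[-suffix].
function maintenanceBranch(tag) {
  const m = tag.match(LINE_RE);
  return m ? `release-${m[1]}.${m[2]}` : null;
}

console.log(tagFromBranch('release-0.31.5-rc.1'));
console.log(maintenanceBranch('v0.31.5-rc.1'));
```

Keeping the two derivations as pure functions makes it easy to check edge cases, such as a two-part branch name like `release-0.31`, without running the workflow.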

45

.github/workflows/pull-requests.yaml

vendored

@@ -9,8 +9,8 @@ concurrency:

|

||||

cancel-in-progress: true

|

||||

|

||||

jobs:

|

||||

e2e:

|

||||

name: Build and Test

|

||||

build:

|

||||

name: Build

|

||||

runs-on: [self-hosted]

|

||||

permissions:

|

||||

contents: read

|

||||

@@ -33,9 +33,50 @@ jobs:

|

||||

username: ${{ github.repository_owner }}

|

||||

password: ${{ secrets.GITHUB_TOKEN }}

|

||||

registry: ghcr.io

|

||||

env:

|

||||

DOCKER_CONFIG: ${{ runner.temp }}/.docker

|

||||

|

||||

- name: Build

|

||||

run: make build

|

||||

env:

|

||||

DOCKER_CONFIG: ${{ runner.temp }}/.docker

|

||||

|

||||

- name: Build Talos image

|

||||

run: make -C packages/core/installer talos-nocloud

|

||||

|

||||

- name: Upload installer

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

name: cozystack-installer

|

||||

path: _out/assets/cozystack-installer.yaml

|

||||

|

||||

- name: Upload Talos image

|

||||

uses: actions/upload-artifact@v4

|

||||

with:

|

||||

name: talos-image

|

||||

path: _out/assets/nocloud-amd64.raw.xz

|

||||

|

||||

test:

|

||||

name: Test

|

||||

runs-on: [self-hosted]

|

||||

needs: build

|

||||

|

||||

# Never run when the PR carries the "release" label.

|

||||

if: |

|

||||

!contains(github.event.pull_request.labels.*.name, 'release')

|

||||

|

||||

steps:

|

||||

- name: Download installer

|

||||

uses: actions/download-artifact@v4

|

||||

with:

|

||||

name: cozystack-installer

|

||||

path: _out/assets/

|

||||

|

||||

- name: Download Talos image

|

||||

uses: actions/download-artifact@v4

|

||||

with:

|

||||

name: talos-image

|

||||

path: _out/assets/

|

||||

|

||||

- name: Test

|

||||

run: make test

|

||||

|

||||

47

.github/workflows/tags.yaml

vendored

@@ -3,9 +3,10 @@ name: Versioned Tag

|

||||

on:

|

||||

push:

|

||||

tags:

|

||||

- 'v*.*.*' # vX.Y.Z

|

||||

- 'v*.*.*-rc.*' # vX.Y.Z-rc.N

|

||||

|

||||

- 'v*.*.*' # vX.Y.Z

|

||||

- 'v*.*.*-rc.*' # vX.Y.Z-rc.N

|

||||

- 'v*.*.*-beta.*' # vX.Y.Z-beta.N

|

||||

- 'v*.*.*-alpha.*' # vX.Y.Z-alpha.N

|

||||

|

||||

concurrency:

|

||||

group: tags-${{ github.workflow }}-${{ github.ref }}

|

||||

@@ -42,7 +43,7 @@ jobs:

|

||||

if: steps.check_release.outputs.skip == 'true'

|

||||

run: echo "Release already exists, skipping workflow."

|

||||

|

||||

# Parse tag meta‑data (rc?, maintenance line, etc.)

|

||||

# Parse tag meta-data (rc?, maintenance line, etc.)

|

||||

- name: Parse tag

|

||||

if: steps.check_release.outputs.skip == 'false'

|

||||

id: tag

|

||||

@@ -50,12 +51,12 @@ jobs:

|

||||

with:

|

||||

script: |

|

||||

const ref = context.ref.replace('refs/tags/', ''); // e.g. v0.31.5-rc.1

|

||||

const m = ref.match(/^v(\d+\.\d+\.\d+)(-rc\.\d+)?$/); // ['0.31.5', '-rc.1']

|

||||

const m = ref.match(/^v(\d+\.\d+\.\d+)(-(?:alpha|beta|rc)\.\d+)?$/); // ['0.31.5', '-rc.1' | '-beta.1' | …]

|

||||

if (!m) {

|

||||

core.setFailed(`❌ tag '${ref}' must match 'vX.Y.Z' or 'vX.Y.Z-rc.N'`);

|

||||

core.setFailed(`❌ tag '${ref}' must match 'vX.Y.Z' or 'vX.Y.Z-(alpha|beta|rc).N'`);

|

||||

return;

|

||||

}

|

||||

const version = m[1] + (m[2] ?? ''); // 0.31.5‑rc.1

|

||||

const version = m[1] + (m[2] ?? ''); // 0.31.5-rc.1

|

||||

const isRc = Boolean(m[2]);

|

||||

const [maj, min] = m[1].split('.');

|

||||

core.setOutput('tag', ref); // v0.31.5-rc.1

|

||||

@@ -63,7 +64,7 @@ jobs:

|

||||

core.setOutput('is_rc', isRc); // true

|

||||

core.setOutput('line', `${maj}.${min}`); // 0.31

|

||||

|

||||

# Detect base branch (main or release‑X.Y) the tag was pushed from

|

||||

# Detect base branch (main or release-X.Y) the tag was pushed from

|

||||

- name: Get base branch

|

||||

if: steps.check_release.outputs.skip == 'false'

|

||||

id: get_base

|

||||

@@ -98,11 +99,15 @@ jobs:

|

||||

username: ${{ github.repository_owner }}

|

||||

password: ${{ secrets.GITHUB_TOKEN }}

|

||||

registry: ghcr.io

|

||||

env:

|

||||

DOCKER_CONFIG: ${{ runner.temp }}/.docker

|

||||

|

||||

# Build project artifacts

|

||||

- name: Build

|

||||

if: steps.check_release.outputs.skip == 'false'

|

||||

run: make build

|

||||

env:

|

||||

DOCKER_CONFIG: ${{ runner.temp }}/.docker

|

||||

|

||||

# Commit built artifacts

|

||||

- name: Commit release artifacts

|

||||

@@ -168,7 +173,7 @@ jobs:

|

||||

});

|

||||

console.log(`Draft release created for ${tag}`);

|

||||

} else {

|

||||

console.log(`Re‑using existing release ${tag}`);

|

||||

console.log(`Re-using existing release ${tag}`);

|

||||

}

|

||||

core.setOutput('upload_url', rel.upload_url);

|

||||

|

||||

@@ -181,7 +186,7 @@ jobs:

|

||||

env:

|

||||

GH_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

|

||||

# Create release‑X.Y.Z branch and push (force‑update)

|

||||

# Create release-X.Y.Z branch and push (force-update)

|

||||

- name: Create release branch

|

||||

if: steps.check_release.outputs.skip == 'false'

|

||||

run: |

|

||||

@@ -225,25 +230,3 @@ jobs:

|

||||

} else {

|

||||

console.log(`PR already exists from ${head} to ${base}`);

|

||||

}

|

||||

|

||||

# Run tests

|

||||

- name: Trigger release-verify tests

|

||||

if: steps.check_release.outputs.skip == 'false'

|

||||

uses: actions/github-script@v7

|

||||

env:

|

||||

GH_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

DISPATCH_REF: ${{ steps.get_base.outputs.branch }} # main or release-X.Y

|

||||

DISPATCH_SHA: ${{ github.sha }} # commit for tests

|

||||

with:

|

||||

github-token: ${{ env.GH_TOKEN }}

|

||||

script: |

|

||||

await github.rest.actions.createWorkflowDispatch({

|

||||

owner: context.repo.owner,

|

||||

repo: context.repo.repo,

|

||||

workflow_id: 'pull-requests-release.yaml',

|

||||

ref: process.env.DISPATCH_REF,

|

||||

inputs: {

|

||||

sha: process.env.DISPATCH_SHA

|

||||

}

|

||||

});

|

||||

console.log(`🔔 verify-job triggered on ${process.env.DISPATCH_SHA}`);

|

||||

|

||||
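The updated "Parse tag" step in this workflow accepts `alpha` and `beta` pre-releases in addition to `rc`. A minimal standalone sketch of that parsing is shown below, using the same regular expression as the diff; `parseTag` is a hypothetical wrapper name, and the returned object mirrors the step outputs (`tag`, `version`, `is_rc`, `line`) rather than calling `core.setOutput`.

```javascript
// Pattern copied from the new "Parse tag" step: vX.Y.Z with an optional
// -(alpha|beta|rc).N suffix.
const TAG_RE = /^v(\d+\.\d+\.\d+)(-(?:alpha|beta|rc)\.\d+)?$/;

// Hypothetical wrapper around the workflow logic; returns null on a mismatch
// where the workflow would call core.setFailed().
function parseTag(ref) {
  const m = ref.match(TAG_RE);
  if (!m) return null;
  const version = m[1] + (m[2] ?? '');
  const [maj, min] = m[1].split('.');
  return {
    tag: ref,
    version,
    // As in the workflow, this flag is true for any pre-release suffix,
    // including alpha and beta, not only rc.
    isRc: Boolean(m[2]),
    line: `${maj}.${min}`,
  };
}

console.log(parseTag('v0.31.5-rc.1'));
console.log(parseTag('v0.31.0-rc1')); // old style without the dot
```

Note that a tag in the older `-rcN` style (no dot before the number) no longer matches this pattern.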

3

.gitignore

vendored

@@ -1,6 +1,7 @@

|

||||

_out

|

||||

.git

|

||||

.idea

|

||||

.vscode

|

||||

|

||||

# User-specific stuff

|

||||

.idea/**/workspace.xml

|

||||

@@ -75,4 +76,4 @@ fabric.properties

|

||||

.idea/caches/build_file_checksums.ser

|

||||

|

||||

.DS_Store

|

||||

**/.DS_Store

|

||||

**/.DS_Store

|

||||

|

||||

@@ -18,6 +18,7 @@ repos:

|

||||

(cd "$dir" && make generate)

|

||||

fi

|

||||

done

|

||||

git diff --color=always | cat

|

||||

'

|

||||

language: script

|

||||

files: ^.*$

|

||||

|

||||

3

Makefile

@@ -20,6 +20,7 @@ build: build-deps

|

||||

make -C packages/system/kubeovn image

|

||||

make -C packages/system/kubeovn-webhook image

|

||||

make -C packages/system/dashboard image

|

||||

make -C packages/system/metallb image

|

||||

make -C packages/system/kamaji image

|

||||

make -C packages/system/bucket image

|

||||

make -C packages/core/testing image

|

||||

@@ -42,7 +43,7 @@ manifests:

|

||||

(cd packages/core/installer/; helm template -n cozy-installer installer .) > _out/assets/cozystack-installer.yaml

|

||||

|

||||

assets:

|

||||

make -C packages/core/installer/ assets

|

||||

make -C packages/core/installer assets

|

||||

|

||||

test:

|

||||

make -C packages/core/testing apply

|

||||

|

||||

12

README.md

@@ -12,11 +12,15 @@

|

||||

|

||||

**Cozystack** is a free PaaS platform and framework for building clouds.

|

||||

|

||||

Cozystack is a [CNCF Sandbox Level Project](https://www.cncf.io/sandbox-projects/) that was originally built and sponsored by [Ænix](https://aenix.io/).

|

||||

|

||||

With Cozystack, you can transform a bunch of servers into an intelligent system with a simple REST API for spawning Kubernetes clusters,

|

||||

Database-as-a-Service, virtual machines, load balancers, HTTP caching services, and other services with ease.

|

||||

|

||||

Use Cozystack to build your own cloud or provide a cost-effective development environment.

|

||||

|

||||

|

||||

|

||||

## Use-Cases

|

||||

|

||||

* [**Using Cozystack to build a public cloud**](https://cozystack.io/docs/guides/use-cases/public-cloud/)

|

||||

@@ -28,9 +32,6 @@ You can use Cozystack as a platform to build a private cloud powered by Infrastr

|

||||

* [**Using Cozystack as a Kubernetes distribution**](https://cozystack.io/docs/guides/use-cases/kubernetes-distribution/)

|

||||

You can use Cozystack as a Kubernetes distribution for Bare Metal

|

||||

|

||||

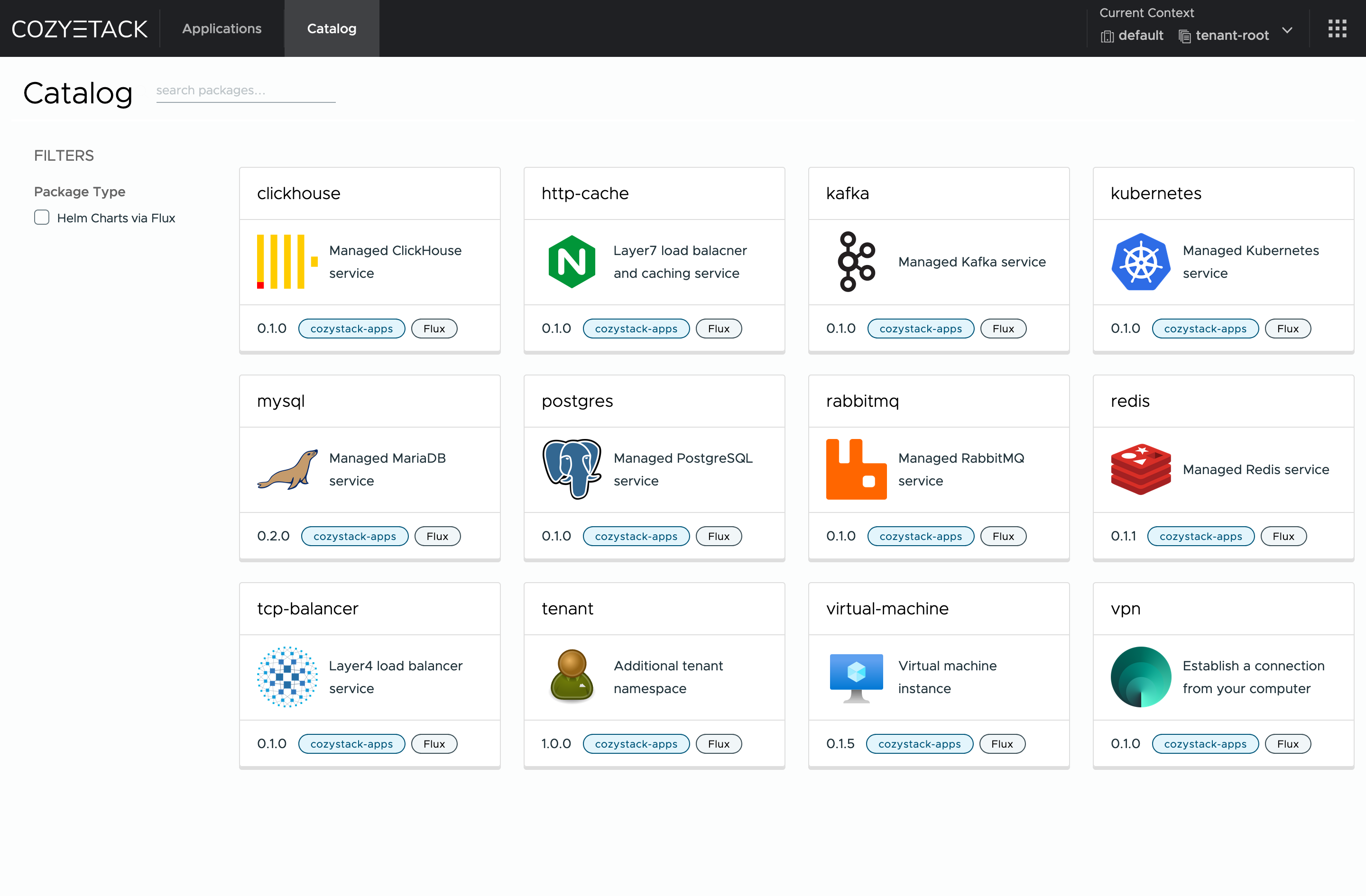

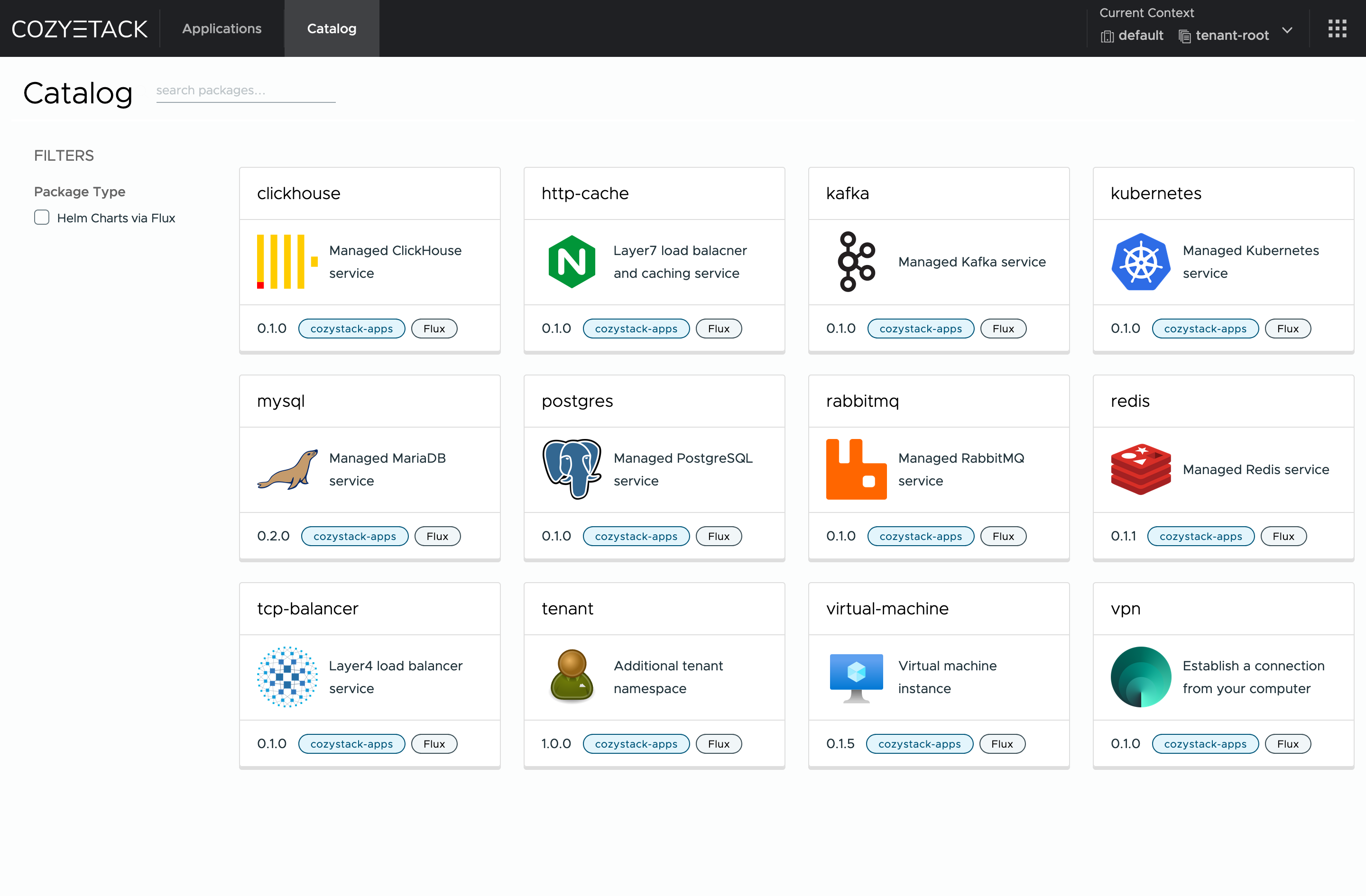

## Screenshot

|

||||

|

||||

|

||||

|

||||

## Documentation

|

||||

|

||||

@@ -59,7 +60,10 @@ Commits are used to generate the changelog, and their author will be referenced

|

||||

|

||||

If you have **Feature Requests** please use the [Discussion's Feature Request section](https://github.com/cozystack/cozystack/discussions/categories/feature-requests).

|

||||

|

||||

You are welcome to join our weekly community meetings (just add this events to your [Google Calendar](https://calendar.google.com/calendar?cid=ZTQzZDIxZTVjOWI0NWE5NWYyOGM1ZDY0OWMyY2IxZTFmNDMzZTJlNjUzYjU2ZGJiZGE3NGNhMzA2ZjBkMGY2OEBncm91cC5jYWxlbmRhci5nb29nbGUuY29t) or [iCal](https://calendar.google.com/calendar/ical/e43d21e5c9b45a95f28c5d649c2cb1e1f433e2e653b56dbbda74ca306f0d0f68%40group.calendar.google.com/public/basic.ics)) or [Telegram group](https://t.me/cozystack).

|

||||

## Community

|

||||

|

||||

You are welcome to join our [Telegram group](https://t.me/cozystack) and come to our weekly community meetings.

|

||||

Add them to your [Google Calendar](https://calendar.google.com/calendar?cid=ZTQzZDIxZTVjOWI0NWE5NWYyOGM1ZDY0OWMyY2IxZTFmNDMzZTJlNjUzYjU2ZGJiZGE3NGNhMzA2ZjBkMGY2OEBncm91cC5jYWxlbmRhci5nb29nbGUuY29t) or [iCal](https://calendar.google.com/calendar/ical/e43d21e5c9b45a95f28c5d649c2cb1e1f433e2e653b56dbbda74ca306f0d0f68%40group.calendar.google.com/public/basic.ics) for convenience.

|

||||

|

||||

## License

|

||||

|

||||

|

||||

@@ -39,6 +39,8 @@ import (

|

||||

cozystackiov1alpha1 "github.com/cozystack/cozystack/api/v1alpha1"

|

||||

"github.com/cozystack/cozystack/internal/controller"

|

||||

"github.com/cozystack/cozystack/internal/telemetry"

|

||||

|

||||

helmv2 "github.com/fluxcd/helm-controller/api/v2"

|

||||

// +kubebuilder:scaffold:imports

|

||||

)

|

||||

|

||||

@@ -51,6 +53,7 @@ func init() {

|

||||

utilruntime.Must(clientgoscheme.AddToScheme(scheme))

|

||||

|

||||

utilruntime.Must(cozystackiov1alpha1.AddToScheme(scheme))

|

||||

utilruntime.Must(helmv2.AddToScheme(scheme))

|

||||

// +kubebuilder:scaffold:scheme

|

||||

}

|

||||

|

||||

@@ -183,7 +186,23 @@ func main() {

|

||||

Client: mgr.GetClient(),

|

||||

Scheme: mgr.GetScheme(),

|

||||

}).SetupWithManager(mgr); err != nil {

|

||||

setupLog.Error(err, "unable to create controller", "controller", "Workload")

|

||||

setupLog.Error(err, "unable to create controller", "controller", "WorkloadReconciler")

|

||||

os.Exit(1)

|

||||

}

|

||||

|

||||

if err = (&controller.TenantHelmReconciler{

|

||||

Client: mgr.GetClient(),

|

||||

Scheme: mgr.GetScheme(),

|

||||

}).SetupWithManager(mgr); err != nil {

|

||||

setupLog.Error(err, "unable to create controller", "controller", "TenantHelmReconciler")

|

||||

os.Exit(1)

|

||||

}

|

||||

|

||||

if err = (&controller.CozystackConfigReconciler{

|

||||

Client: mgr.GetClient(),

|

||||

Scheme: mgr.GetScheme(),

|

||||

}).SetupWithManager(mgr); err != nil {

|

||||

setupLog.Error(err, "unable to create controller", "controller", "CozystackConfigReconciler")

|

||||

os.Exit(1)

|

||||

}

|

||||

|

||||

|

||||

243

docs/changelogs/v0.31.0.md

Normal file

@@ -0,0 +1,243 @@

|

||||

Cozystack v0.31.0 is a significant release that brings new features, key fixes, and updates to underlying components.

|

||||

This version enhances GPU support, improves many components of Cozystack, and introduces a more robust release process to improve stability.

|

||||

Below, we'll go over the highlights in each area for current users, developers, and our community.

|

||||

|

||||

## Major Features and Improvements

|

||||

|

||||

### GPU support for tenant Kubernetes clusters

|

||||

|

||||

Cozystack now integrates NVIDIA GPU Operator support for tenant Kubernetes clusters.

|

||||

This enables platform users to run GPU-powered AI/ML applications in their own clusters.

|

||||

To enable GPU Operator, set `addons.gpuOperator.enabled: true` in the cluster configuration.

|

||||

(@kvaps in https://github.com/cozystack/cozystack/pull/834)

|

||||

|

||||

Check out Andrei Kvapil's CNCF webinar [showcasing the GPU support by running Stable Diffusion in Cozystack](https://www.youtube.com/watch?v=S__h_QaoYEk).

|

||||

|

||||

<!--

|

||||

* [kubernetes] Introduce GPU support for tenant Kubernetes clusters. (@kvaps in https://github.com/cozystack/cozystack/pull/834)

|

||||

-->

|

||||

|

||||

### Cilium Improvements

|

||||

|

||||

Cozystack’s Cilium integration received two significant enhancements.

|

||||

First, Gateway API support in Cilium is now enabled, allowing advanced L4/L7 routing features via Kubernetes Gateway API.

|

||||

We thank Zdenek Janda @zdenekjanda for contributing this feature in https://github.com/cozystack/cozystack/pull/924.

|

||||

|

||||

Second, Cozystack now permits custom user-provided parameters in the tenant cluster’s Cilium configuration.

|

||||

(@lllamnyp in https://github.com/cozystack/cozystack/pull/917)

|

||||

|

||||

<!--

|

||||

* [cilium] Enable Cilium Gateway API. (@zdenekjanda in https://github.com/cozystack/cozystack/pull/924)

|

||||

* [cilium] Enable user-added parameters in a tenant cluster Cilium. (@lllamnyp in https://github.com/cozystack/cozystack/pull/917)

|

||||

-->

|

||||

|

||||

### Cross-Architecture Builds (ARM Support Beta)

|

||||

|

||||

Cozystack's build system was refactored to support multi-architecture binaries and container images.

|

||||

This paves the road to running Cozystack on ARM64 servers.

|

||||

Changes include Makefile improvements (https://github.com/cozystack/cozystack/pull/907)

|

||||

and multi-arch Docker image builds (https://github.com/cozystack/cozystack/pull/932 and https://github.com/cozystack/cozystack/pull/970).

|

||||

|

||||

We thank Nikita Bykov @nbykov0 for his ongoing work on ARM support!

|

||||

|

||||

<!--

|

||||

* Introduce support for cross-architecture builds and Cozystack on ARM:

|

||||

* [build] Refactor Makefiles introducing build variables. (@nbykov0 in https://github.com/cozystack/cozystack/pull/907)

|

||||

* [build] Add support for multi-architecture and cross-platform image builds. (@nbykov0 in https://github.com/cozystack/cozystack/pull/932 and https://github.com/cozystack/cozystack/pull/970)

|

||||

-->

|

||||

|

||||

### VerticalPodAutoscaler (VPA) Expansion

|

||||

|

||||

The VerticalPodAutoscaler is now enabled for more Cozystack components to automate resource tuning.

|

||||

Specifically, VPA was added for tenant Kubernetes control planes (@klinch0 in https://github.com/cozystack/cozystack/pull/806),

|

||||

the Cozystack Dashboard (https://github.com/cozystack/cozystack/pull/828),

|

||||

and the Cozystack etcd-operator (https://github.com/cozystack/cozystack/pull/850).

|

||||

All Cozystack components that have VPA enabled can automatically adjust their CPU and memory requests based on usage, improving platform and application stability.

|

||||

|

||||

<!--

|

||||

* Add VerticalPodAutoscaler to a few more components:

|

||||

* [kubernetes] Kubernetes clusters in user tenants. (@klinch0 in https://github.com/cozystack/cozystack/pull/806)

|

||||

* [platform] Cozystack dashboard. (@klinch0 in https://github.com/cozystack/cozystack/pull/828)

|

||||

* [platform] Cozystack etcd-operator (@klinch0 in https://github.com/cozystack/cozystack/pull/850)

|

||||

-->

|

||||

|

||||

### Tenant HelmRelease Reconcile Controller

|

||||

|

||||

A new controller was introduced to monitor and synchronize HelmRelease resources across tenants.

|

||||

This controller propagates configuration changes to tenant workloads and ensures that any HelmRelease defined in a tenant

|

||||

stays in sync with platform updates.

|

||||

It improves the reliability of deploying managed applications in Cozystack.

|

||||

(@klinch0 in https://github.com/cozystack/cozystack/pull/870)

|

||||

|

||||

<!--

|

||||

* [platform] Introduce a new controller to synchronize tenant HelmReleases and propagate configuration changes. (@klinch0 in https://github.com/cozystack/cozystack/pull/870)

|

||||

-->

|

||||

|

||||

### Virtual Machine Improvements

|

||||

|

||||

**Configurable KubeVirt CPU Overcommit**: The CPU allocation ratio in KubeVirt (how virtual CPUs are overcommitted relative to physical) is now configurable

|

||||

via the `cpu-allocation-ratio` value in the Cozystack configmap.

|

||||

This means Cozystack administrators can now tune CPU overcommitment for VMs to balance performance vs. density.

|

||||

(@lllamnyp in https://github.com/cozystack/cozystack/pull/905)

|

||||

|

||||

**KubeVirt VM Export**: Cozystack now allows exporting KubeVirt virtual machines.

|

||||

This feature, enabled via KubeVirt's `VirtualMachineExport` capability, lets users snapshot or back up VM images.

|

||||

(@kvaps in https://github.com/cozystack/cozystack/pull/808)

|

||||

|

||||

**Support for various storage classes in Virtual Machines**: The `virtual-machine` application (since version 0.9.2) lets you pick any StorageClass for a VM's

|

||||

system disk instead of relying on a hard-coded PVC.

|

||||

Refer to values `systemDisk.storage` and `systemDisk.storageClass` in the [application's configs](https://cozystack.io/docs/reference/applications/virtual-machine/#common-parameters).

|

||||

(@kvaps in https://github.com/cozystack/cozystack/pull/974)

|

||||

|

||||

<!--

|

||||

* [kubevirt] Enable exporting VMs. (@kvaps in https://github.com/cozystack/cozystack/pull/808)

|

||||

* [kubevirt] Make KubeVirt's CPU allocation ratio configurable. (@lllamnyp in https://github.com/cozystack/cozystack/pull/905)

|

||||

* [virtual-machine] Add support for various storages. (@kvaps in https://github.com/cozystack/cozystack/pull/974)

|

||||

-->

|

||||

|

||||

### Other Features and Improvements

|

||||

|

||||

* [platform] Introduce options `expose-services`, `expose-ingress`, and `expose-external-ips` to the ingress service. (@kvaps in https://github.com/cozystack/cozystack/pull/929)

|

||||

* [cozystack-controller] Record the IP address pool and storage class in Workload objects. (@lllamnyp in https://github.com/cozystack/cozystack/pull/831)

|

||||

* [apps] Remove user-facing config of limits and requests. (@lllamnyp in https://github.com/cozystack/cozystack/pull/935)

|

||||

|

||||

## New Release Lifecycle

|

||||

|

||||

The Cozystack release lifecycle is changing to give customers running Cozystack in mission-critical environments more stable and predictable releases.

|

||||

|

||||

* **Gradual Release with Alpha, Beta, and Release Candidates**: Cozystack will now publish pre-release versions (alpha, beta, release candidates) before a stable release.

|

||||

Starting with v0.31.0, for which the team published three release candidates before the stable release, every feature release goes through this pre-release stage.

|

||||

This allows more testing and feedback before marking a release as stable.

|

||||

|

||||

* **Prolonged Release Support with Patch Versions**: After the initial `vX.Y.0` release, a long-lived branch `release-X.Y` will be created to backport fixes.

|

||||

For example, with 0.31.0’s release, a `release-0.31` branch will track patch fixes (`0.31.x`).

|

||||

This strategy lets Cozystack users receive timely patch releases and updates with minimal risks.
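
The mapping from a version tag to its release branch can be sketched in plain shell. This is only an illustration of the naming convention described above, not the project's actual CI code; the function name `release_branch` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: derive the long-lived release branch name
# (release-X.Y) from a version tag such as v0.31.0 or v0.31.2.
release_branch() {
  tag=${1#v}          # strip the leading "v"   -> 0.31.0
  major=${tag%%.*}    # text before first dot   -> 0
  rest=${tag#*.}      # text after first dot    -> 31.0
  minor=${rest%%.*}   # text before next dot    -> 31
  printf 'release-%s.%s\n' "$major" "$minor"
}

release_branch v0.31.0
release_branch v0.31.2
```

Both calls print `release-0.31`, since every `0.31.x` patch release is cut from the same branch.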

|

||||

|

||||

To implement these new changes, we have rebuilt our CI/CD workflows and introduced automation, enabling automatic backports.

|

||||

You can read more about how it's implemented in the Development section below.

|

||||

|

||||

For more information, read the [Cozystack Release Workflow](https://github.com/cozystack/cozystack/blob/main/docs/release.md) documentation.

|

||||

|

||||

## Fixes

|

||||

|

||||

* [virtual-machine] Add GPU names to the virtual machine specifications. (@kvaps in https://github.com/cozystack/cozystack/pull/862)

|

||||

* [virtual-machine] Count Workload resources for pods by requests, not limits, and make other improvements to VM resource tracking. (@lllamnyp in https://github.com/cozystack/cozystack/pull/904)

|

||||

* [virtual-machine] Set PortList method by default. (@kvaps in https://github.com/cozystack/cozystack/pull/996)

|

||||

* [virtual-machine] Specify ports even for wholeIP mode. (@kvaps in https://github.com/cozystack/cozystack/pull/1000)

|

||||

* [platform] Fix installing HelmReleases on initial setup. (@kvaps in https://github.com/cozystack/cozystack/pull/833)

|

||||

* [platform] Migration scripts now update the Kubernetes ConfigMap with the current stack version, improving version tracking. (@klinch0 in https://github.com/cozystack/cozystack/pull/840)

|

||||

* [platform] Reduce requested CPU and RAM for the `kamaji` provider. (@klinch0 in https://github.com/cozystack/cozystack/pull/825)

|

||||

* [platform] Improve the reconciliation loop for the Cozystack system HelmReleases logic. (@klinch0 in https://github.com/cozystack/cozystack/pull/809 and https://github.com/cozystack/cozystack/pull/810, @kvaps in https://github.com/cozystack/cozystack/pull/811)

|

||||

* [platform] Remove extra dependencies for the Piraeus operator. (@klinch0 in https://github.com/cozystack/cozystack/pull/856)

|

||||

* [platform] Refactor dashboard values. (@kvaps in https://github.com/cozystack/cozystack/pull/928, patched by @lllamnyp in https://github.com/cozystack/cozystack/pull/952)

|

||||

* [platform] Make FluxCD artifact disabled by default. (@klinch0 in https://github.com/cozystack/cozystack/pull/964)

|

||||

* [kubernetes] Update garbage collection of HelmReleases in tenant Kubernetes clusters. (@kvaps in https://github.com/cozystack/cozystack/pull/835)

|

||||

* [kubernetes] Fix merging `valuesOverride` for tenant clusters. (@kvaps in https://github.com/cozystack/cozystack/pull/879)

|

||||

* [kubernetes] Fix `ubuntu-container-disk` tag. (@kvaps in https://github.com/cozystack/cozystack/pull/887)

|

||||

* [kubernetes] Refactor Helm manifests for tenant Kubernetes clusters. (@kvaps in https://github.com/cozystack/cozystack/pull/866)

|

||||

* [kubernetes] Fix the dependency of Ingress-NGINX on Cert-Manager. (@kvaps in https://github.com/cozystack/cozystack/pull/976)

|

||||

* [kubernetes, apps] Enable `topologySpreadConstraints` for tenant Kubernetes clusters and fix it for managed PostgreSQL. (@klinch0 in https://github.com/cozystack/cozystack/pull/995)

|

||||

* [tenant] Fix an issue with accessing external IPs of a cluster from the cluster itself. (@kvaps in https://github.com/cozystack/cozystack/pull/854)

|

||||

* [cluster-api] Remove the no longer necessary workaround for Kamaji. (@kvaps in https://github.com/cozystack/cozystack/pull/867, patched in https://github.com/cozystack/cozystack/pull/956)

|

||||

* [monitoring] Remove legacy label "POD" from the exclude filter in metrics. (@xy2 in https://github.com/cozystack/cozystack/pull/826)

|

||||

* [monitoring] Refactor management etcd monitoring config. Introduce a migration script for updating monitoring resources (`kube-rbac-proxy` daemonset). (@lllamnyp in https://github.com/cozystack/cozystack/pull/799 and https://github.com/cozystack/cozystack/pull/830)

|

||||

* [monitoring] Fix VerticalPodAutoscaler resource allocation for VMagent. (@klinch0 in https://github.com/cozystack/cozystack/pull/820)

|

||||

* [postgres] Remove duplicated `template` entry from backup manifest. (@etoshutka in https://github.com/cozystack/cozystack/pull/872)

|

||||

* [kube-ovn] Fix versions mapping in Makefile. (@kvaps in https://github.com/cozystack/cozystack/pull/883)

|

||||

* [dx] Automatically detect version for migrations in the installer.sh. (@kvaps in https://github.com/cozystack/cozystack/pull/837)

|

||||

* [dx] Remove `version_map` and building for library charts. (@kvaps in https://github.com/cozystack/cozystack/pull/998)

|

||||

* [docs] Review the tenant Kubernetes cluster docs. (@NickVolynkin in https://github.com/cozystack/cozystack/pull/969)

|

||||

* [docs] Explain that tenants cannot have dashes in their names. (@NickVolynkin in https://github.com/cozystack/cozystack/pull/980)

|

||||

|

||||

## Dependencies

|

||||

|

||||

* MetalLB images are now built in-tree based on version 0.14.9 with additional critical patches. (@lllamnyp in https://github.com/cozystack/cozystack/pull/945)

|

||||

* Update Kubernetes to v1.32.4. (@kvaps in https://github.com/cozystack/cozystack/pull/949)

|

||||

* Update Talos Linux to v1.10.1. (@kvaps in https://github.com/cozystack/cozystack/pull/931)

|

||||

* Update Cilium to v1.17.3. (@kvaps in https://github.com/cozystack/cozystack/pull/848)

|

||||

* Update LINSTOR to v1.31.0. (@kvaps in https://github.com/cozystack/cozystack/pull/846)

|

||||

* Update Kube-OVN to v1.13.11. (@kvaps in https://github.com/cozystack/cozystack/pull/847, @lllamnyp in https://github.com/cozystack/cozystack/pull/922)

|

||||

* Update tenant Kubernetes to v1.32. (@kvaps in https://github.com/cozystack/cozystack/pull/871)

|

||||

* Update flux-operator to 0.20.0. (@kingdonb in https://github.com/cozystack/cozystack/pull/880 and https://github.com/cozystack/cozystack/pull/934)

|

||||

* Update multiple Cluster API components. (@kvaps in https://github.com/cozystack/cozystack/pull/867 and https://github.com/cozystack/cozystack/pull/947)

|

||||

* Update KamajiControlPlane to edge-25.4.1. (@kvaps in https://github.com/cozystack/cozystack/pull/953, fixed by @nbykov0 in https://github.com/cozystack/cozystack/pull/983)

|

||||

* Update cert-manager to v1.17.2. (@kvaps in https://github.com/cozystack/cozystack/pull/975)

|

||||

|

||||

## Documentation

|

||||

|

||||

* [Installing Talos in Air-Gapped Environment](https://cozystack.io/docs/operations/talos/configuration/air-gapped/):

|

||||

new guide for configuring and bootstrapping Talos Linux clusters in air-gapped environments.

|

||||

(@klinch0 in https://github.com/cozystack/website/pull/203)

|

||||

|

||||

* [Cozystack Bundles](https://cozystack.io/docs/guides/bundles/): new page in the learning section explaining how Cozystack bundles work and how to choose a bundle.

|

||||

(@NickVolynkin in https://github.com/cozystack/website/pull/188, https://github.com/cozystack/website/pull/189, and others;

|

||||

updated by @kvaps in https://github.com/cozystack/website/pull/192 and https://github.com/cozystack/website/pull/193)

|

||||

|

||||

* [Managed Application Reference](https://cozystack.io/docs/reference/applications/): A set of new pages in the docs, mirroring application docs from the Cozystack dashboard.

|

||||

(@NickVolynkin in https://github.com/cozystack/website/pull/198, https://github.com/cozystack/website/pull/202, and https://github.com/cozystack/website/pull/204)

|

||||

|

||||

* **LINSTOR Networking**: Guides on [configuring dedicated network for LINSTOR](https://cozystack.io/docs/operations/storage/dedicated-network/)

|

||||

and [configuring network for distributed storage in multi-datacenter setup](https://cozystack.io/docs/operations/stretched/linstor-dedicated-network/).

|

||||

(@xy2, edited by @NickVolynkin in https://github.com/cozystack/website/pull/171, https://github.com/cozystack/website/pull/182, and https://github.com/cozystack/website/pull/184)

|

||||

|

||||

### Fixes

|

||||

|

||||

* Correct an error in the documented command for editing the configmap. (@lb0o in https://github.com/cozystack/website/pull/207)

|

||||

* Fix the group name in the OIDC docs. (@kingdonb in https://github.com/cozystack/website/pull/179)

|

||||

* Expand the explanation of Docker buildx builders. (@nbykov0 in https://github.com/cozystack/website/pull/187)

|

||||

|

||||

## Development, Testing, and CI/CD

|

||||

|

||||

### Testing

|

||||

|

||||

Improvements:

|

||||

|

||||

* Introduce `cozytest` — a new [BATS-based](https://github.com/bats-core/bats-core) testing framework. (@kvaps in https://github.com/cozystack/cozystack/pull/982)

|

||||

|

||||

Fixes:

|

||||

|

||||

* Fix `device_ownership_from_security_context` CRI. (@dtrdnk in https://github.com/cozystack/cozystack/pull/896)

|

||||

* Increase timeout durations for `capi` and `keycloak` to improve reliability during e2e-tests. (@kvaps in https://github.com/cozystack/cozystack/pull/858)

|

||||

* Return `genisoimage` to the e2e-test Dockerfile. (@gwynbleidd2106 in https://github.com/cozystack/cozystack/pull/962)

|

||||

|

||||

### CI/CD Changes

|

||||

|

||||

Improvements:

|

||||

|

||||

* Use release branches `release-X.Y` for gathering and releasing fixes after initial `vX.Y.0` release. (@kvaps in https://github.com/cozystack/cozystack/pull/816)

|

||||

* Automatically create release branches after initial `vX.Y.0` release is published. (@kvaps in https://github.com/cozystack/cozystack/pull/886)

|

||||

* Introduce Release Candidate versions. Automate patch backporting by applying patches from pull requests labeled `[backport]` to the current release branch. (@kvaps in https://github.com/cozystack/cozystack/pull/841 and https://github.com/cozystack/cozystack/pull/901, @nickvolynkin in https://github.com/cozystack/cozystack/pull/890)

|

||||

* Support alpha and beta pre-releases. (@kvaps in https://github.com/cozystack/cozystack/pull/978)

|

||||

* Commit changes in release pipelines under `github-actions <github-actions@github.com>`. (@kvaps in https://github.com/cozystack/cozystack/pull/823)

|

||||

* Describe the Cozystack release workflow. (@NickVolynkin in https://github.com/cozystack/cozystack/pull/817 and https://github.com/cozystack/cozystack/pull/897)

|

||||

|

||||

Fixes:

|

||||

|

||||

* Improve the check for `versions_map` running on pull requests. (@kvaps and @klinch0 in https://github.com/cozystack/cozystack/pull/836, https://github.com/cozystack/cozystack/pull/842, and https://github.com/cozystack/cozystack/pull/845)

|

||||

* If the release step was skipped on a tag, skip tests as well. (@kvaps in https://github.com/cozystack/cozystack/pull/822)

|

||||

* Allow CI to cancel the previous job if a new one is scheduled. (@kvaps in https://github.com/cozystack/cozystack/pull/873)

|

||||

* Use the correct version name when uploading build assets to the release page. (@kvaps in https://github.com/cozystack/cozystack/pull/876)

|

||||

* Stop using `ok-to-test` label to trigger CI in pull requests. (@kvaps in https://github.com/cozystack/cozystack/pull/875)

|

||||

* Do not run tests in the release building pipeline. (@kvaps in https://github.com/cozystack/cozystack/pull/882)

|

||||

* Fix release branch creation. (@kvaps in https://github.com/cozystack/cozystack/pull/884)

|

||||

* Reduce noise in the test logs by suppressing the `wget` progress bar. (@lllamnyp in https://github.com/cozystack/cozystack/pull/865)

|

||||

* Revert "automatically trigger tests in releasing PR". (@kvaps in https://github.com/cozystack/cozystack/pull/900)

|

||||

* Force-update release branch on tagged main commits. (@kvaps in https://github.com/cozystack/cozystack/pull/977)

|

||||

* Show detailed errors in the `pull-request-release` workflow. (@lllamnyp in https://github.com/cozystack/cozystack/pull/992)

|

||||

|

||||

## Community and Maintenance

|

||||

|

||||

### Repository Maintenance

|

||||

|

||||

Added @klinch0 to CODEOWNERS. (@kvaps in https://github.com/cozystack/cozystack/pull/838)

|

||||

|

||||

### New Contributors

|

||||

|

||||

* @etoshutka made their first contribution in https://github.com/cozystack/cozystack/pull/872

|

||||

* @dtrdnk made their first contribution in https://github.com/cozystack/cozystack/pull/896

|

||||

* @zdenekjanda made their first contribution in https://github.com/cozystack/cozystack/pull/924

|

||||

* @gwynbleidd2106 made their first contribution in https://github.com/cozystack/cozystack/pull/962

|

||||

|

||||

## Full Changelog

|

||||

|

||||

See https://github.com/cozystack/cozystack/compare/v0.30.0...v0.31.0

|

||||

@@ -1,10 +1,37 @@

|

||||

# Release Workflow

|

||||

|

||||

This section explains how Cozystack builds and releases are made.

|

||||

This document describes Cozystack’s release process.

|

||||

|

||||

## Introduction

|

||||

|

||||

Cozystack uses a staged release process to ensure stability and flexibility during development.

|

||||

|

||||

There are three types of releases:

|

||||

|

||||

- **Release Candidates (RC)** – Preview versions (e.g., `v0.42.0-rc.1`) used for final testing and validation.

|

||||

- **Regular Releases** – Final versions (e.g., `v0.42.0`) that are feature-complete and thoroughly tested.

|

||||

- **Patch Releases** – Bugfix-only updates (e.g., `v0.42.1`) made after a stable release, based on a dedicated release branch.

|

||||

|

||||

Each type plays a distinct role in delivering reliable and tested updates while allowing ongoing development to continue smoothly.
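
To make the three tag shapes concrete, here is a minimal sketch that classifies a tag by its form. It is an illustration only: the function name `release_type` is hypothetical, and the glob patterns are deliberately loose rather than a full version parser.

```shell
#!/bin/sh
# Hypothetical helper: classify a version tag by its shape.
# Patterns are checked in order, so the more specific forms come first.
release_type() {
  case "$1" in
    v[0-9]*.[0-9]*.0-rc.[0-9]*) echo "release-candidate" ;;  # vX.Y.0-rc.N
    v[0-9]*.[0-9]*.0)           echo "regular" ;;            # vX.Y.0
    v[0-9]*.[0-9]*.[0-9]*)      echo "patch" ;;              # vX.Y.Z, Z > 0
    *)                          echo "unknown"; return 1 ;;
  esac
}

release_type v0.42.0-rc.1
release_type v0.42.0
release_type v0.42.1
```

The three calls print `release-candidate`, `regular`, and `patch` respectively, matching the examples in the list above.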

|

||||

|

||||

## Release Candidates

|

||||

|

||||

Release candidates are Cozystack versions that introduce new features and are published before a stable release.

|

||||

Their purpose is to help validate stability before finalizing a new feature release.

|

||||

They allow for final rounds of testing and bug fixes without freezing development.

|

||||

|

||||

Release candidates are given numbers `vX.Y.0-rc.N`, for example, `v0.42.0-rc.1`.

|

||||

They are created directly in the `main` branch.

|

||||

An RC is typically tagged when all major features for the upcoming release have been merged into main and the release enters its testing phase.

|

||||

However, new features and changes can still be added before the regular release `vX.Y.0`.

|

||||

|

||||

Each RC contributes to a cumulative set of release notes that will be finalized when `vX.Y.0` is released.

|

||||

After testing, if no critical issues remain, the regular release (`vX.Y.0`) is tagged from the last RC or a later commit in main.

|

||||

This begins the regular release process, creates a dedicated `release-X.Y` branch, and opens the way for patch releases.

|

||||

|

||||

## Regular Releases

|

||||

|

||||

When making regular releases, we take a commit in `main` and decide to make it a release `x.y.0`.

|

||||

When making a regular release, we tag the latest RC or a subsequent minimal-change commit as `vX.Y.0`.

|

||||

In this explanation, we'll use version `v0.42.0` as an example:

|

||||

|

||||

```mermaid

|

||||

|

||||

13

go.mod


@@ -37,6 +37,7 @@ require (

|

||||

github.com/coreos/go-systemd/v22 v22.5.0 // indirect

|

||||

github.com/davecgh/go-spew v1.1.2-0.20180830191138-d8f796af33cc // indirect

|

||||

github.com/emicklei/go-restful/v3 v3.11.0 // indirect

|

||||

github.com/evanphx/json-patch v4.12.0+incompatible // indirect

|

||||

github.com/evanphx/json-patch/v5 v5.9.0 // indirect

|

||||

github.com/felixge/httpsnoop v1.0.4 // indirect

|

||||

github.com/fluxcd/pkg/apis/kustomize v1.6.1 // indirect

|

||||

@@ -91,14 +92,14 @@ require (

|

||||

go.opentelemetry.io/proto/otlp v1.3.1 // indirect

|

||||

go.uber.org/multierr v1.11.0 // indirect

|

||||

go.uber.org/zap v1.27.0 // indirect

|

||||

golang.org/x/crypto v0.28.0 // indirect

|

||||

golang.org/x/crypto v0.31.0 // indirect

|

||||

golang.org/x/exp v0.0.0-20240719175910-8a7402abbf56 // indirect

|

||||

golang.org/x/net v0.30.0 // indirect

|

||||

golang.org/x/net v0.33.0 // indirect

|

||||

golang.org/x/oauth2 v0.23.0 // indirect

|

||||

golang.org/x/sync v0.8.0 // indirect

|

||||

golang.org/x/sys v0.26.0 // indirect

|

||||

golang.org/x/term v0.25.0 // indirect

|

||||

golang.org/x/text v0.19.0 // indirect

|

||||

golang.org/x/sync v0.10.0 // indirect

|

||||

golang.org/x/sys v0.28.0 // indirect

|

||||

golang.org/x/term v0.27.0 // indirect

|

||||

golang.org/x/text v0.21.0 // indirect

|

||||

golang.org/x/time v0.7.0 // indirect

|

||||

golang.org/x/tools v0.26.0 // indirect

|

||||

gomodules.xyz/jsonpatch/v2 v2.4.0 // indirect

|

||||

|

||||

28

go.sum


@@ -26,8 +26,8 @@ github.com/dustin/go-humanize v1.0.1 h1:GzkhY7T5VNhEkwH0PVJgjz+fX1rhBrR7pRT3mDkp

|

||||

github.com/dustin/go-humanize v1.0.1/go.mod h1:Mu1zIs6XwVuF/gI1OepvI0qD18qycQx+mFykh5fBlto=

|

||||

github.com/emicklei/go-restful/v3 v3.11.0 h1:rAQeMHw1c7zTmncogyy8VvRZwtkmkZ4FxERmMY4rD+g=

|

||||

github.com/emicklei/go-restful/v3 v3.11.0/go.mod h1:6n3XBCmQQb25CM2LCACGz8ukIrRry+4bhvbpWn3mrbc=

|

||||

github.com/evanphx/json-patch v0.5.2 h1:xVCHIVMUu1wtM/VkR9jVZ45N3FhZfYMMYGorLCR8P3k=

|

||||

github.com/evanphx/json-patch v0.5.2/go.mod h1:ZWS5hhDbVDyob71nXKNL0+PWn6ToqBHMikGIFbs31qQ=

|

||||

github.com/evanphx/json-patch v4.12.0+incompatible h1:4onqiflcdA9EOZ4RxV643DvftH5pOlLGNtQ5lPWQu84=

|

||||

github.com/evanphx/json-patch v4.12.0+incompatible/go.mod h1:50XU6AFN0ol/bzJsmQLiYLvXMP4fmwYFNcr97nuDLSk=

|

||||

github.com/evanphx/json-patch/v5 v5.9.0 h1:kcBlZQbplgElYIlo/n1hJbls2z/1awpXxpRi0/FOJfg=

|

||||

github.com/evanphx/json-patch/v5 v5.9.0/go.mod h1:VNkHZ/282BpEyt/tObQO8s5CMPmYYq14uClGH4abBuQ=

|

||||

github.com/felixge/httpsnoop v1.0.4 h1:NFTV2Zj1bL4mc9sqWACXbQFVBBg2W3GPvqp8/ESS2Wg=

|

||||

@@ -212,8 +212,8 @@ go.uber.org/zap v1.27.0/go.mod h1:GB2qFLM7cTU87MWRP2mPIjqfIDnGu+VIO4V/SdhGo2E=

|

||||

golang.org/x/crypto v0.0.0-20190308221718-c2843e01d9a2/go.mod h1:djNgcEr1/C05ACkg1iLfiJU5Ep61QUkGW8qpdssI0+w=

|

||||

golang.org/x/crypto v0.0.0-20191011191535-87dc89f01550/go.mod h1:yigFU9vqHzYiE8UmvKecakEJjdnWj3jj499lnFckfCI=

|

||||

golang.org/x/crypto v0.0.0-20200622213623-75b288015ac9/go.mod h1:LzIPMQfyMNhhGPhUkYOs5KpL4U8rLKemX1yGLhDgUto=

|

||||

golang.org/x/crypto v0.28.0 h1:GBDwsMXVQi34v5CCYUm2jkJvu4cbtru2U4TN2PSyQnw=

|

||||

golang.org/x/crypto v0.28.0/go.mod h1:rmgy+3RHxRZMyY0jjAJShp2zgEdOqj2AO7U0pYmeQ7U=

|

||||

golang.org/x/crypto v0.31.0 h1:ihbySMvVjLAeSH1IbfcRTkD/iNscyz8rGzjF/E5hV6U=

|

||||

golang.org/x/crypto v0.31.0/go.mod h1:kDsLvtWBEx7MV9tJOj9bnXsPbxwJQ6csT/x4KIN4Ssk=

|

||||

golang.org/x/exp v0.0.0-20240719175910-8a7402abbf56 h1:2dVuKD2vS7b0QIHQbpyTISPd0LeHDbnYEryqj5Q1ug8=

|

||||

golang.org/x/exp v0.0.0-20240719175910-8a7402abbf56/go.mod h1:M4RDyNAINzryxdtnbRXRL/OHtkFuWGRjvuhBJpk2IlY=

|

||||

golang.org/x/mod v0.2.0/go.mod h1:s0Qsj1ACt9ePp/hMypM3fl4fZqREWJwdYDEqhRiZZUA=

|

||||

@@ -222,26 +222,26 @@ golang.org/x/net v0.0.0-20190404232315-eb5bcb51f2a3/go.mod h1:t9HGtf8HONx5eT2rtn

|

||||

golang.org/x/net v0.0.0-20190620200207-3b0461eec859/go.mod h1:z5CRVTTTmAJ677TzLLGU+0bjPO0LkuOLi4/5GtJWs/s=

|

||||

golang.org/x/net v0.0.0-20200226121028-0de0cce0169b/go.mod h1:z5CRVTTTmAJ677TzLLGU+0bjPO0LkuOLi4/5GtJWs/s=

|

||||

golang.org/x/net v0.0.0-20201021035429-f5854403a974/go.mod h1:sp8m0HH+o8qH0wwXwYZr8TS3Oi6o0r6Gce1SSxlDquU=

|

||||

golang.org/x/net v0.30.0 h1:AcW1SDZMkb8IpzCdQUaIq2sP4sZ4zw+55h6ynffypl4=

|

||||

golang.org/x/net v0.30.0/go.mod h1:2wGyMJ5iFasEhkwi13ChkO/t1ECNC4X4eBKkVFyYFlU=

|

||||

golang.org/x/net v0.33.0 h1:74SYHlV8BIgHIFC/LrYkOGIwL19eTYXQ5wc6TBuO36I=

|

||||

golang.org/x/net v0.33.0/go.mod h1:HXLR5J+9DxmrqMwG9qjGCxZ+zKXxBru04zlTvWlWuN4=

|

||||

golang.org/x/oauth2 v0.23.0 h1:PbgcYx2W7i4LvjJWEbf0ngHV6qJYr86PkAV3bXdLEbs=

|

||||

golang.org/x/oauth2 v0.23.0/go.mod h1:XYTD2NtWslqkgxebSiOHnXEap4TF09sJSc7H1sXbhtI=

|

||||

golang.org/x/sync v0.0.0-20190423024810-112230192c58/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=

|

||||

golang.org/x/sync v0.0.0-20190911185100-cd5d95a43a6e/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=

|

||||

golang.org/x/sync v0.0.0-20201020160332-67f06af15bc9/go.mod h1:RxMgew5VJxzue5/jJTE5uejpjVlOe/izrB70Jof72aM=

|

||||

golang.org/x/sync v0.8.0 h1:3NFvSEYkUoMifnESzZl15y791HH1qU2xm6eCJU5ZPXQ=

|

||||

golang.org/x/sync v0.8.0/go.mod h1:Czt+wKu1gCyEFDUtn0jG5QVvpJ6rzVqr5aXyt9drQfk=

|

||||

golang.org/x/sync v0.10.0 h1:3NQrjDixjgGwUOCaF8w2+VYHv0Ve/vGYSbdkTa98gmQ=

|

||||

golang.org/x/sync v0.10.0/go.mod h1:Czt+wKu1gCyEFDUtn0jG5QVvpJ6rzVqr5aXyt9drQfk=

|

||||

golang.org/x/sys v0.0.0-20190215142949-d0b11bdaac8a/go.mod h1:STP8DvDyc/dI5b8T5hshtkjS+E42TnysNCUPdjciGhY=

|

||||

golang.org/x/sys v0.0.0-20190412213103-97732733099d/go.mod h1:h1NjWce9XRLGQEsW7wpKNCjG9DtNlClVuFLEZdDNbEs=

|

||||

golang.org/x/sys v0.0.0-20200930185726-fdedc70b468f/go.mod h1:h1NjWce9XRLGQEsW7wpKNCjG9DtNlClVuFLEZdDNbEs=

|

||||

golang.org/x/sys v0.26.0 h1:KHjCJyddX0LoSTb3J+vWpupP9p0oznkqVk/IfjymZbo=

|

||||

golang.org/x/sys v0.26.0/go.mod h1:/VUhepiaJMQUp4+oa/7Zr1D23ma6VTLIYjOOTFZPUcA=

|

||||

golang.org/x/term v0.25.0 h1:WtHI/ltw4NvSUig5KARz9h521QvRC8RmF/cuYqifU24=

|

||||

golang.org/x/term v0.25.0/go.mod h1:RPyXicDX+6vLxogjjRxjgD2TKtmAO6NZBsBRfrOLu7M=

|

||||

golang.org/x/sys v0.28.0 h1:Fksou7UEQUWlKvIdsqzJmUmCX3cZuD2+P3XyyzwMhlA=

|

||||

golang.org/x/sys v0.28.0/go.mod h1:/VUhepiaJMQUp4+oa/7Zr1D23ma6VTLIYjOOTFZPUcA=

|

||||

golang.org/x/term v0.27.0 h1:WP60Sv1nlK1T6SupCHbXzSaN0b9wUmsPoRS9b61A23Q=

|

||||

golang.org/x/term v0.27.0/go.mod h1:iMsnZpn0cago0GOrHO2+Y7u7JPn5AylBrcoWkElMTSM=

|

||||

golang.org/x/text v0.3.0/go.mod h1:NqM8EUOU14njkJ3fqMW+pc6Ldnwhi/IjpwHt7yyuwOQ=

|

||||

golang.org/x/text v0.3.3/go.mod h1:5Zoc/QRtKVWzQhOtBMvqHzDpF6irO9z98xDceosuGiQ=

|

||||

golang.org/x/text v0.19.0 h1:kTxAhCbGbxhK0IwgSKiMO5awPoDQ0RpfiVYBfK860YM=

|

||||

golang.org/x/text v0.19.0/go.mod h1:BuEKDfySbSR4drPmRPG/7iBdf8hvFMuRexcpahXilzY=

|

||||

golang.org/x/text v0.21.0 h1:zyQAAkrwaneQ066sspRyJaG9VNi/YJ1NfzcGB3hZ/qo=

|

||||

golang.org/x/text v0.21.0/go.mod h1:4IBbMaMmOPCJ8SecivzSH54+73PCFmPWxNTLm+vZkEQ=

|

||||

golang.org/x/time v0.7.0 h1:ntUhktv3OPE6TgYxXWv9vKvUSJyIFJlyohwbkEwPrKQ=

|

||||

golang.org/x/time v0.7.0/go.mod h1:3BpzKBy/shNhVucY/MWOyx10tF3SFh9QdLuxbVysPQM=

|

||||

golang.org/x/tools v0.0.0-20180917221912-90fa682c2a6e/go.mod h1:n7NCudcB/nEzxVGmLbDWY5pfWTLqBcC2KZ6jyYvM4mQ=

|

||||

|

||||

117

hack/cozytest.sh

Executable file


@@ -0,0 +1,117 @@

|

||||

#!/bin/sh

|

||||

###############################################################################

|

||||

# cozytest.sh - Bats-compatible test runner with live trace and enhanced #

|

||||

# output, written in pure shell #

|

||||

###############################################################################

|

||||

set -eu

|

||||

|

||||

TEST_FILE=${1:?Usage: ./cozytest.sh <file.bats> [pattern]}

|

||||

PATTERN=${2:-*}

|

||||

LINE='----------------------------------------------------------------'

|

||||

|

||||

cols() { stty size 2>/dev/null | awk '{print $2}' || echo 80; }

|

||||

MAXW=$(( $(cols) - 12 )); [ "$MAXW" -lt 40 ] && MAXW=70

|

||||

BEGIN=$(date +%s)

|

||||

timestamp() { s=$(( $(date +%s) - BEGIN )); printf '[%02d:%02d]' $((s/60)) $((s%60)); }

|

||||

|

||||

###############################################################################

|

||||

# run_one <fn> <title> #

|

||||

###############################################################################

|

||||

run_one() {

|

||||

fn=$1 title=$2

|

||||

tmp=$(mktemp -d) || { echo "Failed to create temp directory" >&2; exit 1; }

|

||||

log="$tmp/log"

|

||||

|

||||

echo "╭ » Run test: $title"

|

||||

START=$(date +%s)

|

||||

skip_next="+ $fn" # skip the first trace line, which contains the function name

|

||||

|

||||

{

|

||||

(

|

||||

PS4='+ ' # prefix for set -x

|

||||

set -eu -x # strict + trace

|

||||

"$fn"

|

||||

)

|

||||

printf '__RC__%s\n' "$?"

|

||||

} 2>&1 | tee "$log" | while IFS= read -r line; do

|

||||

case "$line" in

|

||||

'__RC__'*) : ;;

|

||||

'+ '*) cmd=${line#'+ '}

|

||||

[ "$cmd" = "${skip_next#+ }" ] && continue

|

||||

case "$cmd" in

|

||||

'set -e'|'set -x'|'set -u'|'return 0') continue ;;

|

||||

esac

|

||||

out=$cmd ;;

|

||||

*) out=$line ;;

|

||||

esac

|

||||

now=$(( $(date +%s) - START ))

|

||||

[ ${#out} -gt "$MAXW" ] && out="$(printf '%.*s…' "$MAXW" "$out")"

|

||||

printf '┊[%02d:%02d] %s\n' $((now/60)) $((now%60)) "$out"

|

||||

done

|

||||

|

||||

rc=$(awk '/^__RC__/ {print substr($0,7)}' "$log" | tail -n1)

|

||||

[ -z "$rc" ] && rc=1

|

||||

now=$(( $(date +%s) - START ))

|

||||

|

||||

if [ "$rc" -eq 0 ]; then

|

||||

printf '╰[%02d:%02d] ✅ Test OK: %s\n' $((now/60)) $((now%60)) "$title"

|

||||

else

|

||||

printf '╰[%02d:%02d] ❌ Test failed: %s (exit %s)\n' \

|

||||

$((now/60)) $((now%60)) "$title" "$rc"

|

||||

echo "----- captured output -----------------------------------------"

|

||||

grep -v '^__RC__' "$log"

|

||||

echo "$LINE"

|

||||

exit "$rc"

|

||||

fi

|

||||

|

||||